This has been a long time coming, but new AWS C5a compute instances, powered by AMD EPYC 7002 Series processors codenamed “Rome”, are now generally available in the U.S. East, U.S. West, Europe, and Asia Pacific regions. This is the sixth instance type that AWS has deployed using AMD EPYC processors, and a big step for Rome, as it is now in a general-purpose AWS compute instance.

AWS EC2 C5a Instances

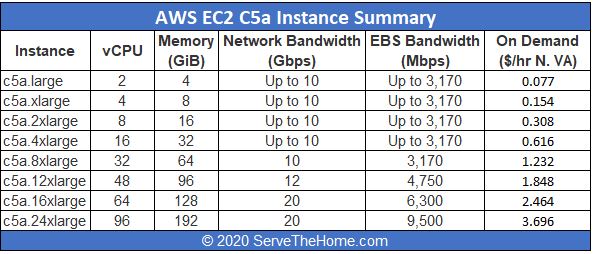

There are eight initial configurations that AWS says are in GA today. Here is the table:

What is very interesting here is that AWS says the new instances will be about 10% less expensive for compute than their Intel counterparts. Because C5a keeps the x86 instruction set, the switching cost is much lower than moving from x86 to Arm for most AWS customers. In many cases, it is simply adding an “a” to the instance type in an API launch call. For an organization that uses a lot of C5 instances and is looking to cut AWS costs, that small change can translate into easy savings.
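To make the “add an a” point concrete, here is a minimal Python sketch. The helper names `to_c5a` and `estimated_monthly_savings` are hypothetical and not part of any AWS SDK, the hourly rate is an input you supply rather than a real AWS price, and the 10% figure is simply the number AWS quoted. The sketch does not check that the requested size actually exists in the C5a family, so verify the size list before relying on it.

```python
def to_c5a(instance_type: str) -> str:
    """Map a plain C5 type name such as "c5.xlarge" to "c5a.xlarge".

    Hypothetical helper: it only rewrites the family prefix and does not
    verify that the resulting size is offered in the C5a family.
    """
    family, _, size = instance_type.partition(".")
    if family != "c5" or not size:
        raise ValueError(f"not a plain C5 instance type: {instance_type!r}")
    return f"c5a.{size}"


def estimated_monthly_savings(
    c5_hourly_usd: float,
    instance_count: int,
    hours: float = 730.0,    # assumption: roughly one month of continuous use
    discount: float = 0.10,  # assumption: the ~10% figure quoted by AWS
) -> float:
    """Rough savings from moving a fleet from C5 to C5a at the given discount."""
    return c5_hourly_usd * discount * instance_count * hours


if __name__ == "__main__":
    # The returned string is what you would pass as the instance type
    # in a launch call.
    print(to_c5a("c5.xlarge"))
    # The hourly rate below is a placeholder, not a real AWS price.
    print(estimated_monthly_savings(c5_hourly_usd=1.0, instance_count=100))
```

Real savings depend on the region, the purchase model (on-demand, reserved, or spot), and whether the workload performs the same on both instance types, so treat the result as a first-pass estimate only.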

The companies also said that Amazon EC2 C5ad instances, which come with local NVMe instance storage, and Amazon EC2 C5an.metal / C5adn.metal bare metal instances are coming soon. That will help build a more complete EPYC portfolio that customers can use.

Final Words

There are a few interesting points here. Xeon-based C5 instances were launched in 2017, and we know that, at list pricing, AMD EPYC Rome CPUs perform very well on a price/performance basis versus Intel Xeon. Seeing only a 10% decrease versus Xeon instances priced for the cloud in 2017 points to a few things. First, CPUs are not the main cost drivers in cloud infrastructure. Second, cloud service provider pricing is much different than what the general market sees. Third, AWS exercises a lot of control over cloud performance per dollar.

Even if the underlying hardware is faster, AWS can charge, partition, and meter resources as it pleases. In contrast, if one buys systems directly, and a system costs roughly the same to make but one company’s chips are faster, one can get much larger gains with newer generations, since one pays for, and directly benefits from, more performance. That is important to keep in mind as AWS introduces chips such as the AWS Graviton2 to the ecosystem. By serving as the consumption platform, it can detach the input pricing from the end-user pricing to a large degree. Having AMD EPYC instances helps keep pressure on Intel to lower input pricing, creating a bigger spread for AWS.

You have listed the on-demand (regular) price as the spot price. Spot prices tend to be lower.

With a PCIe 4.0 platform (or just the number of lanes provided by EPYC as opposed to Intel), I can envision quite a few I/O- and network-optimized use cases here as well, as you get at least 2 PCIe 4.0 lanes per core, with Intel Xeon Platinum 8xxx providing only 2 PCIe 3.0 lanes per core (and fewer cores).

A lot of folks are scratching their heads about AWS’ use of AMD’s CPUs. Why so hesitant?

Why is the pricing only 10% below Intel, when, say, Tencent's differential is more like 60%-80%? Why the big delay in the general-use rollout? Didn't want to kill Graviton? Seems like a good opportunity for other cloud providers to keep taking share from AWS: not only beat AWS to market with AMD's latest server CPUs, which have recently been and will be far better on perf/watt and many other metrics, but do it at bigger scale and with much cheaper prices. And here comes Epyc 3…