ASUS RS700-E11-RS12U Internal Hardware Overview

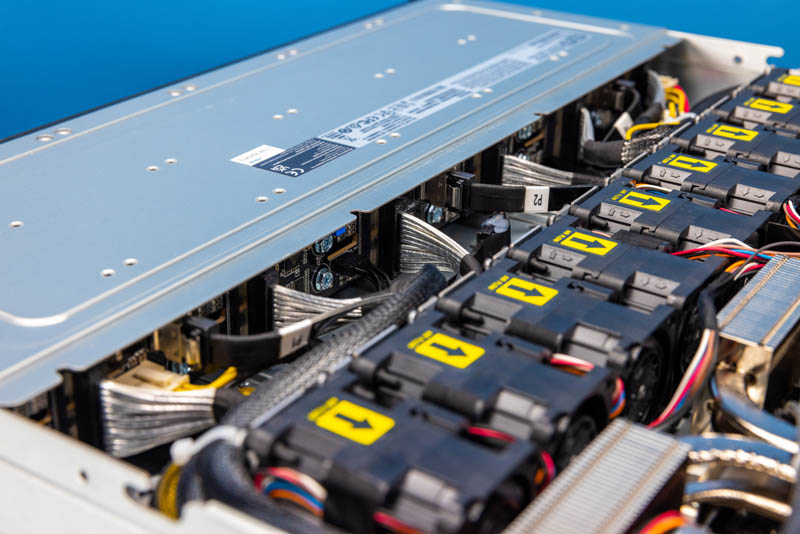

Here is a quick internal shot of the RS700-E11-RS12U. We are going to work from the front of the chassis back in our internal overview.

One nice feature ASUS includes is the NVMe backplane. The 12-slot backplane has cabled connections for NVMe SSD connectivity. There are also cables that provide 12x SATA III ports, one to each bay, from the Intel C741 PCH. Fewer servers offer this SATA connectivity stock now that NVMe SSDs have taken over the market. Still, since it is there, if you do want to use a SATA SSD or HDD, you do not need to find and install another cable that routes over the correct distance.

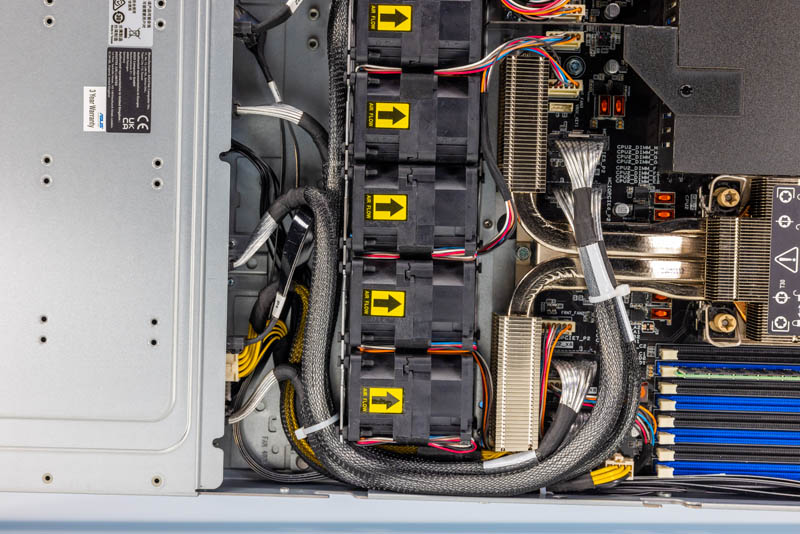

Behind the backplane is a series of nine dual fan modules. Like many 1U servers, these are not in hot-swap carriers as that adds a notable amount of cost to a system.

The nine fan modules are split with four cooling one CPU, four cooling the other, and the middle fan servicing both.

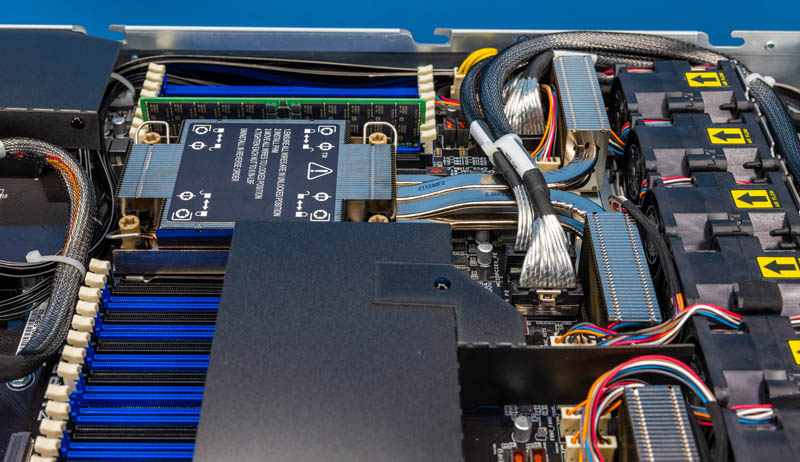

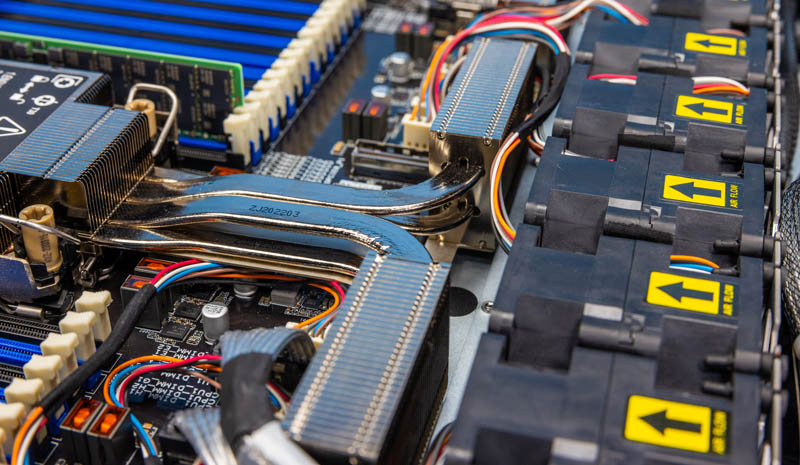

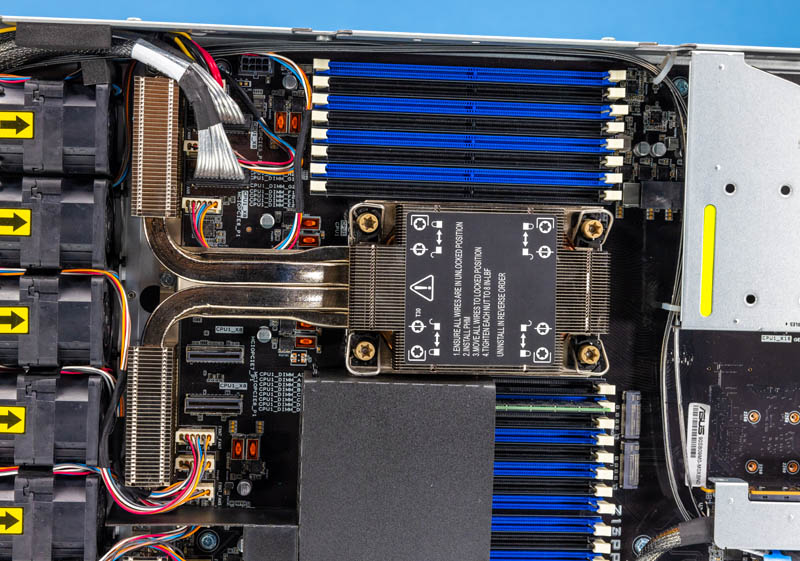

The airflow guide keeps air from going through the center 16x DDR5 DIMM slots and instead directs the majority of the airflow through the CPU heatsinks. The platform has a total of 16x DDR5 DIMM slots per CPU for 32x total.

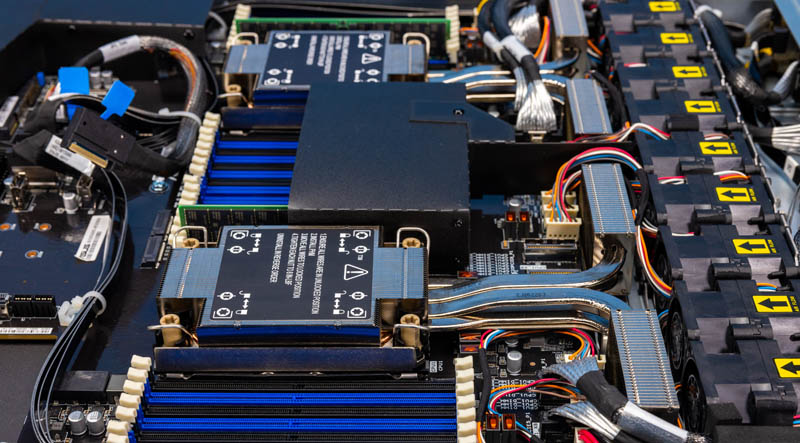

On the subject of the CPU heatsinks, the design ASUS is using worked surprisingly well. We tried up to 350W TDP 60-core Xeon Platinum parts and this server was able to cool them. As we will see in our performance section, this solution performed very well.

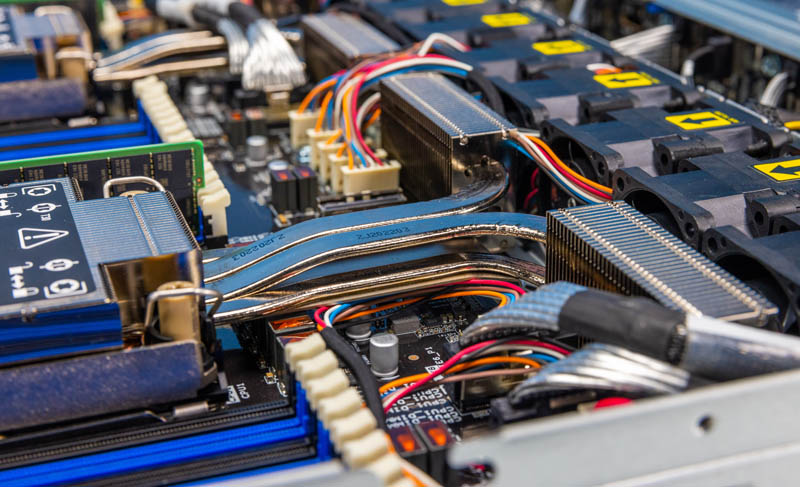

This is a gratuitous photo just because it was an interesting design with two levels of heat pipes.

Something else worth calling out is just how many MCIO PCIe Gen5 connectors there are in front of the Intel Xeon “Sapphire Rapids” CPU sockets. These MCIO connectors are used to provide front-panel PCIe connectivity for NVMe SSDs. In older motherboards, PCIe connectivity was all behind the CPUs and memory. In newer systems, where PCIe Gen5 trace lengths are a challenge, much of the I/O has migrated to in front of the CPU sockets, which creates additional challenges for cooling. Here, the cooling solution has to navigate around those front-side MCIO headers.

Here is a quick look at some of the PCIe connectivity between the CPUs and power supplies. We can see much of this is cabled now as well.

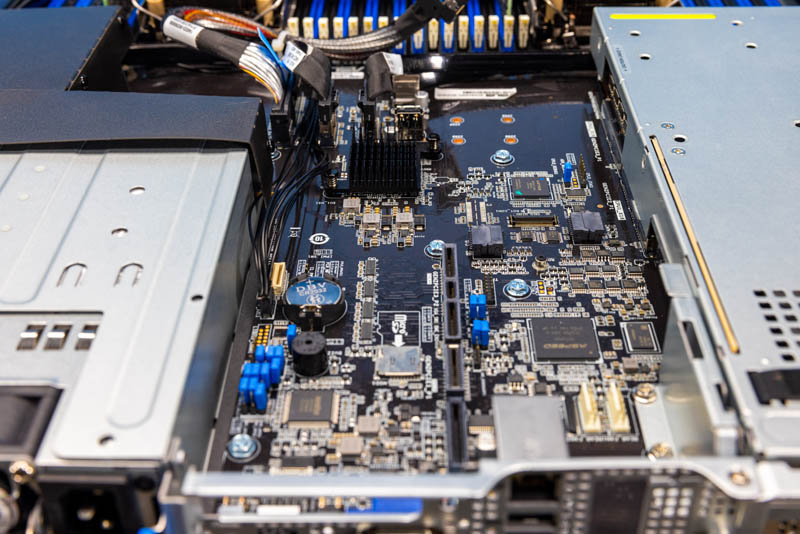

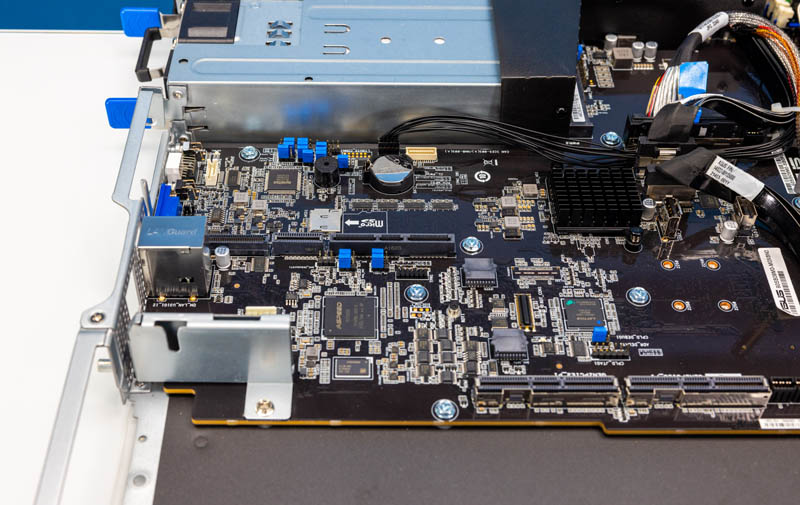

Here is the center section of the system. We can see the PCIe and SATA cables next to the Emmitsburg C741 PCH. We can then see a riser connector in the center along with a microSD card slot and an ASPEED AST2600 BMC.

There are additional connectors for the riser at the edge of the motherboard as well. Between the PCH and those risers are the two PCIe Gen4 M.2 slots for boot.

Here we have a dual-slot PCIe Gen5 x16 riser that can optionally handle dual-slot GPUs.

That riser is on the right rear of the system. The middle riser is more interesting. When the risers are installed, one can see the PCIe slot that is not located on the backplane. This is an internal slot designed to handle the ASUS PIKE RAID cards.

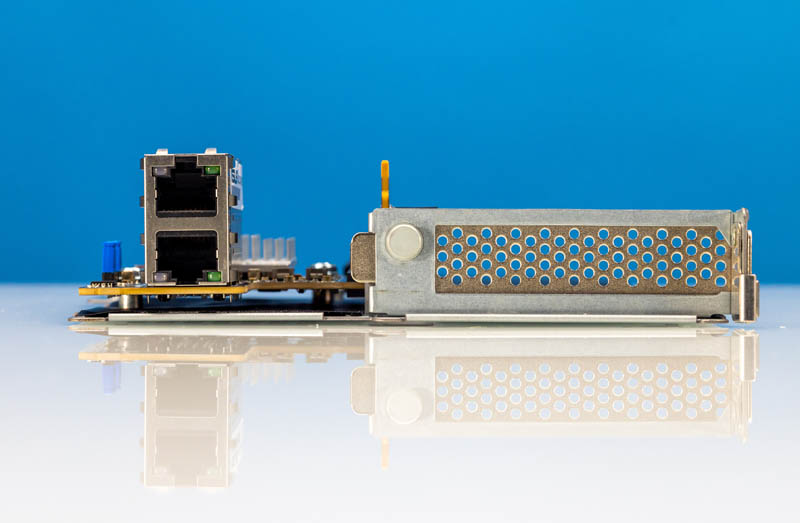

This center riser also has the Intel X710 dual 10GBASE-T LOM and another low-profile PCIe slot.

Here is another view of the riser and its three main features.

Next, let us take a look at the topology to see how this is all wired together.

It’s amazing how fast power consumption is going up in servers… My ancient 8-core-per-processor 1U HP DL360 Gen9 in my homelab has 2x 500 Watt Platinum power supplies (well, also 8x 2.5″ SATA drive slots versus 12 slots in this new server, no on-board M.2 slots, and fewer RAM slots).

So IF someone gave me one of these new beasts for my basement server lab, would my wife notice that I hijacked the electric dryer circuit? Hmmm.

@carl, you don’t need 1600W PSUs to power these servers. Honestly, I don’t see a use case where this server uses more than 600W, even with one GPU; I guess ASUS just put in the best-rated 1U PSU they could find.

Data Center (DC) “(server room) Urban renewal” vs “(rack) Missing teeth”: From when I first landed in a sea of ~4000 Xeon servers in 2011 until I powered off my IT career in said facility in 2021, pre-existing racks went from 32 servers per rack to far fewer per rack (“missing teeth”).

Yes, the cores per socket went from Xeon 4, 6, 8, 10, 12 to EPYC Rome 32s. And yes, with each server upgrade I was getting more work done per server, but fewer servers per rack in the circa 2009 original server rooms in this corporate DC after maxing out power/cooling.

Yes, we upped our power/cooling game in the 12-core Xeon era with immersion cooling as we built out a new server room. My first group of vats had 104 servers (2U/4-node chassis) per 52U vat… The next group of vats, with the 32-core Romes, we could not fill (yes, still more cores per vat though)… So again, losing ground on a real estate basis.

….

So do we just agree that as this hockey stick curve of server power usage grows quickly, we live with a growing “missing teeth” issue over upgrade cycles, perhaps start to look at 5 – 8 year “urban renewal” cycles (rebuild of the given server room’s power/cooling infrastructure at great expense) instead of 2010-ish perhaps 10 – 15 year cycles?

For anyone running their own data center, this will greatly affect their TCO spreadsheets.

@altmind… I am not sure why you can’t imagine it using more than 600W when it used much, much more (+200W @70% load, +370W at peak) in the review, all without a GPU.

@Carl, I think there is room for innovation in the DC space. I don’t see the power/heat density changing, and it is not exactly easy to “just run more power” to an existing DC, let alone more cooling.

Which leads to the current nominal way to get out of the “Urban renewal” vs “Missing teeth” dilemma as demand for compute rises and new compute devices’ power/cooling needs per unit rise: “Burn down more rain forest” (build more data centers as our cattle herd grows).

But I’m not sure every place wants to be Northern Virginia, or wants to devote a growing % of its energy grid to facilities that use a lot of power (thus requiring more hard-to-site power generation facilities).

As for “I think there is room for innovation in the DC space”, this seems to be a basic physics problem that I don’t see any solution for on the horizon. Hmmm.
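To put rough numbers on the “missing teeth” point raised in the comments above, here is a minimal Python sketch. It assumes a rack is limited by both its physical height and a fixed power budget. The 42U height, the 12 kW budget, and the 500W older-server draw are hypothetical values chosen only for illustration. The 970W figure is one reading of the comment above (600W plus the quoted +370W at peak). The function name `servers_per_rack` is likewise made up for this example.

```python
def servers_per_rack(rack_units: int, server_units: int,
                     rack_power_w: int, server_power_w: int) -> int:
    """Return how many servers fit when both space and power are limits."""
    by_space = rack_units // server_units      # limit from rack height
    by_power = rack_power_w // server_power_w  # limit from the power budget
    return min(by_space, by_power)


# Hypothetical rack: 42U tall with a 12 kW power budget (assumed values).
RACK_UNITS = 42
RACK_POWER_W = 12_000

# Per-server draw in watts. 500 is an assumed figure for an older 1U server;
# 970 is 600W + 370W, one reading of the peak number quoted in the comments.
scenarios = [("older 1U server", 500), ("newer 1U server at peak", 970)]

for label, watts in scenarios:
    fitted = servers_per_rack(RACK_UNITS, 1, RACK_POWER_W, watts)
    empty = RACK_UNITS - fitted  # the "missing teeth"
    print(f"{label}: {fitted} servers, {empty}U left empty")
```

With these assumed inputs, the power budget rather than the rack height is the binding limit in both cases: 24 older servers versus 12 newer ones in the same rack. Real facilities would also need to account for cooling capacity, PSU redundancy, and typical rather than peak draw, none of which this sketch models.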