ASRock Rack 8U8X-GNR2 SYN B200 NVIDIA HGX B200 Assembly

The NVIDIA HGX B200 8-GPU assembly sits on a tray that pulls out of the front of the chassis. Between the compute silicon, the HBM packages, thermal sensors, and so forth, GPUs can fail, and there are eight of them, so the HGX tray is a service item. Unlike in some other systems, you do not have to remove 17-20 components and pull the entire system at least partially out of the rack to service these. Instead, the tray slides right out.

There are latches on either side of the front of the chassis.

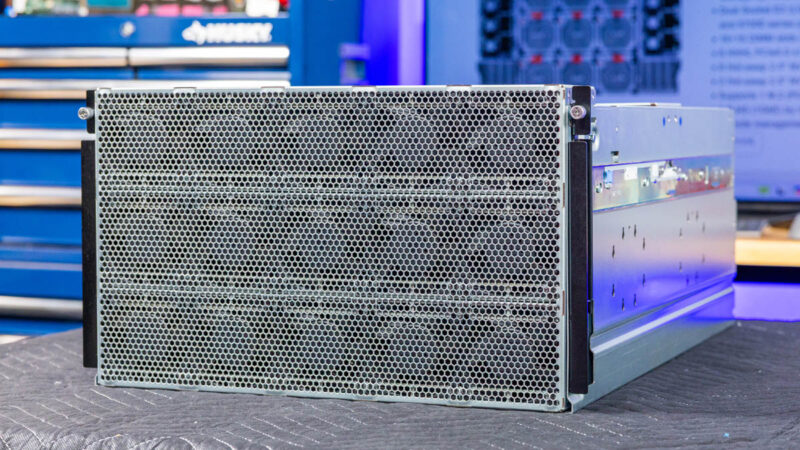

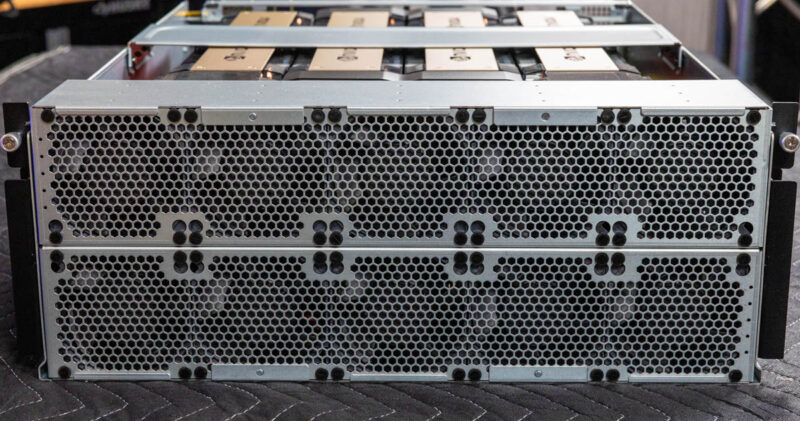

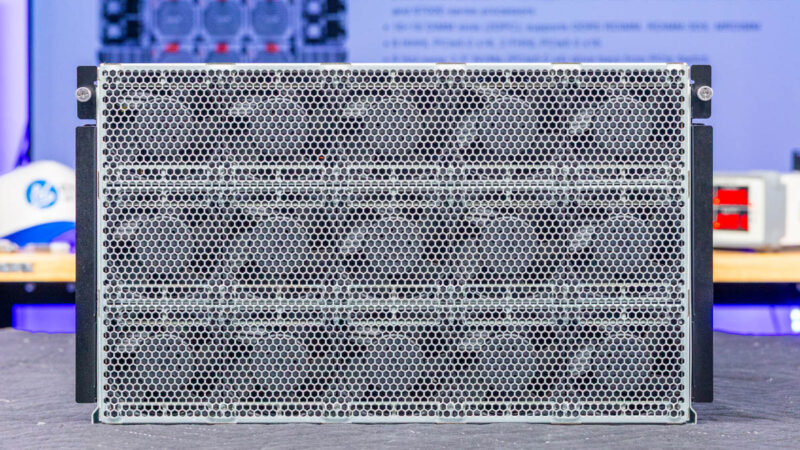

The NVIDIA HGX H200 generation had a 4U air cooled tray. With the NVIDIA HGX B200 generation, this is now a 6U tray, so we are not putting it on its side. Even though we have been reviewing these SXM systems for almost a decade, this is the first generation where it feels wrong to put it on its side as the heatsinks are massive.

The design here is really cool. Instead of designing some kind of custom sliding mechanism, ASRock Rack is just using a standard King Slide rail set to align the HGX tray to the chassis. It is exactly like using those rails at a server level, except instead of the outer rails being installed in a rack, they are installed in the server.

Just for some frame of reference, here is the 4U NVIDIA HGX H200 assembly in its tray for comparison to the new 6U NVIDIA HGX B200 assembly we have in this new generation.

The front of the assembly is a giant fan wall that has grown by 2U and gained another row of fans in this generation. Fans are generally reliable, but these do take a second to swap. In most hyper-scale data centers with huge numbers of machines, if you ask how many spare fans they have, the most common answer we hear is “a few” since fans fail so rarely. Still, it would be nice for these to be a front hot-swap item in the future.

Those fans have an important job: cooling the NVIDIA HGX B200 8-GPU.

These fans need to cool the NVIDIA Blackwell GPUs, NVLink switches, and more. The key to the NVIDIA HGX 8-GPU platform is the set of NVIDIA NVLink switches that allow the eight GPUs to take advantage of high-speed inter-GPU links.

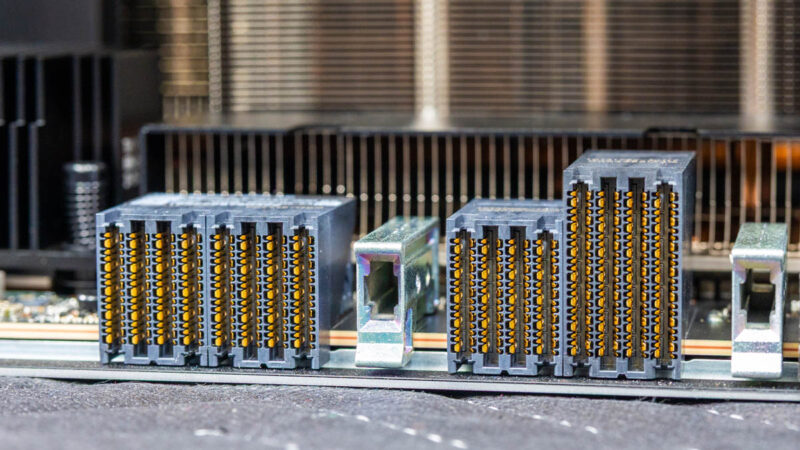

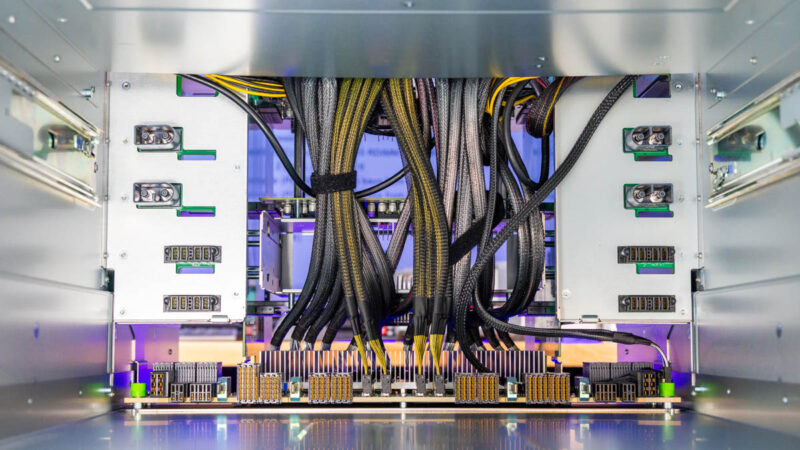

Connecting these to the main system is a big deal, so we have high-density UBB connectors on the baseboard.

We can see that each of these is an NVIDIA HGX B200 SXM6 180GB HBM3e GPU.

With eight GPUs, that is over 1.4TB of HBM3e memory.
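For anyone who wants to check that figure, here is a minimal sketch of the arithmetic. It uses the 180GB per GPU and eight GPUs mentioned above, and it assumes decimal units (1TB = 1000GB), which is our assumption about how the capacity is marketed. The constant and function names are purely illustrative.

```python
# Capacity figures taken from the text above.
GPUS_PER_BASEBOARD = 8   # HGX B200 8-GPU assembly
HBM_PER_GPU_GB = 180     # per-GPU HBM3e capacity on the label

def total_hbm_gb(gpu_count: int = GPUS_PER_BASEBOARD,
                 per_gpu_gb: int = HBM_PER_GPU_GB) -> int:
    """Return the combined HBM capacity in GB across all GPUs."""
    return gpu_count * per_gpu_gb

total_gb = total_hbm_gb()
# Assumption: decimal terabytes (1 TB = 1000 GB).
total_tb = total_gb / 1000
print(f"{total_gb} GB = {total_tb:.2f} TB")  # prints "1440 GB = 1.44 TB"
```

That works out to 1440GB, or 1.44TB, which is where the "over 1.4TB" figure comes from.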

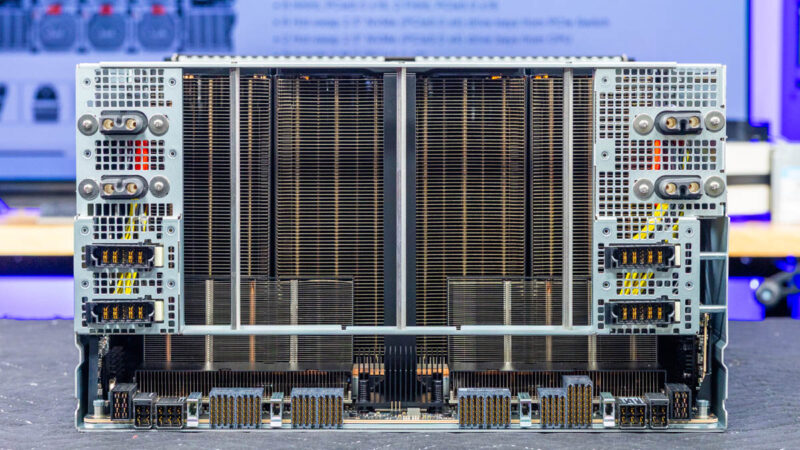

Here is a look at the tray from the rear so you can see just how tall these heatsinks are. The larger heatsinks certainly make this assembly heavy enough that we needed two people to move it.

In the back, we have the mating connectors for the power and data feeds to this board.

Bringing this full circle, here is the inside of the system where these connectors mate.

That PCIe switch board is extremely important for these servers. Next, let us get to the rear of the server.

The system from Pegatron looks exactly the same.

The delta over 5 years (Fall? 2020) in the cost, power usage, capability of the 8-way Nvidia servers is quite impressive (along with a $5T market cap.)

Toward the end of my IT career I installed two dual X86 (Xeon or Epyc?) eight A100 based Nvidia servers (vendor: Lambda). My circa 2019 racks supported < 10 KW iirc. The servers (one per rack) were perhaps 4U and cost just one Starbucks coffee < $100K each.

Change is happening quite fast. These things are practically outmoded 2 years after being installed (H100 hourly rental costs 2024 vs 2025)…