Putting it Together: Marvell ThunderX2 v. 190 Raspberry Pi 4 4GB Nodes

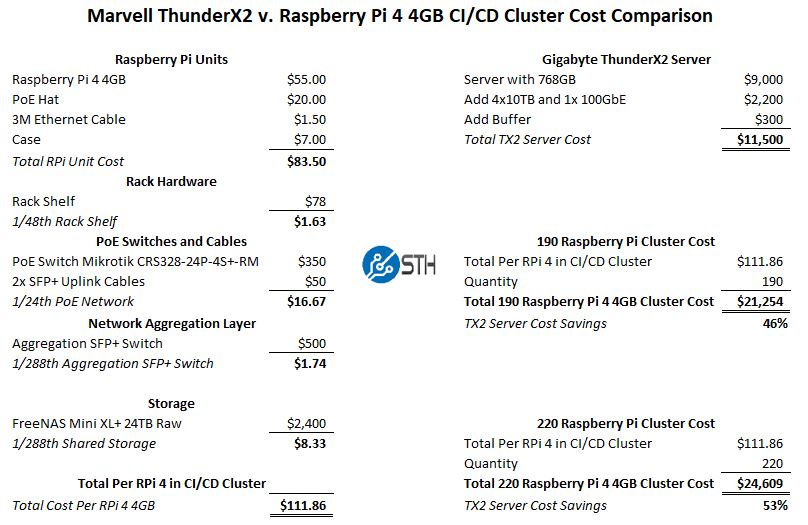

As you can imagine, what started as a simple ask took a few weeks to work through. In the end, here is the back-of-the-envelope result we came up with:

Using our 190-node Raspberry Pi 4 4GB cluster as the baseline, the ThunderX2 is about 46% less expensive. That means you end up paying about 1.85x as much to use “low cost” SBCs instead of a server.

Since someone will undoubtedly want the 220-node number: there the ThunderX2 is about 53% less expensive, so the Pi cluster costs about 2.14x as much.
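The step from "X% less expensive" to "Nx as much" is easy to get backwards, so here is a minimal sketch of the conversion. The function name `cost_multiplier` is hypothetical; the only inputs are the rounded percentages quoted above.

```python
def cost_multiplier(percent_cheaper: float) -> float:
    """If the server is `percent_cheaper` percent less expensive than the
    Pi cluster, return how many times the server's cost the cluster costs."""
    server_fraction = 1.0 - percent_cheaper / 100.0  # server cost / cluster cost
    return 1.0 / server_fraction                      # cluster cost / server cost


for nodes, pct in [(190, 46), (220, 53)]:
    print(f"{nodes} nodes: server {pct}% cheaper -> cluster costs "
          f"{cost_multiplier(pct):.2f}x as much")
```

With the rounded 53% input, this gives about 2.13x rather than 2.14x; the small gap presumably comes from the unrounded figures behind the article's numbers.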

Going Beyond the Numbers for TCO

The initial purchase costs are only part of the equation. In terms of rack space, the Raspberry Pi 4 solution uses four shelves with 48 nodes each. Each shelf requires a minimum of 2U, but to keep them serviceable, most organizations leave 2-3U of extra space above the nodes. That means we have 4x 4U or 4x 5U for the shelves with the nodes. We also have an aggregation switch plus eight 24-port PoE switches, for a total of 9U of switches. We also need to put the FreeNAS Mini XL+ we are using somewhere. All told, this solution takes 26U in the best case.
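A rough sketch of that rack-unit tally, assuming 1U per switch and about 1U for the NAS. The article does not state the NAS footprint; 1U is simply the remainder implied by the 26U best-case figure. The function and parameter names are hypothetical.

```python
import math


def pi_cluster_rack_units(nodes: int = 190,
                          nodes_per_shelf: int = 48,
                          shelf_u: int = 2,
                          service_gap_u: int = 2,
                          ports_per_poe_switch: int = 24,
                          nas_u: int = 1) -> int:  # assumed NAS footprint
    shelves = math.ceil(nodes / nodes_per_shelf)            # 4 shelves for 190 nodes
    poe_switches = math.ceil(nodes / ports_per_poe_switch)  # 8 x 24-port PoE switches
    switch_u = poe_switches + 1                             # plus 1 aggregation switch, 1U each
    return shelves * (shelf_u + service_gap_u) + switch_u + nas_u


print(pi_cluster_rack_units())                 # best case: 2U of service space per shelf
print(pi_cluster_rack_units(service_gap_u=3))  # with 3U of service space per shelf
```

Under these assumptions, the 3U-gap variant comes out at 30U, against a single 2U server.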

We are comparing that to a single 2U server, so even in the best case the Pi cluster needs about 13x the rack space. If rack space is valuable, you can plug those figures into your own TCO calculator.

Assuming 5W per Raspberry Pi 4 4GB, that is 190 × 5W = 950W of power for the nodes. The nine switches can use ~40W each, not counting the PoE power they deliver to the nodes. We also have to add power for the NAS. That makes this a ~1400W solution. In our Updated Cavium ThunderX2 Power Consumption Results, we saw under 900W, so the cluster draws roughly 500W more. Our Silicon Valley labs pay $250-300 per kW provisioned each month, which means the recurring costs are $125-150 more for us each month. Over two years, that is $3000-3600. Other regions have lower power costs.
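The power arithmetic above can be sketched as follows. The NAS draw is not given, so it is left out of the node-and-switch figure, and the ~500W delta uses the article's rounded ~1400W and "under 900W" numbers, with 900W treated as an upper bound. Function names are hypothetical.

```python
def node_and_switch_watts(nodes: int = 190, watts_per_node: float = 5.0,
                          switches: int = 9, watts_per_switch: float = 40.0) -> float:
    """Pi nodes plus switches; switch figure excludes PoE power already
    counted per node. The NAS is not included."""
    return nodes * watts_per_node + switches * watts_per_switch


def extra_provisioned_cost(delta_kw: float, usd_per_kw_month: float,
                           months: int = 24) -> tuple[float, float]:
    """Monthly and total cost of provisioning `delta_kw` extra kilowatts."""
    monthly = delta_kw * usd_per_kw_month
    return monthly, monthly * months


print(node_and_switch_watts())  # before adding the NAS
delta_kw = (1400 - 900) / 1000  # ~1400W cluster vs. <900W ThunderX2
for rate in (250, 300):
    monthly, two_year = extra_provisioned_cost(delta_kw, rate)
    print(f"${rate}/kW: ${monthly:.0f}/month, ${two_year:.0f} over two years")
```

Swapping in a lower regional `usd_per_kw_month` shows how quickly this line item shrinks outside Silicon Valley.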

The Raspberry Pi 4 4GB cluster has a total of 190 nodes, 9 switches, and 1 NAS, for 200 devices that can fail. A single failure may not take down the entire cluster, but a failure somewhere in the cluster is much more likely than a failure of a single server. Conversely, if that single server fails, everything goes down.
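To see why "much more likely" holds, consider a simple model: with n independent devices that each fail with probability p in a year, the chance of at least one failure is 1 - (1 - p)^n. The 1% per-device rate below is purely hypothetical, as are the assumptions of independence and equal rates across Pis, switches, and the NAS.

```python
def p_any_failure(devices: int, annual_failure_prob: float) -> float:
    """Probability that at least one of `devices` independent devices fails
    in a year, assuming each fails with the same probability."""
    return 1.0 - (1.0 - annual_failure_prob) ** devices


P_HYPOTHETICAL = 0.01  # hypothetical 1% annual failure rate per device
cluster = p_any_failure(190 + 9 + 1, P_HYPOTHETICAL)  # nodes + switches + NAS
server = p_any_failure(1, P_HYPOTHETICAL)
print(f"cluster: {cluster:.1%} chance of at least one failure per year")
print(f"server:  {server:.1%}")
```

The model ignores blast radius: a PoE switch failure takes its 24 nodes offline, while a server failure takes everything down, which is exactly the trade-off described above.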

There are a few more intangibles here. First, the ThunderX2 system runs standard OSes out of the box: download Ubuntu or Red Hat and install. The Raspberry Pi 4 is not fully SBSA compliant, so one ends up using embedded images or special installers. The Gigabyte ThunderX2 server also has features like hot-swappable power supplies and out-of-band management, which make it easier to integrate into a data center.

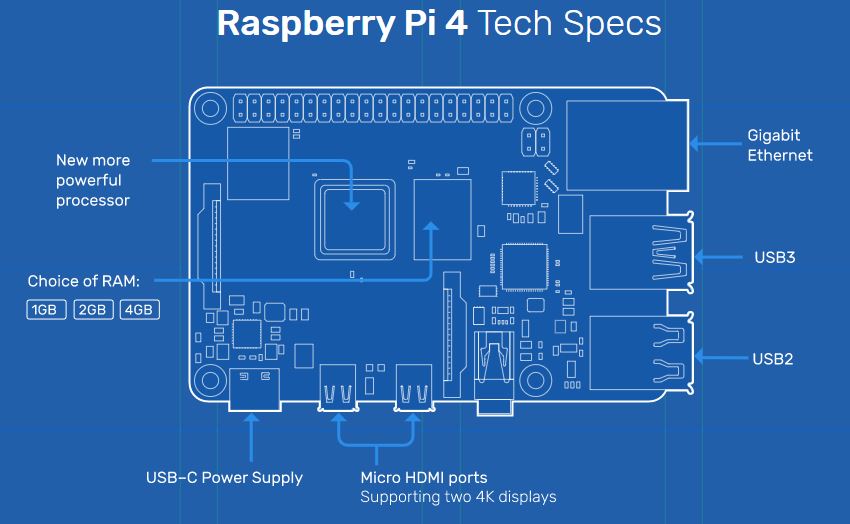

The Raspberry Pi 4 boards have an integrated GPU that we are not using here. NVIDIA announced Arm support for GPU-accelerated computing, so that would probably be how we would add GPU compute to our ThunderX2 design. Still, if you are using the GPU, SBCs are admittedly better. We were looking at this for a CI/CD pipeline focused on Arm CPU performance.

Finally, there is how you get there. The Raspberry Pi 4 cluster will take a lot more time to build than simply racking a 2U server and plugging in 6 cables. With the Raspberry Pi 4, both the time spent and the capital outlay can come in smaller chunks, which is one reason those clusters are popular. For many startups and hobbyists, spending a few hundred dollars each week or month to add nodes may be easier to handle than purchasing a server upfront.

Final Words

Ever since we did our Cavium ThunderX2 Review and Benchmarks, I have wondered how it compares to lower-end boards. Over the past few weeks, the server market has changed. With the AMD EPYC 7002 launch, AMD now has the performance crown, core counts, PCIe Gen4 I/O expansion, and price advantage over Intel. Once we started to see those numbers, the question turned beyond Intel to the Arm ecosystem.

When I was first approached about this project, I assumed that a Raspberry Pi 4 4GB cluster was cheaper. After all, the boards are $55 each, a price tag with three fewer digits than a server's. After doing the work, it turns out that just getting a big server is easier, and likely less expensive. I am fairly conservative with my numbers; someone could take what we have here and argue that the Raspberry Pi 4 cluster costs closer to 3x the server.

Admittedly, there is wiggle room on both ends to make the Raspberry Pi 4 cluster look either better or worse, and the intangibles we went over make this far from an exact science. Still, doing the exercise at least gives others who may want to take a similar track a framework to go by, which is why we are sharing it on the main site.

There is still a ton of merit to small Raspberry Pi clusters. Even at 20-30 nodes, they make a ton of sense. By the time you are looking to do 100 nodes in a data center, it is probably worth at least exploring an Arm server if you are doing Arm on Arm (AoA) development.

We’ve done something similar to yours using Packet’s Arm instances and AWS Graviton instances. We came out closer to 175-180, but our per-RPi4 costs were higher because we did not use el cheapo switches like you did, and we assumed a higher-end case and heatsinks.

What about HiSilicon and eMAG?

Well, I guess using a single-OS-instance server also simplifies a lot of the CI/CD workflow, as you do not need to work out all the clustering trickiness: you just run on one single server, like you would run on a single RPi4. There is no need to cut a beautiful server into hundreds of small VMs just to later make that a cluster. Sure, for the price calculation this is needed, but in real life it just adds more trouble. Hence your TCO calculation adds even more points in favor of the single server, since you save the effort of keeping a cluster of RPis up and running.

That’s a Pi 3 B+ with the PoE HAT in the picture, not a Pi 4.

Maybe a better cluster option – BitScope delivers power through the header pins and can use busbars to power a row of 10 blades. For the Pi 3 clusters I built, it was $50 per blade (4 Pis per blade) and $200 for a 10-blade rack chassis.
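Spread over a fully populated chassis, those Pi 3-era prices work out per Pi roughly as follows. This is a sketch assuming every chassis slot is filled; the function name is hypothetical.

```python
def bitscope_cost_per_pi(blade_usd: float = 50.0, pis_per_blade: int = 4,
                         chassis_usd: float = 200.0,
                         blades_per_chassis: int = 10) -> float:
    """Enclosure and power cost per Pi, assuming a fully populated chassis."""
    per_blade = blade_usd + chassis_usd / blades_per_chassis  # blade + its chassis share
    return per_blade / pis_per_blade


print(f"${bitscope_cost_per_pi():.2f} per Pi")
```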

Hi Patrick,

Thank you for the interesting article. In my opinion, to compare single-box vs. scale-out alternatives, one needs to consider the proper scaling unit. Rather than the RPi 4, I would try the OdroidH2+, as it offers more RAM and an SSD with much higher I/O throughput (even with virtualization to match the thread count).

By my rough count, two alternatives should be at least comparable in TCO to the ThunderX2 option:

A) 24 x OdroidH2+, 32GB RAM, 1TB NVMe SSD = (360 USD per SBC) + 1×24-port switch (no PoE) + 400W DC power supply

B) 48 x OdroidH2+, 16GB RAM, 500GB NVMe SSD = (240 USD per SBC) + 2×24-port switches (no PoE) + 800W DC power supply
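A quick sketch of what the two options total up to. Only the per-SBC prices are given, so switch and power supply costs are left out, and 1TB is counted as 1000GB. The class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class OdroidOption:
    boards: int
    ram_gb_per_board: int
    ssd_gb_per_board: int
    usd_per_board: int

    def totals(self) -> tuple[int, int, int]:
        """(SBC cost in USD, total RAM in GB, total SSD in GB); excludes switches and PSU."""
        return (self.boards * self.usd_per_board,
                self.boards * self.ram_gb_per_board,
                self.boards * self.ssd_gb_per_board)


option_a = OdroidOption(boards=24, ram_gb_per_board=32, ssd_gb_per_board=1000, usd_per_board=360)
option_b = OdroidOption(boards=48, ram_gb_per_board=16, ssd_gb_per_board=500, usd_per_board=240)
for name, option in (("A", option_a), ("B", option_b)):
    usd, ram_gb, ssd_gb = option.totals()
    print(f"{name}: ${usd} in SBCs, {ram_gb}GB RAM, {ssd_gb}GB SSD")
```

Both options land on the same aggregate RAM and SSD capacity; B trades a higher SBC bill for twice the node count.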

Regards,

Milos

Hi Milos – the reason we did not use that OdroidH2+ is that it is x86. We were specifically looking at Arm on Arm here.