A few weeks ago, I had the pleasure of meeting a Silicon Valley dev-ops team that has space not far from us in our Fremont colocation. Their racks were decidedly different: shelves of ODROID and Raspberry Pi devices instead of traditional servers. I asked about the setup and was told it is for Android CI/CD (continuous integration/delivery) pipelines. My first thought was “cool,” but once I found out the slick way they were doing it, I asked why they were not virtualizing. It turns out they did not think it was possible. When we look at the x86 server space, big virtualized servers outsell small devices, so why is it different in Arm?

Recently we were asked to look at doing a quick TCO calculation on using either a virtualized ThunderX2 server or Raspberry Pi 4 for Android CI/CD. We were given a specific metric: GeekBench on Android. The company also wanted to look at what I am calling Arm on Arm (AoA) since it did not want to use translators or emulation with x86 servers. It was time to take a journey of discovery and see if it makes fiscal sense to get an Arm server.

The Apparently Typical Arm CI/CD Setup

I have now seen several of these setups. Data centers do not allow photos, but the basic setups are similar. Take a basic Arm single-board computer (SBC). Provide it power and a way to boot and orchestrate code. Scale this to hundreds of devices and you have your Arm CI/CD pipeline. Many virtualize and emulate/translate, but if you want your Arm code to run on Arm, you end up with these massive Arm SBC “server” farms. We are going to use the Raspberry Pi 4 4GB as our base unit here since it is popular. Popular enough that we have seen BitScope Raspberry Pi Clusters with 144 nodes for rack-scale designs.

Powering the Raspberry Pi Clusters

Power is typically handled by a PoE switch, which provides power and data networking via a single Ethernet cable. When you have hundreds of Raspberry Pis, minimizing cables is a serious consideration, I am told. There are a few other benefits, chiefly that many managed PoE switches can remotely turn ports on and off. Connected devices then reboot, which also allows them to potentially PXE boot (note that at the time of writing, the PXE boot feature is planned but not implemented for the Raspberry Pi 4).

This requires a PoE HAT but removes the need for other power sources, cables, and outlets. I have seen a cluster with several dozen nodes and individual USB power inputs, and it looked like a rat’s nest in comparison.

Using PoE simplifies the process, but there are a few more pieces involved. One needs a PoE switch. The power draw of each device is small, but you still need a lot of ports and some safety margin. I have seen a few Cisco switches along with Netgear GS752TXP switches used. Generally, these have SFP+ 10GbE uplinks serving 48 ports. Upstream is usually a 48-port SFP+ switch. For every 48 devices, one needs one PoE switch, four SFP+ DACs, and four upstream SFP+ ports (or a single QSFP+ breakout port).
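As a rough illustration of how that networking bill of materials scales, here is a minimal Python sketch. It assumes the ratios described above (48 devices per PoE switch, four SFP+ uplinks per switch, one DAC per uplink). The function name `poe_network_plan` and its parameters are hypothetical, chosen only for this example.

```python
import math


def poe_network_plan(nodes, ports_per_switch=48, uplinks_per_switch=4):
    """Estimate PoE switches, SFP+ DACs, and upstream SFP+ ports for a cluster.

    Assumes one PoE switch per `ports_per_switch` devices and one DAC plus one
    upstream port per uplink, as described in the text. These defaults are
    illustrative assumptions, not a vendor specification.
    """
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    # A partially filled switch still has to be purchased, so round up.
    switches = math.ceil(nodes / ports_per_switch)
    uplinks = switches * uplinks_per_switch
    return {
        "poe_switches": switches,
        "sfp_plus_dacs": uplinks,
        "upstream_sfp_plus_ports": uplinks,
    }


if __name__ == "__main__":
    # Example: a 144-node rack-scale cluster.
    print(poe_network_plan(144))
```

For a 144-node cluster this works out to three PoE switches, twelve DACs, and twelve upstream SFP+ ports. A cluster that does not divide evenly by 48 still pays for the partially filled switch.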

Storage

Most of the setups we are seeing provision via some sort of shared storage. We have seen some fairly low-end setups here including a QNAP TVS-951X 9-Bay NAS servicing a few hundred nodes. We have seen a few other clusters with their own local media, especially with lower-speed networking.

Other Odds and Ends

The other odds and ends tend to be cases. Cases, heatsinks, and fans keep devices cool and provide some protection. Among those that use external PoE splitters, we have seen simple solutions such as a $6 case with a zip tie and, in one instance, a rubber band to keep the splitter and main case together.

Generally, one can use Velcro and an inexpensive shelf to fit 48 Raspberry Pis per shelf, which can then mate to a switch to keep cable runs short and organized.

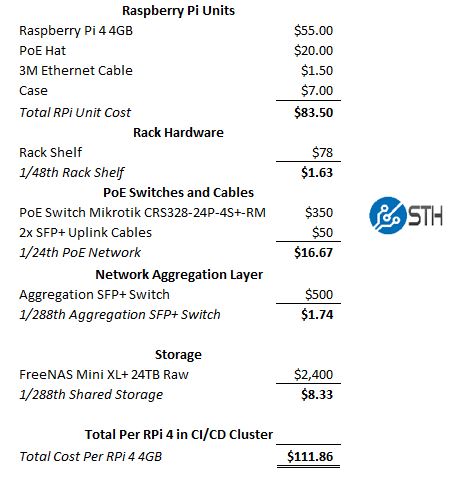

Raspberry Pi 4 Cluster Cost

If we want to compare an Arm server to a Raspberry Pi 4, we need to get a cost per unit for the Raspberry Pi 4 from above. Tallying up the total cost here:

We are using an unreleased $500 MikroTik CRS326-24S+2Q+RM as our aggregation switch, but that should be out soon. Further, instead of the Cisco and Netgear units we have seen, we are using the MikroTik CRS328-24P-4S+RM, which allows you to scale in increments of 24 nodes. Still, the actual cost of the $55 Raspberry Pi 4 4GB is closer to twice what the board costs by the time you get it into an easy-to-manage cluster format, and that is being conservative. Using higher-end network gear, as we see in the actual clusters, adds 10% or more to the per-node cost.

Again, one can assume a much higher oversubscription rate for networking and skip the 10GbE uplink-capable PoE switch, but we are trying to match what we have seen actually deployed. There are cheaper boot drive options, but we wanted to use a setup that provides some redundancy and snapshot capabilities, like those we can get in a virtualized environment.

With a $111.86 per Raspberry Pi 4 4GB node cost, we now need two more pieces of information: relative performance and ThunderX2 server cost.
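To make the overhead concrete, the short Python sketch below works only from figures quoted in this article: the $55 board price, the $111.86 per-node cost, and the "10% or more" uplift for higher-end network gear. The function name `overhead_summary` is hypothetical, and the 10% uplift is treated as a flat multiplier on the per-node cost, which is a simplifying assumption.

```python
BOARD_COST = 55.00   # Raspberry Pi 4 4GB board price quoted in the article
NODE_COST = 111.86   # fully loaded per-node cost quoted in the article


def overhead_summary(board_cost, node_cost, network_uplift=0.10):
    """Summarize how much cluster infrastructure adds on top of the bare board.

    `network_uplift` models the "10% or more" the article attributes to
    higher-end network gear, applied as a flat multiplier (an assumption).
    """
    overhead = node_cost - board_cost
    return {
        "overhead_per_node": round(overhead, 2),
        "multiple_of_board": round(node_cost / board_cost, 2),
        "with_higher_end_network": round(node_cost * (1 + network_uplift), 2),
    }


if __name__ == "__main__":
    print(overhead_summary(BOARD_COST, NODE_COST))
```

With these inputs, the non-board overhead is $56.86 per node, or about 2.03 times the board price, and the flat 10% uplift would put a node at roughly $123.05.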

We’ve done something similar to you using Packet’s Arm instances and AWS Graviton Instances. We were closer to 175-180 but our per-RPi4 costs were higher due to not using el cheapo switches like you did and we assumed a higher end case and heatsinks.

What about HiSilicon and eMAG?

Well, I guess using a single-OS-instance server also simplifies a lot of the CI/CD workflow, as you do not need to work out all the clustering trickiness; you just run on one single server, like you would run on a single RPi4. There is no need to cut a beautiful server into hundreds of small VMs just to later make that a cluster. Sure, for the price calculation this is needed, but in real life it just adds more trouble, hence your TCO calculation adds even more pro points to the single server, as you save on the stuff that keeps a cluster of RPis up & running.

That’s a Pi 3 B+ in the picture with the POE hat not a Pi4

Maybe a better cluster option – Bitscope delivers power through the header pins, and can use busbars to power a row of 10 blades. For Pi3 clusters I did, it was $50 per blade/4 Pis, and $200 for 10 blade rack chassis.

Hi Patrick,

Thank you for the interesting article. In my opinion, to compare single-box vs. scale-out alternatives, one needs to consider the proper scaling unit. I would try not the RPi 4 but the OdroidH2+, as it offers more RAM and an SSD with much higher IO throughput (even with virtualization to get the number of threads).

If I am counting roughly, then these 2 alternatives should be at least comparable in TCO to the ThunderX2 alternative:

A) 24 x OdroidH2+, 32GB RAM, 1TB NVMe SSD = (360 USD per 1 SBC) + 1×24 Port Switch (no PoE) + DC Power Supply 400W

B) 48 x OdroidH2+, 16GB RAM, 500 GB NVMe SSD = (240 USD per 1 SBC) + 2×24 Port Switch (no PoE) + DC Power Supply 800W

Regards,

Milos

Hi Milos – the reason we did not use that OdroidH2+ is that it is x86. We were specifically looking at Arm on Arm here.