The ASRock Rack EPYC4000D4U Motherboard: an AMD EPYC 4005 “Grado” Platform

A few weeks ago, we reviewed the AMD EPYC 4004 powered Lenovo ThinkSystem ST45 V3. Two common lines of comments were that folks wanted more flexibility and an integrated BMC. Of course, we knew we had the ASRock Rack EPYC4000D4U in line for this launch. With its mATX form factor, it is a standard-size motherboard that fits in plenty of chassis.

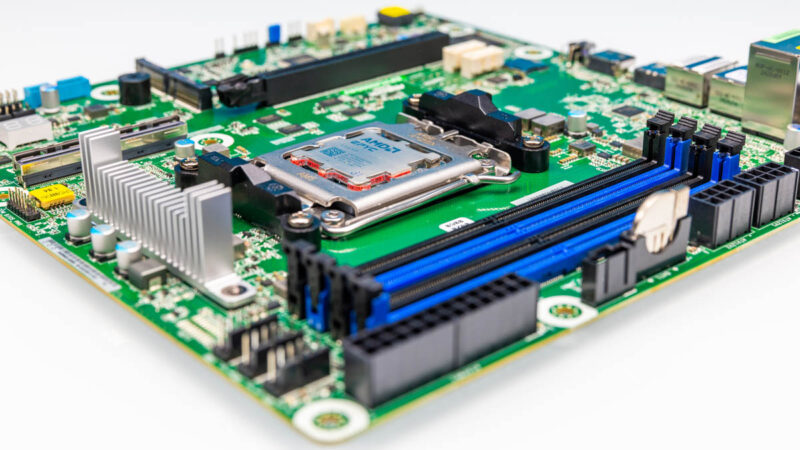

Here we have it with the AMD EPYC 4565P 16-core part. Of note, the board states that 170W parts need liquid cooling, but it does support the higher-power CPUs. Some server platforms only support the lower-power 65W TDP parts.

Unlike the Lenovo ThinkSystem ST45 V3, we get a full set of four DIMM slots, and in the video we showed this system with 192GB of DDR5-5600 ECC UDIMM memory (4x 48GB). Note that when you populate two DIMMs per channel (2 DPC), the memory drops to DDR5-3600.
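To put the 2 DPC penalty in numbers, here is a rough sketch of theoretical peak memory bandwidth. It assumes two memory channels (four slots at 2 DPC) and 8 data bytes per channel per transfer; ECC bits carry no user data, and real-world throughput will land below these peaks.

```python
def ddr_peak_gb_s(channels: int, mt_s: int, bus_bytes: int = 8) -> float:
    """Theoretical peak: channels x 64-bit (8-byte) data bus x megatransfers/s, in GB/s."""
    return channels * bus_bytes * mt_s / 1000

# Assumption: two channels, as implied by four DIMM slots at 2 DPC.
one_dpc = ddr_peak_gb_s(2, 5600)  # 1 DPC, DDR5-5600
two_dpc = ddr_peak_gb_s(2, 3600)  # 2 DPC, DDR5-3600
print(f"1 DPC DDR5-5600: {one_dpc:.1f} GB/s")
print(f"2 DPC DDR5-3600: {two_dpc:.1f} GB/s ({1 - two_dpc / one_dpc:.0%} lower)")
```

In other words, filling all four slots for capacity costs roughly a third of the theoretical peak bandwidth.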

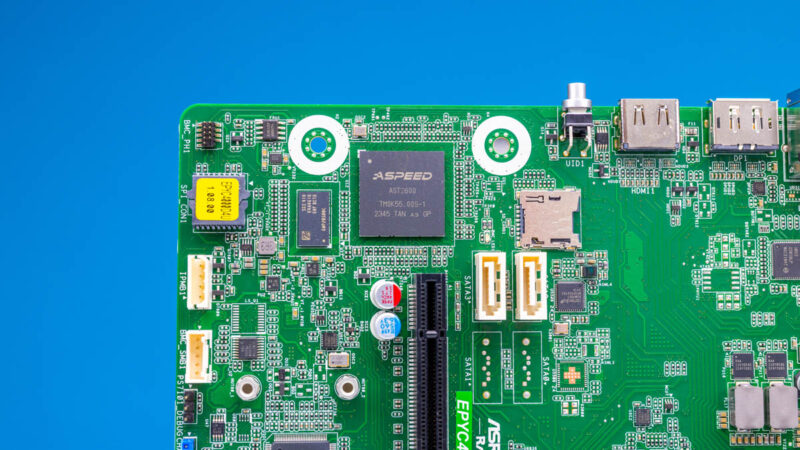

Onboard, we have an ASPEED AST2600 management controller for IPMI and out-of-band management.

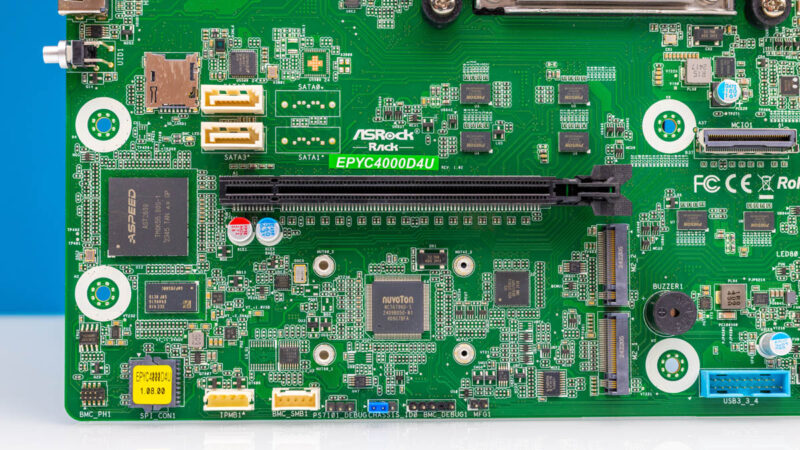

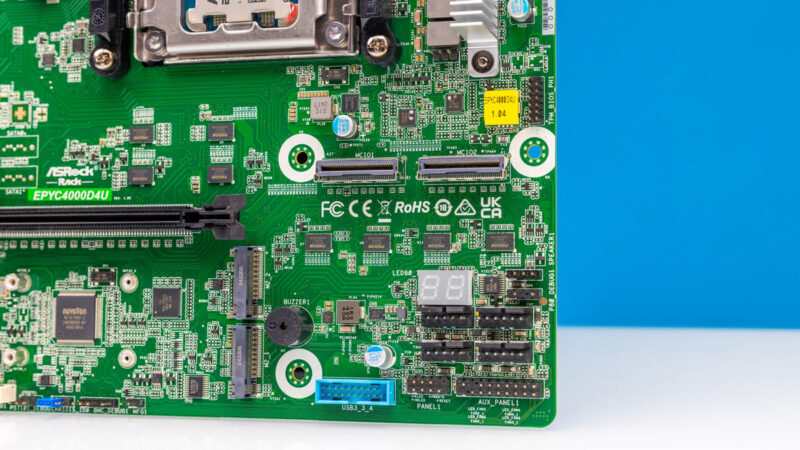

On the bottom, we have a PCIe Gen5 x16 slot and two M.2 slots. That is fewer physical slots than the Lenovo, but the benefit is that the board is very easy to work on.

There are also two MCIO connectors for additional connectivity options.

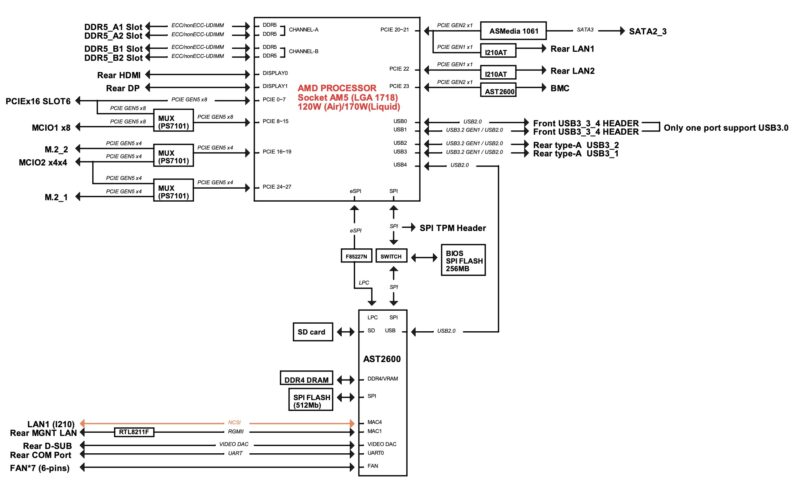

Of course, the platform itself does not have enough lanes to run all of these at full width simultaneously. The block diagram shows how the onboard Phison chips help reassign lanes depending on which devices are populated.
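A back-of-the-envelope lane budget shows why some sharing is needed. This is a sketch under stated assumptions: the 28-lane CPU figure is an assumption about AM5-based EPYC 4005 parts, the MCIO port widths are hypothetical placeholders, and the script only counts lanes; it does not model how the Phison chips actually switch them.

```python
# Assumption: PCIe Gen5 lanes exposed by an AM5-socket EPYC 4005 CPU.
CPU_LANES = 28

# Link widths per device; the MCIO widths are hypothetical, not board specs.
devices = {
    "pcie_x16_slot": 16,
    "m2_1": 4,
    "m2_2": 4,
    "mcio_1": 4,  # hypothetical width
    "mcio_2": 4,  # hypothetical width
}

def lane_budget(cpu_lanes: int, demands: dict[str, int]) -> tuple[int, int]:
    """Return (lanes requested at full width, lanes missing from the CPU budget)."""
    requested = sum(demands.values())
    return requested, max(0, requested - cpu_lanes)

requested, shortfall = lane_budget(CPU_LANES, devices)
print(f"requested {requested} lanes, {shortfall} more than the CPU provides")
```

Even with conservative x4 MCIO widths, the full-width total exceeds the CPU budget, which is exactly the gap that lane muxing on the board has to cover.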

That also brings us to an important aspect of these parts: you can use a chipset to get more lanes, but you do not need to. An Intel C266 PCH, for example, uses something like 6W and adds cost to the motherboard. With the EPYC 4005 series, you do not need the chipset, which makes the design much more modern, like AMD’s higher-end EPYC CPUs and Intel’s new Xeon 6700 and Xeon 6900 series. It is a bit strange, but the entry server market is one where Xeon still uses a chipset for I/O.
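For a rough sense of what a chipset costs to run, the sketch below converts a constant power draw into energy per year. The 6W figure is the estimate above, treated as constant for simplicity; the electricity price is a hypothetical placeholder, and the result ignores power supply losses, so wall-side numbers would be somewhat higher.

```python
HOURS_PER_YEAR = 24 * 365

def annual_kwh(watts: float) -> float:
    """Energy used by a constant load running all year, in kWh."""
    return watts * HOURS_PER_YEAR / 1000

pch_kwh = annual_kwh(6)   # ~6W PCH, assumed constant
price_per_kwh = 0.30      # hypothetical electricity price in EUR/kWh
print(f"{pch_kwh:.2f} kWh/year, about EUR {pch_kwh * price_per_kwh:.2f} "
      f"at EUR {price_per_kwh:.2f}/kWh")
```

Swap in a local tariff to see how much a chipset-free design saves on an always-on box.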

Next, let us get to the performance.

Since it has no chipset, unlike the Supermicro H13SAE-MF, I’m curious about the idle power consumption of this board. This could be a gem for those of us in Europe.

I know that it is just nitpicking and that I really shouldn’t expect a consistent naming scheme from any company at this point, but I really wish that they had just called the v-cache model 4585PX3D, just like the desktop counterparts. Or would that be too straightforward?

Any idea when these will be available in retail? What was the announcement-to-Newegg-availability lag with the 4004 announcement?

Oh, I can answer my own question: Newegg says release on June 13.

Second that. Any data on the idle power consumption of this platform would be appreciated.

Geir and Kawaii, I will try to get ahold of one of these boards and test power consumption!

Would these be suitable for building an M.2 Gen5 x2 SSD-only NAS? How many PCIe lanes can I typically count on being available for storage?

When the title said “Intel is exposed,” I thought this would include a bit about “TRAINING SOLO – On the Limitations of Domain Isolation Against Spectre-v2 Attacks,” with a comparison between the two CPUs, the number of microcode patches in the wild, and the resulting performance loss.

You sound like a broken record, good Patrick, but you’re right. AMD wins. Intel’s savior is that it takes so long for Dell and HPE to make new models, and neither cares about this space compared to AI systems, because it takes hundreds of these little servers to earn the revenue of an HGX. If Dell and HPE don’t sell, then Intel wins.

@Rob

There’s another Intel vulnerability this cycle discovered by ETH Zurich with a performance penalty – CVE-2024-45332. AMD is not affected.

Crucial needs to start making 64GB ECC UDIMMs at a tolerable price premium, stat. 256GB kits, anyone? Put a logo on the packaging along the lines of “AMD EPYC 4000 series ready”.

I just have to shake my head every time I think about how incompetent Intel has been in the high-end consumer / entry-level server market, an area they once dominated. It’s been obvious for years that the heterogeneous laptop architecture and endless artificial product segmentation by the marketing groups were absolutely killing them. These two things led them to constantly be late to market with the wrong product. It’s rearranging the deck chairs on the Titanic at this point. I know this is a relatively small market, but the fact that Pat never flushed the management in these groups is, to me, one of the many reasons he had to go. Other than the random, annoying model numbers, AMD is executing perfectly here.

Can anyone confirm whether these new chips are drop-in compatible with any existing motherboard series?

I’m curious how much of the Xeon E situation is down to heterogeneity in desktop parts making it genuinely more laborious to keep the Xeon Es up to date, since they have to either be distinct parts or leave a lot of disabled ‘E’ cores on the table; and how much is margin-driven and cannibalization-averse SKU slicing.

AMD presumably has an easier time making an Epyc 4005, since it’s pretty close to a Ryzen with some amount of binning and validation, rather than a distinct P-core-only monolithic chip that is not borrowed directly from any Core Ultra; but (going by the benchmarking of non-X3D Zen5 Ryzen vs. current Intel desktop P-cores) it seems as though a P-core part of similar core count absolutely wouldn’t suck, unless your application really needs a 4585PX, in which case the results aren’t going to be even close. (Maybe the Xeon Max HBM stuff, in small quantities, could actually be done at an acceptable price; but there is no sign of them trying that at present.)

However, Intel can’t be thrilled at the idea that Xeon E basically needs to do twice as much work to be worth the money they currently want for it; especially if there are Xeon Ds or bottom-tier Xeon Scalables that are ‘supposed’ to fill those niches at their (higher) prices.

Between their current P-cores being at least reasonably credible vs. current Zen5, and them having the option to throw some of the networking features of Xeon D into Xeon E (or, if they want a fast solution, slapping a Xeon D into an LGA package), it seems like Intel absolutely could have a Xeon E that is actually worth buying. I’m just much less clear that they would want to sell one, especially if they can still use OEM loyalty to shift Xeon Es to customers for whom, if it can’t be purchased from Dell or HPE, it doesn’t exist.

@fuzzyfuzzyfungus:

I think it comes down to Intel’s inability to produce their smaller designs themselves. They can’t source enough chiplets from TSMC to satisfy even the huge OEMs’ desktop needs. There’s still no Dell OptiPlex or Inspiron based on Arrow Lake despite the platform being from 2024. Even their newest Dell Tower line still carries older Raptor Lake, with Arrow Lake available only in the highest SKUs. Same for HP.

Before Arrow Lake was released, Intel had planned to manufacture the CPU chiplets itself on its 20A process, then only the lower-end parts, but in the end everything is made by TSMC and the 20A process was abandoned.

The entire Arrow Lake platform had problems, since Meteor Lake-S was supposed to be the first CPU on LGA-1851, but it too was so bad that the entire line got canceled and repurposed as the embedded-only Meteor Lake-PS.

@fuzzyfuzzyfungus:

I agree with you that one approach could be to make a cut-down Granite Rapids-D. They have plenty of options to make a competitive product. That’s why it’s completely baffling to me that they decided to base Xeon E/W and consumer desktop off their laptop architectures. As the article pointed out, the inclusion of E-cores created other problems, like the P-cores losing AVX-512 support, something Intel pioneered! They desperately need an entry-level P-core-only design to cover 4-16 cores, whether monolithic or chiplets. And it needs to have all the features that the E-core team forced them to remove, or that the marketing teams tried to segment into a ridiculous number of derivative SKUs (AVX-512, FP16, etc.).

The Asus B650 TUF Gaming Plus (WiFi), for example, already lists them as officially supported (the EPYC 4004 series too).

Quite a good board series. With the current BIOS and the proper BIOS settings, it also runs PCIe Gen5 on the x16 GPU slot. I got one new for 120 euros (incl. tax).

Btw, with this board and a reasonably good power supply (e.g. Corsair RMx), you can get idle as low as ~14-15W with a 9700X on Windows (total system power draw in 230V-land; I have such a system) if you have no extra PCIe cards installed and use the iGPU only.

I’d guess EPYC 4005 will be the same.

Zen5 Desktop paired with selected hardware can idle quite low. :-)

I’m trying to find a good motherboard capable of 2x M.2 and a PCIe x8 slot with lanes to the CPU (not the chipset).

I am mirroring my M.2 drives, and I have an HBA card for my spinning disks.

I’m surprised that it is impossible to find a motherboard in stock/available!

I’m also interested in the minimum/average power draw in watts. I don’t want an unexpectedly high power bill for a home server.

@praka

You can find a 2x M.2 card (one on each side) with an x8 pass-through slot on top. It is low enough to fit a low-profile HBA card on top in a normal case.

You bifurcate the PCIe x16 slot to x8/x4/x4 and you’re done. I’ve been running my NAS this way for four years now. The x4 slot takes a 10G NIC and the x1 slot takes a GPU (used for transcoding, so no bandwidth needed).

Unless you’re talking Intel, where bifurcation on non-Platinum Xeons is not for you.
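The x8/x4/x4 split described above can be pictured as carving the slot’s 16 lanes into consecutive links. This is a minimal sketch, not firmware behavior: the physical lane order per device and the allowed-width rule are simplifying assumptions, and which splits a given BIOS actually offers depends on the platform.

```python
ALLOWED_WIDTHS = (1, 2, 4, 8, 16)  # common PCIe link widths

def bifurcate(slot_width: int, split: list[int]) -> list[range]:
    """Map a bifurcation split such as [8, 4, 4] onto consecutive lane ranges."""
    if sum(split) != slot_width:
        raise ValueError(f"split {split} does not add up to x{slot_width}")
    if any(w not in ALLOWED_WIDTHS for w in split):
        raise ValueError("each link width must be x1, x2, x4, x8 or x16")
    lanes, start = [], 0
    for width in split:
        lanes.append(range(start, start + width))
        start += width
    return lanes

# Hypothetical device names for the adapter setup described above.
names = ["HBA (x8 pass-through)", "M.2 #1", "M.2 #2"]
for name, lanes in zip(names, bifurcate(16, [8, 4, 4])):
    print(f"{name}: lanes {lanes.start}-{lanes.stop - 1}")
```

A split that over- or under-commits the slot, such as `[8, 8, 4]`, raises `ValueError` instead of producing a lane map.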

Btw, four-lane PCIe 3.0 is enough for 8 SATA drives, and most HBAs are happy with that.
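A quick sanity check of that claim, as a sketch: it uses the raw line rates after encoding (8 GT/s with 128b/130b for PCIe 3.0, 600 MB/s per SATA III port after 8b/10b) and ignores protocol overhead. The ~250 MB/s per hard drive is an assumed sequential rate, not a measured one.

```python
def pcie3_gbytes_per_s(lanes: int) -> float:
    """PCIe 3.0: 8 GT/s per lane, 128b/130b encoding, 8 bits per byte."""
    return lanes * 8 * 128 / 130 / 8

def sata_gbytes_per_s(drives: int, per_drive_mb_s: float = 600) -> float:
    """Aggregate drive throughput; 600 MB/s is the SATA III interface ceiling."""
    return drives * per_drive_mb_s / 1000

link = pcie3_gbytes_per_s(4)
print(f"PCIe 3.0 x4 link: {link:.2f} GB/s")
print(f"8 SATA ports at interface max: {sata_gbytes_per_s(8):.2f} GB/s")
print(f"8 HDDs at ~250 MB/s each (assumed): {sata_gbytes_per_s(8, 250):.2f} GB/s")
```

So an x4 Gen3 link comfortably carries eight spinning disks, while eight SATA SSDs all running flat out could slightly exceed it.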

I am probably an outlier here, but I would still love to see more PCIe and quad-channel memory (192 – 256GB at DDR5-5600 for up to ~179GB/sec). The 16-core part is a bit memory-bandwidth-starved in certain scenarios. 16 cores is the sweet spot for our use case, so core count alone does not justify a jump to an 8004 or 9004/9005 series CPU. It would just be beneficial to run 8-12 NVMe drives (32-48 PCIe lanes) plus 8-16 PCIe lanes for NICs. That would require a platform to offer 64+ PCIe lanes (lanes are also needed for ancillary stuff like the BMC, etc.). Just personal experience, but this would be a killer edge / SMB server for most.
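The lane math in that wish list can be tallied in a few lines. This sketch assumes a full x4 link per NVMe drive and treats the 28-lane figure for an AM5-socket EPYC 4005 as an assumption; BMC and other ancillary lanes are left out, as they are in the ranges quoted above.

```python
LANES_PER_NVME = 4            # assumption: every NVMe drive gets a full x4 link
ASSUMED_EPYC_4005_LANES = 28  # assumption: lanes exposed by an AM5-socket EPYC 4005

def lanes_needed(nvme_drives: int, nic_lanes: int) -> int:
    """Drive lanes plus NIC lanes, excluding BMC and other ancillary devices."""
    return nvme_drives * LANES_PER_NVME + nic_lanes

low = lanes_needed(8, 8)     # 8 drives + x8 of NICs
high = lanes_needed(12, 16)  # 12 drives + x16 of NICs
print(f"{low}-{high} lanes needed vs. {ASSUMED_EPYC_4005_LANES} available (assumed)")
```

Even the low end of that range is well beyond what a single AM5-class socket offers, which is why the comment lands on 64+ lanes.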

Another comparison I would like to see is with the Epyc 4004 series, especially if the new V-Color 2x 64 GB DDR5 is available to show the generational change.