Now, for something that I am very excited about: the new Intel IPU E2200, codenamed Mount Morgan. Intel calls these IPUs, but again, the rest of the industry calls them DPUs. This is the update to the Intel IPU E2100 DPU. While I had photo opportunities with the E2100, we never actually got one for the lab, which I would never have guessed.

Perhaps it is now too late for that since the next generation is coming. Also, please note we are covering these live at Hot Chips, so please excuse typos.

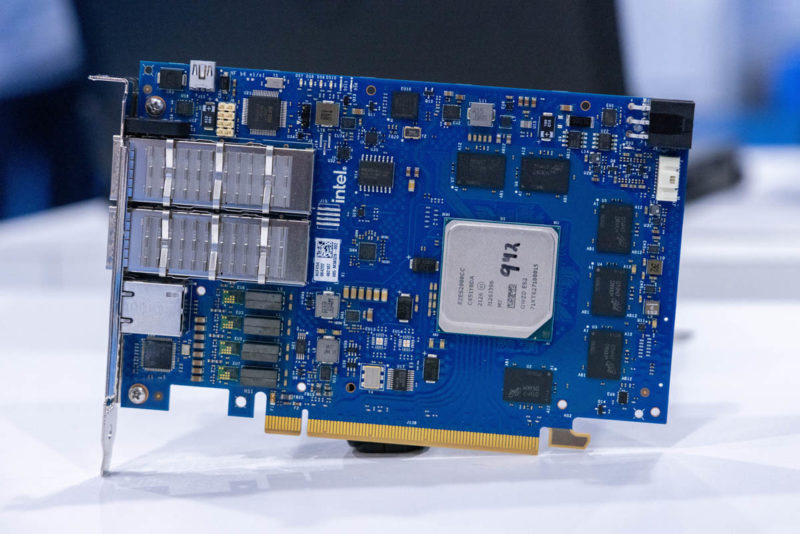

Intel IPU E2200 400G DPU at Hot Chips 2025

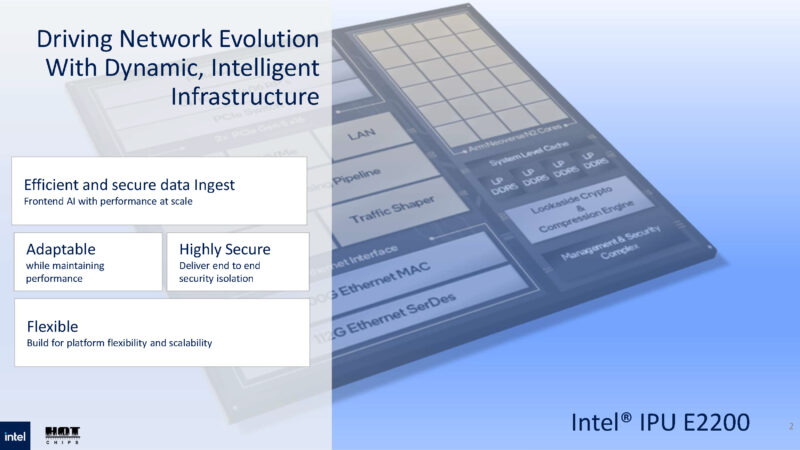

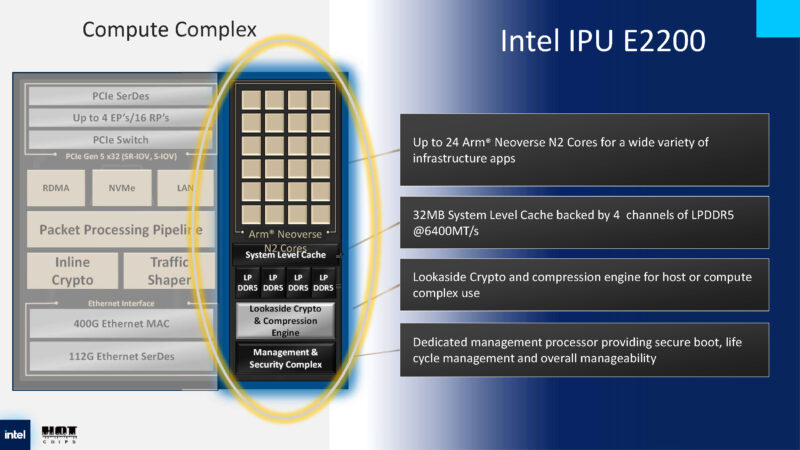

Here is the overview slide for the Intel IPU E2200 series. This is a TSMC N5 chip.

The goal of an IPU/DPU is to offload and accelerate common infrastructure workloads that will be delivered via networks. For some context, Google used the E2100 series as a DPU solution.

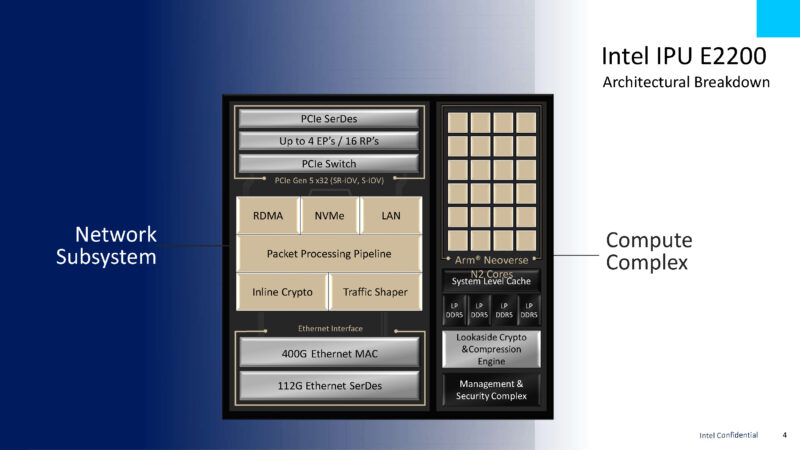

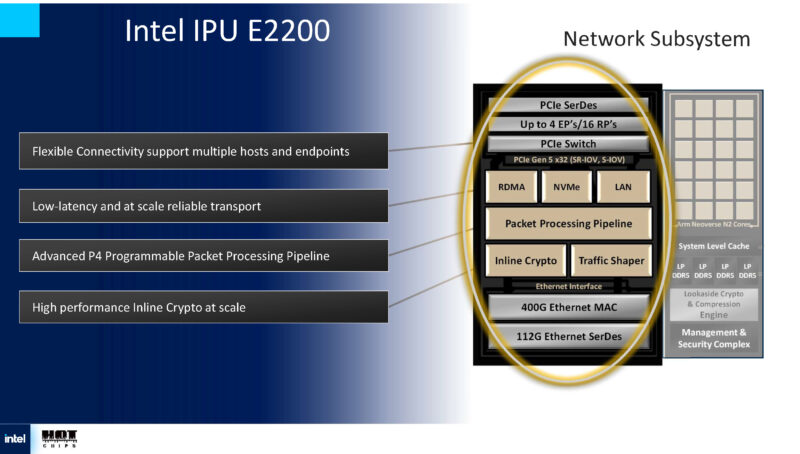

Now we are getting into the architecture. Here we can see that we have a 400G MAC, which is awesome. There is an Arm Neoverse N2 core compute complex. We can also see a PCIe Gen5 x32 domain with a PCIe switch built in. NVIDIA is leaning into its PCIe Gen6 switch with ConnectX-8, and the PCIe Gen5 switch in the BlueField-3 DPU is a major feature to make the DPU work.

In the networking subsystem, we have P4 programmability, high-performance crypto, and more. If you are wondering about the NVMe, the idea is that these DPUs can present NVMe devices over the network.

On the compute complex, there are up to 24 Arm Neoverse N2 cores fed by four channels of LPDDR5 memory.

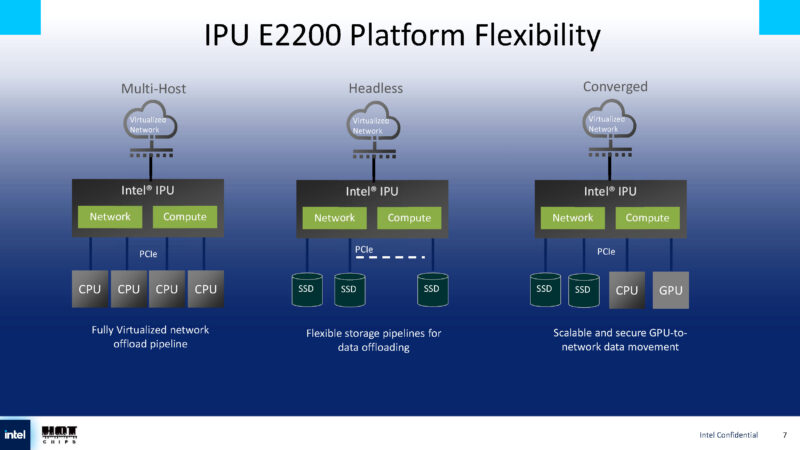

Intel says that this can be run multi-host, headless, and converged. Headless is like what we showed with the AIC J2024-04 2U 24x NVMe SSD JBOF Powered by NVIDIA BlueField DPU piece. In converged mode, it can operate as a mix. That is a lot of flexibility.

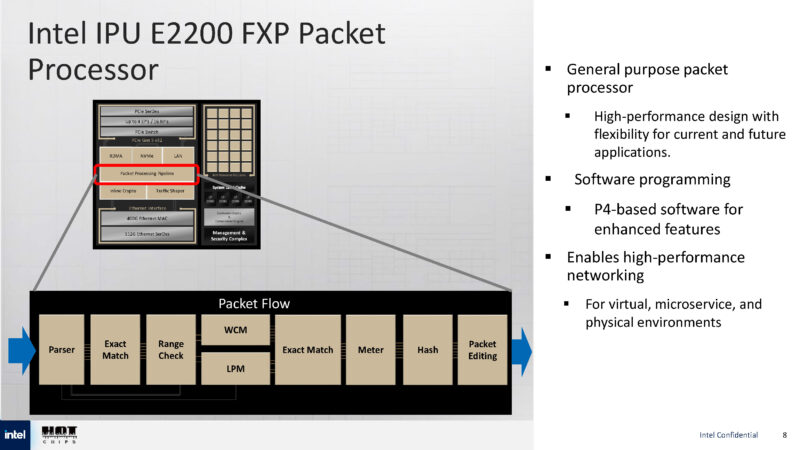

On the packet processing side, Intel is going into the FXP packet processor, which uses P4 for programmability and hardware configurability.

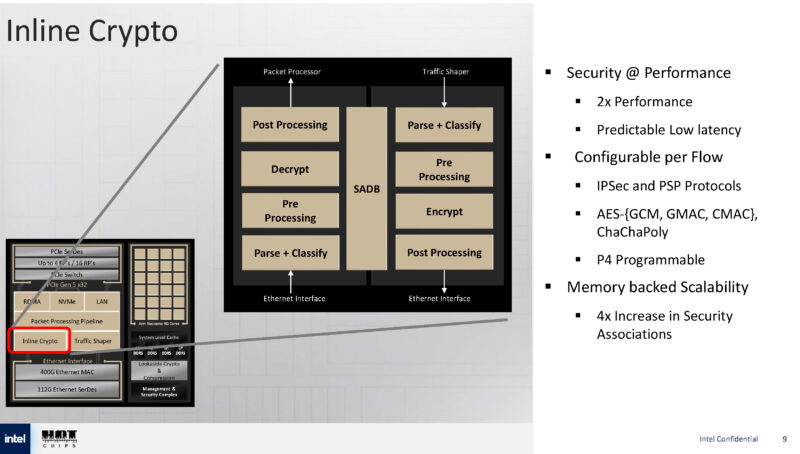
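For readers who have not worked with P4, the core idea is a match-action table: the pipeline builds a key from parsed header fields, looks it up, and runs the action attached to the matching entry. Here is a minimal Python sketch of that concept. This is not P4 code and it is not how the FXP is implemented; the class name, field names, and actions are all hypothetical, and it only handles exact matches.

```python
class MatchActionTable:
    """Toy exact-match table in the spirit of a P4 match-action stage.

    Hypothetical illustration only; not Intel's FXP pipeline or any real P4 API.
    """

    def __init__(self, key_fields, default_action="drop"):
        self.key_fields = tuple(key_fields)   # header fields that form the lookup key
        self.default = (default_action, {})   # returned when no entry matches
        self.entries = {}

    def add_entry(self, key, action, **params):
        # The control plane installs an entry: key -> (action name, action parameters).
        self.entries[tuple(key)] = (action, params)

    def apply(self, headers):
        # The data plane builds the key from the packet's parsed headers and looks it up.
        key = tuple(headers[name] for name in self.key_fields)
        return self.entries.get(key, self.default)


if __name__ == "__main__":
    table = MatchActionTable(key_fields=("dst_ip", "dst_port"))
    table.add_entry(("10.0.0.5", 443), "forward", port=2)

    hit = {"src_ip": "10.0.0.9", "dst_ip": "10.0.0.5", "dst_port": 443}
    miss = {"src_ip": "10.0.0.9", "dst_ip": "10.0.0.7", "dst_port": 80}
    print(table.apply(hit))
    print(table.apply(miss))
```

The appeal of the model is that the table layout and actions are defined by the program, while the entries are filled in at runtime by a control plane.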

There is also an inline crypto engine that is configurable per flow.

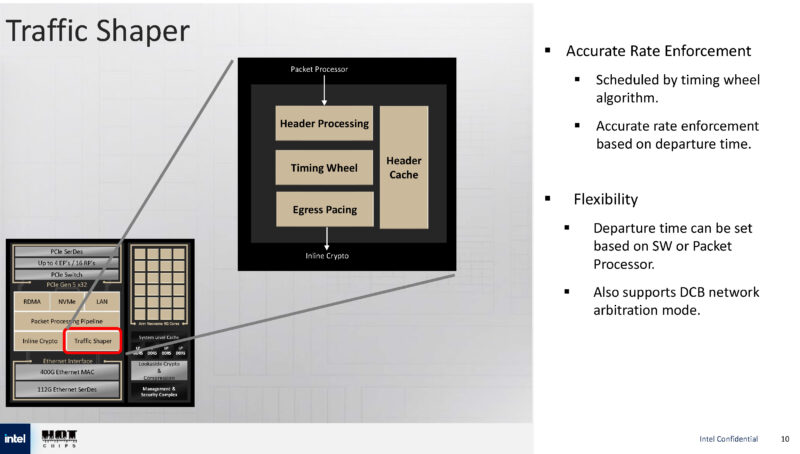

Intel also has a traffic shaper with a timing wheel algorithm.

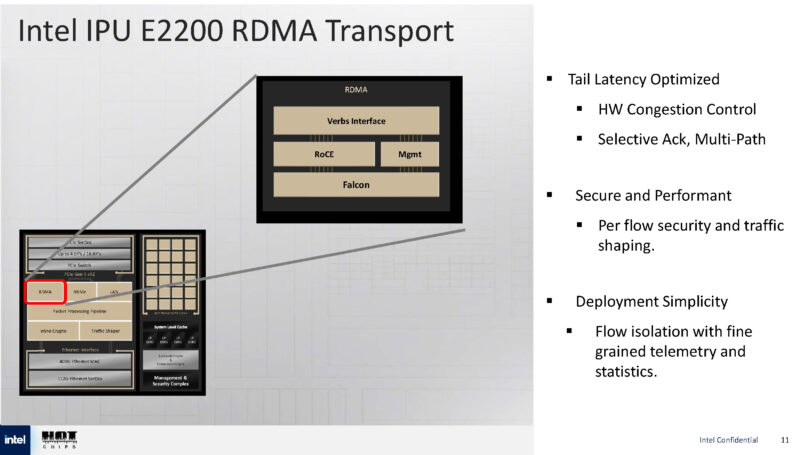
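If you have not run into timing wheels before, the general idea is to stamp each packet with a send time based on its flow's rate, drop it into the slot for that time, and have the transmit side drain one slot per tick. Here is a minimal Python sketch of the generic technique. Intel did not detail its hardware implementation in what we covered, so the class names, slot count, tick size, and the clamp-to-horizon behavior are all assumptions for illustration.

```python
from collections import deque


class TimingWheel:
    """Single-level timing wheel: slot i holds packets due in tick i (mod num_slots).

    Generic sketch only; not Intel's hardware design.
    """

    def __init__(self, num_slots, tick_ns):
        self.num_slots = num_slots
        self.tick_ns = tick_ns
        self.slots = [deque() for _ in range(num_slots)]
        self.current_tick = 0

    def schedule(self, packet, send_time_ns):
        # Convert the send time to a tick; never schedule into the past.
        tick = max(send_time_ns // self.tick_ns, self.current_tick)
        # Assumption: packets beyond the wheel's horizon are clamped to the last slot.
        tick = min(tick, self.current_tick + self.num_slots - 1)
        self.slots[tick % self.num_slots].append(packet)

    def advance(self):
        # Drain the slot for the current tick, then move the wheel forward by one.
        slot = self.slots[self.current_tick % self.num_slots]
        due = list(slot)
        slot.clear()
        self.current_tick += 1
        return due


class PacedFlow:
    """Tracks the earliest time a flow may send its next packet at a given rate."""

    def __init__(self, rate_bps):
        self.rate_bps = rate_bps
        self.next_send_ns = 0

    def stamp(self, size_bytes, now_ns):
        send_ns = max(now_ns, self.next_send_ns)
        # Serialization time of this packet at the flow's rate, in nanoseconds.
        self.next_send_ns = send_ns + size_bytes * 8 * 1_000_000_000 // self.rate_bps
        return send_ns


if __name__ == "__main__":
    wheel = TimingWheel(num_slots=8, tick_ns=1000)
    flow = PacedFlow(rate_bps=8_000_000_000)  # 1000-byte packets take 1000 ns each
    for i in range(3):
        wheel.schedule(f"p{i}", flow.stamp(1000, now_ns=0))
    for _ in range(4):
        print(wheel.advance())
```

The reason this structure is popular for shaping is that both scheduling and draining are constant-time operations, regardless of how many flows are being paced.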

Here is the RDMA transport engine slide:

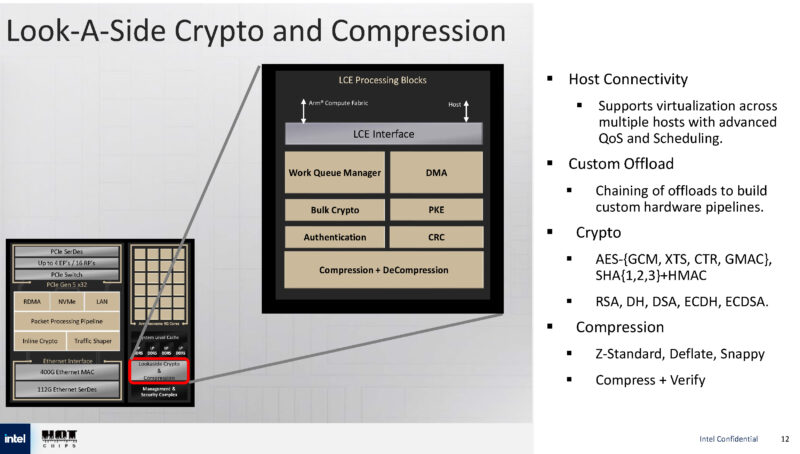

Something interesting is that there are both inline and lookaside crypto engines. The lookaside engine is not described as P4 programmable, which shows a bit of the difference between the two.

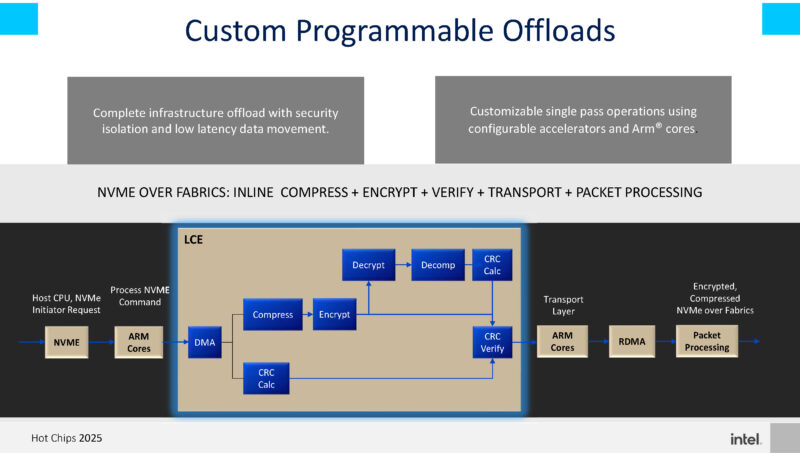

There are also programmable offload options using the different accelerators and IP blocks. As an example, some operations can happen on the network side, and some can hop over to the Arm side.

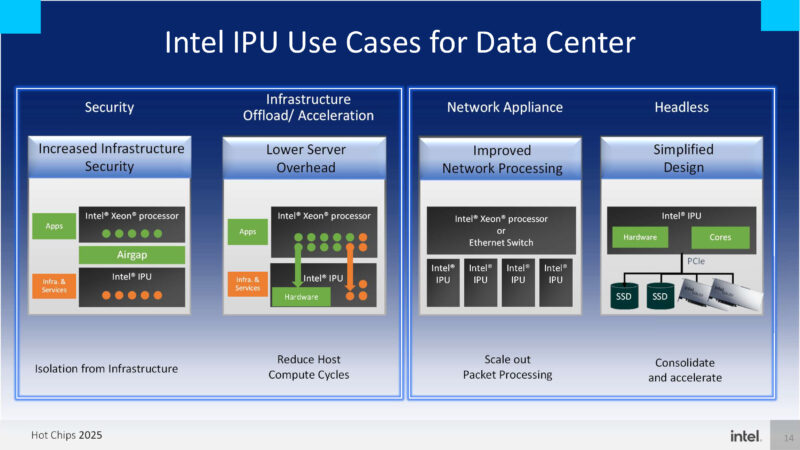

Intel has a number of use cases in the data center for its IPUs.

The big challenge is getting applications built around all of these.

Final Words

On one hand, this is awesome. NVIDIA needs competition in the DPU space. With Intel landing companies like Google and some Chinese hyperscalers with its IPUs, it has a lot of experience in this space. Hopefully we can see more Intel IPUs in the future (and get them in the lab!). At least with the E2200, Intel will match the 400G throughput of the BlueField-3 and AMD Pensando Salina 400 DPUs.