Host Memory Buffer SSDs are an industry development with one primary objective: minimizing SSD costs. We have started to see more HMB SSDs over the past year as the technology has matured. In this article, we will cover HMB basics and show why this is an important technology for NVMe SSDs in certain segments.

Host Memory Buffer (HMB) SSDs: The Basics

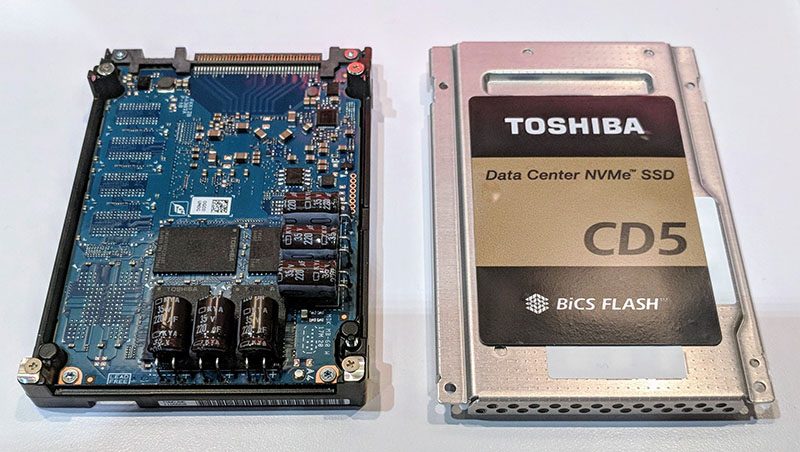

For generations, SSDs have had DRAM inside the drive. In the data center space, that is also why we have seen generations of SSDs with large capacitors that keep the DRAM powered in the event of power loss. These are features that add significant cost to SSDs.

The HMB SSD is not the first type of DRAM-less SSD, or even the first DRAM-less NVMe SSD, that we have seen. For example, many SATA DOMs were DRAM-less even before HMB. What makes HMB different is that it performs better than those earlier DRAM-less designs, and it was brought into the official NVMe 1.2 spec.

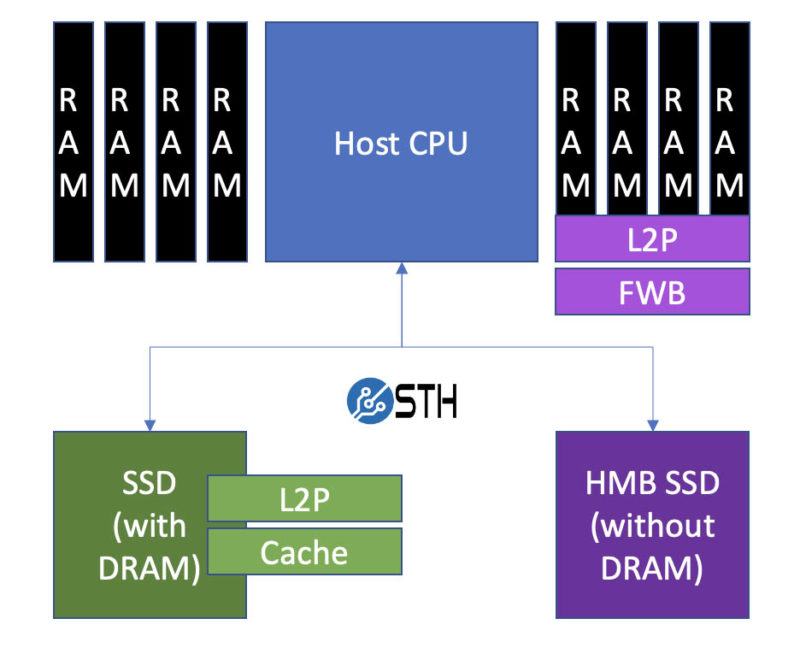

Perhaps the most important function that DRAM performed in SSDs for generations was holding the L2P (logical to physical) table. This is the mapping table that keeps track of where data is physically located on an SSD and gives it a logical address, so the OS does not need to keep track of the internal SSD data movements.
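To make the L2P idea concrete, here is a minimal sketch of a mapping table. The `L2PTable` class and its fields are hypothetical names for illustration, not how any particular controller firmware is written. It only shows the core behavior: a rewrite of the same logical address lands on a new physical page, and the table is what hides that from the OS.

```python
class L2PTable:
    """Toy logical-to-physical map: logical block address -> physical page."""

    def __init__(self, physical_pages):
        self.map = {}                             # LBA -> physical page number
        self.free = list(range(physical_pages))   # pages not yet written
        self.stale = set()                        # pages holding superseded data

    def write(self, lba):
        # NAND cannot be overwritten in place, so each write takes a fresh page.
        new_page = self.free.pop(0)
        old_page = self.map.get(lba)
        if old_page is not None:
            self.stale.add(old_page)              # old copy awaits garbage collection
        self.map[lba] = new_page
        return new_page

    def read(self, lba):
        return self.map.get(lba)                  # None means the LBA was never written


table = L2PTable(physical_pages=8)
table.write(lba=100)   # first write of LBA 100
table.write(lba=100)   # rewrite: same LBA, different physical page
```

After the two writes, the host still asks for LBA 100, while the table points at the second physical page and marks the first one as stale.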

By moving the L2P tables to main system memory, HMB SSDs are able to get the cost benefits of DRAM-less SSDs while also retaining much of the performance of SSDs with onboard DRAM.
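A quick back-of-the-envelope calculation shows why the mapping table drives DRAM cost. The sketch below assumes a flat table with one 4-byte entry per 4 KiB page. Those numbers and the function name are our assumptions for illustration; real controllers may use different granularities or compressed layouts.

```python
def flat_l2p_table_bytes(capacity_bytes, page_bytes=4096, entry_bytes=4):
    """Size of a flat table with one entry per mapped page (assumed layout)."""
    entries = capacity_bytes // page_bytes
    return entries * entry_bytes


one_tib = 2**40
table_bytes = flat_l2p_table_bytes(one_tib)
print(table_bytes // 2**20, "MiB")   # prints "1024 MiB"
```

Under these assumptions, a 1 TiB drive needs about 1 GiB for a full flat table, which lines up with the commonly cited rule of thumb of roughly 1 GB of DRAM per 1 TB of NAND.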

This requires a few major items to work. First, the host system and OS need to support HMB as defined in the NVMe 1.2 spec. This is because the host has to allocate part of its main memory to the SSD for these tasks. Second, the SSD itself needs HMB-specific functions in its controller and firmware.

Examples of these functions are the HMB Activator and HMB Allocator. The HMB Activator’s most notable function is to handle the initialization of the HMB SSD. When the host system asks the SSD to identify itself, the HMB Activator flags that it is an HMB SSD by reporting the HMPRE (Host Memory Buffer Preferred Size) field. From there, the SSD and host system set up the HMB structures.

On the HMB Allocator side, the SSD needs to track and manage the memory that it has requested from the host system. In operation, the HMB Allocator does a lot of work. It has functions like allocating and releasing memory that sits across the PCIe bus in the host system’s main memory. If the SSD supports HMB Fast Write Buffer, then the controller’s HMB Allocator also needs to have the functionality to manage that.
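From the host's side, the identify step can be sketched as follows. In the NVMe Identify Controller data structure, HMPRE sits at bytes 275:272 and its companion HMMIN (minimum size) at bytes 279:276, both counted in 4 KiB units. The function names and the synthetic buffer below are ours, made up for illustration. A real host would get the 4096-byte structure from an Identify admin command and then enable the buffer with a Set Features command for the Host Memory Buffer feature.

```python
import struct

HMB_UNIT = 4096  # HMPRE and HMMIN are expressed in 4 KiB units


def parse_hmb_fields(identify: bytes):
    """Return (preferred_bytes, minimum_bytes) from Identify Controller data."""
    if len(identify) < 280:
        raise ValueError("buffer too short to contain HMPRE/HMMIN")
    # HMPRE: bytes 275:272, HMMIN: bytes 279:276, little-endian 32-bit values.
    hmpre, hmmin = struct.unpack_from("<II", identify, 272)
    return hmpre * HMB_UNIT, hmmin * HMB_UNIT


def supports_hmb(identify: bytes) -> bool:
    """A non-zero preferred size means the drive is asking for a host buffer."""
    preferred, _ = parse_hmb_fields(identify)
    return preferred > 0


# Synthetic example: a made-up drive asking for 64 MiB preferred, 8 MiB minimum.
fake_identify = bytearray(4096)
struct.pack_into("<II", fake_identify, 272, 16384, 2048)
preferred, minimum = parse_hmb_fields(bytes(fake_identify))
```

The host is free to grant anything between the minimum and preferred sizes, or nothing at all, which is why the drive has to keep working without the buffer.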
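The bookkeeping described above can be pictured with a toy allocator. This is only a sketch under our own assumptions: `HmbAllocator`, its fixed 4 KiB chunks, and the purpose tags are hypothetical and do not come from any vendor's firmware. It shows the basic allocate and release cycle over a region the host has granted.

```python
class HmbAllocator:
    """Toy fixed-size chunk allocator over a host-granted buffer."""

    def __init__(self, granted_bytes, chunk_bytes=4096):
        self.chunk_bytes = chunk_bytes
        self.free_chunks = list(range(granted_bytes // chunk_bytes))
        self.owners = {}                      # chunk index -> purpose tag

    def allocate(self, purpose):
        if not self.free_chunks:
            return None                       # no host memory left to hand out
        chunk = self.free_chunks.pop()
        self.owners[chunk] = purpose
        return chunk * self.chunk_bytes       # byte offset into the host buffer

    def release(self, offset):
        chunk = offset // self.chunk_bytes
        if chunk not in self.owners:
            raise KeyError("chunk is not allocated")
        del self.owners[chunk]
        self.free_chunks.append(chunk)


alloc = HmbAllocator(granted_bytes=8192)
l2p_offset = alloc.allocate("l2p")   # space for mapping-table entries
fwb_offset = alloc.allocate("fwb")   # space for a write buffer
exhausted = alloc.allocate("extra")  # third request exceeds the grant
```

The `None` return matters in practice: the controller cannot assume the host granted as much memory as it asked for, so it needs a fallback path when the buffer is full.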

HMB Fast Write Buffer

One feature of the HMB is that drives can include a Fast Write Buffer (FWB) as part of the HMB structure. The basic idea is that SSD manufacturers can take advantage of the main memory’s speed and use the main memory as a write buffer for the NAND device. This allows data to be written more efficiently, aligned to the NAND’s cells, as it is flushed from the FWB to the NAND.

The drawback is data safety. In most current generation systems, the main memory is volatile DRAM without power-loss protection (PLP). In data center SSDs, PLP circuitry is added into the SSD so the SSD’s volatile DRAM can be flushed to non-volatile NAND in the event of power loss.

In theory, technologies like NVDIMMs, and more exotic technology like the now defunct Optane DIMMs, could provide this type of service without data loss during a power event. Those technologies often cost more than adding PLP to NAND SSDs directly. At the same time, hyper-scalers in the data center have looked at conceptually similar approaches to manage vast NAND arrays.
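A simplified way to picture the write buffer is as a staging area that collects small host writes until a full NAND page is ready. The sketch below is our own illustration: the `FastWriteBuffer` class and the 16 KiB page size are assumptions, and a real FWB would live in host memory reached over PCIe rather than in a Python `bytearray`.

```python
class FastWriteBuffer:
    """Toy write buffer that flushes only whole NAND pages."""

    def __init__(self, nand_page_bytes=16384):
        self.nand_page_bytes = nand_page_bytes
        self.pending = bytearray()    # data still sitting in the buffer
        self.flushed_pages = []       # stands in for programmed NAND pages

    def write(self, data: bytes):
        self.pending += data
        # Flush every complete page so each NAND program is page-aligned.
        while len(self.pending) >= self.nand_page_bytes:
            page = bytes(self.pending[:self.nand_page_bytes])
            del self.pending[:self.nand_page_bytes]
            self.flushed_pages.append(page)


fwb = FastWriteBuffer(nand_page_bytes=16384)
for _ in range(5):
    fwb.write(b"\xAB" * 4096)   # five 4 KiB host writes
```

Here the first four writes are combined into one page-sized flush, and the fifth stays in `pending`. Anything left in `pending` exists only in the buffer until the next flush.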

At this point, we have discussed features, but the next question is why HMB exists in the first place.

Why HMB NVMe SSDs Exist

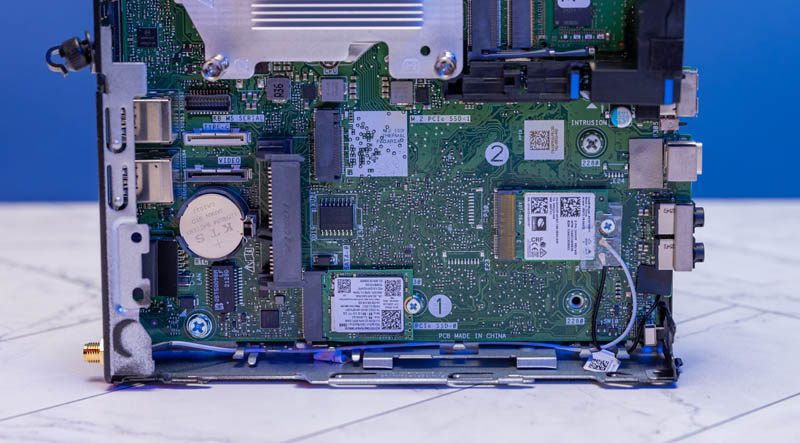

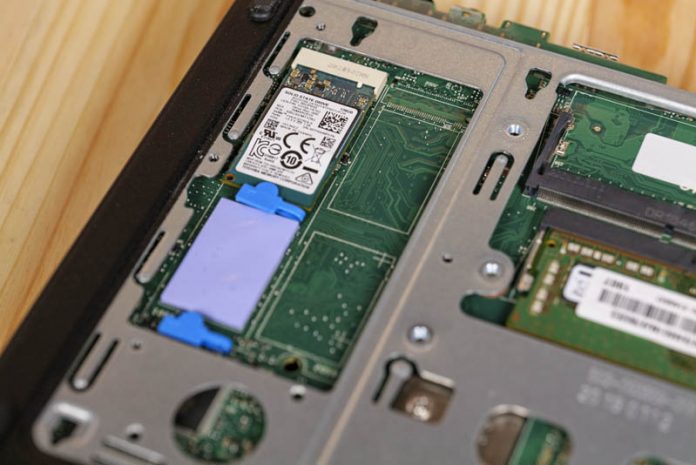

The primary reason we have HMB SSDs is cost, but there are other implications as well. Here is an example of an HMB NVMe SSD in a Project TinyMiniMicro node. In the 1L corporate desktop PC segment, having a lower-cost, lower-power SSD while still being able to market an NVMe SSD is important.

Something else is illustrated by the example above. One can see that the SSD is an M.2 2280 or 80mm model. At the same time, it is only 80mm long because extra PCB is used to reach an existing mounting point. Companies like Dell will use more compact SSDs in their systems even if the system can fit larger drives.

One can imagine how form factors can shrink with an assumption of smaller SSD PCBs.

Final Words

While many in the industry like to say that HMB SSDs are as fast as their DRAM-equipped alternatives, they are not. Instead, these are usually considered a value technology because they remove the DRAM package from an SSD and can also reduce the physical footprint of a drive.

Still, most major manufacturers have HMB SSDs in the market, so this is a technology that we expect to see for some time. It is also one that will become more interesting as servers enter the CXL era and the relatively rigid structures of where memory sits in a system become flexible.

I’m not sure I understand the point of an HMB. The host system already has a disk cache in memory, so writing to files is seen as fast by applications because the writes just go to system memory already. Then in the background the host OS sends that data from system memory to the SSD which then writes it to flash.

It sounds like with this technology, the SSD allocates a block of memory on the host, so when the host sends data from system memory to the SSD, the SSD puts it back into system memory but in its own area, and then later writes it to the flash.

This seems like a solution that would only benefit very old operating systems that don’t have a disk cache or don’t use it very well. On a modern OS I would’ve thought dispensing with the DRAM completely and just having a slow DRAM-less SSD would be fine, as the host OS would still store all writes in memory and send them to the SSD at a lower rate in the background.

Maybe you just have to configure the OS to lie about data safety and claim to the application that the data has been written to disk when it’s still in the cache, which appears to be what HMB does?

Or am I missing something?

Just to add, it looks like it also uses memory to store a sector mapping table. But surely it would be better to just let the host manage that directly? There are already filesystems like UBI that deal with wear-levelling on NAND chips so you’d think the host OS could easily manage that as well, if it was just given an interface to read from and write to the underlying flash directly.

It would be possible for the host to manage all this once the SSD stops pretending to be a hard drive with 512B or 4KiB writable sectors. The problem is also similar to what is encountered with SMR disks: great pains are taken to hide the underlying storage infrastructure for the sake of backwards compatibility. Host-managed flash and SMR HDD are still quite niche technologies, although I believe this could work quite well with, for example, object storage or the like. It’s not unlikely the major cloud providers are already doing it this way.

However this has no place in a low priced laptop or desktop that has to support Windows. It would be interesting though if it were possible to just switch the drive to host-managed mode, that could drive some real innovation.

@malvineus: looks like you didn’t read the article carefully. It stated that “the most important function that DRAM performed in SSDs for generations was as the L2P (logical to physical) table”, not as a write buffer.

Wasn’t NVMe Zoned Namespace supposed to take care of a lot of this?

Or was I into the wrong brownies again?

From a security standpoint I wonder if there is a risk in having a caching table stored in a shared memory space, and how exploitable or corruptible that could be compared to an internal SSD DRAM.