Skylake's Hidden Gem: Integrated Omni-Path Fabric

One of the biggest features is going to be Skylake-F: Skylake CPUs with integrated fabric. Although it will likely see limited sales, the fabric's potential impact is underestimated.

Skylake-F is the codename for the Skylake package with integrated Omni-Path fabric, much like the Intel Xeon Phi x200 generation has. From a price perspective, Intel charges a premium of under $200 for a dual-port 100Gbps Omni-Path Xeon Phi x200 part. For comparison, a Mellanox EDR InfiniBand card costs around $1,200.

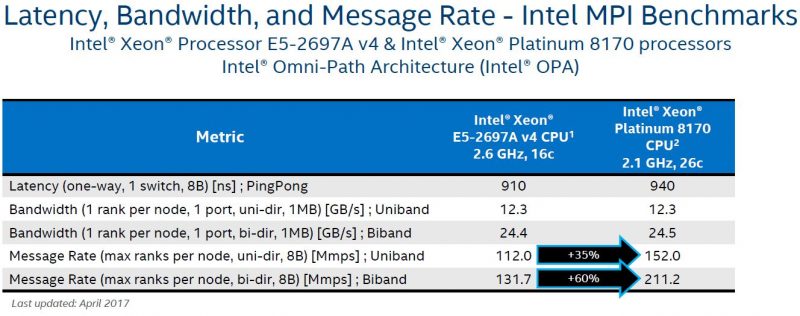

With the new mesh and more memory bandwidth, Omni-Path sees a significant speedup over previous-generation CPUs.

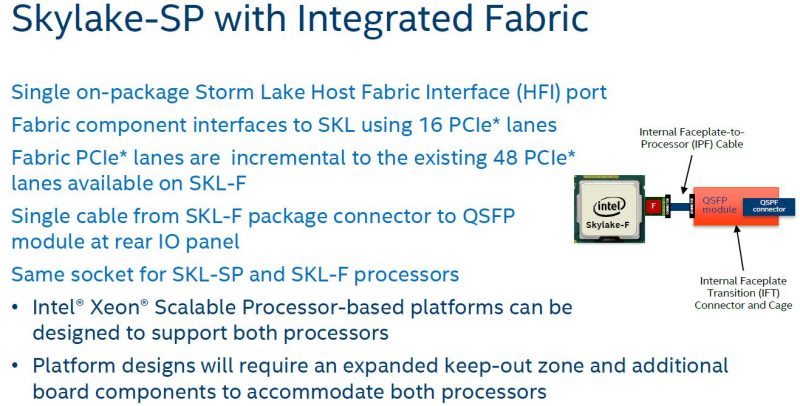

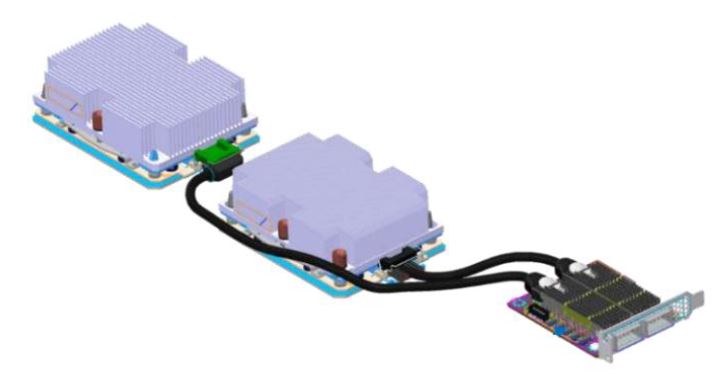

The actual implementation of Skylake-F looks something like this:

Each package has a protrusion that accepts a cable running to a QSFP28 cage on the backplane. That means each CPU has its own 100Gbps RDMA network connection. In a four-socket system, that is 400Gbps of network capacity without an add-in card or any of the standard PCIe lanes.

If you adopt Omni-Path, you essentially get ultra-low-cost, RDMA-enabled 100Gbps networking without giving up a PCIe 3.0 x16 slot. As a result, your effective PCIe lane count versus competitive products (e.g. AMD EPYC) jumps by 16 lanes per CPU. The downside is that you have to use Omni-Path: unlike Mellanox VPI solutions, the integrated ports cannot be switched into Ethernet mode.
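To make the per-socket arithmetic concrete, here is a minimal back-of-the-envelope sketch. It assumes only the figures stated above: one integrated 100Gbps port per CPU package, and each port replacing an add-in card that would otherwise occupy a PCIe 3.0 x16 slot. The names `fabric_summary`, `PORT_GBPS_PER_CPU`, and `LANES_PER_ADDIN_NIC` are hypothetical and exist only for illustration.

```python
# Hypothetical back-of-the-envelope model of what integrated Omni-Path
# provides in a multi-socket Skylake-F server. Assumptions (from the text):
# one 100 Gbps port per CPU package, each standing in for an add-in NIC
# that would otherwise need a PCIe 3.0 x16 slot.

PORT_GBPS_PER_CPU = 100    # one integrated OPA port per package
LANES_PER_ADDIN_NIC = 16   # PCIe 3.0 x16 slot a 100 Gbps add-in card would use


def fabric_summary(sockets: int) -> dict:
    """Aggregate fabric bandwidth and PCIe lanes left free by on-package OPA."""
    if sockets < 1:
        raise ValueError("sockets must be >= 1")
    return {
        "aggregate_gbps": sockets * PORT_GBPS_PER_CPU,
        "pcie_lanes_saved": sockets * LANES_PER_ADDIN_NIC,
    }


# Print the totals for common socket counts.
for n in (1, 2, 4):
    s = fabric_summary(n)
    print(f"{n}-socket: {s['aggregate_gbps']} Gbps fabric, "
          f"{s['pcie_lanes_saved']} PCIe lanes saved")
```

For the four-socket case this reproduces the 400Gbps figure and shows 64 PCIe lanes that stay available for storage or accelerators.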

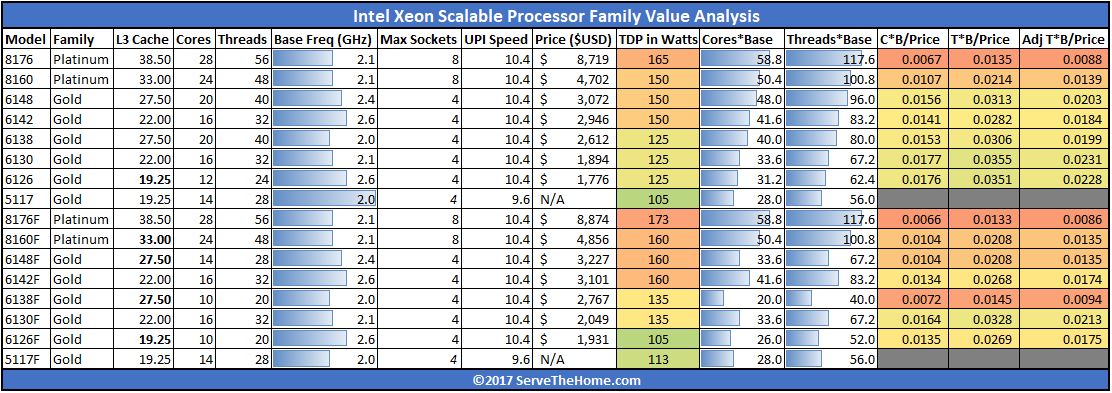

Just how low cost? SKUs with integrated 100Gbps OPA cost about $155 more than the equivalent SKUs without it.
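A rough per-system comparison helps put the $155 premium next to the roughly $1,200 Mellanox EDR InfiniBand card mentioned earlier. This sketch assumes one add-in card per socket, to mirror Skylake-F's one-port-per-CPU layout; that pairing is an assumption, not something stated above. It also ignores cables and switch ports. The function `fabric_cost` is hypothetical.

```python
# Rough per-system cost comparison using the article's figures.
# Assumption (not from the article): one add-in EDR card per socket,
# matching Skylake-F's one integrated port per CPU. Cables and switch
# ports are ignored.

OPA_PREMIUM_PER_CPU = 155   # USD premium for the integrated-OPA SKU
EDR_CARD_PRICE = 1200       # approximate USD price of a Mellanox EDR card


def fabric_cost(sockets: int) -> tuple[int, int]:
    """Return (integrated OPA premium, add-in EDR card cost) for one port per socket."""
    return sockets * OPA_PREMIUM_PER_CPU, sockets * EDR_CARD_PRICE


for n in (2, 4):
    opa, edr = fabric_cost(n)
    print(f"{n} sockets: OPA +${opa}, EDR cards ${edr}, difference ${edr - opa}")
```

Under these assumptions, a four-socket system pays about $620 in total for integrated fabric versus about $4,800 for add-in cards.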

If Intel ever needs to change the game this generation against EPYC in the scale-out and hyper-converged storage space, offering an inexpensive integrated 100GbE fabric option instead of OPA would be a complete win.

In the meantime, the bigger implication is that Intel is killing off the advantage Xeon Phi has over mainstream Xeons. Combined with the addition of AVX-512, we are seeing Intel move HPC back to its mainstream CPUs.

Final Words

Overall, Intel needs the new mesh interconnect architecture to scale CPU core counts and add more I/O without ever-increasing ring latencies. Paths across the mesh generally take fewer hops than they did on the old rings. If Intel gets serious about pushing fabric on package, it will provide a huge value proposition for users.
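Why a mesh scales better than a ring can be illustrated with an idealized hop-count model. This sketch compares N stops on a single bidirectional ring against the same N stops arranged as a rows x cols 2D mesh with Manhattan-distance routing. Real Broadwell ring designs and the actual Skylake-SP mesh layout differ from these textbook topologies, so treat the numbers as a trend, not as Intel's figures. The functions `ring_avg_hops` and `mesh_avg_hops` are hypothetical.

```python
# Idealized comparison of average hop counts between distinct stops:
# one bidirectional ring of N stops versus a rows x cols 2D mesh
# (hop count = Manhattan distance). Real on-die interconnects differ.

from itertools import product


def ring_avg_hops(n: int) -> float:
    """Mean shortest-path hops from one stop to every other stop on a bidirectional ring."""
    # By symmetry every source sees the same distances: min(d, n - d).
    total = sum(min(d, n - d) for d in range(1, n))
    return total / (n - 1)


def mesh_avg_hops(rows: int, cols: int) -> float:
    """Mean Manhattan distance over all ordered pairs of distinct mesh stops."""
    nodes = list(product(range(rows), range(cols)))
    # Self-pairs contribute 0, so summing over all pairs equals summing over distinct ones.
    total = sum(abs(r1 - r2) + abs(c1 - c2)
                for (r1, c1), (r2, c2) in product(nodes, repeat=2))
    return total / (len(nodes) * (len(nodes) - 1))


for rows, cols in ((6, 6), (8, 8)):
    n = rows * cols
    print(f"{n} stops: ring {ring_avg_hops(n):.2f} hops, "
          f"{rows}x{cols} mesh {mesh_avg_hops(rows, cols):.2f} hops")
```

In this model the ring's average distance grows roughly linearly with the number of stops, while the mesh's grows roughly with its square root, which is the scaling problem the mesh is meant to address.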

Thanks for this series of articles on the two camps’ newest server processors.

Did you see Kevin Houston’s article? http://bladesmadesimple.com/2017/07/what-you-need-to-know-about-intel-xeon-sp-cpus/

He mentions the lack of Apache Pass (Optane) and observes that the SKUs with only 2 UPI links are essentially a ring topology; you need to go top shelf for a mesh.

Those cables on the Skylake-F look innovative. If you accept a bit of latency, you can wire up a rack of 8-ways into a super server: 42U x 8 sockets x 28 cores = 9,408 cores per rack (or only half that many if you need 2U per 8-way).

Wish AMD had made a separate SKU for 4-way, but I guess they’re counting on the core doubling that next year’s switch to 7nm will bring.

Thanks again, Patrick, for all these articles.