The Supermicro SuperBlade system is flexible as it allows you to mix and match blades. We had access to six blades including the newest Broadwell-EP blades and previous generation Haswell-EP blades. We were able to verify that the blades worked in both blade chassis we had and were able to seamlessly mix new and older blades. This is a use case where one would purchase blades from one generation then add additional blades when newer generations came out. The only tangible difference we saw is that the Broadwell-EP blades had more cores and faster RAM. Otherwise, the blades were very similar.

Supermicro GPU Blade Overview

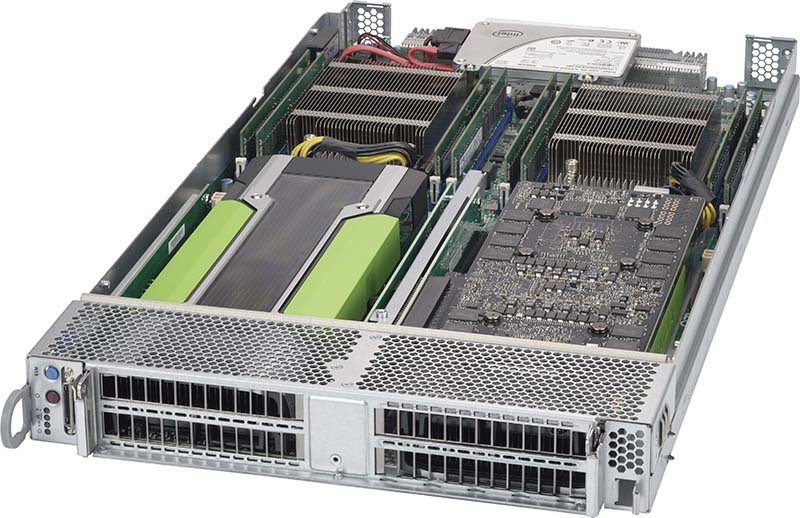

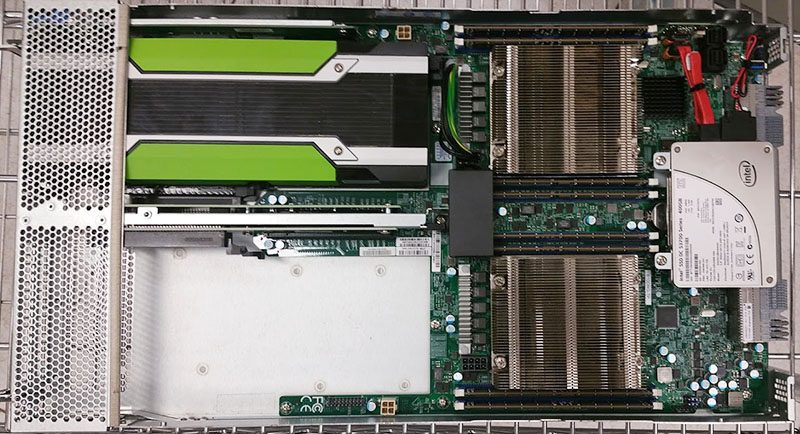

We had access to two chassis and a total of six GPU blades. Blades generally have standardized I/O connectors that mate to vendor-specific backplanes. Each of these connectors provides functionality like power and networking connectivity. Supermicro makes several different types of blades; we had the SBI-7128RG-X units to test. These blades support two Intel Xeon E5-2600 V3 or E5-2600 V4 processors, four DIMMs per processor (up to 1TB total), and two GPUs. Base storage can be two SATA DOMs or a SATA DOM and a 2.5″ SSD. Our units were configured with one SATA DOM and one 2.5″ Intel DC S3700 400GB drive per node.

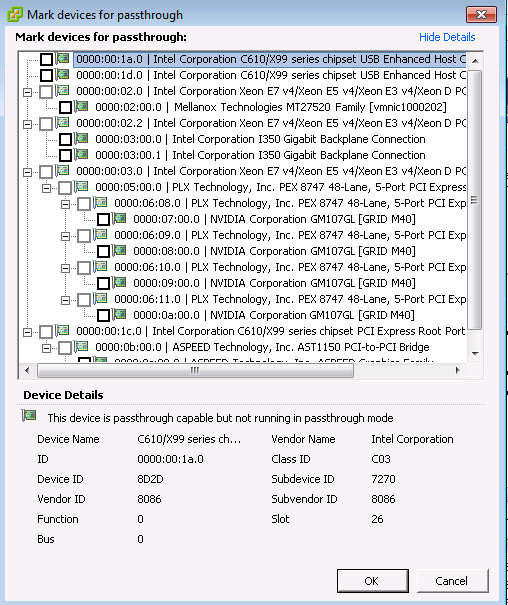

The GPU connectivity in our blades was for two dual-slot PCIe 3.0 x16 devices. We tested the Supermicro SBI-7128RG-X blades in both single and dual GPU configurations. Each blade had a SATA DOM and a 2.5″ Intel DC S3700 400GB SSD for storage, but also had two SFF-8643 ports available to connect eight other SATA devices in other blade configurations.

Supermicro provides a number of cables with the blade chassis to provide power to the GPU cards. These options should cover just about every server-oriented card that fits within the power envelope.

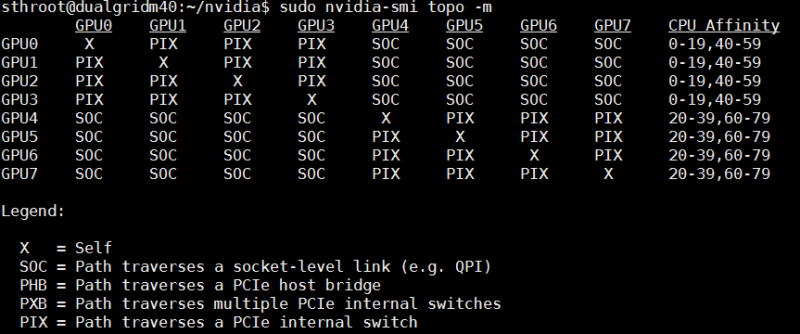

In terms of the internal connectivity, each double-width slot is tied to a specific CPU. That means you can peg an application to a CPU and GPU pair and avoid the QPI bus.
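As a rough illustration of pegging an application to one CPU and its attached GPU, here is a minimal Python sketch. It assumes a Linux host, that cores are numbered contiguously per socket, and that GPU index N sits behind socket N. None of these assumptions come from our test notes, so confirm the real topology first (for example with `lscpu` and `nvidia-smi topo -m`). The helper names `pin_plan` and `apply_pin_plan` are hypothetical.

```python
import os


def pin_plan(socket, cores_per_socket):
    """Return (cpu_ids, env) for running on one socket and its local GPU.

    Assumptions (verify on your system): cores are numbered contiguously
    per socket, and the GPU index equals the socket index.
    """
    first = socket * cores_per_socket
    cpu_ids = set(range(first, first + cores_per_socket))
    env = {"CUDA_VISIBLE_DEVICES": str(socket)}
    return cpu_ids, env


def apply_pin_plan(cpu_ids, env):
    """Pin the current process to cpu_ids and expose only the paired GPU.

    Call this before the application initializes CUDA, otherwise the
    CUDA_VISIBLE_DEVICES setting is read too late to take effect.
    """
    os.environ.update(env)
    os.sched_setaffinity(0, cpu_ids)  # Linux-only call


# Plan for the second socket of a dual 20-core system (cores 20..39, GPU 1).
cpus, env = pin_plan(socket=1, cores_per_socket=20)
print(min(cpus), max(cpus), env)
```

Keeping the plan separate from the call that applies it makes it easy to inspect the core set before changing the affinity of a running job.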

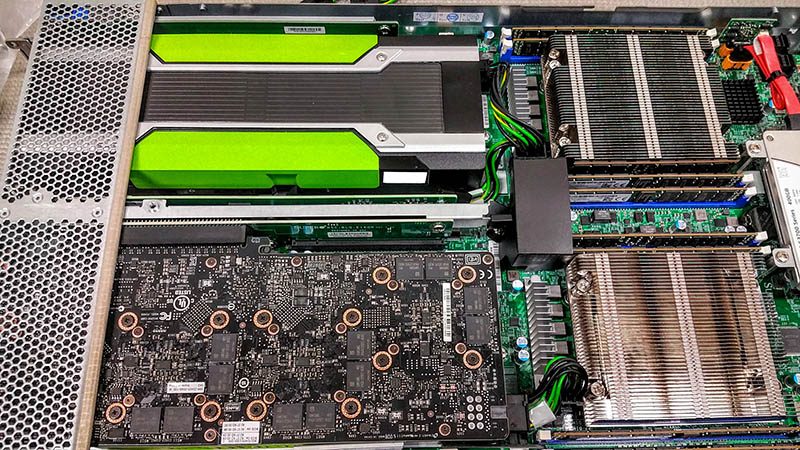

As you can see from above, we were even able to configure the blades to accept two quad GPU cards for 8 GPUs in total. That means that in a 7U 10-node chassis one can have 20 CPUs (two per node) and 80 GPUs, which is extremely dense.

We did test a number of GPUs and with proper BIOS settings we were able to use them. Here is a look with a few GRID and Tesla cards installed in different nodes.

The Supermicro SBI-7128RG-X nodes worked very well in our testing.

Supermicro GPU Blade Performance

We used these Supermicro GPU blades for our Intel E5-2698 V4 benchmark piece during the Broadwell-EP launch week. We suggest reviewing that article for performance figures, and links to additional benchmark runs. We are going to pick a small sample set to discuss in the context of the Supermicro GPU Blade platform and our Supermicro SBI-7128RG-X nodes.

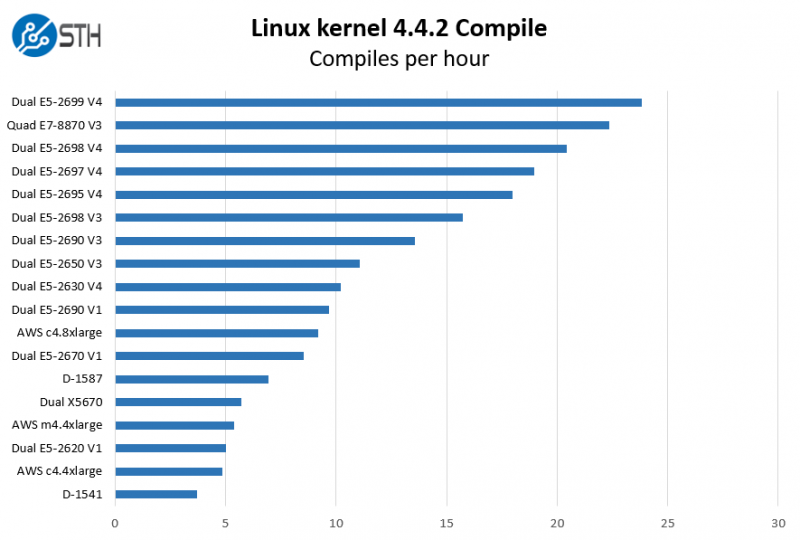

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take a standard configuration file and the Linux 4.4.2 kernel from kernel.org, then run make with every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read.
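To make the compiles-per-hour metric concrete, here is a minimal sketch of how a single timed build can be converted. This is not our actual benchmark script; the function names and the `source_dir` argument are hypothetical, and it assumes the kernel tree already contains the configuration file.

```python
import os
import subprocess
import time


def compiles_per_hour(elapsed_seconds):
    """Convert one build's wall-clock time into compiles per hour."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return 3600.0 / elapsed_seconds


def time_kernel_build(source_dir, jobs=None):
    """Time `make -jN` in source_dir using every hardware thread by default."""
    jobs = jobs or os.cpu_count() or 1
    start = time.perf_counter()
    subprocess.run(["make", f"-j{jobs}"], cwd=source_dir, check=True)
    return time.perf_counter() - start


# Example: a 90-second build works out to 40 compiles per hour.
print(compiles_per_hour(90))  # 40.0
```

Expressing the result as a rate means higher is better, which reads more naturally on a chart than raw build times.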

In this graph you can see the relative performance of the Supermicro SBI-7128RG-X nodes with the newest Broadwell-EP generation dual E5-2698 V4 processors as well as previous generation E5-2690 V3 parts. Perhaps one of the more intriguing comparisons is between the dual E5-2670 V1 system and the dual Intel Xeon E5-2698 V4 Supermicro SBI-7128RG-X node. The Xeon E5-2670 V1 is a Sandy Bridge generation part that was extremely popular in that generation. It is also of a vintage that will start being replaced by Broadwell-EP (V4) systems. In terms of raw software compile performance, you can essentially do the work of three E5-2670 V1 systems with a single E5-2698 V4 blade. Even using these GPU compute blades, it means that you can consolidate 30 Sandy Bridge generation servers down to one blade chassis. Likewise, a 42U rack of these blade servers can replace almost five Sandy Bridge generation compute and networking racks. Supermicro does make SuperBlades that are better suited to this consolidation scenario and can more than double this consolidation ratio.
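The consolidation arithmetic above can be sketched in a few lines of Python. The function name is hypothetical, and the per-rack figure assumes a 42U rack filled entirely with 7U chassis, with no space reserved for switches or power distribution.

```python
def consolidation(ratio_per_blade, blades_per_chassis=10,
                  chassis_height_u=7, rack_height_u=42):
    """Estimate how many older servers a chassis and a full rack can replace.

    Assumption: the rack holds nothing but blade chassis.
    """
    chassis_per_rack = rack_height_u // chassis_height_u
    per_chassis = ratio_per_blade * blades_per_chassis
    return {
        "per_chassis": per_chassis,
        "chassis_per_rack": chassis_per_rack,
        "per_rack": per_chassis * chassis_per_rack,
    }


# 3:1 kernel compile ratio against dual E5-2670 V1 systems.
print(consolidation(3))
# {'per_chassis': 30, 'chassis_per_rack': 6, 'per_rack': 180}
```

How many racks those older servers originally occupied depends on their form factor and how much rack space went to networking, so that step is left out of the sketch.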

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads.

Moving to ray tracing, we can see a similar consolidation story. These chips can provide a 3x improvement in CPU ray tracing applications over Quad Xeon E5-4620 V1 systems.

NAMD Performance

NAMD is a molecular modeling benchmark developed by the Theoretical and Computational Biophysics Group in the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign. More information on the benchmark can be found here.

We added another result in our NAMD benchmarks for the dual Intel Xeon L5520 generation systems. If you have older generation systems such as these, a Supermicro SBI-7128RG-X with dual Intel Xeon E5-2698 V4 chips can bring your consolidation ratio to around 8 older generation servers to 1 blade. Each 7U SuperBlade 10-node chassis can then consolidate about two full racks of Xeon L5500 generation machines.

GPU Performance Note

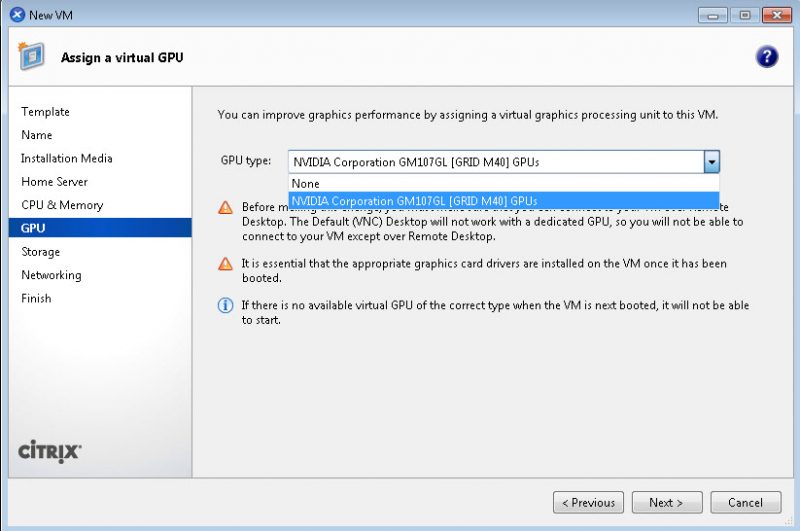

The Supermicro SuperBlade team requested that we publish this review before newer NVIDIA GPUs arrived. We will update our readers with new CUDA/GPU compute benchmarks as they become available. We have been working with both GRID cards under VMware, Microsoft, and Citrix for VDI solutions.

Servicing the GPU SuperBlade

We wanted to test serviceability of the units. There are a number of reasons that one might want to service a unit, including adding, upgrading or replacing a few common items such as CPUs, DDR4 RDIMMs or GPUs.

Methodology

We have done a few serviceability tests in the past. With the SuperBlade systems being larger and more complex than individual servers, we wanted to see how long it would take us to service common parts. To become familiar with the SuperBlade GPU node, we tore one apart in under 15 minutes. Armed with this knowledge, we then performed one test upgrade, installation or replacement as a trial run for each task. We also used a manual Phillips head screwdriver, not an electric version. Supermicro now offers its own on-site support options. There are also numerous Supermicro VARs/partners who service units. While we are not as fast as a regular technician who does this every day, we do build several servers a week and wanted to get some idea of the time needed to service common items.

Service test results

Here are descriptions of the four service tests we ran and timings for each of them.

- Remove a blade and install into a different slot – We pulled a blade, removing it from the enclosure. We removed a blade “blank” that helps with airflow. We installed the pulled blade back into the chassis and installed the blade blank into the remaining space.

  - Total time: 1 minute 3 seconds

- Swap CPUs – We swapped two Intel Xeon E5-2698 V4 chips for two Intel Xeon E5-2630 V4 chips. This required removing the top lid of the blade and then removing each heatsink and CPU. We replaced both, then re-installed the lid on the blade.

  - Total time: 3 minutes 9 seconds

- Install GPUs – On all of our blades, we added two double-width passively cooled GPUs. Each NVIDIA GRID GPU we used required a single 8-pin GPU power connection. We knew which cable to connect, and there were a total of about 13 screws we had to undo in order to easily access both slots. We timed adding one GPU and two GPUs, including putting everything back together.

  - Total time for installing a single GPU: 7 minutes 21 seconds

  - Total time for installing two GPUs: 8 minutes 49 seconds

  - These times would likely go down with more experience and an electric screwdriver, but it was still a very fast process.

- Memory upgrade – We swapped out memory from 8x 16GB DDR4-2400 RDIMMs to 8x 32GB DDR4-2400 RDIMMs in one of our nodes. This is extremely easy to do. There is one screw holding the blade cover on, so the task is to remove the screw, slide off the lid, uninstall the old DIMMs, install the new DIMMs and replace the cover and screw.

  - Total time: 1 minute 58 seconds

All of these results are excellent. While the GPUs did require a bit of extra effort to install, they were held in place extremely securely.
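One detail worth pulling out of the timings is how little the second GPU adds. A small Python sketch of that arithmetic, using only the times listed above (the helper names are hypothetical):

```python
def to_seconds(minutes, seconds):
    """Convert a minutes/seconds timing into total seconds."""
    return minutes * 60 + seconds


def fmt(total_seconds):
    """Format seconds as 'Xm YYs' for readability."""
    return f"{total_seconds // 60}m {total_seconds % 60:02d}s"


one_gpu = to_seconds(7, 21)   # single GPU install
two_gpus = to_seconds(8, 49)  # dual GPU install

# Extra time attributable to the second card.
second_gpu_extra = two_gpus - one_gpu
print(fmt(second_gpu_extra))  # 1m 28s
```

In other words, most of the GPU install time goes to opening up and reassembling the blade rather than to seating each card.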

Supermicro GPU Blade Management

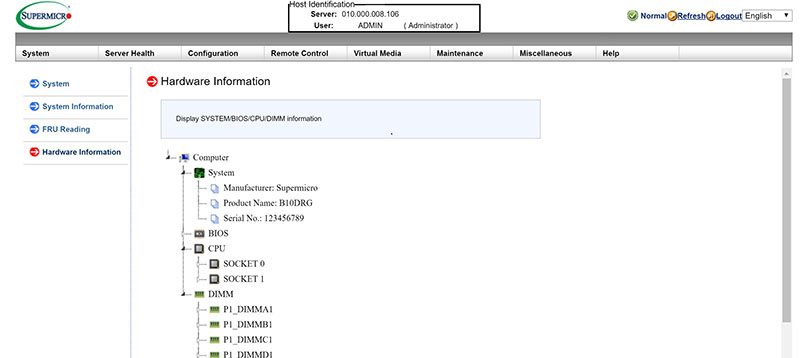

Beyond the chassis-level management features we focused on in Part 2 of our SuperBlade review, Supermicro SBI-7128RG-X nodes can be managed individually via a web GUI, IPMIView or other tools.

For a user logging into the individual blade management level, the experience will be nearly identical and familiar to those who have managed traditional form factor Supermicro servers.

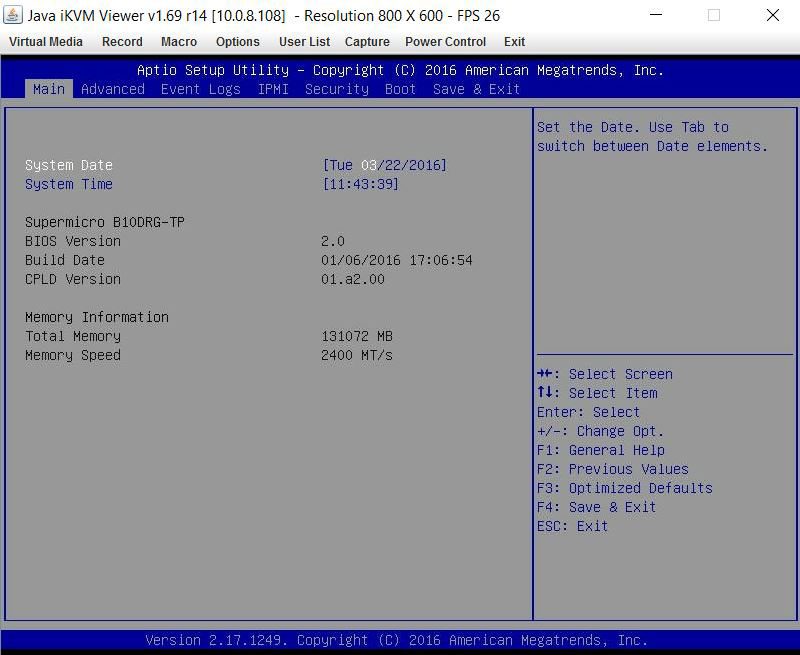

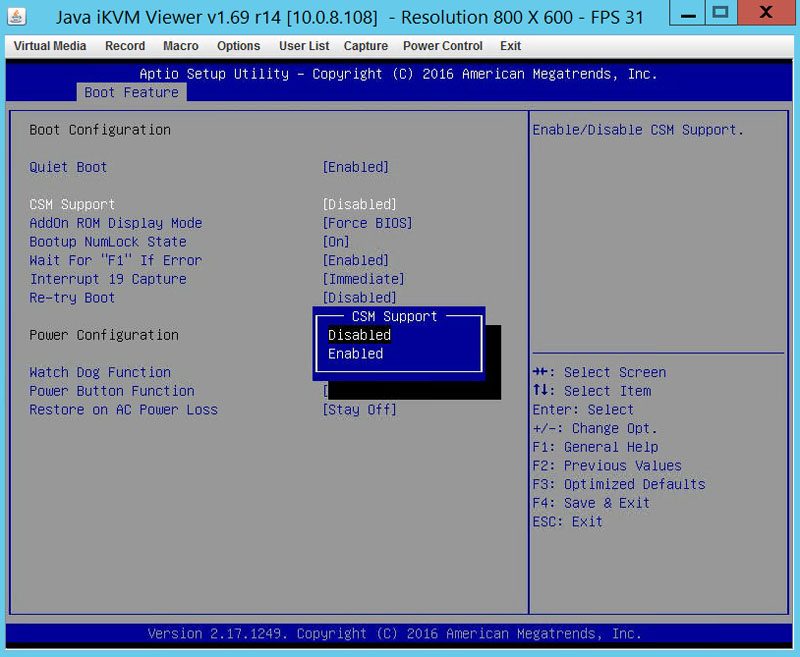

With GPU compute oriented options, this is even more important as BIOS settings do need to be adjusted by application and by GPU configuration. This is when Supermicro’s out of band iKVM feature is extremely useful.

Supermicro does include KVM-over-IP functionality with the motherboard. We have been testing servers from HPE, Dell, Lenovo and Intel that all required an additional add-in key to get this functionality. It is an absolute time and money saver in the data center and other vendors should follow Supermicro’s lead in this space.
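For scripted access to an individual blade's BMC, a generic IPMI client is one possible "other tool". The sketch below wraps the open source `ipmitool` CLI from Python. We are assuming here that the BMC accepts IPMI 2.0 (`lanplus`) connections; the host address and user name are placeholders, and the function names are hypothetical. This was not part of our testing.

```python
import os
import subprocess


def ipmitool_cmd(host, user, *args):
    """Build an ipmitool command line for a remote BMC over lanplus.

    -E tells ipmitool to read the password from the IPMI_PASSWORD
    environment variable, so it never appears in the argument list.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *args]


def chassis_status(host, user, password):
    """Run `chassis status` against one BMC and return the raw text output."""
    env = dict(os.environ, IPMI_PASSWORD=password)
    result = subprocess.run(
        ipmitool_cmd(host, user, "chassis", "status"),
        env=env, capture_output=True, text=True, check=True,
    )
    return result.stdout


# Placeholder address and user name; nothing is executed here.
print(ipmitool_cmd("192.0.2.10", "ADMIN", "chassis", "status"))
```

Building the argument list separately from running it makes the command easy to log or review before pointing it at production nodes.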

GPU blade options that we did not get to test

There were a number of configurations that we were unable to test but were briefed on. We did express interest in testing these options in the future and will see if we can. The GPU node is very flexible. Our test nodes each had 2x PCIe 3.0 x16 slots. One option Supermicro supports is the ability to change the PCIe riser and get 2x PCIe 3.0 x8 slots per CPU, for a total of 4x PCIe 3.0 x8 slots for four single-width cards per blade. Further, we also saw options to replace one or both of the GPU areas with two 2.5″ HDD/SSD holders, which is useful if you need more non-hot-swap drives per system.
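To put the two riser options in perspective, the following Python sketch compares total lanes and theoretical per-slot bandwidth. It uses the standard PCIe 3.0 figures of 8 GT/s per lane with 128b/130b encoding; real-world throughput will be lower, and the function name is hypothetical.

```python
def pcie3_gbytes_per_s(lanes):
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s for a link width."""
    gt_per_s = 8.0            # PCIe 3.0 signalling rate per lane
    encoding = 128.0 / 130.0  # 128b/130b line encoding overhead
    return lanes * gt_per_s * encoding / 8.0  # bits to bytes


for name, slots, lanes in [("2x x16 riser", 2, 16), ("4x x8 riser", 4, 8)]:
    print(name,
          "| total lanes:", slots * lanes,
          "| per slot GB/s:", round(pcie3_gbytes_per_s(lanes), 2))
```

Both layouts use the same 32 lanes per blade; the x8 option trades per-slot bandwidth for twice as many single-width cards.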

Final Words

Having used six nodes across two chassis in the past few weeks, we have been very impressed by the GPU blades. CPU and GPU performance is identical between the blade and traditional form factors. The ease of management in the blade form factor, along with the density, makes the GPU blades a winning platform, and serviceability was excellent as well. For those keeping track, these platforms can offer 20 compute cards per 7U, which is denser than the large 8-card 4U systems. For those that use GPUs in HPC oriented applications, these GPU blades work well. We are also working on turning these nodes into VDI nodes, which will be the subject of a future piece.
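The density comparison is easy to check by normalizing both platforms to cards per rack unit. A minimal Python sketch using only the figures quoted above (the function name is hypothetical):

```python
def cards_per_u(cards, height_u):
    """Compute cards per rack unit for a given enclosure."""
    return cards / height_u


blade_chassis = cards_per_u(20, 7)  # 10 blades x 2 cards in 7U
large_server = cards_per_u(8, 4)    # 8 cards in a 4U system

print(round(blade_chassis, 2), round(large_server, 2))  # 2.86 2.0
```

Put another way, 7U of 8-card 4U systems would hold 14 cards, against 20 in the blade chassis.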

To see other parts of the review here is the table of contents:

- Part 1: Supermicro SuperBlade System Review: Overview and Supermicro SBE-710Q-R90 Chassis

- Part 2: Supermicro SuperBlade System Review: Management

- Part 3: Supermicro SuperBlade System Review: 10GbE Networking

- Part 4: Supermicro SuperBlade System Review: The GPU SuperBlade

Check out the other parts of this review to explore more aspects of the system.