When we were first contacted about testing a Supermicro SuperBlade GPU system, we were excited but knew we needed to start planning immediately. The Supermicro SuperBlade GPU system is much larger than the average 1-4U server we review. In fact, we understand that several other publications were unable to review these units due to the power requirements. Luckily, the racks in our STH Sunnyvale data center lab each have 208V / 30A circuits, so we were able to install these without issue. This is going to be a much longer piece than the average STH review because this is a much more complex system than the average server. Adding to that complexity, we had access to not one, but two blade chassis that Supermicro sent over. We are working on expanding coverage of the blade platforms to try different networking options and different blades, such as NVMe storage blades.

Supermicro SuperBlade SBM-XEM-X10SM 10GbE Networking

We had access to a 10GbE switch module, the SBM-XEM-X10SM. The unit can be used in both the 10 blade and 14 blade chassis. In both of our 10 blade chassis, we essentially had one 10GbE port to each server and 10x 10GbE SFP+ ports that could be used for many other purposes. We were able to set up link aggregation to aggregate four ports to our uplink switches (the switch supports up to 8 members per group). We were able to use the chassis switch to connect chassis together directly, without requiring a top of rack switch. We even used the switch to connect five storage nodes directly to the chassis to serve as a Ceph storage cluster. Suffice it to say, we found the switch to be extremely flexible, as we could use the chassis switch to provide networking for an entire rack.
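To put those uplink numbers in perspective, here is a back-of-the-envelope sketch of the oversubscription ratio for a link aggregation group. It assumes a 10 blade chassis with one 10GbE port per blade and a worst case where every blade sends traffic off-chassis at line rate. The function name is ours, purely for illustration, and the ratio is a planning figure rather than something we measured.

```python
def oversubscription(blades: int, blade_gbps: float,
                     uplinks: int, uplink_gbps: float) -> float:
    """Ratio of total blade-facing bandwidth to total uplink bandwidth."""
    if uplinks <= 0:
        raise ValueError("need at least one uplink port")
    return (blades * blade_gbps) / (uplinks * uplink_gbps)


# Assumption: 10 blades, one 10GbE port each, 10GbE SFP+ uplinks.
four_member = oversubscription(10, 10, 4, 10)   # the four-port group we set up
eight_member = oversubscription(10, 10, 8, 10)  # the largest group the switch supports

print(f"4-member LAG: {four_member:.2f}:1")
print(f"8-member LAG: {eight_member:.2f}:1")
```

Keep in mind that link aggregation generally balances traffic per flow, so a single stream would typically still be limited to one 10GbE member link.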

We are working with Supermicro to add a second switch to this chassis. The SuperBlade platform can support up to two high speed switches. That will be the topic of another piece in the future.

The platform also supports up to two 1GbE switches, which can be used for applications such as PXE boot and management interfaces. We did not have these 1GbE switches available to test; however, we hope to get one or more in our chassis.

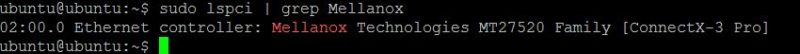

In terms of blade networking, our units came with Mellanox ConnectX-3 Pro networking. The onboard Mellanox ConnectX-3 Pro is a high-end network controller that can support both InfiniBand and Ethernet. That is an extremely flexible option, and Supermicro has versions of the SuperBlade that can handle 56Gbps FDR InfiniBand networking.

HPC customers who require low-latency RDMA over InfiniBand have that option, although we did not get to test it. Our setup was limited to 10GbE; however, if you are looking for something faster, there are other options available.

Networking Management

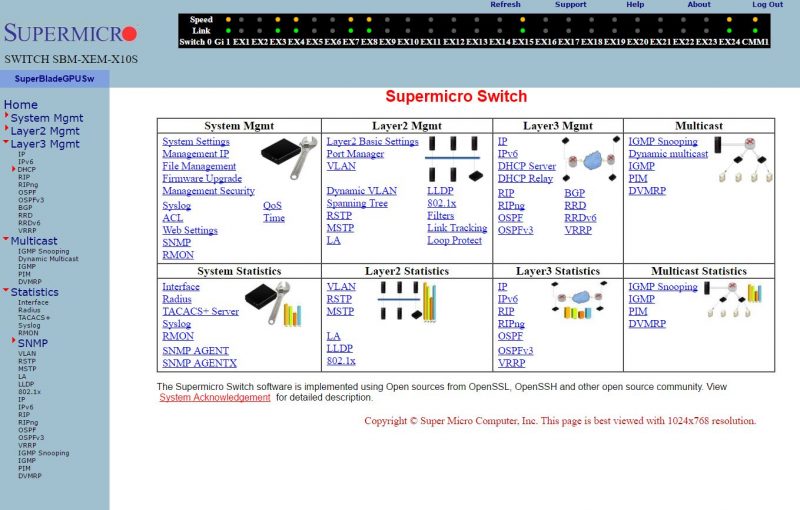

There are a few options for network management on the SBM-XEM-X10SM chassis switch we tested. The first is a traditional serial console port, which is available on the switch. The second is a standard CLI. The third is a Web GUI, which we are going to focus on in our review.

Logging onto the SBM-XEM-X10SM, we immediately see a number of familiar options, including Layer 2/3 features like VLAN management and even VRRP.

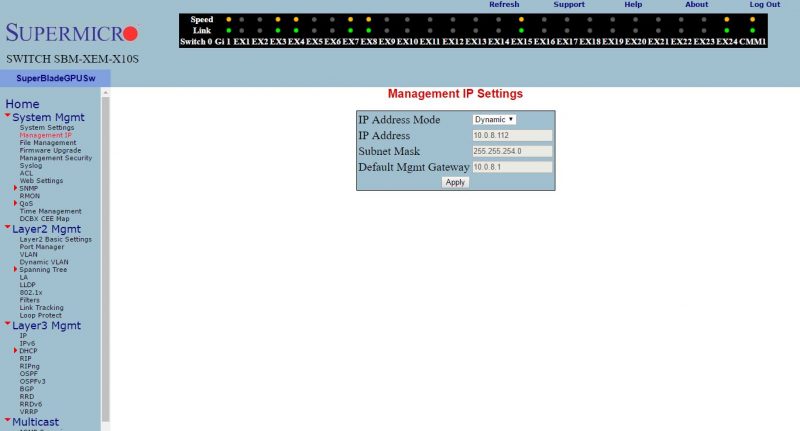

Overall, the web interface allows even SMB users to easily manage networking. Simple tasks like changing the management IP for the out-of-band management require a few clicks in either the Web management or the SuperBlade chassis management, and do not require learning CLI commands and then issuing them in a terminal interface.

Beyond simple administrative tasks, one can also dig deeper into the switch. For example, one can set up ACLs, VLANs and other features on a port-by-port basis or in groups of ports using the web interface.

Our onboard SBM-XEM-X10SM 10GbE switch also included an integrated chassis management module (CMM). This meant that we did not need a separate CMM in the chassis. The CMM provides access to management interfaces for the chassis and blades as well. We found this solution very easy to use, even for the novice networking administrator.

SBM-XEM-X10SM Network Performance

We used iperf3 to validate our networking performance. We wanted to check a few different scenarios:

- Blade-to-blade bandwidth

- Blade-to-external node bandwidth (connected to the chassis switch)

- Blade-to-external node bandwidth (through external infrastructure)

- 6x blades-to-6x external nodes bandwidth (through external infrastructure)

Here is a summary of our results.

Blade-to-Blade

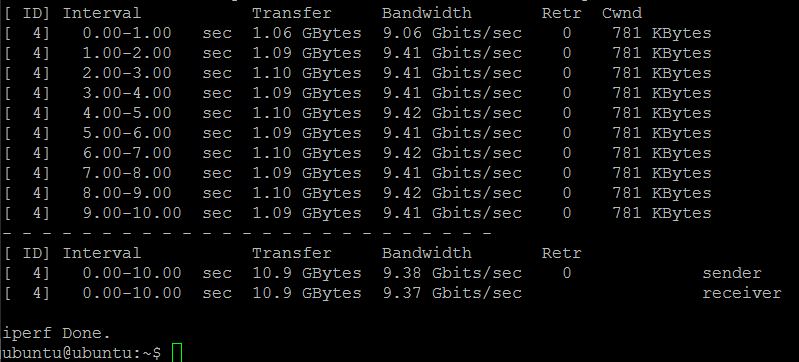

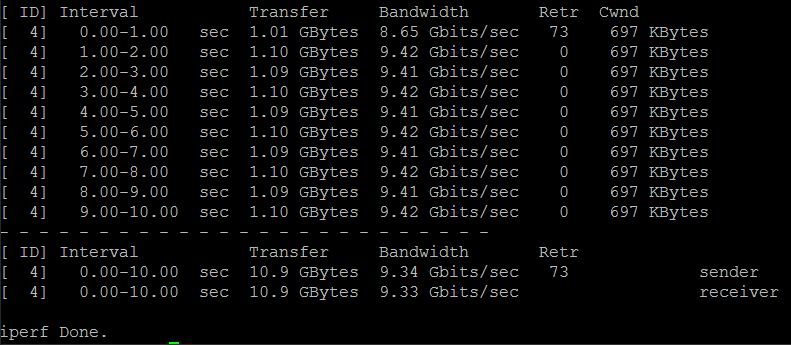

This scenario tests bandwidth from one blade to another in the chassis. Even simple blade-to-blade testing, as in the example here, clearly showed that we have a 10GbE link.

We also tried using 24 parallel streams and achieved the same result within 1%.
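For readers who want to repeat this kind of run, here is a minimal Python sketch that wraps the iperf3 client and pulls throughput out of its JSON report. It is not the exact script we used. It assumes iperf3 is installed on both ends, that `iperf3 -s` is already listening on the target, and that the report is from a TCP test (which is where the `sum_sent` and `sum_received` fields appear). The function names and the hostname in the usage comment are placeholders.

```python
from __future__ import annotations

import json
import subprocess


def build_iperf3_cmd(server: str, streams: int = 1, seconds: int = 10) -> list[str]:
    """Build an iperf3 client command: -P parallel streams, -t duration, -J JSON output."""
    return ["iperf3", "-c", server, "-P", str(streams), "-t", str(seconds), "-J"]


def summarize(report: dict) -> tuple[float, float]:
    """Return (sent, received) throughput in Gbps from an iperf3 TCP JSON report."""
    end = report["end"]
    sent = end["sum_sent"]["bits_per_second"] / 1e9
    received = end["sum_received"]["bits_per_second"] / 1e9
    return sent, received


def run_iperf3(server: str, streams: int = 1, seconds: int = 10) -> tuple[float, float]:
    """Run the client against a listening iperf3 server and summarize the result."""
    result = subprocess.run(
        build_iperf3_cmd(server, streams, seconds),
        capture_output=True, text=True, check=True,
    )
    return summarize(json.loads(result.stdout))


# Usage (placeholder hostname; needs a reachable iperf3 server):
#   single = run_iperf3("blade2.example")
#   parallel = run_iperf3("blade2.example", streams=24)
```

Comparing the single-stream and 24-stream numbers from two such runs is one way to check whether parallelism changes the result.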

Blade-to-external node bandwidth (using a chassis switch)

We tested the setup from a blade through the chassis switch to an SFP+ Xeon D node in the lab over a short 3m passive SFP+ DAC. Here is an example result:

Here we can see results virtually identical to the blade-to-blade test.

Blade-to-external node bandwidth (through external infrastructure)

We tested the setup from a blade through the chassis switch to our QCT T3048-LY8 switch and on to an SFP+ Xeon D node in the lab.

Looking at the blade-to-external transfers, we saw a pattern similar to the blade-to-blade results, with a delta of less than 0.5%.

6x blades-to-6x external nodes bandwidth (through external infrastructure)

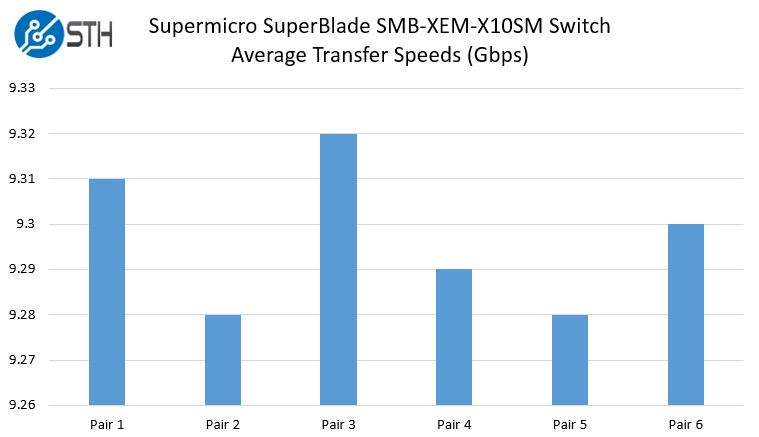

In our final test, we took the six blades we had and simultaneously tested against six different targets, using six SFP+ uplinks to our lab switch to get enough bandwidth out of our chassis switch.

We took averages, but as you can see, performance was relatively similar across all pairs. This is not an extraordinarily difficult test, as it used only about half of the switch's aggregate capacity. As we saw in bi-directional iperf3 testing, even with these six pairs of nodes (internal to external and back), both the send and receive speeds were nearly identical. We specifically gave this chart a very tight y-axis range to show the small differences we observed. If we had used 0 Gbps to 10 Gbps, the bars would have looked identical.
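The comparison across pairs comes down to an average and a spread. Here is a minimal Python sketch of that arithmetic. The function name is ours, and the pair labels and numbers in the example are made-up placeholders to show the calculation, not our measured results.

```python
from __future__ import annotations

from statistics import mean


def spread_percent(gbps_by_pair: dict[str, float]) -> float:
    """Largest deviation of any pair from the mean, as a percentage of the mean."""
    values = list(gbps_by_pair.values())
    avg = mean(values)
    return max(abs(v - avg) for v in values) / avg * 100


# Placeholder numbers only (NOT measurements from this review).
example = {
    "pair1": 9.0, "pair2": 9.1, "pair3": 9.0,
    "pair4": 9.1, "pair5": 9.0, "pair6": 9.1,
}

print(f"mean: {mean(example.values()):.2f} Gbps")
print(f"max deviation from mean: {spread_percent(example):.2f}%")
```

A spread of well under a percent is why a chart with a 0 Gbps to 10 Gbps axis would show bars that look the same height.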

Networking closing thoughts

Overall, the performance of the networking solution, given the six blades we had access to, seemed excellent. We will continue to work with Supermicro to see if we can get a second chassis switch set up and potentially higher performance switches. Our big takeaways from this exercise are that 10GbE traffic flowed well from one switch node to the other switch nodes. Cabling was extremely easy. Instead of having to wire our entire chassis with a management NIC and an external 10GbE NIC, we could just connect the uplink ports. The chassis switch (and, we might assume, a second switch) is capable of acting as a switch for external devices as well. We would have liked to have a model with 40GbE uplink ports; however, we understand this would limit the connectivity flexibility of having 10 functional SFP+ ports. Overall, this was easier to use than we expected.

For management, networking, and the GPU blades themselves (including performance), we can direct you to the other parts of this review:

- Part 1: Supermicro SuperBlade System Review: Overview and Supermicro SBE-710Q-R90 Chassis

- Part 2: Supermicro SuperBlade System Review: Management

- Part 3: Supermicro SuperBlade System Review: 10GbE Networking

- Part 4: Supermicro SuperBlade System Review: The GPU SuperBlade

Check out the other parts of this review to explore more aspects of the system.