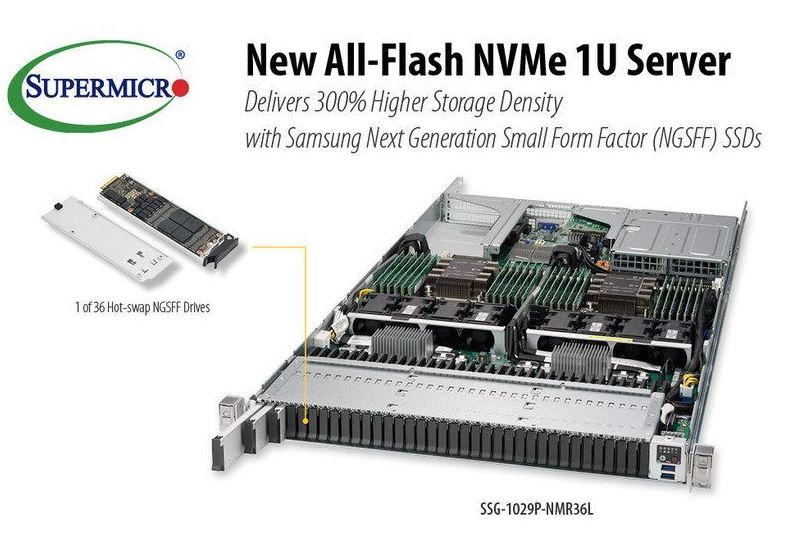

In 2017 we started seeing new alternative SSD form factors emerge. PCIe add-in cards and U.2 drives were not designed around flash memory, and M.2 drives, while useful, were designed for mobile first, not the data center. At Flash Memory Summit 2017 we showed off the Intel "Ruler" SSD (see The Intel Ruler SSD: Already Moving Markets). Intel's chief competitor is Samsung, and the Samsung NGSFF SSD form factor is gaining momentum. Supermicro is taking advantage of NGSFF with a dual-socket server that holds 36 hot-swap NVMe SSDs in front. That is a massive increase over the standard 10x 2.5″ U.2 designs we have seen, and it can greatly increase both capacity and throughput.

Supermicro Embraces Samsung NGSFF NVMe SSDs

Supermicro is using Samsung NGSFF SSDs in the Supermicro SSG-1029P-NMR36L. We are told that at launch the SSG-1029P-NMR36L will support up to 288TB. With the release of 16TB NGSFF drives later this year, the company expects to reach up to 576TB, over half a petabyte, in a 1U chassis.
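The quoted totals imply a per-drive capacity. A quick sketch, assuming all 36 bays hold identical drives and using decimal terabytes; the per-drive sizes below are derived by division, not stated by Supermicro:

```python
# Derive per-drive capacity from the chassis totals quoted above.
# Assumption: all 36 NGSFF bays are populated with identical drives; TB are decimal.
BAYS = 36
U2_BAYS = 10  # the "standard 10x 2.5-inch U.2" front layout mentioned earlier


def per_drive_tb(total_tb: int, bays: int = BAYS) -> float:
    """Capacity of each drive if total_tb is split evenly across all bays."""
    return total_tb / bays


launch = per_drive_tb(288)  # capacity quoted at launch
later = per_drive_tb(576)   # capacity expected once 16TB drives ship
print(f"launch: {launch:.0f}TB per drive, later: {later:.0f}TB per drive")
print(f"front bays vs. 10x U.2: {BAYS / U2_BAYS:.1f}x")
```

The 576TB figure works out to exactly 16TB per bay, which is consistent with the 16TB drives the company mentions.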

From the system design, we can tell that this first NGSFF solution is built around dual Intel Xeon Scalable CPUs with 12 DIMMs per CPU (24 total). Since these systems are expected to be used in heavy I/O environments, the server also offers two PCIe 3.0 x16 slots and a PCIe 3.0 x4 slot for high-speed networking, in addition to the dual onboard 10GBase-T NICs.

The server has a few additional features that will catch the eye of STH readers. For example, it can power cycle individual drives in the event that a device needs to be restarted.

Final Words

This solution is very strong because it provides significantly more storage flexibility than traditional U.2 designs. It also has two PCIe 3.0 x16 slots, which is important for those using 50GbE, 100GbE, or 200GbE networking or Mellanox InfiniBand fabrics.
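To put the x16 slots in context, here is a rough estimate of what a single PCIe 3.0 slot can carry compared with common Ethernet line rates. This is a back-of-the-envelope sketch, assuming 8 GT/s per lane with 128b/130b encoding and ignoring packet and protocol overhead, so real throughput will be somewhat lower:

```python
# Approximate PCIe 3.0 data rate per direction vs. NIC line rates.
# Assumptions: 8 GT/s per lane, 128b/130b encoding; TLP/protocol overhead ignored.
GT_PER_LANE = 8.0     # PCIe 3.0 transfer rate per lane, GT/s
ENCODING = 128 / 130  # 128b/130b line-encoding efficiency


def pcie3_gbps(lanes: int) -> float:
    """Raw data rate in Gb/s per direction for a PCIe 3.0 link with `lanes` lanes."""
    return GT_PER_LANE * ENCODING * lanes


x16 = pcie3_gbps(16)
for nic_gbps in (50, 100, 200):
    verdict = "fits within" if nic_gbps <= x16 else "exceeds"
    print(f"{nic_gbps}GbE {verdict} one PCIe 3.0 x16 slot (~{x16:.0f} Gb/s)")
```

By this estimate, one x16 slot has headroom for 50GbE or 100GbE per direction, while a full 200GbE link would exceed what a single PCIe 3.0 x16 slot can move, which is one reason the widest slots matter for high-speed networking.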

The dual 10GBase-T NICs seem a somewhat peculiar choice; I think hardly any data center uses 10GBase-T. Perhaps Supermicro expects people to put in their own network cards anyway. I'm also not a big fan of two NUMA nodes in a storage server…

“Supermciro” typo

Supermicro replaced 2x 1Gbit/s copper with 2x 10GBase-T some time ago in most of its designs where PCIe lanes aren't a key factor. This server design is clearly meant for network storage, and disk speed is limited by network card speed.

How about AIC’s SB127-LX (OB127-LX) that was announced last September at Flash Memory Summit?

https://www.samsung.com/us/labs/pdfs/collateral/SMSG_MissionPeak_Datasheet_v099_final_to_print.pdf

“The system delivers 10 million random read IOPS of storage performance, balanced with 300 Gbps of Ethernet throughput.”

This server (SSG-1029P-NMR36L) doesn't have the I/O bandwidth required to fully utilize the speed of 36 NVMe SSDs; the 200Gb/s available from two x16 slots is nowhere near enough.

Given that those 36 NVMe SSDs are seriously expensive, it's unclear why a server with a 4-CPU motherboard (e.g. https://www.supermicro.com/products/motherboard/Xeon/C620/X11QPH_.cfm ), capable of supporting the bandwidth of 36 NVMe SSDs (and up to 3TB of RAM cache), wasn't used.

For context, systems like this are often used for network storage, just as SAS-based systems would have PCIe x8 connectors. You can saturate 100GbE with this storage configuration and stay with a 2P system.

2x100GbE corresponds to under 700MB/s per SSD, which is almost certainly much slower than what those NVMe SSDs are capable of (even for 50/50 R/W, though usually it's much less at any given moment).
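The "under 700MB/s per SSD" figure follows from splitting the network bandwidth evenly across the drives. A minimal sketch, assuming decimal units, 8 bits per byte, no Ethernet or protocol overhead, and traffic spread evenly across all 36 drives:

```python
# Per-SSD share of network bandwidth when every drive is busy at once.
# Assumptions: decimal units (1 GB = 1000 MB), 8 bits per byte,
# no Ethernet/protocol overhead, traffic spread evenly over all drives.
def per_ssd_mb_s(nic_gbps: float, ssds: int) -> float:
    """MB/s available to each SSD if nic_gbps of network traffic is split evenly."""
    total_mb_s = nic_gbps * 1000 / 8  # convert Gb/s to MB/s
    return total_mb_s / ssds


share = per_ssd_mb_s(2 * 100, 36)  # two 100GbE ports, 36 NGSFF drives
print(f"{share:.0f} MB/s per SSD")
```

Two 100GbE ports carry about 25,000MB/s in total, or roughly 694MB/s per drive, before any protocol overhead is subtracted.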

So most of the SSDs' speed will go unused when they are all busy, due to a network bottleneck (and possibly a CPU bottleneck as well).

Given the very high cost of 36 SSDs relative to the rest of the system (the motherboard and 100GbE networking in particular), the system design seems suboptimal (i.e. not properly balanced).