At Intel Innovation 2022, SK hynix showed off a number of new DDR5 modules. The company had client DDR5 in standard DIMM and SODIMM form factors. Next to that was a set of server memory. This memory showed a trend that many are unaware of: DDR5 server memory is going to come in non-binary capacities.

SK hynix DDR5 at Intel Innovation 2022

A bit of background here. In memory, for years, we have seen capacities grow along powers of two in what folks at the show were calling “binary” capacities:

- 1GB

- 2GB

- 4GB

- 8GB

- 16GB

- 32GB

- 64GB

- 128GB

- 256GB

Many of our readers will remember sub-1GB capacities, but at some point, you have to put boundaries on a list.

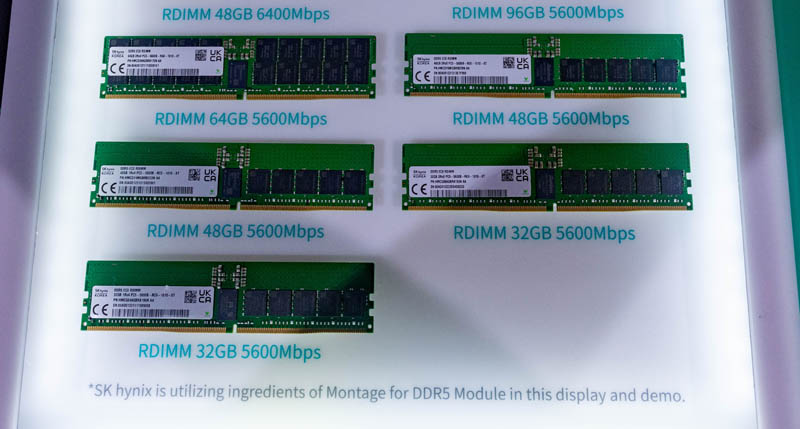

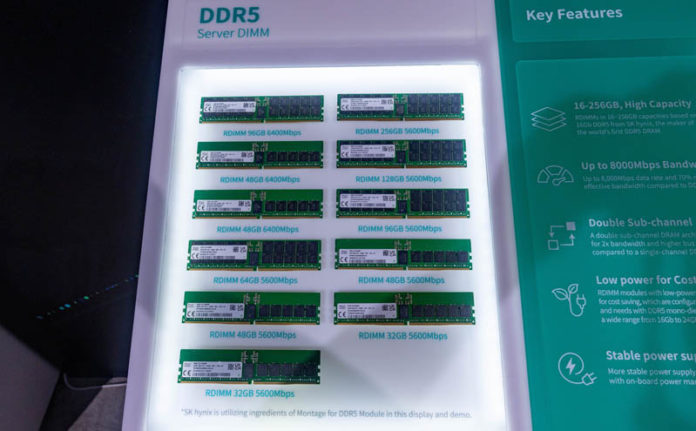

That brings us to the modules at the show. Here we can see 32GB DDR5 RDIMMs as well as 64GB, but there is also a new capacity: 48GB.

Also, one will see that there are no 8GB or 16GB modules on display. We may see them later, but we were told that 32GB is going to be the new starting mainstream capacity. This is a normal transition that happens from time to time.

Looking at larger capacities, we see a similar incursion of new sizes. Here we can see 96GB RDIMMs as well.

The 96GB RDIMMs sit between the 64GB and 128GB capacities. We have heard there may be 192GB modules coming out from vendors in the future.

One other interesting note here is that the speeds shown are DDR5-5600 and DDR5-6400. Even with 8 memory channels, there is going to be a lot more memory bandwidth available in the next generation of server CPUs.
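To put rough numbers on that bandwidth claim, here is a minimal sketch of the theoretical peak calculation. It assumes 64 data bits (8 bytes) per memory channel with ECC bits excluded, and it uses DDR4-3200 as a comparison baseline, which is our assumption and was not part of the display. The function name is illustrative only.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: int, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (decimal) for one CPU socket.

    Assumes 64 data bits (8 bytes) per memory channel, ECC bits excluded.
    Real-world sustained bandwidth will be lower than this figure.
    """
    return transfer_rate_mt_s * bus_bytes * channels / 1000


if __name__ == "__main__":
    # DDR4-3200 is an assumed baseline for comparison; it is not from the show.
    for name, rate in [("DDR4-3200", 3200), ("DDR5-5600", 5600), ("DDR5-6400", 6400)]:
        total = peak_bandwidth_gb_s(rate, channels=8)
        print(f"{name}: {total:.1f} GB/s across 8 channels")
```

These are ceiling figures per socket; actual throughput depends on the platform, DIMMs per channel, and workload.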

Final Words

These capacities may not make sense at first, but one has to look at a few factors. First, the DDR5 pricing is much higher than DDR4 at this point, so having breaks between capacity points.

For those wondering if this is an SK hynix-only capacity, it is not. Micron and Samsung said they have 48GB and 96GB DDR5 RDIMMs even if they were not displayed at the show.

What is clear is that new server RDIMM memory capacities are going to diverge from a long history of only scaling in powers of two.

Is there a performance implication to non-binary capacities?

As someone who dealt with 50K+ DIMMs at any given time across a fleet of servers over 9 years, I can see the use of these in-between sizes as default DIMM capacities grow.

Over the years in said position I used 2/4/8/16/32GB and a few 64GB DIMMs. If one wants to fill up all the DIMM slots in a classic Xeon/EPYC 2-socket server, as you get beyond 16/32-GB you start to lose some flexibility in matching the server RAM size to the workload.

With 16 DIMM slots and an app load for a given server that hits, say, a 700-GB RAM size sweet spot (no wasted/unused memory), 16 * 64-GB DIMMs leaves over 300 GB of RAM “wasted”… These non-binary DIMM capacities (48/96/…) work if the pricing is in the proper range.
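The arithmetic in the comment above can be sketched as a small helper. This is only an illustration: the 700 GB target and 16 slots come from the comment, while the list of DIMM sizes and the function name are assumptions, and pricing is ignored entirely.

```python
def stranded_memory(target_gb: int, slots: int, dimm_sizes_gb: list[int]) -> list[tuple[int, int, int]]:
    """Return (dimm_size, total, unused) for each DIMM size that meets the target.

    Every slot is populated with the same DIMM size, as in the comment's example.
    """
    options = []
    for size in sorted(dimm_sizes_gb):
        total = size * slots
        if total >= target_gb:
            options.append((size, total, total - target_gb))
    return options


if __name__ == "__main__":
    # DIMM sizes here are an assumed menu mixing binary and non-binary capacities.
    for size, total, unused in stranded_memory(700, 16, [32, 48, 64, 96, 128]):
        print(f"16 x {size} GB = {total} GB, {unused} GB above the 700 GB target")
```

With these inputs, 16 x 48 GB gives 768 GB (68 GB unused), while 16 x 64 GB gives 1,024 GB (324 GB unused).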

“First, the DDR5 pricing is much higher than DDR4 at this point, so having breaks between capacity points…..”

Is what? A good idea? A bad idea? A half finished thought?

I am going to spell out how I read this:

For the first time, the industry can leverage partially faulty chips without scrapping the entire power of 2.

No one really cares about filling these long standing gaps. Billions have been made. They are creating a way to maximize incremental revenue potential by enabling faults away from power of 2. Mo reasonable segments = mo money.

You used to not really like the jump at the top size/price and alternate ways could become reasonable. Now, you’ll just pay them instead and thank them for the segment. Bravo.

And market demand is already tested for them thanks to many years of VMs.

The largest beneficiaries of this will be hyperscalers that want to always reserve 2-4 cores and xx GB of memory across the fleet to handle “serverless” operations in a fabric. They can now better align shared VM sizes to power of 2 and keep the rest to themselves. This ask from hyperscalers has been in play for many years.

This may be helpful for budget conscious buyers who can otherwise only decide between half or double. With 6/3DPC setups we already had *total* capacities that weren’t a power of 2.

Just leaves me wondering what pronouns these non-binary modules will use ;)

It sounds like they’re mixing different capacity modules on the chip with these new capacities. Each of the announced chips so far is just a combination of power-of-two numbers, like 96=64+32. I wonder if one side of the DIMM has 64GB and other side has 32GB?

Hello Jesse: You state “combination of power-of-two numbers, like 96=64+32”. BINGO. What Samsung is providing are “degenerate forms of binary values”, and on par I suspect with what ONW-Arch shared: “leverage partially faulty chips without scrapping the entire power of 2.” The goal, increase yield by not removing from the supply chain, parts that on the whole, are less than nominal, by fragmenting sub sections, and in doing so, increase the effective yield.

Typos adjust “ONW-Arch” to read “PNW-Arch”. For those not partial to “degenerate forms of binary values”, I offer: “exponent increments of a sub integer nature”. In the end, still binary since the exponential value of a binary base, need not be a whole integer to be a binary sum. In short, the memory values are “binary” because of base 2, not because of exponential term.
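The "96=64+32" observation in this sub-thread can be checked mechanically. The sketch below is purely arithmetic: it splits a capacity into the powers of two given by its set bits, and it says nothing about how the DIMMs are physically built. The function name is hypothetical.

```python
def power_of_two_terms(capacity_gb: int) -> list[int]:
    """Split a positive integer into its set-bit powers of two, largest first."""
    terms = []
    # Start at the highest set bit and walk down to bit 0.
    bit = 1 << (capacity_gb.bit_length() - 1) if capacity_gb > 0 else 0
    while bit:
        if capacity_gb & bit:
            terms.append(bit)
        bit >>= 1
    return terms


if __name__ == "__main__":
    for capacity in [24, 48, 96, 192, 64]:
        terms = power_of_two_terms(capacity)
        print(f"{capacity} GB = " + " + ".join(str(t) for t in terms))
```

Each of 24, 48, 96, and 192 comes out as exactly two adjacent powers of two, which is the same as saying each is 3 times a power of two.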

not sure why comments are jumping to faulty chips. it’s just the fact that ddr5 dimms are two-channel, and there’s no reason for each side to be the same size/geometry.

and price-wise, a 2x jump does become serious as sizes grow. 64->128 prices welcome a half-way product…

SK Hynix has 24, 48 and 96 GB, in addition to the 32-128 GB sizes, source: https://product.skhynix.com/products/dram/module/rdimm.go

With 12 slots that’s 288, 576, and 1,152 GB, in addition to the 384, 768, and 1,536 GB sizes.

Samsung does have a 512 GB DDR5-7200, with 12 slots that’s 6,144 GB; not that I could afford it.

Non-binary RAM? Is this the “PCs gone mad” people rant about?