SK hynix is joining Samsung and Micron in announcing CXL memory expansion modules. The SK hynix CXL 2.0 Memory Expansion modules bring DDR5 RAM to the EDSFF form factor for next-generation servers. This will be a big deal as DDR5 memory starts attaching to servers over PCIe Gen5 lanes via CXL.

SK hynix CXL 2.0 Memory Expansion Modules Launched

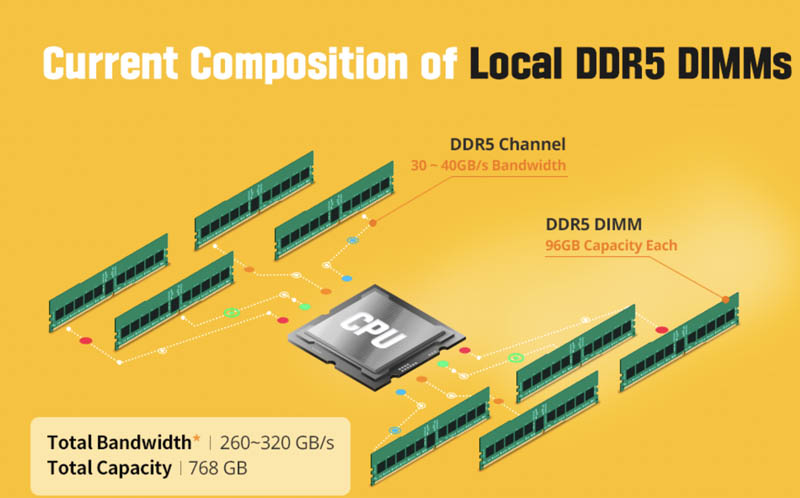

SK hynix showed the “current composition of local DDR5”. What is fun about this is that the company is showing 96GB DDR5 DIMMs. Common server DIMM capacities today are 64GB and 128GB, so the in-between 96GB point is one change shown in this image. The other is that the slide shows 260-320GB/s of memory bandwidth at roughly 30-40GB/s per channel. Both the capacity and the bandwidth line up with 8-channel memory, so this seems to assume an Intel Sapphire Rapids Xeon, not a full 12-channel AMD EPYC Genoa system.
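The 8-channel reading can be sanity-checked with a little arithmetic. This sketch assumes DDR5-4800 (our assumption; the slide does not name a speed), a 64-bit data bus per channel, and one 96GB DIMM per channel, then asks whether the theoretical peak lands inside the slide's 260-320GB/s range for 8 and 12 channels.

```python
# Back-of-the-envelope check of the "local DDR5" slide.
# Assumptions (ours, not SK hynix's): DDR5-4800, 64 data bits per channel,
# one 96GB DIMM per channel.

SLIDE_TOTAL_GBPS = (260, 320)  # aggregate bandwidth range shown on the slide
DIMM_GB = 96


def ddr5_channel_peak_gbps(mt_per_s: int = 4800, bus_bits: int = 64) -> float:
    """Theoretical peak of one DDR5 channel: transfers/s times bytes per transfer."""
    return mt_per_s * (bus_bits // 8) / 1000  # MT/s * bytes -> GB/s


def platform(channels: int, mt_per_s: int = 4800) -> dict:
    """Capacity and peak bandwidth with one DIMM per channel."""
    peak = channels * ddr5_channel_peak_gbps(mt_per_s)
    lo, hi = SLIDE_TOTAL_GBPS
    return {
        "channels": channels,
        "capacity_gb": channels * DIMM_GB,
        "peak_gbps": peak,
        "matches_slide": lo <= peak <= hi,
    }


if __name__ == "__main__":
    for ch in (8, 12):  # Sapphire Rapids-style vs Genoa-style channel counts
        p = platform(ch)
        print(f"{ch} channels: {p['capacity_gb']}GB, "
              f"{p['peak_gbps']:.1f}GB/s, fits slide: {p['matches_slide']}")
```

Eight channels give 768GB and about 307GB/s, inside the slide's range; twelve channels would overshoot it at about 461GB/s.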

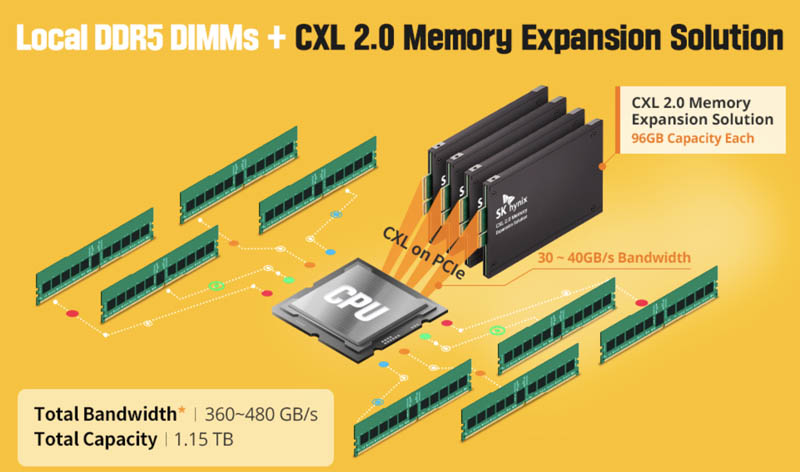

SK hynix is showing EDSFF modules and says that each 96GB CXL 2.0 memory module adds to both the total memory bandwidth and the total memory capacity of the system.

CXL 2.0 is also where we see single-level CXL switching come into play, so these are important products. You can learn more about CXL in our Compute Express Link or CXL What it is and Examples piece plus the taco primer:

If you want to learn more about the EDSFF E3.S form factor that SK hynix is using, we have a primer on EDSFF as well:

The new CXL memory modules are clearly being designed for the next generation (or two) of servers.

Final Words

While the company is showing 96GB modules at this point, given that Samsung launched a 512GB CXL memory module, we expect SK hynix's lineup to include other capacity points as well.

Still, adding memory into the same EDSFF slots that will hold SSDs in the future is going to be one of the biggest changes coming to servers. Vendors will still offer legacy 2.5″ servers, but many customers are going to want EDSFF systems just for the flexibility to put this type of memory expansion, rather than only SSDs, into those slots. CXL will change servers forever, especially as we move to the CXL 3.0 generation.

It’s interesting to see that both Samsung (press release on Montage’s site) and Hynix (mentioned in Hynix’s press release) are using the CXL Memory eXpander Controller from Montage Technology.

I think 3rd parties like Kingston will be able to offer CXL “drives” too without having to invest in developing their own controller.

Addon:

Hynix has 24 Gbit DDR5 chips. That allows 96 GB modules, and this drive, without relying on expensive load-reduced (LRDIMM) buffers or 3D-stacked (3DS) chips:

https://news.skhynix.com/sk-hynix-becomes-the-industrys-first-to-ship-24gb-ddr5-samples/
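The die-density point works out as follows. This sketch assumes a dual-rank RDIMM built from x4 DRAM devices, with ECC devices left out because they add no usable capacity; real module layouts vary.

```python
# Why 24Gbit dies make 96GB DIMMs possible without 3DS stacking.
# Assumption: dual-rank RDIMM of x4 devices; ECC devices not counted.

DATA_BITS_PER_RANK = 64  # two 32-bit DDR5 subchannels


def rdimm_capacity_gb(die_gbit: int, ranks: int = 2, device_width: int = 4) -> float:
    """Usable capacity: ranks * data dies per rank * die density, in GB."""
    dies_per_rank = DATA_BITS_PER_RANK // device_width  # 16 for x4 parts
    return ranks * dies_per_rank * die_gbit / 8  # Gbit -> GB


if __name__ == "__main__":
    for density in (16, 24):
        print(f"{density}Gbit dies -> {rdimm_capacity_gb(density):.0f}GB DIMM")
```

With the same 32 data dies, moving from 16Gbit to 24Gbit parts takes the module from 64GB to 96GB.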

Samsung’s 512 GB CXL drive probably uses 3DS chips and might be super expensive like their 512 GB 3DS DIMMs.

Doing a bit of math. Adding CXL modules gives them 100-160 GB/sec of extra memory bandwidth. If they have 4 modules (like in the picture), then that’s 25-40 GB/sec per module. Since CXL 2 is basically PCIe 6, they can get 32 GB/sec via an x4 link, or 64 GB/sec with an x8 link. I’d guess they’re quoting 25 GB/sec on x4 and 40 GB/sec on x8.

Presumably you could throw ~16 x4 modules into an EPYC while saving lanes for networking, etc. So an extra ~400 GB/sec of memory bandwidth and (with these devices) 1.5 TB of RAM. Not too shabby. Presumably it’d cost a ton, but 1.5 TB of RAM never comes cheap.
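The lane and capacity side of that estimate can be checked quickly. This sketch assumes a single-socket EPYC with 128 PCIe lanes (our assumption, not from the comment) and takes the comment's 96GB and 25 GB/sec per x4 module at face value. That bandwidth figure was derived assuming PCIe 6.0 rates, which the reply below disputes, so treat the bandwidth number as optimistic.

```python
# Lane/capacity check for "~16 x4 modules in an EPYC".
# Assumptions: 128 PCIe lanes (ours); 96GB and 25GB/s per x4 module (the comment's).

TOTAL_LANES = 128
MODULE_LANES = 4
MODULE_GB = 96
MODULE_GBPS = 25


def cxl_expansion(modules: int) -> dict:
    """Lanes consumed, lanes left over, and added capacity/bandwidth."""
    lanes = modules * MODULE_LANES
    if lanes > TOTAL_LANES:
        raise ValueError(f"{modules} x{MODULE_LANES} modules need {lanes} lanes")
    return {
        "lanes_used": lanes,
        "lanes_left": TOTAL_LANES - lanes,  # left for NICs, SSDs, etc.
        "capacity_gb": modules * MODULE_GB,
        "extra_gbps": modules * MODULE_GBPS,
    }


if __name__ == "__main__":
    print(cxl_expansion(16))
```

Sixteen x4 modules use half of the assumed 128 lanes and add 1536GB, which is where the ~1.5 TB figure comes from.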

@Scott

CXL 2.0 is on PCIe 5.0 (just like CXL 1.1). It’s more about features than higher transfer rate.

PCIe 6.0 comes with CXL 3.0
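To put the two comments side by side, this sketch computes per-direction link bandwidth for the PCIe generations CXL runs on. PCIe 5.0 uses 128b/130b encoding; for PCIe 6.0 it uses the raw 64 GT/s rate and ignores FLIT/FEC overhead, so the 6.0 numbers are slightly optimistic.

```python
# Per-direction link bandwidth for the PCIe generations CXL rides on.
# PCIe 6.0 FLIT/FEC overhead (a few percent) is ignored here.

GENERATIONS = {
    "PCIe 5.0 (CXL 1.1 / 2.0)": (32, 128 / 130),  # GT/s per lane, encoding efficiency
    "PCIe 6.0 (CXL 3.0)": (64, 1.0),
}


def link_gbps(gt_per_s: float, efficiency: float, lanes: int) -> float:
    """GB/s in one direction: GT/s per lane * lanes * efficiency / 8 bits."""
    return gt_per_s * efficiency * lanes / 8


if __name__ == "__main__":
    for name, (rate, eff) in GENERATIONS.items():
        x4, x8 = (link_gbps(rate, eff, n) for n in (4, 8))
        print(f"{name}: x4 = {x4:.2f} GB/s, x8 = {x8:.2f} GB/s")
```

At PCIe 5.0 rates an x4 link tops out around 15.75 GB/s per direction, so the 25-40 GB/sec per-module estimate in the earlier comment would need x8 or wider links, or a figure that counts both directions.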

Is there any word on how latency compares between a CXL module and RAM hanging right off the memory controller?

CXL memory obviously compares favorably with RAM you simply cannot add because you’ve already hit the platform limit with currently available DIMMs, and the fact that roughly the same bandwidth is being quoted for the memory controller and for CXL means it won’t be slow. But is latency good enough that you can just treat the CXL RAM as part of system RAM and call it good, or will CXL memory automatically land somewhere between non-uniform and very non-uniform memory access because of latency?
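One practical angle on the "treat it as system RAM" question: on Linux, CXL memory expanders are commonly onlined as NUMA nodes that have memory but no CPUs, so the kernel and NUMA-aware software see them as a separate, farther tier rather than as ordinary local RAM. This minimal sketch lists CPU-less nodes, assuming the standard `/sys/devices/system/node/nodeN/cpulist` sysfs layout. HBM or persistent memory can also appear as CPU-less nodes, so a hit is a hint rather than proof that a node is CXL-attached.

```python
from __future__ import annotations

import glob
import os


def parse_cpulist(text: str) -> list[int]:
    """Parse a sysfs cpulist such as '0-3,8' into [0, 1, 2, 3, 8]."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty cpulist means the node has no CPUs
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def cpuless_nodes(cpulists: dict[int, str]) -> list[int]:
    """Return the NUMA node IDs whose cpulist is empty."""
    return sorted(n for n, text in cpulists.items() if not parse_cpulist(text))


def read_sysfs_cpulists(root: str = "/sys/devices/system/node") -> dict[int, str]:
    """Read cpulist for every nodeN directory (returns {} off Linux)."""
    result: dict[int, str] = {}
    for path in glob.glob(os.path.join(root, "node[0-9]*")):
        node = int(os.path.basename(path)[len("node"):])
        with open(os.path.join(path, "cpulist")) as f:
            result[node] = f.read()
    return result


if __name__ == "__main__":
    print("CPU-less NUMA nodes:", cpuless_nodes(read_sysfs_cpulists()))
```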

Cost will be important. AFAIK, RAM alone accounts for about 50% of data center expense.

If CXL is limited to servers, it won’t have the volume (and competition) of the DDR4/DDR5 market, and it won’t reach the price point needed for a mass rollout.

Examples are Rambus (RDRAM), Optane, and Itanium: fantastic products that didn’t sell despite billions spent on development and marketing.

… and the latency?

It is usually higher latency.

They needed PCIe 5.0 for CXL because PCIe 5.0 added a feature (alternate protocol negotiation) that lets a different protocol take over the PCIe physical lanes.