Today we have one of the more unusual PCIe expansion cards that we have seen recently, the QNAP QM2-2P10G1TA. Typically these expansion cards are used in QNAP NAS units that have PCIe expansion slots. The goal is to provide both 10Gbase-T networking and dual NVMe SSD support on a single card. There is an M.2 SATA version of this card as well. We wanted to see how these units work outside of their standard NAS confines and hold them to a more rigorous standard: performance in a desktop workstation. We have fielded several questions recently about whether this card works in non-QNAP systems, so we decided to try it. The short answer is that it does, but there are a lot of caveats our readers should be aware of before charging into this solution.

QNAP QM2-2P10G1TA Adapter Overview

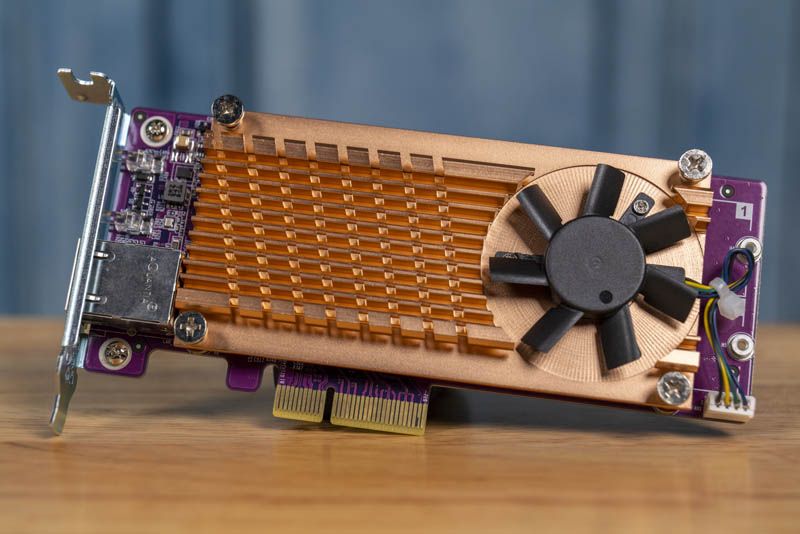

The QNAP QM2-2P10G1TA comes equipped with an Aquantia AQC107 10Gbase-T network port and two M.2 slots for NVMe SSDs. In space-constrained deployments, such as many QNAP NAS units, this is an enormous help: one can connect three PCIe devices using a single PCIe slot. The usefulness goes beyond QNAP NASes. For example, the HPE ProLiant MicroServer Gen10 Plus has a single available PCIe slot, as do mITX-based platforms such as the Supermicro AS-5019D-FTN4.

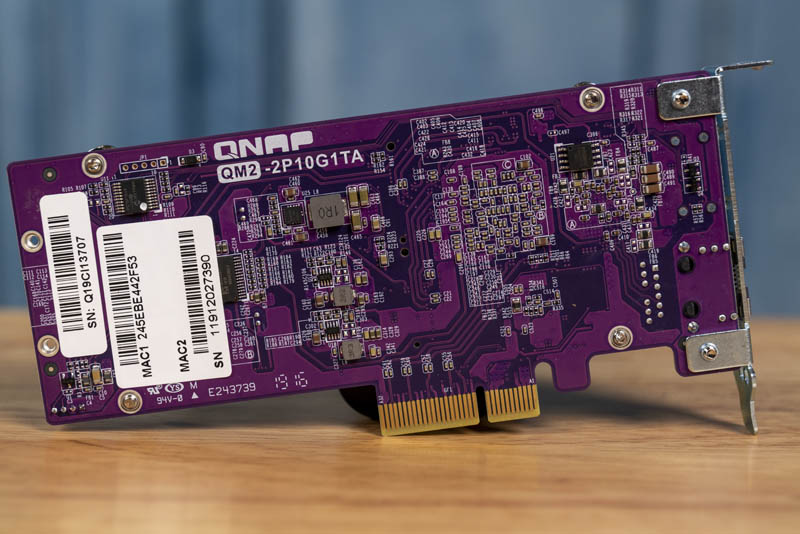

As one would expect, the rear of the unit has some components but is not too busy. Perhaps the most useful information here is the MAC address shown on the rear of the card.

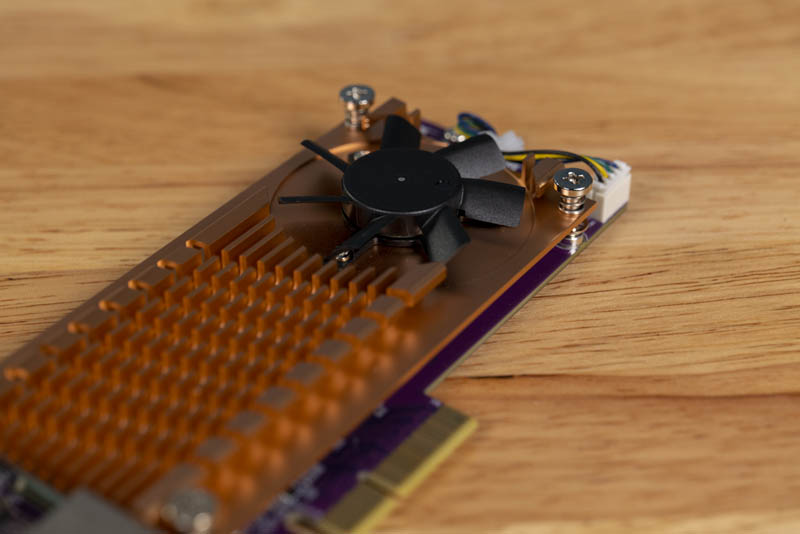

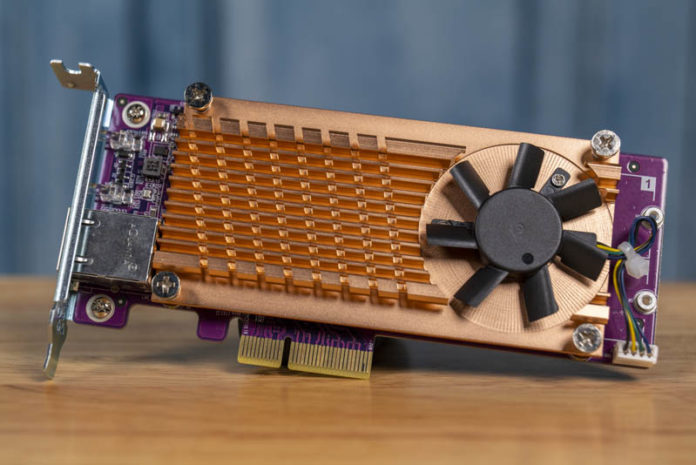

Cooling for NVMe SSDs can be a concern, especially in small edge NAS units and servers. As a result, this card actively cools the NVMe SSDs. There is a large heatsink that runs the length of the card with a fan at the end. It almost looks like there should be a shroud to turn this into a blower-style cooler, but none is present on this unit, so the fan is not going to be as effective as it could be.

That fan and heatsink cool the two NVMe M.2 SSDs. One can see that there is even a thermal sensor for each drive so that the fan has feedback on when to spin up.

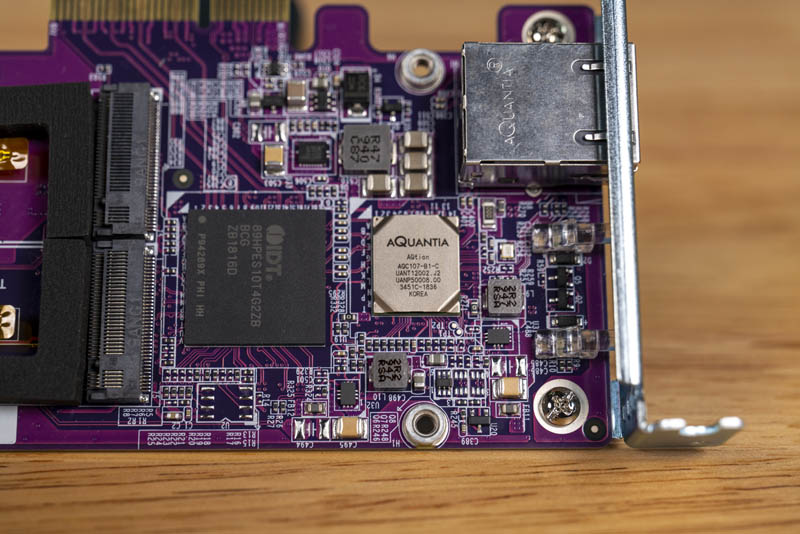

Also under this heatsink are the Aquantia AQC107 NIC, sitting next to the single RJ45 10Gbase-T port, and the IDT PCIe switch, which is much larger than the NIC.

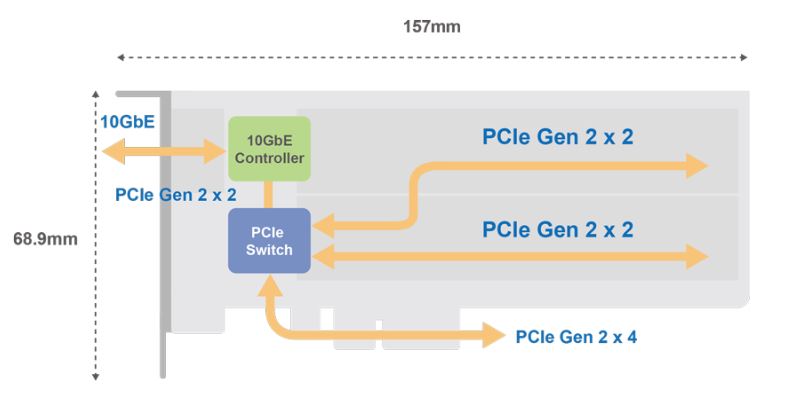

To get a sense of what is going on here, this is the diagram from QNAP. As you can see, there is a PCIe Gen2 x4 interface that goes from the host system to the IDT PCIe switch. Off of that switch we see the 10GbE (10Gbase-T Aquantia) controller along with the two NVMe SSDs. Each of the three devices on the card gets a PCIe Gen2 x2 link to the switch.
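
On a Linux host, one way to confirm what each device behind the switch actually negotiated is to read the standard `current_link_speed` and `current_link_width` attributes under `/sys/bus/pci/devices`. The sketch below is a minimal, hypothetical helper (`read_pcie_links` is our own name, not a QNAP or kernel tool). It assumes a Linux system with sysfs mounted, and the exact speed string (for example `5.0 GT/s PCIe` versus `5 GT/s`) varies by kernel version. If the diagram holds, the NIC and both SSDs should report a 5 GT/s x2 link.

```python
from pathlib import Path


# Hypothetical helper: lists the negotiated link of every PCIe device Linux exposes.
def read_pcie_links(root: str = "/sys/bus/pci/devices") -> dict[str, tuple[str, str]]:
    """Map each PCI address to its (current_link_speed, current_link_width) strings."""
    base = Path(root)
    if not base.is_dir():
        # Not a Linux host (or sysfs is not mounted): nothing to report.
        return {}
    links: dict[str, tuple[str, str]] = {}
    for dev in sorted(base.iterdir()):
        speed_file = dev / "current_link_speed"
        width_file = dev / "current_link_width"
        # Skip devices (e.g. some host bridges) that do not expose link attributes.
        if not (speed_file.is_file() and width_file.is_file()):
            continue
        try:
            speed = speed_file.read_text().strip()
            width = width_file.read_text().strip()
        except OSError:
            # Reads can fail on some devices; treat them as unreadable and move on.
            continue
        links[dev.name] = (speed, width)
    return links


if __name__ == "__main__":
    for address, (speed, width) in read_pcie_links().items():
        # Devices behind the card's switch would be expected to show a Gen2 (5 GT/s) x2 link.
        print(f"{address}: {speed} x{width}")
```

`lspci -vv` shows the same information in its `LnkSta` lines; reading sysfs directly just makes it easy to script.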

Practically, this design has a few key implications. First, because everything on the card is standard PCIe behind a switch, the card is very easy to integrate into different platforms. That is why we can use it in non-QNAP systems with a great degree of confidence.

Another major impact is that the link from the PCIe switch to the host has a 1.5:1 oversubscription ratio. The network controller and the two NVMe SSDs have six PCIe 2.0 lanes connected to the switch in total, while the switch has only four lanes to the host.
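
The 1.5:1 figure is simply downstream lanes divided by upstream lanes. As a quick sketch, assuming the lane counts from QNAP's diagram (the dictionary keys are just our labels), the arithmetic looks like this. Comparing raw lane counts only works because every link here is the same PCIe generation.

```python
from fractions import Fraction

# Lane counts taken from QNAP's block diagram. All links are PCIe Gen2, so
# comparing raw lane counts is a fair proxy for comparing bandwidth.
DOWNSTREAM_LANES = {"AQC107 NIC": 2, "M.2 slot 1": 2, "M.2 slot 2": 2}
UPSTREAM_LANES = 4  # Gen2 x4 from the switch to the host


def oversubscription(downstream: dict[str, int], upstream: int) -> Fraction:
    """Total downstream lanes divided by upstream lanes (same generation assumed)."""
    return Fraction(sum(downstream.values()), upstream)


ratio = oversubscription(DOWNSTREAM_LANES, UPSTREAM_LANES)
print(f"{float(ratio)}:1")  # prints 1.5:1
```

A card whose uplink has as many lanes as all of its downstream devices combined would come out at 1:1, meaning no oversubscription.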

We also want to point out that this is PCIe Gen2, not Gen3 or now Gen4. On the server side, PCIe Gen3 became the standard in Q1 2012 with Sandy Bridge-EP Xeons, and we are seeing PCIe Gen4 arrive starting in Q3 2019. For the 10GbE connection, this is not a big deal. For the two NVMe SSDs, it is severely limiting. A PCIe Gen2 lane has about half the effective bandwidth of a Gen3 lane, since both the transfer rate (5 GT/s versus 8 GT/s) and the encoding (8b/10b versus 128b/130b) changed between these generations. As a result, a PCIe Gen2 x2 interface is roughly equivalent to a PCIe Gen3 x1 interface, while most NVMe SSDs today can saturate a PCIe Gen3 x4 interface.
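
To put numbers on the Gen2 versus Gen3 comparison, here is a rough sketch of effective per-lane bandwidth: transfer rate multiplied by encoding efficiency, divided by eight bits per byte. It deliberately ignores packet (TLP/DLLP) overhead, so treat the results as theoretical per-direction ceilings rather than what a benchmark will show.

```python
# Effective per-lane bandwidth = transfer rate x encoding efficiency / 8 bits per byte.
# Packet (TLP/DLLP) overhead is ignored, so real-world throughput will be lower.
GENERATIONS = {
    # generation: (transfer rate in GT/s, encoding efficiency)
    2: (5.0, 8 / 10),  # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}


def lane_mb_per_s(gen: int) -> float:
    """Theoretical MB/s per lane, per direction."""
    rate_gt_s, efficiency = GENERATIONS[gen]
    return rate_gt_s * efficiency * 1000 / 8


def link_mb_per_s(gen: int, lanes: int) -> float:
    return lane_mb_per_s(gen) * lanes


for gen, lanes in [(2, 2), (3, 1), (3, 4)]:
    print(f"PCIe Gen{gen} x{lanes}: ~{link_mb_per_s(gen, lanes):.0f} MB/s")
```

This prints roughly 1000 MB/s for Gen2 x2, 985 MB/s for Gen3 x1, and 3938 MB/s for Gen3 x4, which is where both the "Gen2 x2 is about Gen3 x1" and the "about one-quarter" figures come from.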

As a result, we have NVMe SSDs, but they do not run at full performance. Instead, they will run at about one-quarter of their sequential bandwidth. Further, if the network is also being used, we expect that they can get as little as 2/3 of a PCIe Gen3 x1 lane's bandwidth, since three Gen2 x2 devices share a host link that is only as wide as two of them. We wanted to test whether this is indeed the behavior.
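
One simple model for the 2/3 expectation is max-min fair sharing (water-filling) of the switch's uplink: every device gets at most what it asks for, and any capacity left over by lightly loaded devices is split among the rest. This is an assumption on our part. Real PCIe switch arbitration and protocol overhead will not behave exactly like this, and the function name and labels below are hypothetical.

```python
def max_min_fair_share(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Divide a shared link's capacity with max-min fairness (water-filling)."""
    allocation = {name: 0.0 for name in demands}
    remaining = dict(demands)
    left = capacity
    while remaining and left > 0:
        share = left / len(remaining)
        # Devices that want no more than an equal share get exactly what they ask for.
        satisfied = {name: d for name, d in remaining.items() if d <= share}
        if not satisfied:
            # Everyone left wants more than an equal share: split what is left evenly.
            for name in remaining:
                allocation[name] = share
            break
        for name, demand in satisfied.items():
            allocation[name] = demand
            left -= demand
            del remaining[name]
    return allocation


UPLINK_MB_S = 2000.0  # Gen2 x4 to the host, ignoring protocol overhead
DEVICE_CAP_MB_S = 1000.0  # each device is capped by its own Gen2 x2 link

ssd_alone = max_min_fair_share(UPLINK_MB_S, {"SSD 1": DEVICE_CAP_MB_S})
all_busy = max_min_fair_share(
    UPLINK_MB_S, {"NIC": DEVICE_CAP_MB_S, "SSD 1": DEVICE_CAP_MB_S, "SSD 2": DEVICE_CAP_MB_S}
)
print(ssd_alone)  # {'SSD 1': 1000.0}
print({name: round(mb, 1) for name, mb in all_busy.items()})  # 666.7 MB/s each
```

With one SSD alone, it gets its full Gen2 x2 link. With all three devices saturated, each gets about 667 MB/s, roughly two-thirds of a Gen3 x1 lane's ~985 MB/s.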

Testing the QNAP QM2-2P10G1TA Adapter

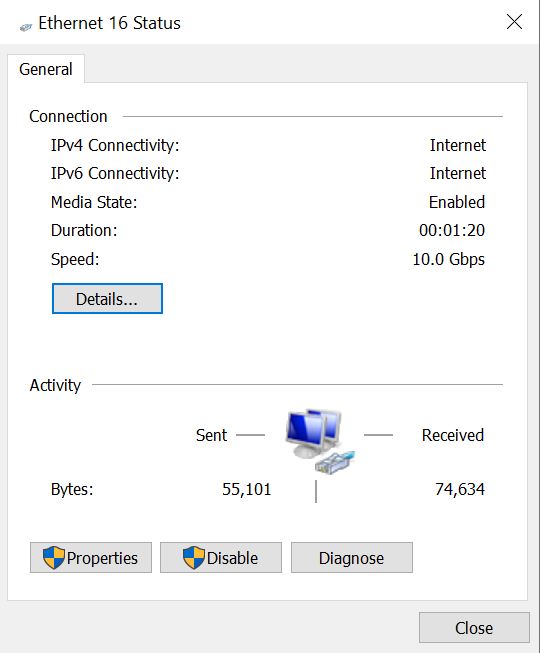

The Aquantia AQC107 10GbE network controller worked as expected and attained reasonable speeds in transfers to our NAS. The AQC107 is, in fact, the same controller found on the ASUS ROG Zenith II Extreme motherboard that we use as our testbed.

We saw identical performance to our standard Aquantia 10Gbase-T NICs when tested alone.

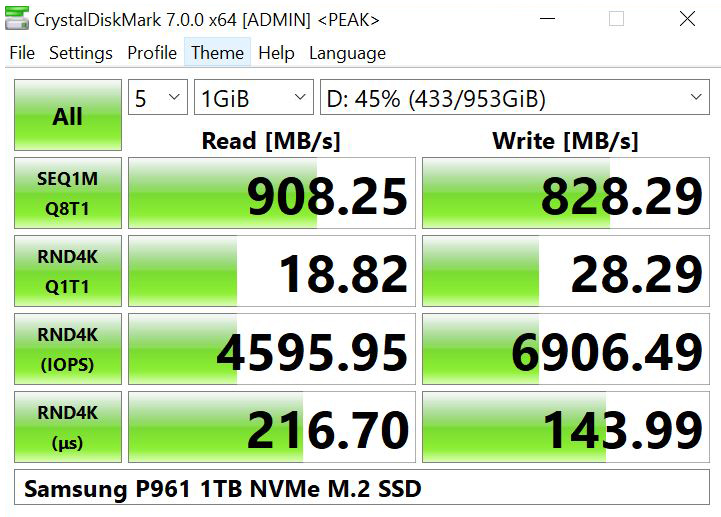

We installed one of our Samsung P961 1TB NVMe M.2 SSDs in one of the QNAP QM2-2P10G1TA M.2 slots and ran CrystalDiskMark with no second SSD installed and the network idle. We wanted to see the maximum, or best-case, performance of an SSD installed in the adapter.

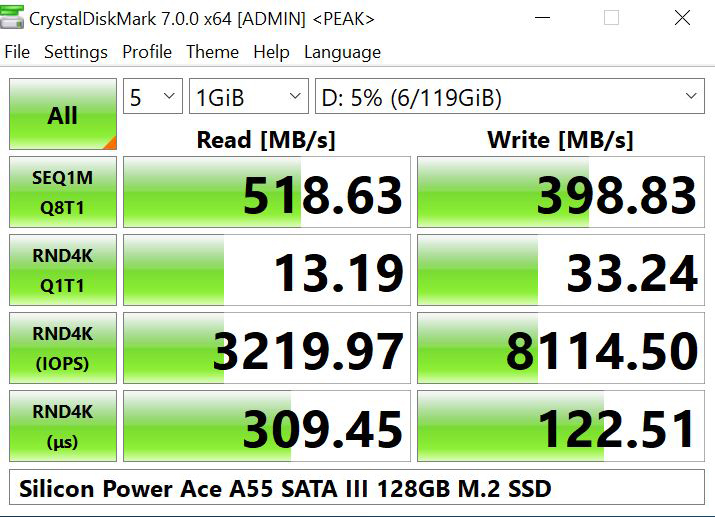

As one might expect, the results are lower than what we would generally see using the P961 in a PCIe Gen3 x4 slot. For some context, here is a SATA III SSD on the QNAP QM2-2S, a dual M.2 SATA SSD card without the 10GbE port:

The Silicon Power Ace SATA III M.2 SSD came close to its expected speeds. If one just needs SATA III storage, the QM2-2S makes an excellent expansion device.

A key takeaway is that the QNAP QM2-2P10G1TA does provide more performance than a SATA SSD solution. While the best-case NVMe performance is around a quarter of our drive’s potential, it is still faster than the SATA III 6.0Gbps interface can deliver.
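
The reason even a throttled NVMe link beats SATA III comes down to line-code arithmetic: SATA III runs at 6.0Gbps with 8b/10b encoding, and each PCIe Gen2 lane runs at 5 GT/s with the same encoding. The sketch below computes both theoretical ceilings and ignores protocol overhead on either side, so real drives land somewhat below these numbers.

```python
def usable_mb_per_s(line_rate_gbit: float, payload_bits: int, coded_bits: int) -> float:
    """Line rate after line-code overhead, in MB/s; protocol overhead is ignored."""
    return line_rate_gbit * payload_bits / coded_bits * 1000 / 8


sata3 = usable_mb_per_s(6.0, 8, 10)  # SATA III: 6.0Gbps with 8b/10b encoding
gen2_x2 = 2 * usable_mb_per_s(5.0, 8, 10)  # two PCIe Gen2 lanes, 5 GT/s each, 8b/10b

print(f"SATA III: ~{sata3:.0f} MB/s, PCIe Gen2 x2: ~{gen2_x2:.0f} MB/s")
print(f"Gen2 x2 ceiling is {gen2_x2 / sata3:.2f}x SATA III")
```

That works out to about 600 MB/s for SATA III versus about 1000 MB/s for a Gen2 x2 slot, roughly 1.67x, before any sharing with the other devices on the card.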

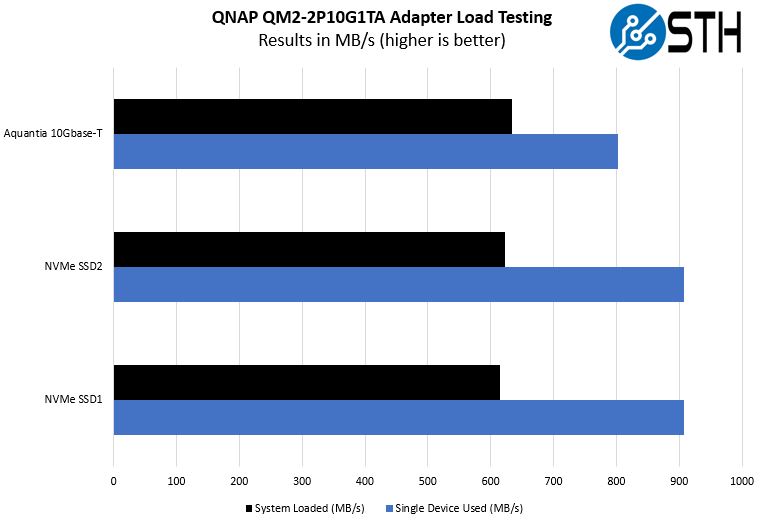

We then put two NVMe SSDs in the QM2-2P10G1TA and ran sequential read/write workloads on the card's SSDs while running the network adapter at full speed in an ASUS AMD Ryzen Threadripper 3960X system.

As you can see, the loaded test results were lower, but not exactly the 66% of full bandwidth that we had originally hypothesized.

Final Words

As one might have noticed, the QNAP QM2-2P10G1TA is a late-cycle product from QNAP. It desperately needs an update to PCIe Gen3 or Gen4. One nice feature we saw was the ability to use this card in everything from a QNAP NAS with QTier to an ASUS Threadripper 3960X workstation, a Supermicro 1U server, and an HPE MicroServer. QNAP did a great job making this a general-purpose architecture.

Performance-wise, this solution offers the unique capability of adding network and storage on a single card. Storage performance can be labeled as NVMe, but it is far from the performance we see in native PCIe 3.0 x4 slots, and it drops even more as the card is loaded. It seems to offer more than simply checking the boxes that a NAS supports NVMe and 10Gbase-T, but it is not the same as a PCIe Gen3 solution.

At under $250, the card is not exactly inexpensive. Purchasing a 10Gbase-T adapter plus a dual M.2 PCIe card (without a switch) can be done for a little over half of that amount. Still, when one has only a single PCIe slot, the solution is unique enough that this card remains useful.

For a home lab with ESXi on an older, low-end box, this would be a nice upgrade (albeit preferably at a lower price). I would lean toward the SATA version myself.

However, is this supported under ESXi?

New York has the right idea!

This needs DMA over the fabric though…

And an ARM core or two running Linux SDN!

Hi, is it OK to use this card in a Synology DS1819+?

Thanks for the answer

Glad to see that STH did a review on this. Great job, guys!

As newyork10023 pointed out, I would like to see this card tested with ESXi. We know that it will never be on an HCL, but a card like this could be a game changer in breathing new life into older equipment that only has one PCI-E slot for expansion.

@Eric: The card’s switch will present the NVMe drives and the AQC107 NIC directly to the OS as discrete PCIe devices. Given that Aquantia provides drivers for ESXi, there is no reason that it would not work.

I bought two of the PCIe 3.0 versions of this card (QNAP QM2-2P-384), without the 10GbE NIC, to put into an IBM X3750 M4. I put two Sabrent NVMe SSDs in each card. They present themselves as native devices to bare metal, with no drivers needed in ESXi. The performance on the PCIe 3.0 cards is great: the QNAP card itself has an x8 link, so each NVMe SSD gets a full x4 worth of bandwidth.

“We saw identical performance to our standard Aquantia 10Gbase-T NICs when tested alone.”

Is this really the case? I tested this card and iperf3 only gave me 6.66 Gbps. A similar report (6.x Gbps) can be found for an earlier version (QM2-2P10G1T) of this card: https://www.mobile01.com/topicdetail.php?f=494&t=5482371

The official QNAP page for this card (https://www.qnap.com/en-us/product/qm2-m.2ssd-10gbe) lists a speed of ~820 MB/s and notes: “The M.2 interface and 10GbE connectivity of QM2-2P10G1TA shared the same bandwidth. To get complete 10GbE performance, please choose QNAP 10GbE NICs or QM2-2S10G1TA.”

Considering this card is PCIe Gen2 x4, is it the case that total bandwidth is divided by 3, hence the saturation at 6.66 Gbps?

In any case, could you please verify the NIC performance of this card? Otherwise it’s a bit misleading to state that it has the same performance as a standard AQC107 card, which does indeed get 10 Gbps.