The NVIDIA GRID M40 is perhaps one of the most interesting cards we have come across in the past few months. From what we can tell, it is a part in the GRID line that not even NVIDIA GRID support seems to know about yet. We are unsure if it is simply a special order part or if it is an unreleased SKU. What we do know is that these cards are out there, in droves. The STH data center lab already has a dozen of these cards. It is not listed on the NVIDIA GRID site. It is not the recently launched Tesla M40 (despite NVIDIA support telling us that is exactly what these cards are). As with many systems and parts before, we are going to do a full knowledge dump on the NVIDIA GRID M40 cards after hours of testing them. If you do want to play with these cards, we have resources on how to get them to work. If you are not a huge volume NVIDIA customer, you can get the cards via an NVIDIA GRID M40 eBay search.

What is the NVIDIA GRID M40?

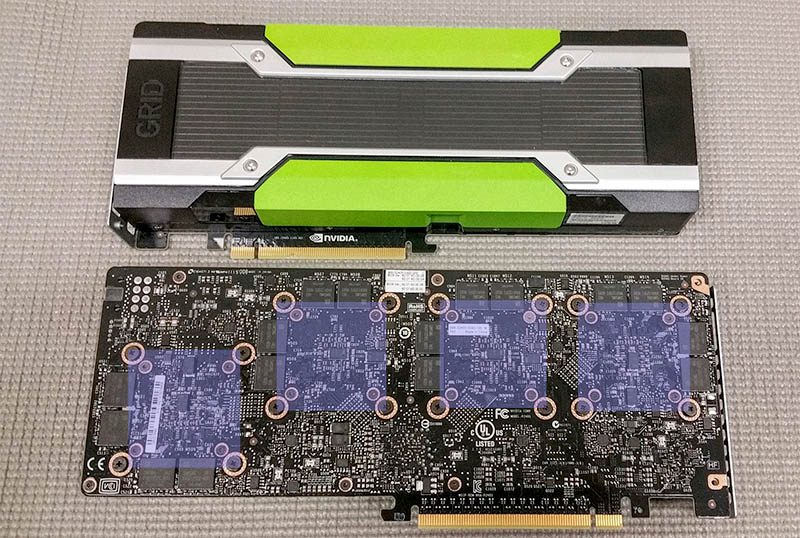

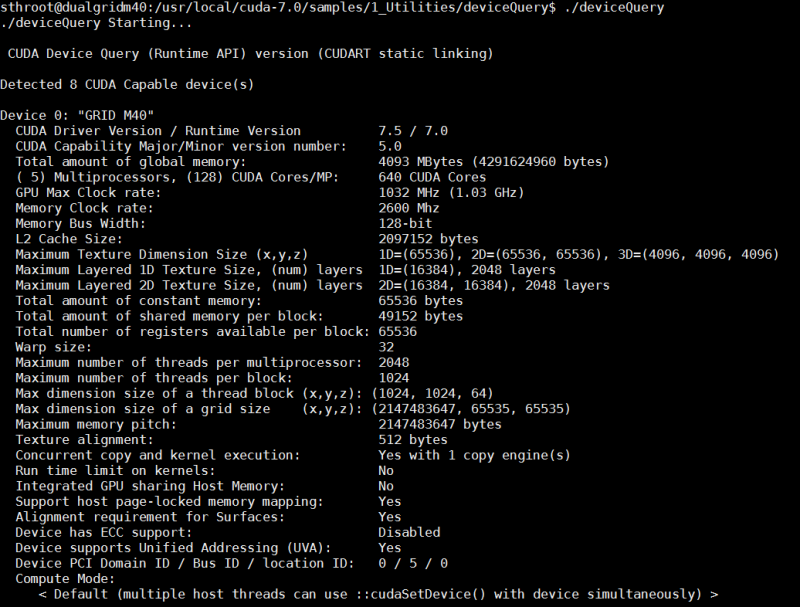

Architecturally, we know the NVIDIA GRID M40 is built from four GM107L/GM107GL GPUs. Each of the four GPUs is roughly equivalent to an NVIDIA Quadro K2200. Each GPU has 4GB of DRAM for a total of 16GB on the card. Each GPU also has 640 CUDA cores (a total of 2560 on the card) and a clock speed around 1032MHz. You can see the GRID branding, and the four GPUs and their associated memory are clearly visible from the back of the PCB.

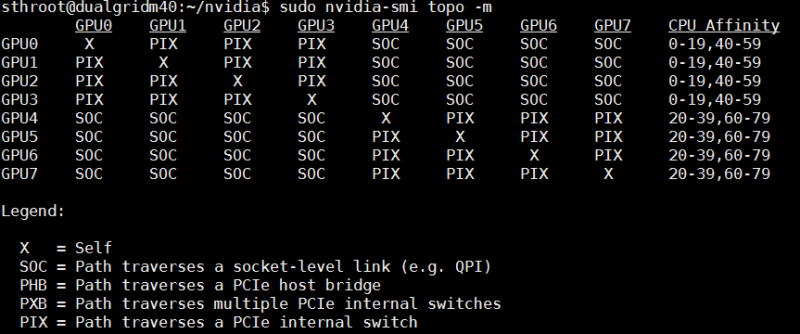

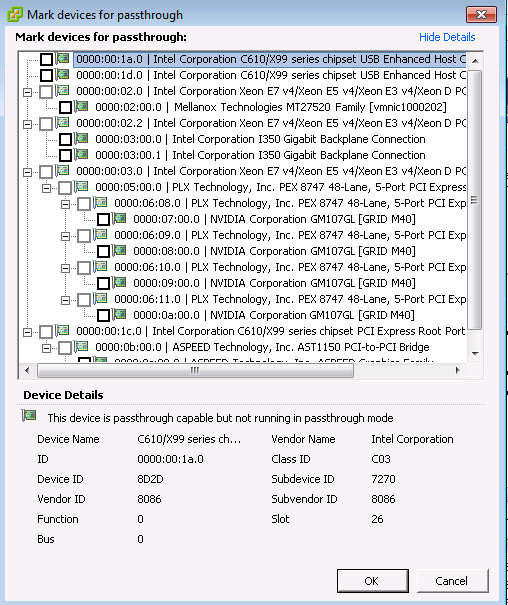

Even without removing the heatsink, one can see there are spots for four GPUs on the card and associated memory. We can see from the system device output that there are PLX switches onboard each card. Here is the nvidia-smi topo -m output with two of these cards, each attached to a Xeon CPU in a Supermicro SuperBlade GPU node:

As one can see, there is an internal PCIe switch on each card denoted with “PIX” in the above map.
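If you want to check the same thing on your own system, the matrix printed by nvidia-smi topo -m can be grouped by its PIX entries. This is a minimal sketch, not a robust parser: it assumes the plain whitespace-separated layout (a header row of GPU names, then one row per GPU), and the helper name pix_groups is ours. Newer drivers may decorate the header or add columns, so treat the parsing as an assumption to verify against your own output.

```python
def pix_groups(matrix_text):
    """Group GPUs that reach each other through a single PCIe switch (PIX).

    Assumes the first non-empty line is the header row and that each GPU
    row lists one code per GPU column, in header order.
    """
    lines = [line for line in matrix_text.splitlines() if line.strip()]
    if not lines:
        return []

    # Column names: only the GPUn tokens, ignoring e.g. "CPU Affinity".
    columns = [tok for tok in lines[0].split() if tok.startswith("GPU")]

    groups = set()
    for line in lines[1:]:
        tokens = line.split()
        if not tokens or tokens[0] not in columns:
            continue  # legend lines, NIC rows, blank lines
        codes = tokens[1:1 + len(columns)]
        peers = {tokens[0]}
        peers.update(col for col, code in zip(columns, codes) if code == "PIX")
        groups.add(frozenset(peers))

    # Sorted lists make the result stable and easy to compare.
    return sorted(sorted(group) for group in groups)
```

With two GRID M40 cards installed, one would expect two groups of four GPUs each, one group per card, if the onboard switch is reported as PIX as described above.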

CUDA Support

As a first generation Maxwell part, we can see that it supports CUDA compute capability 5.0. That is a generation newer than the older Kepler parts, which offer only CUDA compute capability 3.x support.

You can certainly run a variety of CUDA applications and, for those wondering, we have passed GPUs through to the GPU-enabled TensorFlow Docker containers. Using that setup, we were able to pass each GPU through to its own Docker container. As we have seen with the Fermi-based Tesla M2090 family, which only supports CUDA compute capability 2.0, machine learning software is evolving past older generations of CUDA compute. That is a big reason you can find a Fermi-based flagship Tesla M2090 for under $100 on the secondary market today.
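As a rough illustration of the one-GPU-per-container idea, the sketch below only builds the command lines, one per GPU. It assumes a recent Docker with the NVIDIA Container Toolkit and its --gpus flag, which is not necessarily the tooling used for the tests in this article. The image tag tensorflow/tensorflow:latest-gpu and the helper name per_gpu_docker_commands are illustrative choices.

```python
def per_gpu_docker_commands(gpu_count, image="tensorflow/tensorflow:latest-gpu"):
    """Return one `docker run` argument list per GPU index.

    Each container is restricted to a single GPU via --gpus device=N
    (assumes the NVIDIA Container Toolkit is installed on the host).
    """
    commands = []
    for index in range(gpu_count):
        commands.append([
            "docker", "run", "--rm",
            "--gpus", f"device={index}",
            image,
            "nvidia-smi", "-L",  # list the GPU(s) visible inside the container
        ])
    return commands


# One GRID M40 card exposes four GPUs, so this yields four commands.
card_commands = per_gpu_docker_commands(4)
```

Each list can be handed to subprocess.run on a host with Docker and the NVIDIA runtime. If the isolation works, every container should list exactly one GPU.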

Where can we see this part even exists?

Finding information on the NVIDIA GRID M40 usually means you end up either finding GRID cards (e.g. the GRID M6 and GRID M60) or the new Tesla M40. We have found a precious few bits of information on the web about these cards. Even NVIDIA GRID pre-sales support will tell you an M40 is a Tesla card.

- We found the UL part listed here as the P2405 under Accessory – Graphics Cards

- HPE has the card partially listed here, at the very bottom, as “NVIDIA GRID M40 Quad G” – we assume this should read something like “Quad GPU” but it stops with the G

- Pictures! We have a bunch of these cards in-hand:

Or if you prefer a more orderly layout:

Other than that, we have gotten very little information about these cards from NVIDIA.

Power Consumption

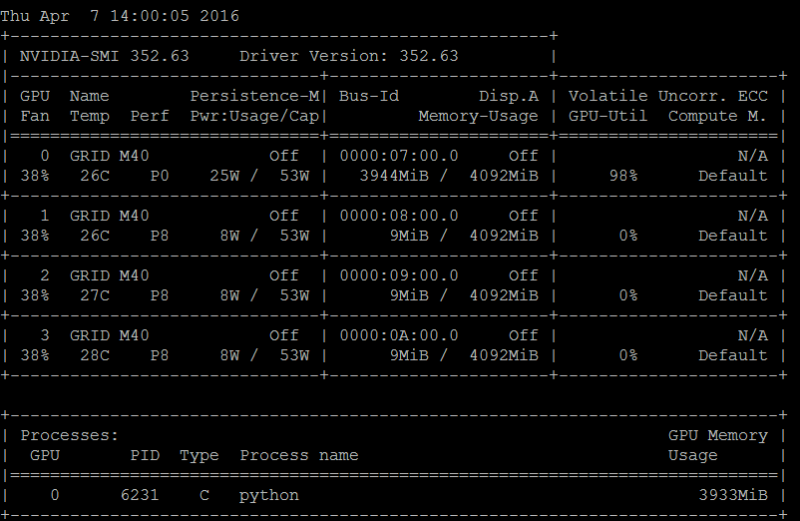

From what we have been seeing in the GPU blades when running CUDA workloads that show 98% GPU utilization, we are seeing around 25-30W per GPU. We think the 120-140W range maxed out is fair for these cards, making them a fairly low power 5.4 TFLOPS (single precision) option. That compares well even to the new Tesla M4, which provides around 4.4 single precision TFLOPS across two cards in that same power consumption window.
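For readers who want to sanity check the throughput figure, here is a back-of-the-envelope sketch. It assumes the usual convention of two single precision FLOPs per CUDA core per clock (one fused multiply-add) and uses the roughly 1032MHz clock quoted earlier, so it is a theoretical peak rather than a measurement. The function names are ours.

```python
CUDA_CORES_PER_GPU = 640   # from the specifications above
GPUS_PER_CARD = 4
CLOCK_MHZ = 1032           # approximate clock quoted above


def peak_tflops(cuda_cores, clock_mhz, flops_per_core_per_cycle=2):
    """Theoretical single precision peak in TFLOPS.

    Assumption: each core retires one fused multiply-add (2 FLOPs) per cycle.
    """
    return cuda_cores * clock_mhz * 1e6 * flops_per_core_per_cycle / 1e12


def gflops_per_watt(tflops, watts):
    """Efficiency in GFLOPS per watt for a given board power."""
    return tflops * 1000 / watts


card_tflops = peak_tflops(CUDA_CORES_PER_GPU * GPUS_PER_CARD, CLOCK_MHZ)
best_case = gflops_per_watt(card_tflops, 120)   # low end of the power estimate
worst_case = gflops_per_watt(card_tflops, 140)  # high end of the power estimate
```

At 1032MHz this works out to roughly 5.3 TFLOPS per card, in the same neighborhood as the figure quoted above. The exact number depends on which clock speed you assume.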

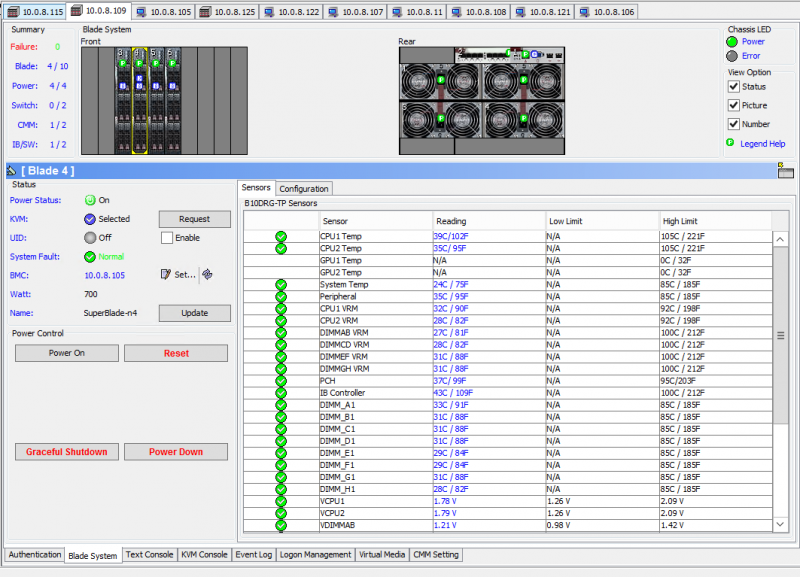

Just to give you an idea of how efficient these are, we have our Supermicro SuperBlade system with 6x GPU compute nodes, each with two of these cards active. Our blade chassis has:

- 8x Intel Xeon E5-2698 V4’s (in 4 nodes)

- 4x Intel Xeon E5-2690 V3’s (in 2 nodes)

- A total of 768GB of DDR4 RDIMMs (in 48x 16GB RDIMMs)

- 12x Quad GPU NVIDIA GRID M40 cards (2 per node)

- A 10GbE chassis switch

- 6x 32GB SATA DOMs

- 6x Intel S3700 400GB SSDs
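To put the list above in perspective, the totals can be tallied with a few lines of arithmetic. All inputs come from figures in this article (four GPUs per card, 640 CUDA cores and 4GB per GPU, 48x 16GB RDIMMs). The constant and function names are ours.

```python
# Figures from the article; names are illustrative.
GPU_NODES = 6
CARDS_PER_NODE = 2
GPUS_PER_CARD = 4
CUDA_CORES_PER_GPU = 640
GPU_MEMORY_GB = 4
RDIMM_COUNT = 48
RDIMM_SIZE_GB = 16


def chassis_totals():
    """Aggregate GPU and memory resources across the whole blade chassis."""
    cards = GPU_NODES * CARDS_PER_NODE
    gpus = cards * GPUS_PER_CARD
    return {
        "cards": cards,
        "gpus": gpus,
        "cuda_cores": gpus * CUDA_CORES_PER_GPU,
        "gpu_memory_gb": gpus * GPU_MEMORY_GB,
        "system_memory_gb": RDIMM_COUNT * RDIMM_SIZE_GB,
    }


totals = chassis_totals()
```

That comes to 48 GPUs in a single chassis, which is why the aggregate power numbers are worth a close look.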

Here is a visual representation of our setup.

And a view of one of the nodes we have running:

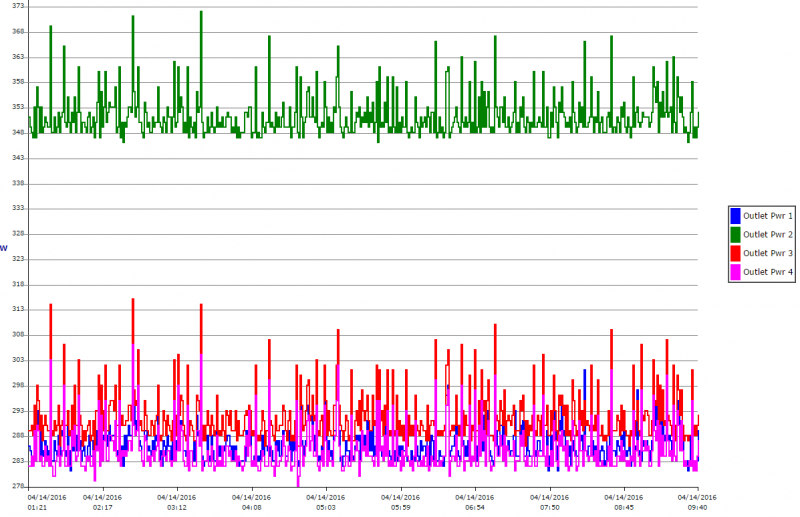

Power consumption is awesome, even while we have folks working on getting these cards running with different machine learning frameworks. Here is our calibrated Schneider Electric/APC PDU output (208V) for each power outlet connected to the Supermicro SuperBlade’s four 3000W power supplies.

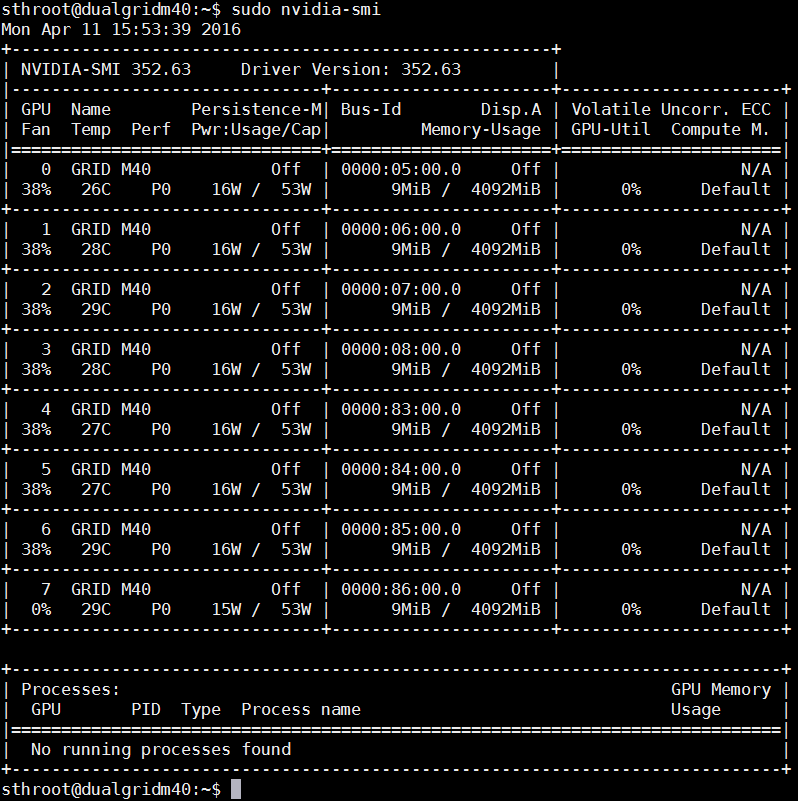

In aggregate, we are seeing power consumption in the 1200W to 1400W range. Since we have many people logged into the blade chassis using many GPUs (not all at 100% all the time), it is a bit harder to see the actual power consumption of a given GPU. Here is what idle looks like:

Here is the power consumption on a single NVIDIA GRID M40 node running a Theano task at 98% utilization:

As you can see, very low power consumption indeed.
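If you want to collect per-GPU numbers like these yourself, nvidia-smi can emit utilization and power draw as CSV. The sketch below is one way to read that output, assuming the driver exposes the power.draw field for these GPUs. Some boards report a placeholder such as "[Not Supported]" instead, which this code maps to None. The function names are ours.

```python
import csv
import io
import subprocess

# Query fields documented by `nvidia-smi --help-query-gpu`.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,utilization.gpu,power.draw",
    "--format=csv,noheader,nounits",
]


def _to_float(text):
    """Convert a numeric field, returning None for placeholders like [N/A]."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_gpu_power(csv_text):
    """Parse the CSV produced by QUERY into a list of per-GPU dicts."""
    rows = []
    for record in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(record) != 4:
            continue  # skip blank or malformed lines
        index, name, util, power = (field.strip() for field in record)
        rows.append({
            "index": int(index),
            "name": name,
            "util_pct": _to_float(util),
            "power_w": _to_float(power),
        })
    return rows


def read_gpu_power():
    """Run nvidia-smi on this host and return the parsed readings."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_power(result.stdout)
```

Calling read_gpu_power() in a loop while a job runs gives a simple log of utilization against watts per GPU, which is easier to attribute to a single card than the chassis-level PDU readings.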

What about VDI? GRID is a VDI specific part, right?

One of the big questions we had was, as a GRID part, how would these work with VDI? We set up blades for VMware, Citrix and Microsoft solutions (Windows Server 2012 R2 and 2016) to test this. There were really two questions:

- Will they work with vSGA and vDGA?

- Do you need the very pricey licensing of the GRID M6 and GRID M60 or is it the less restrictive model we saw with the GRID K1 and GRID K2?

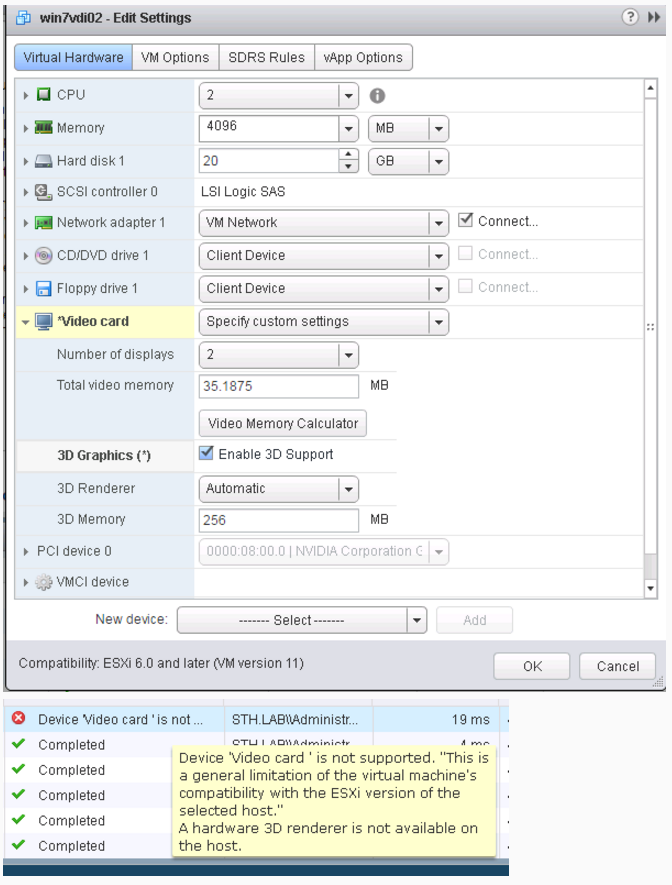

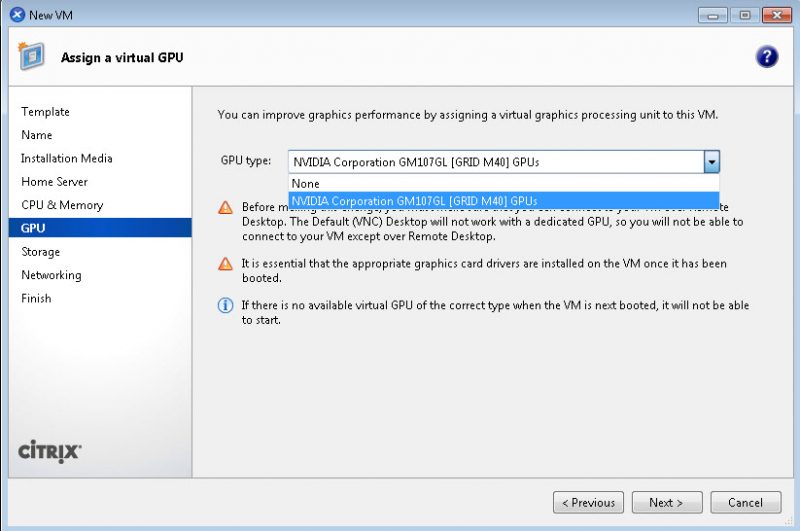

The answer to both questions was a complete dearth of driver support. In VMware, the NVIDIA GM107GL GRID M40 GPUs show up, as do the PEX 8747 PLX switches, and VT-d pass-through is available:

But vDGA does not work.

Citrix is having issues installing the drivers for these into the Windows VMs.

The Windows driver installation itself fails due to lack of support for the card.

We are still trying to get the NVIDIA mode switching utility on one of these nodes to see if that will fix our current issues, but we suspect we need a special driver from NVIDIA:

Our take right now is that VDI on these cards will require a breakthrough in terms of software support.

How can you get one?

Probably the easiest way to get one, assuming you are buying hundreds or thousands of cards, is to contact your NVIDIA sales rep directly. It seems like NVIDIA is not interested in small orders of 1-2 cards at this point. If you are not buying that many GPUs (and maybe even if you are), the best way we can tell you to get one of these cards is via an eBay search. There are a slew of HP branded NVIDIA GRID M40s available from decommissioned servers. Here is the eBay search that will find them.

More information and where to get cards we used

You can find more information about these cards on STH in a number of places:

- Our nvidia-smi BIOS settings piece on how to set BIOS for running one or more of these cards

- Our official NVIDIA GRID M40 Forum Thread

- You will also want to consult your system vendor for appropriate BIOS settings (often, if you look for the GRID K1 settings, these will work for the GRID M40s as well)

These are very hard cards to get. The easiest place to find them right now is on eBay. Here is a GRID M40 eBay search.

Final Thoughts

The NVIDIA GRID M40 is a really interesting card for a number of reasons. Without driver support, VDI on the cards is challenging at best. On the other hand, if you were looking to build a low power server with 4 or 8 GPUs either for CUDA/ machine learning applications (e.g. to test multi-GPU scaling) or to do video transcoding (each GPU can support hardware encoding), then this is an extremely interesting piece of hardware. We are going to keep an official NVIDIA GRID M40 Forum Thread open on these cards. If you think you have a way to get the VDI drivers for vGPU working on a virtualization platform, we can probably arrange getting you on a system with these cards for testing for a short period of time.

http://nvidianews.nvidia.com/news/nvidia-grid-delivers-100-graphics-accelerated-virtual-desktops-per-server

Nvidia Tesla M10 announced.

Hey. Wondering if there has been any breakthrough on these cards for VDI. A client of mine picked up 4 of them without doing any research and he’s stuck with them…

Some in our forums have found drivers.

I found that the 362.27_HpISS_WinServer2012-64bit.exe driver from Hewlett Packard listed for the Quadro K2200 works on Windows 8.1, and it proved to be 4x faster than my GTX 770 and Quadro K2200 at rendering in Blender 2.79b. Refurbished from flash.newegg.com for $200 seems like a great deal. There are lots of developer tools at NVIDIA if you’re wired for the higher cerebral challenges in the Linux realm. It seems NVIDIA doesn’t support the card on the enterprise end, but I put the 2500 or so CUDA cores to good use rendering 3D content. I would love that Gigabyte water cooled rig, but until then this M40 will do nicely.

This one. 2012 server and Windows 8.1

https://support.hpe.com/hpsc/swd/public/detail?swItemId=MTX_9ab4560fd7694b6cb2bcddbc82