MLPerf is moving to more domain-specific models. We have already covered MLPerf 0.7 Training and MLPerf 0.7 Inference. While those suites focus on commercial workloads such as vision and natural language processing, MLPerf HPC Training focuses on HPC workloads. The new benchmark changes the rules to incorporate I/O as an element of the solution. HPC datasets are large, so the benchmark counts the time spent on tasks such as staging data, using 5-9TB data sets. The result is a new class of MLPerf results designed for HPC.

MLPerf HPC Training v0.7 Benchmarks

Since MLPerf HPC Training v0.7 is a new project, we wanted to discuss what the actual benchmarks are, especially given that there are only two. Here is the description we received from MLPerf:

The first version of MLPerf HPC includes two new benchmarks:

- CosmoFlow: A 3D convolutional architecture trained on N-body cosmological simulation data to predict four cosmological parameter targets.

- DeepCAM: A convolutional encoder-decoder segmentation architecture trained on CAM5+TECA climate simulation data to identify extreme weather phenomena such as atmospheric rivers and tropical cyclones.

The MLPerf HPC Benchmark Suite was created to capture characteristics of emerging machine learning workloads on HPC systems such as large scale model training on scientific datasets. The models and data used by the HPC suite differ from the canonical MLPerf training benchmarks in significant ways. For instance, CosmoFlow is trained on volumetric (3D) data, rather than the 2D data commonly employed in training image classifiers. Similarly, DeepCAM is trained on images with 768 x 1152 pixels and 16 channels, which is substantially larger than standard vision datasets like ImageNet.

Both benchmarks have massive datasets – 8.8 TB in the case of DeepCAM and 5.1 TB for CosmoFlow – introducing significant I/O challenges that expose storage and interconnect performance. The rules for MLPerf HPC v0.7 follow very closely the MLPerf Training v0.7 rules with only a couple of adjustments. For instance, to capture the complexity of large-scale data movement experience for HPC systems, all data staging from parallel file systems into accelerated and/or on-node storage systems must be included in the measured runtime. (Source: MLPerf)
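To put the figures quoted above in perspective, here is a rough back-of-envelope sketch. The 224 x 224 x 3 ImageNet-style crop, the use of decimal terabytes, and the 100 GB/s sustained read bandwidth are our own illustrative assumptions, not numbers from MLPerf or from any submitted system.

```python
# Back-of-envelope arithmetic for the sample and dataset sizes quoted above.
# Assumptions (not from MLPerf): a 224 x 224 x 3 ImageNet-style crop,
# decimal terabytes, and a hypothetical sustained read bandwidth.


def values_per_sample(height: int, width: int, channels: int) -> int:
    """Number of scalar values in one dense sample."""
    return height * width * channels


def staging_seconds(dataset_tb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized time to copy a dataset once at a sustained bandwidth."""
    return dataset_tb * 1000.0 / bandwidth_gb_per_s


deepcam = values_per_sample(768, 1152, 16)
imagenet_crop = values_per_sample(224, 224, 3)  # assumed crop size
print("DeepCAM values per sample:", deepcam)
print("Assumed ImageNet crop values per sample:", imagenet_crop)
print("Ratio:", round(deepcam / imagenet_crop, 1))

ASSUMED_BANDWIDTH_GB_PER_S = 100.0  # hypothetical, for illustration only
for name, size_tb in (("DeepCAM", 8.8), ("CosmoFlow", 5.1)):
    seconds = staging_seconds(size_tb, ASSUMED_BANDWIDTH_GB_PER_S)
    print(f"{name}: about {round(seconds)} s to stage at the assumed bandwidth")
```

Under these assumptions, a single DeepCAM sample carries roughly 94 times as many values as the assumed ImageNet crop. The staging estimate is an idealized lower bound; real systems also pay for metadata operations and contention, which is part of what the new rule is meant to expose.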

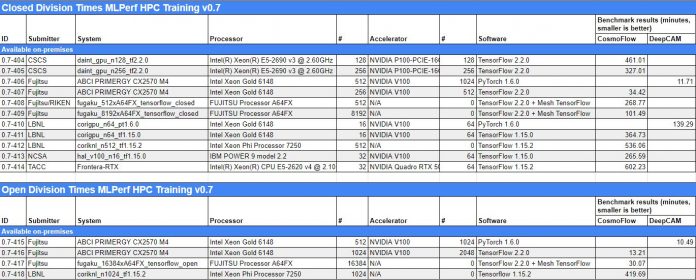

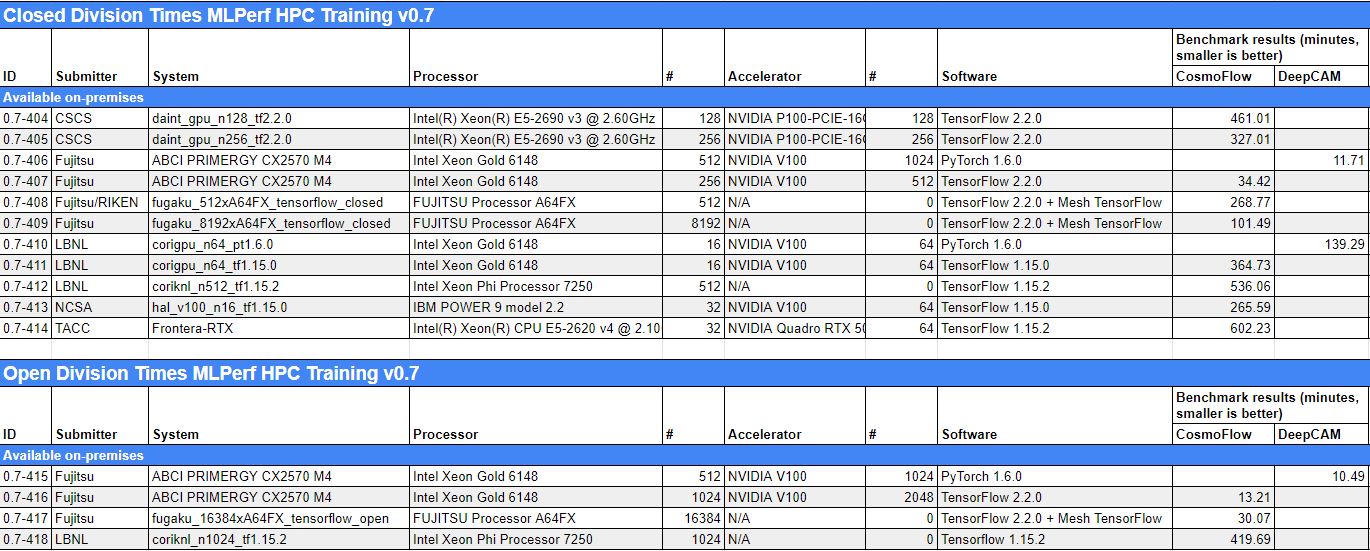

MLPerf HPC Training v0.7 Results

In terms of results, very few systems have run the benchmark, but they include a portion of Supercomputer Fugaku, the top supercomputer in the world. There is also some legacy hardware in the mix, including Xeon Phi.

We normally would do a deeper analysis, but with fairly sparse results, it likely makes sense just to look at the raw data here.

There is likely a good reason for the sparse results. The organizations that did submit likely had to divert computing time away from critical healthcare research to run the new benchmark. Since this is a new benchmark, and there are pressing problems for some of the world’s largest machines to work on, some supercomputing centers likely preferred to dedicate compute time to research rather than to a new benchmark at this juncture.

Final Words

Perhaps the first question one will have is “why?” Why do we need an HPC training benchmark? There are probably a few reasons. First, HPC workloads are different. Second, top-tier HPC and AI clusters are different, so an HPC center being out-performed by cloud HPC could present challenges. Perhaps the biggest reason is the hope that this becomes some sort of validation criterion for the industry if RFPs target traditional HPC as well as AI workloads.

The MLPerf community is deciding whether to publish these results annually or on an approximately 6-month cadence around ISC and SC, like many other industry benchmarking efforts. Although this does not necessarily inform what an organization should purchase today, we can see it being a part of future RFP processes.