In a previous article, I tested the LSI 9202-16e and pronounced it the fastest host bus adapter that money can’t buy, with an AMD server pushing the card to over 5,200MB/second. Patrick then pried one apart for your enjoyment. Conspicuously missing, however, was any indication of how much throughput the card could muster on the latest Intel Xeon E5 servers. The reason was simple: our dual Intel Xeon test system at the time had only x8 PCIe slots. In this update, we remedy that by introducing a new Intel Xeon E5 test server and pushing the LSI 9202-16e further than ever before.

As a quick recap, the LSI 9202-16e is a rather unusual HBA. It combines two LSI SAS 2008 chips with a PCIe switch, and the whole package occupies a PCIe x16 slot, whereas most storage controllers use a PCIe x8 slot. Effectively, it takes two LSI SAS 2008 based HBAs, such as the LSI 9200-8e, and puts them on one PCB. For those looking to fit as many controllers as possible into a single system, the LSI 9202-16e doubles density. As Pieter noted in his recent piece on LSI HBA and RAID controller power consumption, the LSI 9202-16e also draws about twice as much power as the LSI 9200-8e.

Benchmark Configuration – Revised

In the first round of testing, we paired the LSI 9202-16e with two large multi-CPU rackmount servers: a 48-core quad-CPU AMD monster and an Intel Xeon E5 server with a pair of the fastest Intel Xeon server CPUs available, the 2.9GHz 8-core, 16-thread Intel Xeon E5-2690. The Xeon's impressive PCIe architecture pushed the LSI card to its rated 700,000 IOPS and beyond, doubling what the AMD could muster. Yet it was the AMD, the only one of the two servers with an x16 PCIe slot, that took top honors for total throughput at 5,250MB/second.

Now Intel is back for a rematch, and this time it brings an x16 slot. Our new dual Intel Xeon E5 test server is based on the Supermicro X9DR7-LN4F motherboard that Patrick reviewed last week. Its PCIe 3.0 x16 slots promise double the theoretical bandwidth of the prior Intel Xeon server's x8 slot. Because the LSI 9202-16e is a PCIe 2.0 card, the link still runs at PCIe 2.0 speeds, so the gain comes entirely from the doubled lane count.
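The "double the theoretical bandwidth" claim follows from simple per-lane arithmetic. The sketch below is a back-of-the-envelope calculator, assuming the commonly cited signaling rates (2.5, 5 and 8 GT/s for PCIe 1.0, 2.0 and 3.0) and line-code overheads (8b/10b for the first two, 128b/130b for 3.0). It ignores packet and protocol overhead, so real links deliver less. The function name `pcie_bandwidth_mb_s` is purely illustrative.

```python
# Theoretical one-direction PCIe bandwidth after line encoding only.
# Packet/protocol overhead (TLP headers, flow control) is ignored,
# so achievable throughput is lower than these figures.
ENCODING = {
    # generation: (transfer rate in GT/s, line-code efficiency)
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
}


def pcie_bandwidth_mb_s(gen: int, lanes: int) -> float:
    """Return theoretical MB/s (10**6 bytes) for one direction of a link."""
    rate_gt_s, efficiency = ENCODING[gen]
    bits_per_second = rate_gt_s * 1e9 * efficiency * lanes
    return bits_per_second / 8 / 1e6


if __name__ == "__main__":
    print(f"PCIe 2.0 x8:  {pcie_bandwidth_mb_s(2, 8):,.0f} MB/s")
    print(f"PCIe 2.0 x16: {pcie_bandwidth_mb_s(2, 16):,.0f} MB/s")
```

For a PCIe 2.0 link this works out to about 4,000MB/second at x8 and 8,000MB/second at x16, which is why the old server's x8 slot capped the card well below what the x16 configuration can reach.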

In the lab, we shoehorned the new motherboard into a 24-bay Supermicro SC216 chassis. The motherboard fits, but the configuration is not ideal: the three-fan SC216 simply has too little cooling for this much CPU horsepower. Fortunately, CPU utilization usually stayed under 2% during our tests and never exceeded 11%, so heat was never an issue.

As before, the card is paired with 16 SSDs: OCZ Vertex 3 120GB drives for most tests and Samsung 830 128GB drives for a few others. The drives are initialized but unformatted to ensure that no operating system caching takes place. All tests run against the 16 separate drives simultaneously; we’re here to test LSI card throughput, not RAID implementation efficiency.

Supermicro Server Details:

- Server chassis: Supermicro SC216A

- Motherboard: Supermicro X9DR7-LN4F

- CPU: 2x Intel Xeon E5-2690 2.9GHz

- RAM: 128GB (16x8GB) DDR3 1333 ECC

- OS: Windows Server 2008 R2 SP1

- Host Bus Adapter: LSI 9202-16e (PCIe 2.0 x16)

- Disk Drives: 16x OCZ Vertex 3 120GB or Samsung 830 128GB drives

Benchmark Software:

- Iometer 1.1.0-RC1 (64-bit) for Windows

- Test setup varies by test – see below

LSI 9202-16e Throughput Results

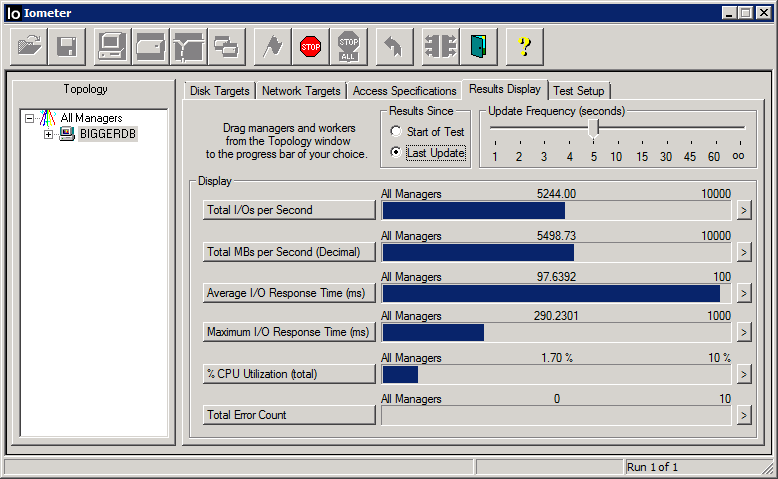

In the earlier round of testing, the AMD server pulled an impressive 5,250MB/second performing 1MB sequential reads, as in a database scenario. The original Xeon server, limited by its x8 slot, topped out at 3,583MB/second, a number I did not report in the original article. The new Supermicro PCIe x16 Xeon server settles the issue once and for all, achieving 5,499MB/second for 1MB sequential reads. Note the extremely low CPU utilization: just 1.7%.
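To put the three 1MB sequential-read results side by side, the sketch below computes the new server's relative gain and how much of each slot's theoretical PCIe 2.0 bandwidth was used. The link ceilings (4,000 and 8,000MB/second for x8 and x16) are an assumption based on 5 GT/s per lane with 8b/10b encoding and no protocol overhead. The helper names are illustrative only.

```python
# 1MB sequential-read results quoted in this article, in MB/second.
RESULTS = {
    "AMD (x16)": 5250,
    "Old Xeon (x8)": 3583,
    "New Xeon (x16)": 5499,
}

# Assumed theoretical PCIe 2.0 ceilings in MB/second (10**6 bytes),
# after 8b/10b encoding but before packet/protocol overhead.
LINK_CEILING = {"x8": 4000, "x16": 8000}


def pct_gain(new: float, old: float) -> float:
    """Percentage improvement of `new` over `old`."""
    return (new / old - 1) * 100


def link_utilization(measured: float, slot: str) -> float:
    """Measured throughput as a percentage of the slot's theoretical ceiling."""
    return measured / LINK_CEILING[slot] * 100


if __name__ == "__main__":
    new = RESULTS["New Xeon (x16)"]
    print(f"vs old Xeon: +{pct_gain(new, RESULTS['Old Xeon (x8)']):.1f}%")
    print(f"vs AMD:      +{pct_gain(new, RESULTS['AMD (x16)']):.1f}%")
    print(f"Old Xeon x8 link use:  {link_utilization(RESULTS['Old Xeon (x8)'], 'x8'):.1f}%")
    print(f"New Xeon x16 link use: {link_utilization(new, 'x16'):.1f}%")
```

On these assumptions the new server beats the old x8 Xeon by roughly 53% and the AMD by under 5%. The x8 result used nearly 90% of its link, while the x16 result uses under 70%, leaving room for the further gains described below.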

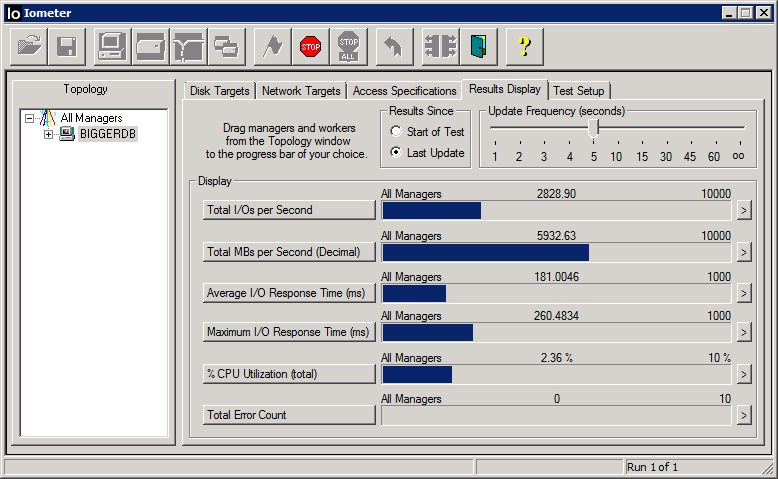

Impressive results, but we can do better. Throughput peaks when we set Iometer to read in 2MB chunks and pin the Iometer engine (dynamo.exe) to a single CPU using the Windows CPU affinity feature. The result? 5,932MB/second with one Iometer worker. Without pinning Iometer to one CPU, the result is still a record-setting 5,610MB/second.
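The article does not say exactly how dynamo.exe was pinned. One scriptable route, assuming you launch dynamo.exe yourself rather than letting the Iometer GUI start it, is the `start /affinity <hex mask>` option of cmd.exe, available on Windows 7 and Server 2008 R2. In the mask, bit n selects logical CPU n. The sketch below only builds that mask and command line; `affinity_mask` and `start_command` are hypothetical helpers, not part of Iometer.

```python
def affinity_mask(cpus: list[int]) -> int:
    """Bitmask with bit n set for each logical CPU index n in `cpus`."""
    mask = 0
    for cpu in cpus:
        if cpu < 0:
            raise ValueError("CPU index must be non-negative")
        mask |= 1 << cpu
    return mask


def start_command(program: str, cpus: list[int]) -> str:
    """Build a cmd.exe 'start /affinity' line; the mask is written in hex."""
    return f"start /affinity {affinity_mask(cpus):X} {program}"


if __name__ == "__main__":
    # Pin the (hypothetically hand-launched) Iometer engine to logical CPU 0.
    print(start_command("dynamo.exe", [0]))
```

Pinning to CPU 0 yields `start /affinity 1 dynamo.exe`. On this 32-thread system a single 64-bit mask covers every logical CPU, so Windows processor groups do not come into play.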

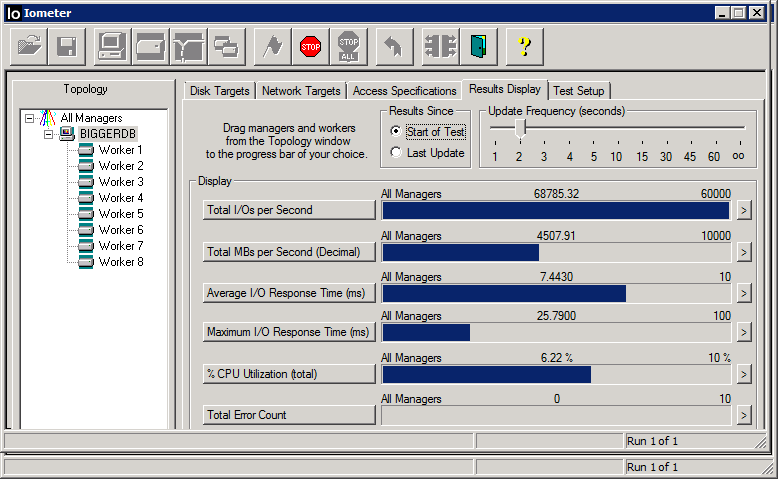

Surprisingly, throughput remains high even with smaller transfer sizes. Configured to read data in 64KB chunks, a size popular with many software RAID implementations, the card delivers 4,508MB/second using Samsung 830 drives.
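Dividing the 64KB throughput by the block size shows the request rate this result implies. The article does not state which MB convention Iometer's figure uses, so the sketch below reports both the decimal (10^6 bytes) and binary (2^20 bytes) readings, assuming a 64KB block is 65,536 bytes. `iops_from_throughput` is an illustrative name.

```python
def iops_from_throughput(mb_per_s: float, block_bytes: int, mb_bytes: int = 10**6) -> float:
    """Convert a throughput figure to I/Os per second at a fixed block size."""
    return mb_per_s * mb_bytes / block_bytes


if __name__ == "__main__":
    block = 64 * 1024  # 64KB read, assuming binary kilobytes
    print(f"decimal MB: {iops_from_throughput(4508, block):,.0f} IOPS")
    print(f"binary MB:  {iops_from_throughput(4508, block, 2**20):,.0f} IOPS")
```

Either way, the card is completing roughly 69,000 to 72,000 64KB reads per second across the 16 drives. That is about a tenth of the request rate it sustains in the 4KB IOPS test below.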

While the LSI card in an Intel Xeon server matches and then significantly exceeds its throughput in an AMD server, it gets there very differently. With the AMD server, the best throughput comes from eight Iometer workers cooperating. With the Xeon, a single worker yields the best numbers, and results actually fall slightly as workers are added. Configured with eight workers, as on the AMD, throughput is a slightly less impressive 5,040MB/second. This result surprises me in two ways: first, that adding even a second worker measurably harms the Xeon results, and second, that a single Xeon thread can consume that much bandwidth at all.

Note that this evidence alone does not show that the CPU causes this difference. LSI has released one firmware update since the first article, and it is always possible that the card or the drives, not the CPU, are behaving differently.

LSI 9202-16e IOPS Results

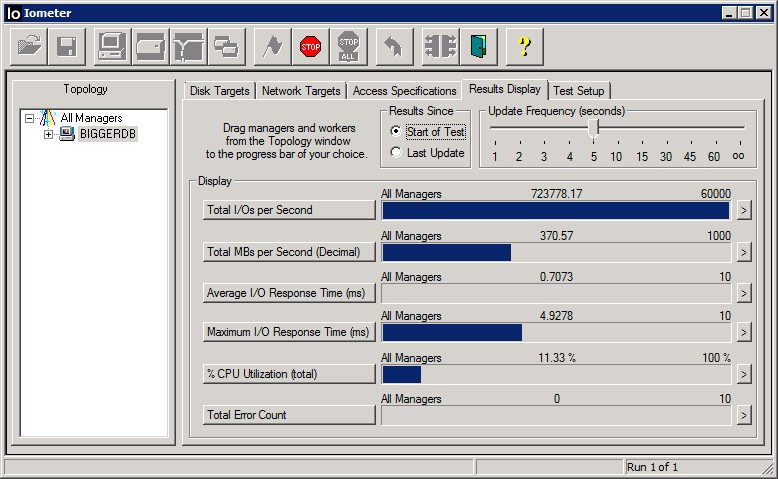

Our original Xeon server coaxed 726,000 4KB random read IOPS out of the LSI card, higher than any card yet tested and, in fact, higher than the card’s 700,000 IOPS specification. The new server, with the same architecture and processors, not surprisingly achieves nearly identical numbers: 724,000 IOPS with OCZ drives. Moving to 16 Samsung 830 128GB drives yields the same result, a clear indicator that we have reached the saturation point of the card, not of the SSDs. Each individual Samsung drive tests at over 60,000 IOPS, so the 16 drives offered more than 960,000 IOPS of “capacity” for the card to consume.
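The saturation argument can be made explicit. The sketch below assumes exactly 60,000 IOPS per drive (the article says "over", so this is a lower bound) and treats 4KB as 4,096 bytes. It compares the drives' aggregate capacity with the measured 724,000 IOPS and converts that rate to bandwidth. `headroom` and `iops_to_mb_s` are illustrative names.

```python
def headroom(drives: int, per_drive_iops: int, measured_iops: int) -> tuple[int, float]:
    """Return aggregate drive IOPS and the fraction of it the card achieved."""
    capacity = drives * per_drive_iops
    return capacity, measured_iops / capacity


def iops_to_mb_s(iops: int, block_bytes: int, mb_bytes: int = 10**6) -> float:
    """Bandwidth (MB/s) implied by an IOPS figure at a fixed block size."""
    return iops * block_bytes / mb_bytes


if __name__ == "__main__":
    capacity, used = headroom(16, 60_000, 724_000)
    print(f"drive capacity: {capacity:,} IOPS, card reached {used:.1%}")
    print(f"724,000 x 4KB = {iops_to_mb_s(724_000, 4096):,.0f} MB/s")
```

The card reaches only about 75% of the drives' combined capability, and 724,000 4KB reads amount to roughly 2,966MB/second, well under the 5,499MB/second the same card sustains sequentially. That suggests the 4KB ceiling comes from how many requests per second the card can process rather than from PCIe bandwidth.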

Our AMD server had a harder time with this test, leveling off at 365,000 IOPS, half the Intel result. Kudos to Intel for its PCIe implementation.

Conclusion

With this update, the LSI 9202-16e retains its crown as the fastest host bus adapter yet tested. Now, however, it’s the Intel Xeon platform that coaxes the most out of the card, with the caveat that for highly threaded workloads the AMD might provide better ultimate throughput. There is a challenger on the horizon, though. LSI has announced the LSI 9206-16e, which trades the dual SAS 2008 chips for faster dual SAS 2308 chips. It also trades the PCIe 2.0 x16 interface for a PCIe 3.0 x8 interface, yielding approximately the same theoretical bandwidth. When released, this new card may be capable of up to 1.4 million IOPS (should you happen to have a set of 90K IOPS SSDs available), and rumor has it that the LSI 9206-16e may touch 6GB/second. Hopefully the LSI 9206-16e will be more widely available; the 9202-16e was sold only to a few OEMs (though you can find them with an eBay search). AMD users need not apply, of course: only Intel currently supports PCIe 3.0. I have my fingers crossed that I’ll be among the first to publish those results.
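Both forward-looking figures can be checked with simple arithmetic. This sketch assumes the standard PCIe 2.0 and 3.0 rates (5 GT/s with 8b/10b encoding, 8 GT/s with 128b/130b) and 16 attached drives, matching the 16 drives used throughout this article. The helper names `pcie_mb_s` and `drive_limited_iops` are hypothetical.

```python
def pcie_mb_s(gt_per_s: float, efficiency: float, lanes: int) -> float:
    """Theoretical one-direction MB/s (10**6 bytes), encoding overhead only."""
    return gt_per_s * 1e9 * efficiency * lanes / 8 / 1e6


def drive_limited_iops(drives: int, per_drive_iops: int) -> int:
    """Upper bound on IOPS if the drives, not the card, are the bottleneck."""
    return drives * per_drive_iops


if __name__ == "__main__":
    gen2_x16 = pcie_mb_s(5.0, 8 / 10, 16)     # LSI 9202-16e interface
    gen3_x8 = pcie_mb_s(8.0, 128 / 130, 8)    # LSI 9206-16e interface
    print(f"PCIe 2.0 x16: {gen2_x16:,.0f} MB/s")
    print(f"PCIe 3.0 x8:  {gen3_x8:,.0f} MB/s")
    print(f"16 x 90K IOPS drives: {drive_limited_iops(16, 90_000):,} IOPS")
```

PCIe 3.0 x8 comes out at about 7,877MB/second against 8,000MB/second for PCIe 2.0 x16, roughly 1.5% less, and 16 drives at 90,000 IOPS each add up to 1.44 million IOPS. A 6GB/second result would therefore use about three quarters of the new card's theoretical link bandwidth.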

Hope ya aren’t stopping that close to 6. Gotta get there man. Great read. When are you testing new pcie3 cards?

I tried! Really I did! Perhaps the upcoming 9206-16 will break the barrier.

To answer your question, I will soon share some results from testing the LSI 2308 chip on PCIe3.