The SanDisk/Fusion-io ioDrive cards have become extremely popular in the forums lately. A number of deals in the Great Deals forum have made it affordable to start building non-production labs to test Fusion-io cards. We also recently published an article on getting the Fusion-io ioDrive cards working under Windows Server 2012 R2 and will have a VMware guide shortly. Recently I installed these cards in our Fremont colocation facility because I wanted to test STH forum performance on the Fusion-io ioDrive MLC drives versus 12Gb/s SAS SSDs and SATA III SSDs. The goal was to try Ubuntu with LXD and Docker containers, plus Proxmox VE with OpenVZ and KVM using GlusterFS/Ceph storage clustering on Fusion-io. Frankly, it was a learning process.

Fusion-io on Proxmox VE

First, the initial attempt was with Proxmox VE 3.4. The plan was to use OpenVZ containers and KVM virtual machines, compare the differences, and cluster them with a second box that had both a Fusion-io ioDrive SLC card and some of the newer Samsung/Intel NVMe SSDs installed. Proxmox VE 3.4 is built upon Debian wheezy and can be installed on top of an existing wheezy installation, so the thought was that the Fusion-io drivers for Debian wheezy would work.

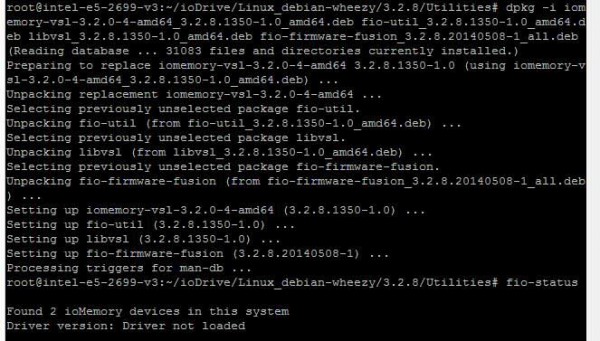

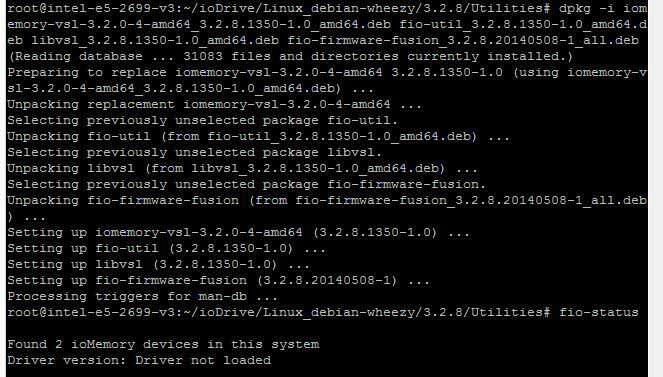

Here is a Fusion-io and Proxmox VE testing thread, but the issue was that the driver was never found. We tried the standard Debian package installs, which did not work. We then loaded the headers and all of the build tools in Proxmox and compiled the SanDisk/Fusion-io drivers against the Proxmox kernel. It turns out that some of the closed parts of the Fusion-io iomemory-vsl driver would not integrate.
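Before compiling any out-of-tree driver against a kernel, it is worth confirming that the headers for the running kernel are actually installed. Below is a minimal Python sketch of that check. It assumes the usual Debian/Ubuntu layout, where /lib/modules/<release>/build points at the kernel headers; the function names are our own and are not part of any Fusion-io tooling.

```python
from pathlib import Path
import platform


def kernel_build_dir(release, modules_root="/lib/modules"):
    """Path where Debian-style systems link the headers for a kernel release (assumed layout)."""
    return Path(modules_root) / release / "build"


def headers_present(release, modules_root="/lib/modules"):
    """True if the build directory exists; a dangling symlink counts as missing."""
    return kernel_build_dir(release, modules_root).is_dir()


if __name__ == "__main__":
    release = platform.release()  # same string that `uname -r` prints
    if headers_present(release):
        print(f"Headers found for {release}")
    else:
        print(f"No headers found for {release}; a driver build will fail early")
```

Passing this check is necessary but not sufficient. In our case the headers and build tools were in place and the closed portions of the driver still would not integrate.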

Thank you to @mir on the Proxmox forums for helping troubleshoot this and confirming there was something wrong.

Turning to Ubuntu

The next step was to try compiling the newest Fusion-io drivers against Ubuntu 15.04 Server to see if they would work, so that we could try new features such as LXD with the low-latency Fusion-io storage. We downloaded and tried compiling both version 3.2.8 and 3.2.10 to no avail. dpkg -i would install the pre-compiled binaries, but they would not work with the newer kernel versions.

Since Ubuntu 14.04 was on the compatibility list and had specific driver package downloads for the cards, we then turned our attention to Ubuntu 14.04.2, the newest maintenance release, which has a number of security and stability fixes. The pre-compiled drivers likewise did not work with Ubuntu 14.04.1 or 14.04.2. We tried compiling our own drivers but got an error during the build.

Finally, at the urging of SanDisk support, we moved back to the original Ubuntu 14.04 LTS release. You will have to search the archives for this version now that it is two point releases old. Specifically, you will want ubuntu-14.04-server-amd64.iso, which is the original version. That one uses the 3.13.0-24-generic kernel, and one can use dpkg -i to install the 3.2.8 or 3.2.10 driver packages without any issues. Our cards now show up with no problem.
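Since the pre-compiled packages only worked for us on that exact kernel, a quick check before running dpkg -i can save a reinstall. Here is a minimal sketch in Python. The kernel string comes from the install described above; the function name and the exact-match rule are our own assumptions, not anything SanDisk documents.

```python
import platform

# Kernel shipped on the original ubuntu-14.04-server-amd64.iso, per the text above.
SUPPORTED_KERNEL = "3.13.0-24-generic"


def kernel_is_supported(running, supported=SUPPORTED_KERNEL):
    """Return True only when the running kernel release matches exactly (our assumption)."""
    return running.strip() == supported


if __name__ == "__main__":
    running = platform.release()  # same string that `uname -r` prints
    if kernel_is_supported(running):
        print(f"{running}: matches the kernel the pre-compiled packages worked with")
    else:
        print(f"{running}: expected {SUPPORTED_KERNEL}; the pre-compiled driver may not load")
```

An exact match is deliberately strict. In our testing even later 14.04 point releases broke the pre-compiled driver, so a looser comparison would give false comfort.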

SanDisk support said they have an internal request for a 14.04.2 update, which would be great. Frankly, the SanDisk/Fusion-io support desk was absolutely awesome. Kudos to them.

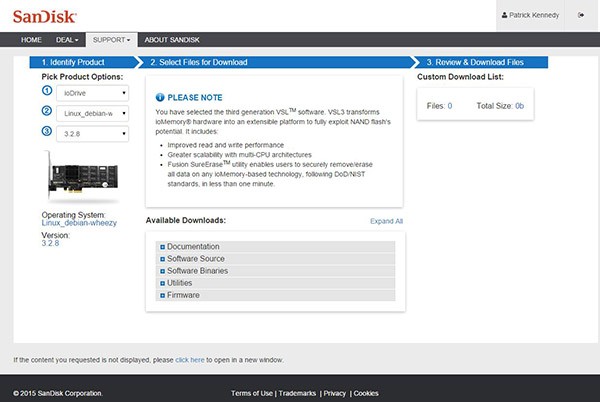

18 fresh Linux OS installs later – a thought

On one hand, I know this is standard industry practice, especially with more closed server platforms. We deal with them at STH all the time, and the updated Fusion-io/SanDisk support site is very good and easy to use, with many options for compatibility. With that said, the model of shipping closed PCIe storage drivers that cannot be compiled against newer kernels is not going to be a winner. Want to run CoreOS with Fusion-io? CoreOS does not work with Fusion-io ioDrive drivers at this point. There is so much development going on in the Docker space that this type of driver policy is absolutely detrimental to the use of these products. On the other hand, one can get the massive wave of new NVMe drives to work under Ubuntu 15.04.

This is a lesson that took us 18 Linux OS installs to figure out. Sure, we would have been safer just using the older versions, but that is not how one takes advantage of the newest software advancements.

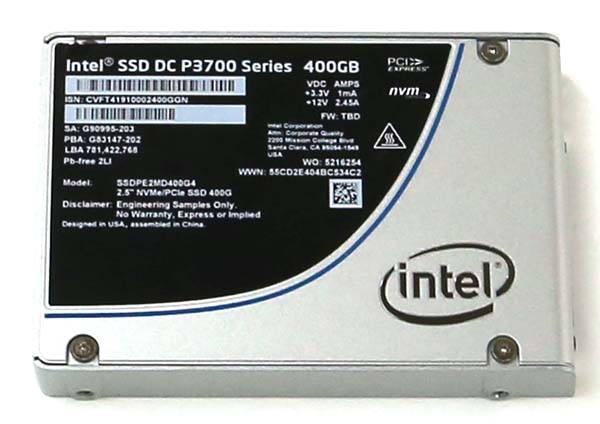

The bottom line here: if you are doing Docker (or really any non-standard Linux OS) development and want fast storage, go NVMe unless Fusion-io decides to change its Linux driver policies. If you are running Windows Server or VMware ESXi, then Fusion-io works great. HGST just announced its NVMe drives, and Intel has no fewer than four lines of NVMe drives (P3700, P3600, P3500, 750) that we have in our lab alongside our Samsung XS1715 array. When it comes to being agile with the newest technology and the newest operating systems, NVMe is where the market has already moved. If SanDisk releases unsupported, build-your-own source code for Fusion-io, then that may change our recommendations.

Spot on comments. But if I can’t test it, then I’m not going to build my architecture around it for production. SanDisk’s loss.

Has anyone gotten Proxmox VE 3.4 installed on an NVMe drive? I’ve just gotten a new server with an Intel SSD DC P3700 and my initial attempts have all failed so far. It appears that UEFI installs aren’t actually working with the current install media.

Question – Do you know if these work with OmniOS at all? I see Solaris drivers, but not sure if those will work or not.

Low chance. These are too tied to version for *nix