At Supercomputing 2016 (SC16), Intel has been heavily touting the wins its Omni-Path Architecture has had over the last six months. The Intel Omni-Path story has been bolstered by over 20 new installations on the November 2016 Top500 list alone. One of those systems is Oakforest-PACS, a Knights Landing generation Xeon Phi machine that uses Intel Omni-Path as its interconnect. Oakforest-PACS ranks #6 on the November 2016 Top500 supercomputers list.

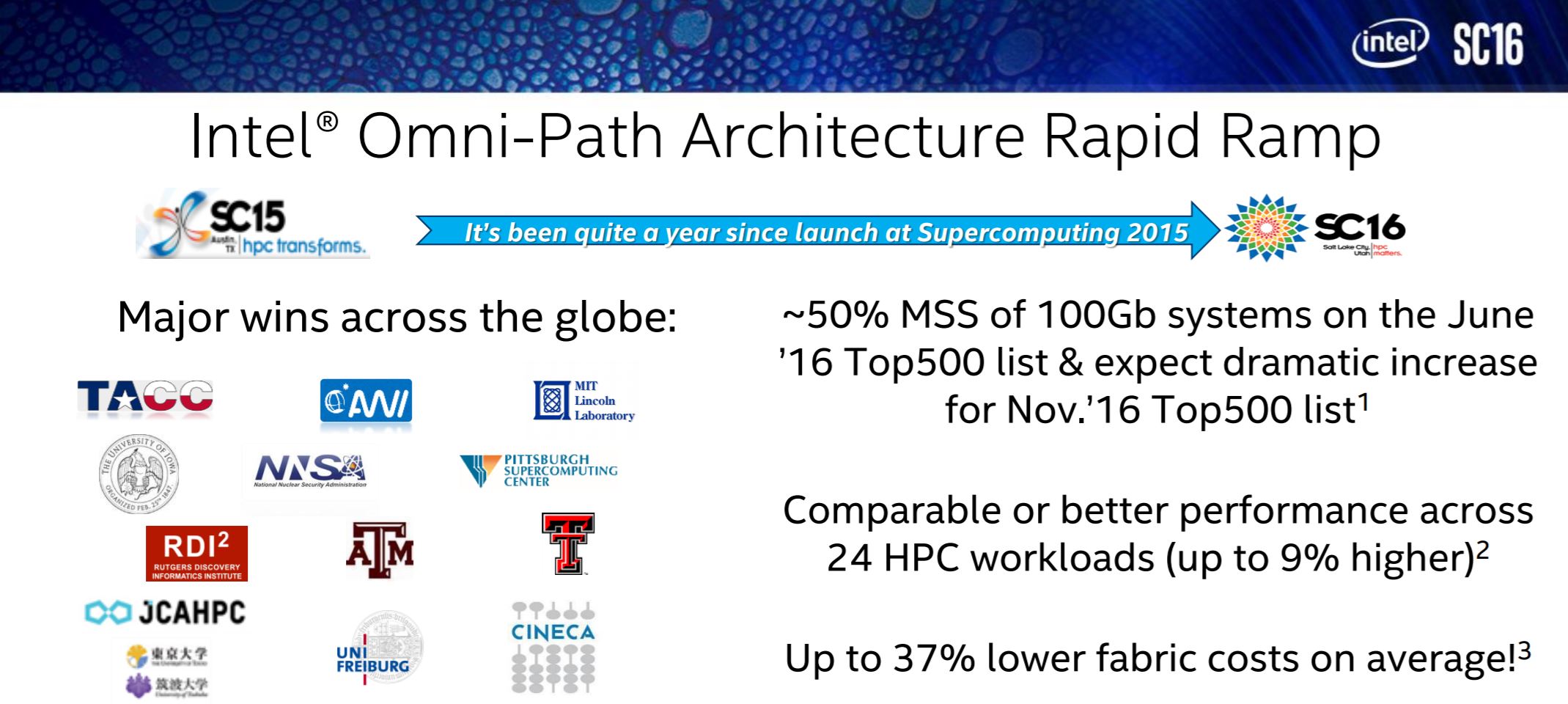

Here is the Intel slide on Omni-Path adoption.

We have heard that 28 Top500 systems now use Omni-Path, up from 8 on the June 2016 list. Intel states that it has now captured over 50% of new 100Gbps interconnect deployments. "New" is the operative word, since Mellanox's EDR has been on the market considerably longer. Also, among Top500 systems, 10GbE is still the most common interconnect, used by over one-third of the systems.

With all of this said, Intel has stated that we will see Omni-Path on Skylake-EP around the time of its commercial availability in 2017. We will also see Omni-Path on Knights Mill, the next-generation Intel Xeon Phi, also due in 2017. Essentially, Intel is attacking Mellanox's 70% margin business with very low-cost yet high-speed interconnects integrated on package. At the high end, Omni-Path is going to be the low-cost 100Gbps fabric of 2017. At the same time, Mellanox will be shipping its 200Gbps HDR fabric in 2017, but HDR is running into PCIe 3.0 bandwidth constraints.
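The PCIe 3.0 constraint on 200Gbps HDR comes down to simple arithmetic. The sketch below is a back-of-envelope check, assuming an adapter in a PCIe 3.0 x16 slot, 8 GT/s per lane, and 128b/130b line encoding; it deliberately ignores packet and protocol overhead, so real-world throughput would be lower still. The function and constant names are illustrative only.

```python
# Back-of-envelope check: can a PCIe 3.0 x16 slot feed a single 100 or 200 Gb/s port?
# Assumptions: 8 GT/s per lane, 128b/130b encoding; TLP/DLLP protocol overhead is
# ignored, so actual usable bandwidth is somewhat lower than computed here.

PCIE3_GT_PER_S = 8.0             # raw transfer rate per lane (GT/s)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line code efficiency


def pcie3_usable_gbps(lanes: int) -> float:
    """Per-direction bandwidth in Gb/s after line encoding only (hypothetical helper)."""
    return PCIE3_GT_PER_S * ENCODING_EFFICIENCY * lanes


x16 = pcie3_usable_gbps(16)
for port_gbps in (100, 200):
    verdict = "fits within" if port_gbps <= x16 else "exceeds"
    print(f"{port_gbps} Gb/s port {verdict} PCIe 3.0 x16 (~{x16:.1f} Gb/s)")
```

Under these assumptions a PCIe 3.0 x16 link tops out around 126 Gb/s per direction, comfortably above 100Gbps EDR or Omni-Path but well short of a single 200Gbps HDR port.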

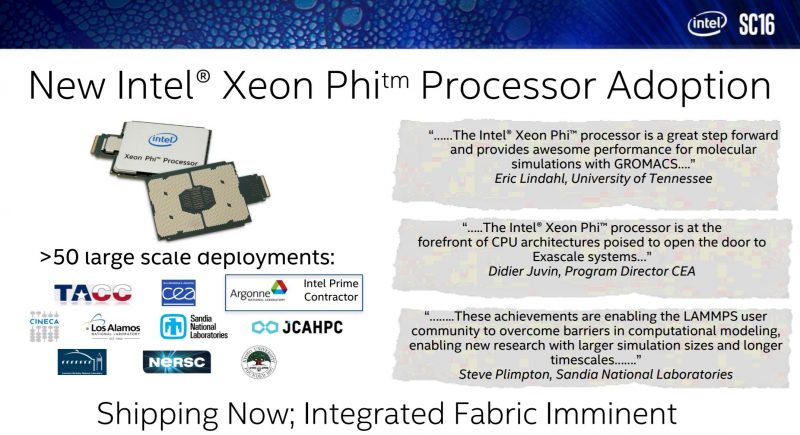

The KNL image above shows an Intel Xeon Phi x200 with Omni-Path. The rectangular package has a small PCB with gold fingers jutting out of it. The package fits Intel's LGA 3647 socket, and we are seeing companies use cables to run from the KNL package to the rear of the chassis. Even with 3,647 pins, the new Intel socket is not carrying Omni-Path traffic in the systems we have seen.

Here is the Omni-Path excerpt from Intel's SC16 press release:

> In the nine months since Intel® Omni-Path Architecture (Intel® OPA) began shipping, it has become the standard fabric for 100 gigabyte (GB) systems. Intel OPA is featured in 28 of the top 500 most powerful supercomputers in the world announced at Supercomputing 2016 and now has 66 percent of the 100GB market. Top500 designs include Oakforest-PACS, MIT Lincoln Lab and CINECA.
>
> - Intel OPA has been deployed in 28 clusters in the November 2016 Top500 Supercomputer list, which is twice the number of InfiniBand* EDR systems and now accounts for around 66 percent of all 100GB systems. Additionally, two systems are ranked in the Top 15: Oakforest-PACS is ranked sixth with 8,208 nodes and CINECA is ranked 12th with 3556 nodes. The Intel OPA systems on the list add up to total floating-point operations per second (FLOPS) of 43.7 petaflops (Rmax), or 2.5 times the FLOPS of all InfiniBand* EDR systems.
> - Intel OPA has seen rapid market adoption in the nine months it has been shipping broadly, driven by clear customer benefits such as high performance, price-performance and innovative fabric features, such as error detection and correction without additional latency.
> - Intel OPA is an end-to-end fabric solution that improves HPC workloads for clusters of all sizes, achieving up to 9 percent higher application performance and up to 37 percent lower fabric costs on average compared to InfiniBand EDR.
> - Major installations include the University of Tokyo and Tsukuba University (JCAHPC), Texas Tech University, the University of Washington, the University of Colorado Boulder, MIT Lincoln Lab, and Met Malaysia. There are now well over 100 successfully-deployed Intel OPA clusters and most adoption is a result of competitive benchmarking and leadership price-performance.
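Intel's release compares Omni-Path against InfiniBand EDR using ratios rather than absolute figures. The short sketch below simply inverts those ratios to show what they imply about EDR on the November 2016 list. These are derived numbers based only on Intel's stated "twice" and "2.5 times" claims, which are themselves approximate; they were not read from the Top500 list. The helper name is hypothetical.

```python
# Invert Intel's stated ratios (SC16 release) to see what they imply about EDR.
# These are derived figures from Intel's own claims, not values taken from Top500 data.

OPA_SYSTEMS = 28     # Omni-Path systems on the November 2016 Top500 list
OPA_RMAX_PF = 43.7   # aggregate Rmax of those systems, in petaflops
SYSTEM_RATIO = 2.0   # "twice the number of InfiniBand EDR systems"
FLOPS_RATIO = 2.5    # "2.5 times the FLOPS of all InfiniBand EDR systems"


def implied_edr(opa_systems: int, opa_rmax_pf: float,
                system_ratio: float, flops_ratio: float) -> tuple[float, float]:
    """Return (implied EDR system count, implied EDR aggregate Rmax in PF)."""
    return opa_systems / system_ratio, opa_rmax_pf / flops_ratio


edr_systems, edr_rmax = implied_edr(OPA_SYSTEMS, OPA_RMAX_PF, SYSTEM_RATIO, FLOPS_RATIO)
print(f"Implied EDR systems: ~{edr_systems:.0f}")
print(f"Implied EDR aggregate Rmax: ~{edr_rmax:.1f} PF")
print(f"Average Rmax per OPA system: ~{OPA_RMAX_PF / OPA_SYSTEMS:.2f} PF")
```

Taken at face value, Intel's ratios imply roughly 14 EDR systems with about 17.5 petaflops of combined Rmax, versus an average of roughly 1.56 petaflops per Omni-Path system.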