At Supercomputing this year in Denver, Intel had a number of announcements. We were pre-briefed by Intel on the SC17 announcements and were somewhat surprised to see that Knights Mill was not going to be released at SC17, although it is being demonstrated heavily. Intel is far from the fringes of the Top500 list: its processors are found in 471 of the 500 supercomputers on the November 2017 list, its highest market share ever. Intel is also showing off neural network uses for its FPGA lines, which has been a major push for the company, along with larger-scale Omni-Path switches. We are told that Intel is focused on the exascale computing initiative, but the company was unable to provide specifics at this time. Hopefully we will hear more at ISC in 2018.

Intel Knights Mill

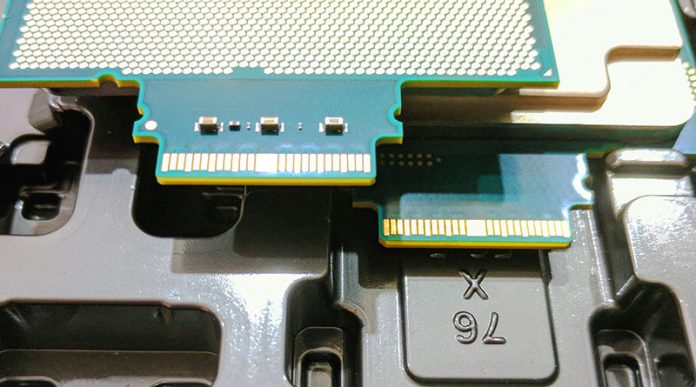

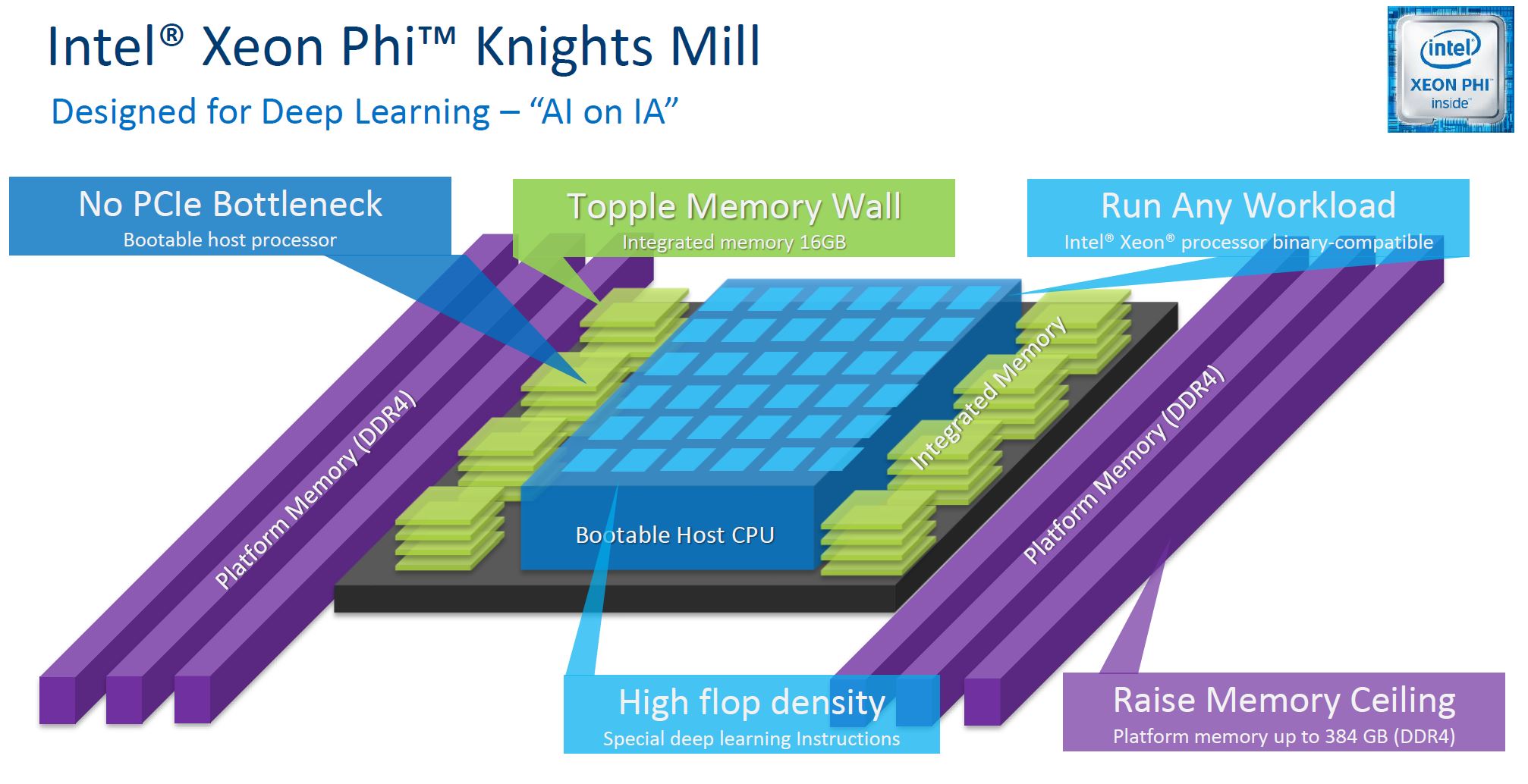

As a socket-compatible roadmap item for Intel Knights Landing, Intel Knights Mill is focused on scale-out deep learning. This is an important step for the company since one of the hot topics at SC17 is how HPC installations can address both traditional HPC applications (e.g. modeling and simulation) as well as deep learning.

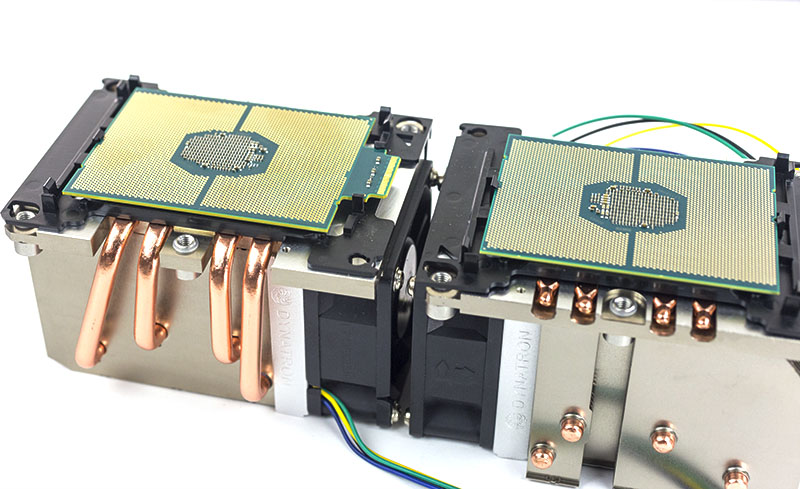

We covered the Intel Knights Mill architecture at Hot Chips 29. For those running HPC clusters, this is the upgrade path. Perhaps better said, this is how Intel hopes to help its cluster customers run deep learning workloads alongside traditional HPC workloads. We also expect that this will be the last Intel Xeon Phi that is not socket compatible with mainstream Intel Xeon CPUs. We are seeing cooling solutions for 300W+ TDP CPUs at the show, and many of them are liquid-based. It does not take much imagination to conjure up why vendors are aggressively marketing 300W+ liquid cooling capacities.

We still expect Intel Knights Mill to release before the end of 2017 as promised, but these things can change.

Intel Omni-Path

We took the opportunity to discuss Intel Omni-Path with Intel and some of the companies presenting at SC17. Intel Omni-Path is the company’s high-speed interconnect. It operates at 100Gbps and has one other key advantage: on-package options. Due largely to its pricing and packaging, Intel Omni-Path is now found on 60% of the 100Gbps-connected Top500 nodes in the November 2017 list.

The Intel Omni-Path controller can be found either on a PCIe card or as an on-package add-on to some CPUs in the Intel Xeon Phi x200 and Intel Xeon Scalable Gold and Platinum series. One of the more interesting aspects of the “F” fabric CPU packages is that they have dedicated PCIe lanes specifically to interface with Omni-Path. If you use an Intel Xeon Gold 6148F part, for example, you essentially get the equivalent of 64 PCIe 3.0 lanes per CPU.
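For readers who want to see where the 64-lane figure comes from, here is a rough back-of-the-envelope sketch. It assumes 48 general-purpose PCIe 3.0 lanes per Xeon Scalable socket plus a dedicated x16 link for the on-package fabric. Those two numbers, and the helper names `effective_lanes` and `link_gbps`, are our assumptions for illustration and are not taken from Intel's briefing.

```python
# Back-of-the-envelope lane math for an "F" fabric SKU.
# ASSUMPTIONS (not stated in the article): 48 general-purpose PCIe 3.0 lanes
# per socket and a dedicated x16 link feeding the on-package Omni-Path fabric.
STANDARD_LANES = 48
FABRIC_LANES = 16

PCIE3_GT_PER_LANE = 8.0      # PCIe 3.0 raw signaling rate, GT/s per lane
PCIE3_ENCODING = 128 / 130   # 128b/130b line encoding overhead


def effective_lanes(standard=STANDARD_LANES, fabric=FABRIC_LANES):
    """Total PCIe-equivalent lanes when the fabric has its own dedicated link."""
    return standard + fabric


def link_gbps(lanes):
    """Approximate one-direction bandwidth of a PCIe 3.0 link in Gbps."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING


if __name__ == "__main__":
    print("Effective lanes per CPU:", effective_lanes())
    print("x16 fabric link, Gbps:", round(link_gbps(FABRIC_LANES), 1))
```

Under these assumptions, the dedicated x16 link has enough headroom to feed a 100Gbps port without taking any of the socket's general-purpose lanes away from GPUs, NVMe drives, or NICs.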

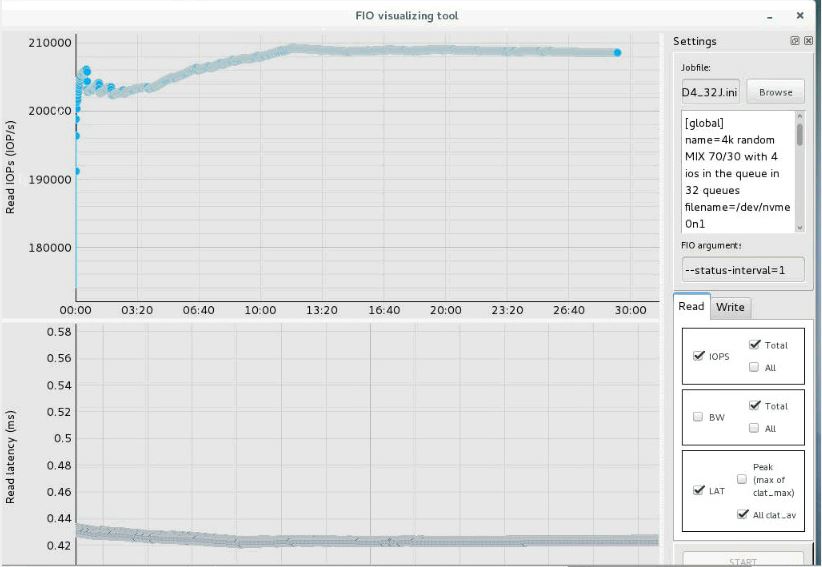

We have four Omni-Path nodes and an Omni-Path switch in the STH DemoEval lab and have shown NVMe over fabrics using Optane and Omni-Path. It is certainly an interesting technology, but it needs an additional catalyst to get over the adoption hump. Mellanox is firing on all cylinders and has its VPI solution for running both Ethernet and InfiniBand on the same card, which is significantly more flexible. Omni-Path is less expensive: for roughly $155, one can add 100Gbps fabric to a CPU, which can be attractive.

Intel Exascale Computing

Intel told us in our briefing that it is focused on exascale computing but could not publicly disclose details. Here is an excerpt from the press release we received:

Exascale is the next leap in supercomputing – delivering a quintillion calculations per second, which is more than ten times greater than the largest system today. Exascale has the potential to solve some of the world’s toughest challenges – from enabling gene therapies to addressing climate change to accelerating space exploration. This ability for significant scientific breakthrough is increasing global interest in accelerating the delivery of exascale-class systems with many nations pursuing a faster pace to this technology goal. For example, the U.S. Department of Energy has accelerated its timeline for delivery of an exascale system by two years, now targeting delivery by 2021.

Intel has been collaborating with many global academic and national research institutions on their unique HPC needs for the path to exascale. One of the most exciting efforts has been with the U.S. Argonne National Labs as part of the CORAL program. Recently, the Department of Energy announced they intend to deploy the first U.S. exascale system based on Intel technology, demonstrating a significant level of confidence in the Intel roadmap and portfolio of technologies designed for exascale-class computing. To meet its customers’ needs in exascale, Intel has accelerated its own roadmap and shifted investment toward a new platform and microarchitecture specifically designed for HPC, big data, and AI at the highest scale. With its breadth and depth of technologies across compute, storage, interconnects, and software, Intel has the optimal foundation for exascale computing. (Source: Intel)

Final Words

Intel was extremely frank in its briefing, which was refreshing. We had hoped to get a lot more announcements from Intel at SC17. At the same time, Intel is all over the SC17 show floor and the system announcements. Even NVIDIA, a fierce rival, continues to use Intel Xeon CPUs in their flagship DGX-1 HPC servers.