Inspur i24 Power Consumption to Baseline

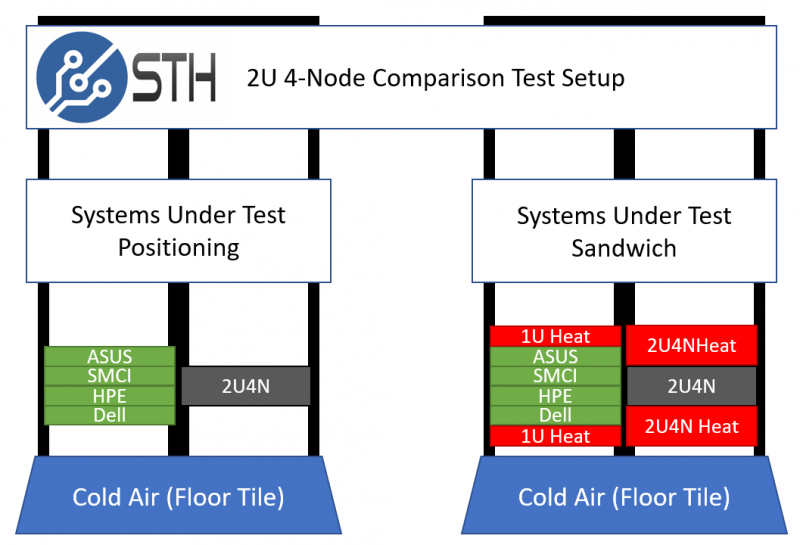

One of the other, sometimes overlooked, benefits of the 2U4N form factor is power consumption savings. We ran our standard STH 80% CPU utilization workload, which is a common figure for a well-utilized virtualization server, and ran it in the sandwich between the 1U servers and the Inspur i24. With dense servers, heat is a concern, so we replicate what one would likely see in the field. This is the only way to get useful comparison information for 2U4N servers.

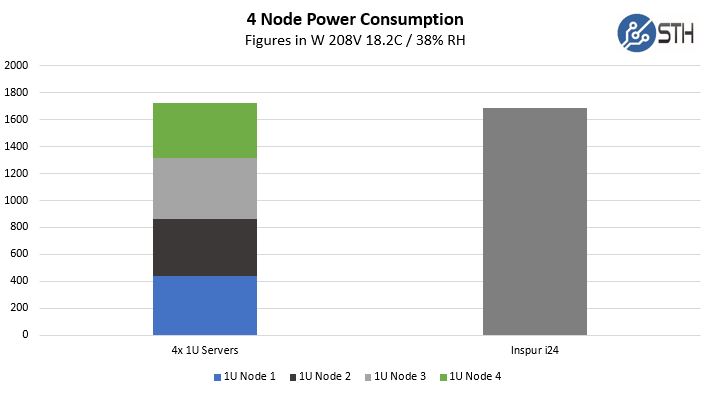

Here is what we saw in terms of power consumption compared to our baseline nodes.

As you can see, we are getting a small but noticeable power efficiency improvement with the i24’s 2U4N design. Had Inspur used fully independent nodes with their own cooling, we would expect to see higher power consumption here than in our control set.

This is about a 1.8% power consumption improvement. Part of that may be running power supplies at higher efficiency levels. Part of it is the shared cooling. When one combines power consumption savings along with increasing density, that can be a big win for data center operators.
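To make the arithmetic behind a figure like this concrete, here is a minimal sketch of how a percentage saving against a baseline could be computed and turned into yearly energy. The wattage values and the function names are hypothetical placeholders chosen for illustration, not our measured numbers.

```python
HOURS_PER_YEAR = 24 * 365


def percent_savings(baseline_watts: float, measured_watts: float) -> float:
    """Power saved relative to the baseline, as a percentage."""
    if baseline_watts <= 0:
        raise ValueError("baseline_watts must be positive")
    return (baseline_watts - measured_watts) / baseline_watts * 100.0


def annual_kwh_saved(baseline_watts: float, savings_percent: float) -> float:
    """Energy saved over a year of continuous operation, in kWh."""
    saved_watts = baseline_watts * savings_percent / 100.0
    return saved_watts * HOURS_PER_YEAR / 1000.0


# Hypothetical placeholder figures, NOT measurements from this review:
# four 1U baseline nodes versus one 2U4N chassis under the same load.
baseline_watts = 2000.0
chassis_watts = 1964.0

savings = percent_savings(baseline_watts, chassis_watts)
print(f"Savings vs. baseline: {savings:.1f}%")
print(f"Energy saved per year: {annual_kwh_saved(baseline_watts, savings):.0f} kWh")
```

Multiplying the per-chassis figure by the number of chassis in a rack, and by a local electricity rate, gives a rough input for a TCO model.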

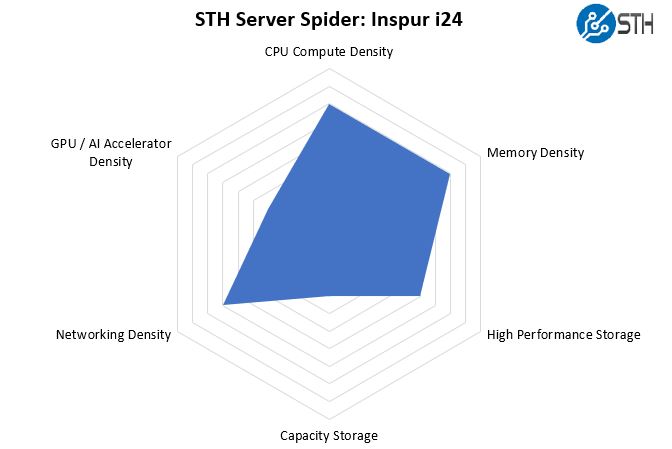

STH Server Spider: Inspur i24

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

The Inspur i24 is a very interesting mix of capabilities. One gets eight CPUs in a 2U form factor along with 64 DIMM slots. This is while providing a solid mix of hot-swap storage options. Each node can utilize an OCP NIC 2.0 along with up to two PCIe expansion cards making the nodes surprisingly flexible.

There are servers that can fit more capability into each 2U of space such as higher-end GPU or storage servers. Even with that backdrop, the Inspur i24 excels in a number of areas while providing a solid consolidation and efficiency story.

Final Words

We did not know what to expect when we started reviewing the Inspur i24 (NS5162M5). It is a system that we have not seen often at trade shows. We have, however, reviewed many 2U4N servers from a number of different vendors, so we have a very thorough process to find differences between the solutions.

One of the biggest results from our testing is that we saw a performance baseline within testing variances while getting around 1.8% lower power consumption in the “STH Sandwich” test configuration we use to simulate dense racks of these servers. That is a very impressive result as it adds an additional facet to TCO calculations when looking to consolidate from 1U 1-node to 2U 4-node platforms.

Perhaps the highlight of the Inspur i24 is its design. The use of a dedicated chassis management controller, or CMC, is becoming standard. At the same time, using high-density connectors instead of PCB gold fingers is something we find mostly on higher-end systems. Weeks after starting our testing of this system, the one item that clearly makes an impact is the rear PCIe riser design. Many vendors, including large vendors such as Dell EMC, have a series of screws to secure the PCIe risers into 2U4N sleds. Here, Inspur is using thicker gauge steel on the compute sleds which allows them to make what is likely the industry’s most easily serviceable PCIe risers.

We have covered several times how Inspur is now the third-largest server vendor. Unlike many of the other large server vendors, Inspur is building its platforms with industry-standard BMCs like what hyper-scalers use. The Inspur i24 is a great example of how well the company is designing its server platforms, in some cases even providing clear industry leadership in terms of server design.

The units should also support the new Series 200 Intel Optane DCPMMs, but only in AppDirect (not Memory) mode.
