VMware vSAN is one of the most intriguing hyper-converged/software-defined storage solutions out there. The ability to use fast local storage without a single point of failure in virtualization environments is what people have been asking for for years. This new breed of software-defined storage has traditional shared storage vendors like EMC and NetApp scared. With products like VMware vSAN, there may be no need for expensive, proprietary storage arrays. Instead, the ability to use commodity, off-the-shelf hardware is the key to any software-defined storage solution. In this series we will evaluate a two-node all-flash VMware vSAN using Dell workstation hardware and off-the-shelf SSDs, the antithesis of traditional SAN storage. In this article we set up the hosts with inexpensive Mellanox InfiniBand to get a low-cost, high-speed network for our all-flash vSAN.

High-level VMware vSAN hardware requirements

VMware vSAN is not as picky about hardware as other hyper-converged solutions, but it does have a hardware compatibility list (HCL) we need to follow for a successful deployment. Here are a few of the biggest requirements:

- Use traditional host bus adapters (HBAs) rather than RAID cards. HBAs based on the LSI 2008/2308/3008 chipsets are very popular with software-defined storage, including VMware vSAN.

- Use a 10GbE (or faster) storage network for all-flash vSAN. This helps data replicate between servers in real time.

- Use SSDs with high write endurance. There will be lots of read/write operations in the cache tier.

Hardware selection for home lab

Here is the hardware we used to meet the above general requirements for all flash vSAN. There is also a bit of explanation around the hardware used.

- Server: Dell Precision R7610 Rack Workstation. It is similar to the PowerEdge R720, with up to 2 x E5-2600 V2 CPUs and 16 DIMM slots for up to 512 GB of memory. It has 6 x 2.5" SATA/SAS HDD/SSD bays and up to five full-length/full-height PCIe slots (up to 4 x PCIe x16) plus one half-height/low-profile PCIe x8 slot. It has an integrated LSI SAS 2308 HBA and enough power to support up to 3 x Nvidia GRID GPUs. It supports PCIe-based NVMe SSDs as well.

- Network: Mellanox ConnectX-2 InfiniBand HCA (40Gbps InfiniBand) with a Mellanox/Voltaire Grid Director 4036 InfiniBand switch. This is one of the most popular high-performance/low-cost solutions on the STH forum.

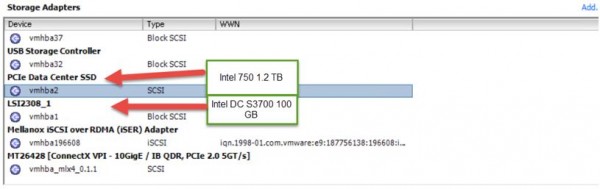

- Storage SSDs: Intel DC S3700 100 GB SATA SSD as the high-endurance cache tier (rated for 1.8 PB written), served by Dell’s onboard LSI 2308/LSI 9207 controller. Intel 750 Series 1.2 TB PCIe add-in-card NVMe SSD as the capacity tier (up to 2500 MBps read, 1200 MBps write, 460,000 4K random read IOPS and 290,000 4K random write IOPS).

- VMware environment: Two clusters, one for the VMware vSAN witness virtual appliance and one for the two-node all-flash vSAN. The build is VMware ESXi 6.0.0 build-3073146.

- vSAN architecture: Due to space/budget limitations, the vSAN consists of two Dell Precision R7610s with SSDs and one VMware Virtual SAN Witness Appliance. Otherwise VMware vSAN requires at least three nodes. The management/VM network is on an L3 switch and the storage network is on the InfiniBand switch (IPoIB).

- ESXi storage: USB drive. We used the popular SanDisk Ultra Fit USB drive as the boot drive. 32GB versions can be purchased for $10 or so and will fit in internal USB headers even in 1U servers.
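To put a couple of the numbers from the list above in perspective, here is a rough back-of-the-envelope sketch in shell. It assumes a five-year service life for the endurance figure and the 8b/10b line encoding used by QDR InfiniBand, neither of which is stated above. The function names `dwpd` and `link_mbps` are just illustrative helpers.

```shell
#!/bin/sh
# Drive writes per day (DWPD) from a rated endurance figure.
# $1 = rated endurance in TB written, $2 = capacity in GB,
# $3 = assumed service life in years (an assumption, not a vendor figure)
dwpd() {
    awk -v tbw="$1" -v gb="$2" -v years="$3" \
        'BEGIN { printf "%.1f\n", (tbw * 1000) / (gb * 365 * years) }'
}

# Theoretical data rate of a link in MB/s after line-encoding overhead.
# $1 = signaling rate in Gbps, $2 = payload bits, $3 = total bits per encoded group
link_mbps() {
    awk -v gbps="$1" -v payload="$2" -v total="$3" \
        'BEGIN { printf "%d\n", gbps * 1000 * payload / total / 8 }'
}

dwpd 1800 100 5      # cache SSD: 1.8 PB rated on a 100 GB drive -> 9.9
link_mbps 40 8 10    # 40 Gbps InfiniBand with 8b/10b encoding   -> 4000
```

Under those assumptions the cache drive is rated for roughly ten full drive writes per day, and the link's theoretical data rate sits above the 2500 MBps read figure quoted for the capacity SSD. Real IPoIB throughput will be lower than the theoretical number.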

Important Note: InfiniBand is not officially supported in a vSAN environment, and neither is the Intel 750 NVMe SSD. This is a high-performance lab environment; do not use it in production.

The goal here is to show you how to set up a two-node all-flash vSAN design over InfiniBand and measure VM performance on NVMe-based SSDs in this architecture using low-cost components.

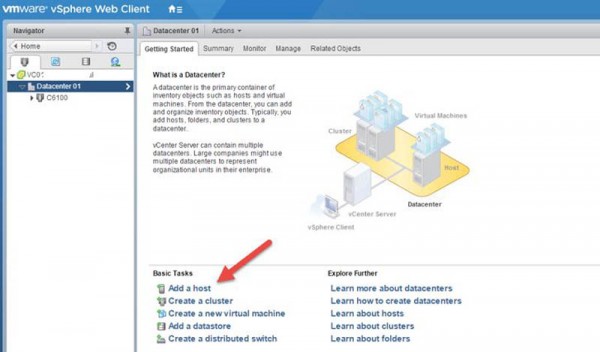

Setting up InfiniBand vSAN hosts

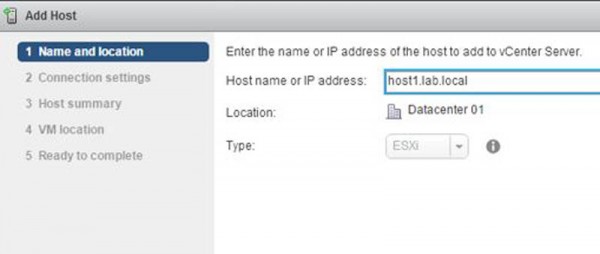

The first task we need to complete is adding a new host. Open the vSphere Web Client and add a host using the steps below.

Add first host to the datacenter:

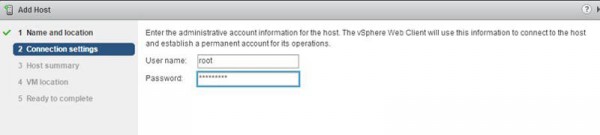

Enter user name and password:

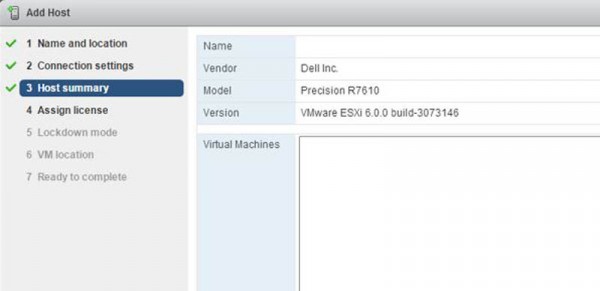

Verify in the host summary:

Assign licenses (obscured for obvious reasons in the screenshot):

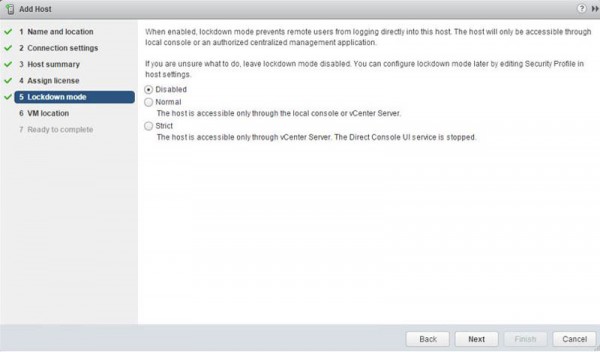

We are leaving Lockdown mode set to Disabled so we can SSH to the host and update drivers:

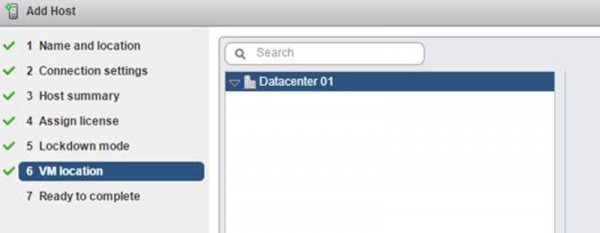

Select the VM location:

Validate settings before finishing the wizard:

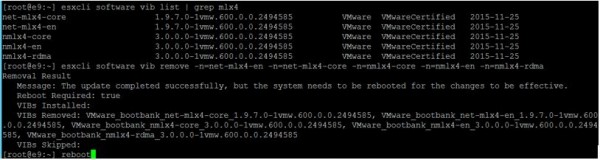

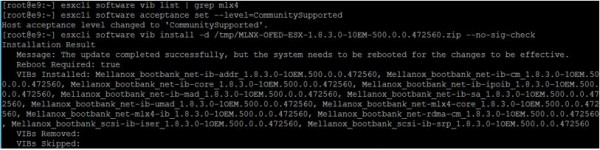

After the hosts are added to the datacenter, we need to install the Mellanox InfiniBand drivers. We are using Mellanox drivers v1.8.3.0. The first step is to remove the existing drivers:

esxcli software vib list | grep mlx4

esxcli software vib remove -n=net-mlx4-en -n=net-mlx4-core -n=nmlx4-core -n=nmlx4-en -n=nmlx4-rdma

Next we need to install the v1.8.3.0 drivers:

esxcli software acceptance set --level=CommunitySupported

esxcli software vib install -d /tmp/MLNX-OFED-ESX-1.8.3.0-10EM-500.0.0.472560.zip --no-sig-check

After the reboot, verify the Mellanox IB storage NIC:

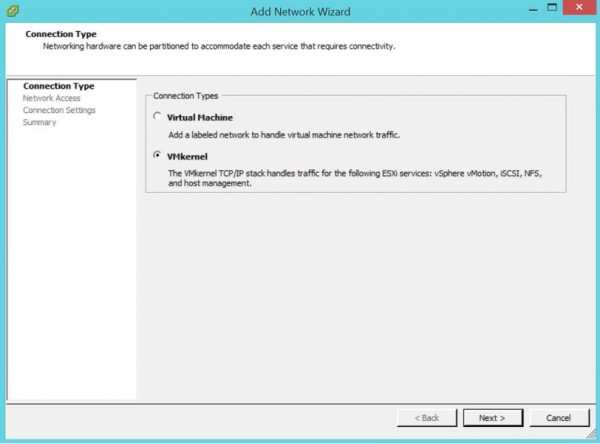
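The same check can be done from the SSH session used to install the drivers. The following is a minimal sketch, not an official procedure. The helper names are hypothetical, and the `grep` patterns assume that the InfiniBand port shows "Mellanox" or "ipoib" somewhere in its line of the NIC listing, which may differ on your hosts.

```shell
#!/bin/sh
# Sketch only: run in an SSH session on the ESXi host after the reboot.

# Print installed VIBs whose line mentions "mlx".
list_mlx_vibs() {
    esxcli software vib list | grep -i mlx
}

# Print physical NICs whose line mentions Mellanox or IPoIB.
# Assumption: the InfiniBand port shows one of these strings in its
# driver or description column.
list_ib_nics() {
    esxcli network nic list | grep -i -e mellanox -e ipoib
}

# Only call esxcli where it exists, so the script is harmless elsewhere.
if command -v esxcli >/dev/null 2>&1; then
    esxcli software acceptance get
    list_mlx_vibs || echo "no mlx VIBs found"
    list_ib_nics  || echo "no InfiniBand NIC found"
fi
```

If either helper prints nothing, the driver bundle probably did not install cleanly, and it is worth re-checking the acceptance level and the VIB list before going further.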

Add VMkernel

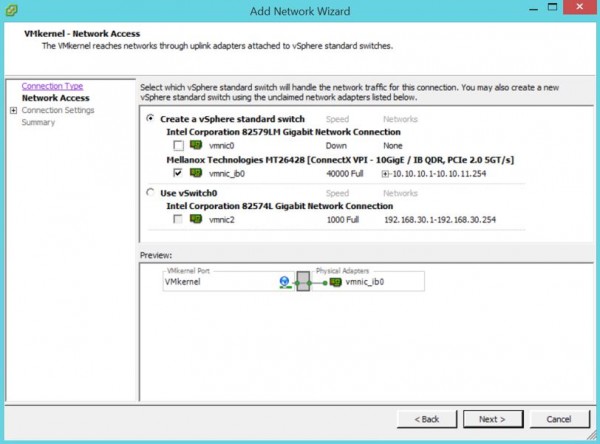

Add Mellanox ConnectX 2

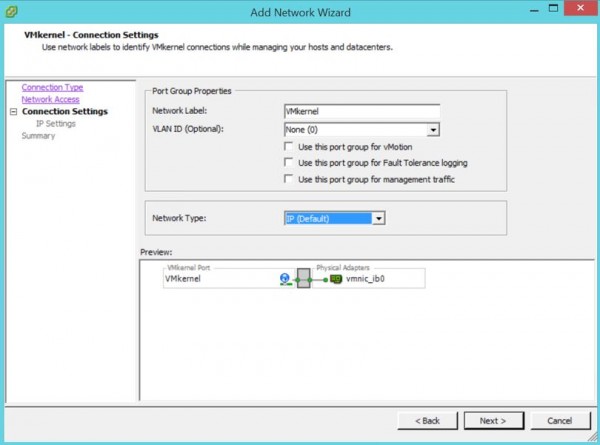

Under Connection Settings we are using IP as the network type to keep things relatively simple:

We can then utilize standard IP addressing and assign a static IP address.

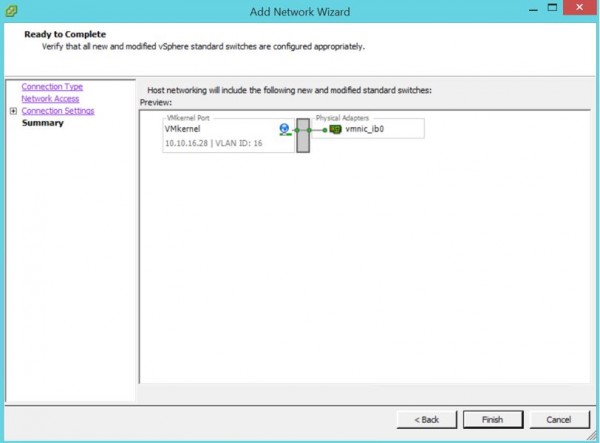
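For those who prefer the command line, the static address can also be set and checked with `esxcli`. This is a sketch under assumptions: `vmk1` as the VMkernel adapter name and the 10.10.10.0/24 addressing are placeholders that do not come from our setup, and the helper function names are hypothetical.

```shell
#!/bin/sh
# Sketch only: run in an SSH session on the ESXi host.
# Assumptions: vmk1 is the VMkernel adapter on the InfiniBand uplink and
# 10.10.10.0/24 is an example storage subnet. Adjust both for your hosts.
VMK="vmk1"
IP="10.10.10.11"
MASK="255.255.255.0"

# Assign a static IPv4 address to a VMkernel adapter.
set_static_ip() {
    esxcli network ip interface ipv4 set \
        --interface-name="$1" --ipv4="$2" --netmask="$3" --type=static
}

# Show the current IPv4 configuration of a VMkernel adapter.
show_ip() {
    esxcli network ip interface ipv4 get --interface-name="$1"
}

# Only call esxcli where it exists, so the script is harmless elsewhere.
if command -v esxcli >/dev/null 2>&1; then
    set_static_ip "$VMK" "$IP" "$MASK"
    show_ip "$VMK"
fi
```

Each host needs its own address on the same storage subnet, so change `IP` before running this on the second host.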

Again we have the VMware wizard summary:

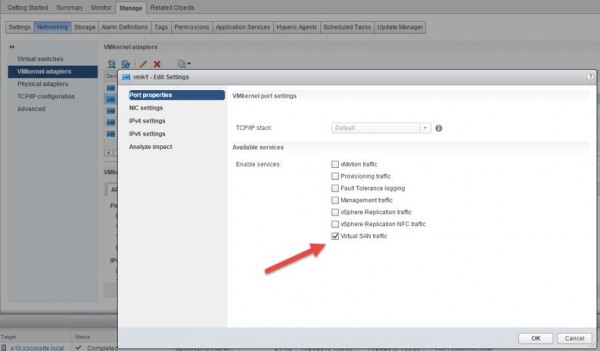

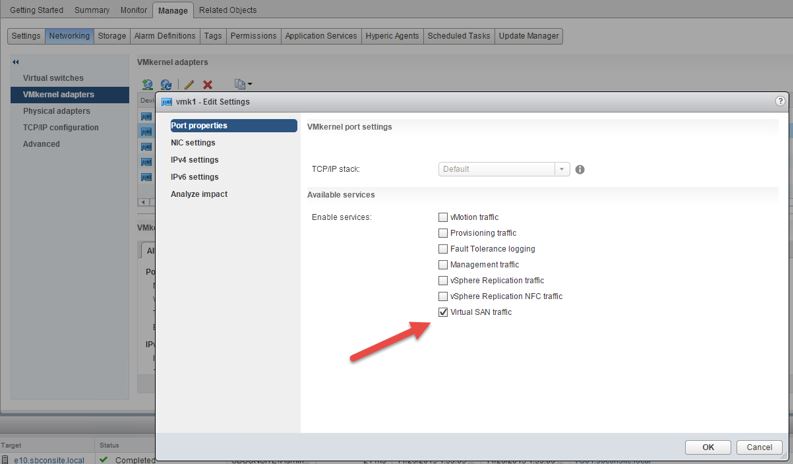

The last major step we are going to cover today is ensuring that we can use vSAN over this new interface. We need to edit the VMkernel adapter and ensure that “Virtual SAN traffic” is checked under “Enable services”.
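The checkbox has a command-line equivalent, which is handy when repeating the step on a second host. The sketch below assumes the adapter is named `vmk1` and that the vSAN network listing contains a `VmkNic Name: <adapter>` line. The helper names are hypothetical.

```shell
#!/bin/sh
# Sketch only: run in an SSH session on each ESXi host.
# Assumption: vmk1 is the VMkernel adapter on the InfiniBand uplink.
VMK="vmk1"

# Tag a VMkernel adapter for vSAN traffic.
enable_vsan_traffic() {
    esxcli vsan network ipv4 add -i "$1"
}

# Succeed if the adapter appears in the host's vSAN network configuration.
# Assumption: the listing contains a "VmkNic Name: <adapter>" line.
vsan_traffic_enabled() {
    esxcli vsan network list | grep -qw "VmkNic Name: $1"
}

# Only call esxcli where it exists, so the script is harmless elsewhere.
if command -v esxcli >/dev/null 2>&1; then
    vsan_traffic_enabled "$VMK" || enable_vsan_traffic "$VMK"
    esxcli vsan network list
fi
```

Once both hosts are tagged, running `vmkping -I vmk1` against the other host's storage address is a quick way to confirm that the IPoIB path works before building the cluster.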

Wrapping up Part 1

We started Part 1 of this guide by discussing some of the choices we made in selecting commodity hardware. We then documented how to set up the hosts, including the installation of the Mellanox InfiniBand driver. We then set up the network to handle our Virtual SAN traffic. Overall, using the above you should be able to get hosts up and running relatively inexpensively. Next up: setting up the cluster and vSAN. We will then show how this setup performs as we spin up multiple VMs. The goal of this series is to show how to set up a similar evaluation. For those looking to build something similar, you should head to the STH forums to find some great deals on similar hardware.

Where did you get your hands on the 1.8.3.0 driver? All Mellanox has released to the public so far is 1.8.2.4 and 2.3.3.0.

Is this a future edition that might be ESXi 6 compatible? I was doing this for a long time last year, but I had issues with 1.8.2.4 and ESXi 6.x PSODing because of the OFED driver, which isn’t supported by ESXi 6 (Dell C6100 and HP S6500 blades, same result).

Also, what firmware were you using on the ConnectX-2? It would be great if you could grab that information and share it.

1.8.3 is a beta driver. You can request it from https://community.mellanox.com/

Getting the OFED driver to load on ESXi is a challenge, which is why I used the subnet manager (SM) from the IB switch.

Firmware on the ConnectX-2 is 2.10.720. You can find it in the forum.

Cheers

How did you get multicast to work? I’m having trouble with a similar configuration.

I’m running:

ESXi, 6.5.0, 4887370

OFED 1.8.2.5

ConnectX-2 MHQH29C with 2.9.1000

IS5035 switch with subnet manager

I followed the instructions here: https://forums.servethehome.com/index.php?threads/10gb-sfp-single-port-cheaper-than-dirt.6893/page-9#post-145423

I am able to get IPoIB working and all of the hosts can ping each other.

However, multicast is not working. How can I get multicast to work? It is preventing vSAN from working.