Cavium made headlines in 2014 with its announcement of ThunderX, a multi-socket ARMv8 design. We covered the ThunderX announcement almost exactly one year ago. The prospect of a dual-socket-capable processor with 48 64-bit ARMv8 cores was tantalizing, as it meant potentially 96 cores per server. Today Cavium and Gigabyte are making that a reality with the launch of a four-server-in-2U machine, the Gigabyte H270-T70 with MT70-HD0 nodes. The concept is similar to the Dell PowerEdge C6100 setups we used in our Las Vegas, Nevada colocation: the nodes share power and cooling for greater efficiency. Gigabyte has recently announced other ARM servers based on AppliedMicro X-Gene and Annapurna Labs processors. Gigabyte appears to be pursuing a leadership position in ARM-based servers, and this Cavium ThunderX launch gives the company a larger platform.

Quick Cavium ThunderX Refresher

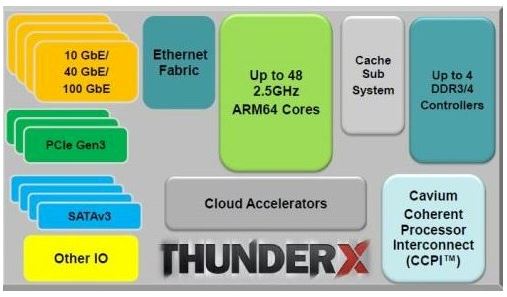

Since it has been almost a year since the announcement, here is a quick diagram of the basic blocks that make up the Cavium ThunderX chip:

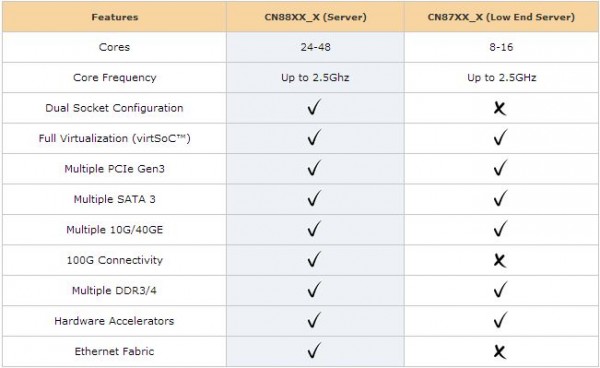

One can see that these are very much SoC designs with storage and networking built in. Unlike competing 64-bit ARMv8 designs, Cavium ThunderX is going after the Intel Xeon E5 line. Based on the performance numbers we have seen thus far, expect a single ThunderX ARMv8 core to be closer in performance to an almost two-year-old Intel Atom C2000 series processor than to a modern Haswell-EP or Broadwell-EP/DE core. Nevertheless, Cavium is big-game hunting with high-end features.

The Gigabyte H270-T70 / MT70-HD0

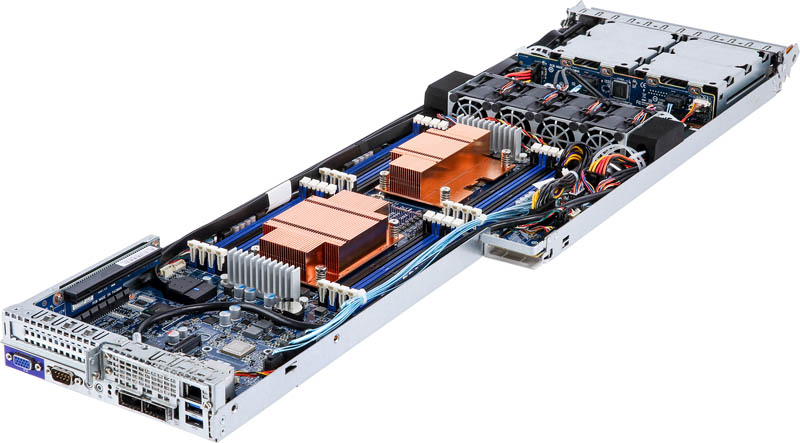

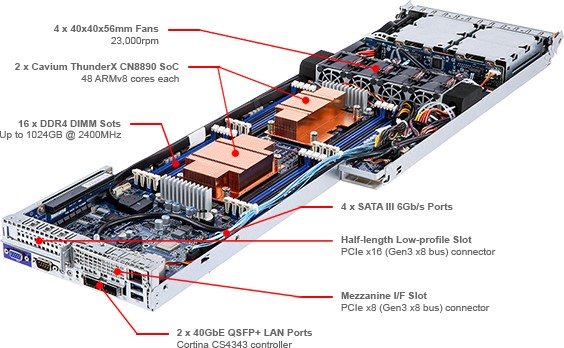

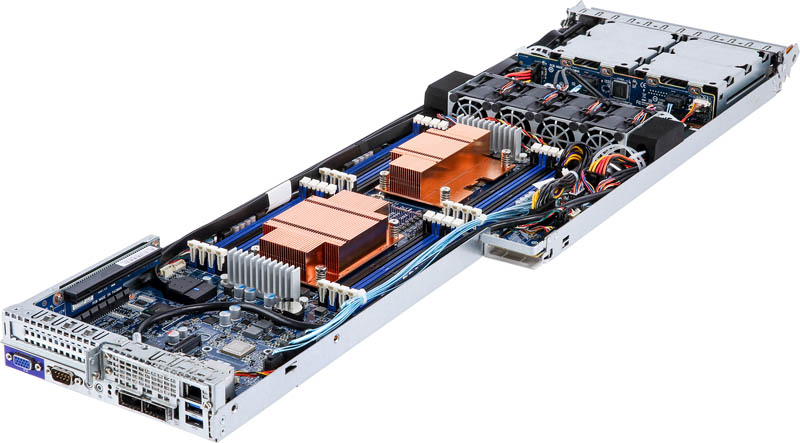

Looking first at one of the Gigabyte H270-T70 nodes from the top, one can see the dual Cavium ThunderX CN8890 processors configured in-line, much as in higher-density Xeon E5 designs. The eight DDR4 DIMM slots per processor give 16 DIMMs per node, so with 64GB DIMMs one can install up to 1TB of 2400MHz DDR4 RAM per node.
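The 1TB figure is simple multiplication. Here is a minimal Python sketch, assuming every slot on both sockets is populated with a 64GB DIMM; the constant and function names are illustrative only.

```python
# Back-of-the-envelope memory capacity for one node, using the figures above:
# 2 sockets, 8 DIMM slots per socket, 64GB DIMMs in every slot (assumed fully populated).
SOCKETS_PER_NODE = 2
DIMM_SLOTS_PER_SOCKET = 8
DIMM_SIZE_GB = 64


def max_memory_gb(sockets: int, slots_per_socket: int, dimm_gb: int) -> int:
    """Return the maximum installable memory in GB when every slot is filled."""
    return sockets * slots_per_socket * dimm_gb


node_gb = max_memory_gb(SOCKETS_PER_NODE, DIMM_SLOTS_PER_SOCKET, DIMM_SIZE_GB)
print(f"{node_gb} GB per node ({node_gb / 1024:.0f} TB)")  # 1024 GB per node (1 TB)
```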

Copper heatsinks rather than aluminum ones generally indicate hotter chips. However, these are in-line processors in what is essentially a packed 1U half-width chassis, so we are still interested to see how hot and power-hungry these chips actually are. Cavium is a fabless company, so ThunderX is not manufactured on Intel's latest 22nm or 14nm process.

Here is the annotated view of the server node:

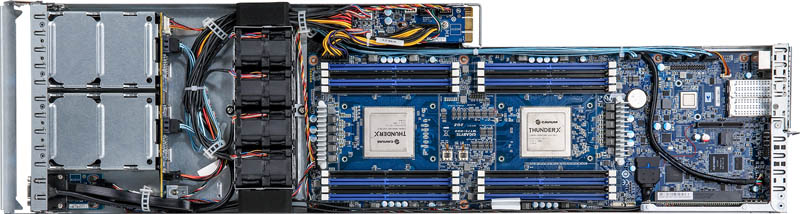

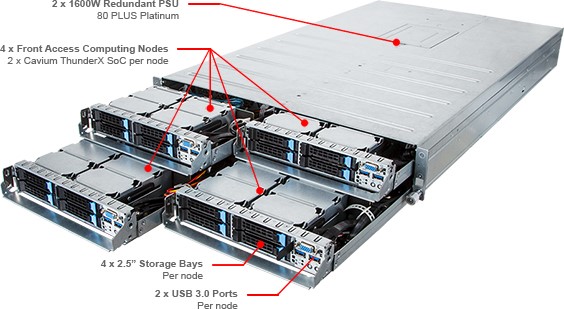

Here is a shot of the four nodes, which can be swapped out. This is a great feature that has already saved us many hours of maintenance in our Las Vegas colocation.

And the annotated view of the chassis:

One of the more interesting features is the redundant 1600W PSUs. With four nodes, that means 400W is allocated to each node. After setting aside an 8W buffer per SATA SSD (32W per node in total), that leaves 368W for the server node itself (minus some for the shared cooling). That is certainly respectable given the integrated dual 40GbE networking and expansion capabilities.
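The per-node budget works out as follows. This is a rough Python sketch under stated assumptions: 1600W is treated as the usable capacity of the redundant PSU setup, the 8W-per-SSD buffer is applied to a hypothetical four SSDs per node (the count implied by 400W minus 368W), and shared cooling is not subtracted.

```python
# Rough per-node power budget for the four-node 2U chassis.
# Assumptions: 1600W usable (redundant PSUs), 8W buffer per SATA SSD,
# and a hypothetical 4 SSDs per node inferred from 400W - 368W = 32W.
PSU_WATTS = 1600
NODES = 4
SSD_WATTS = 8
SSDS_PER_NODE = 4  # hypothetical, inferred from the article's arithmetic


def node_power_budget(psu_w: int, nodes: int, ssd_w: int, ssds_per_node: int) -> tuple[int, int]:
    """Return (watts allocated per node, watts left after the SSD buffer).

    Shared cooling overhead is NOT subtracted here.
    """
    per_node = psu_w // nodes
    after_storage = per_node - ssd_w * ssds_per_node
    return per_node, after_storage


per_node, after_storage = node_power_budget(PSU_WATTS, NODES, SSD_WATTS, SSDS_PER_NODE)
print(per_node, after_storage)  # 400 368
```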

One can see that the chassis is built for serviceability, as each node has both front and rear USB 3.0 ports as well as VGA ports for KVM carts. The servers also have an RJ-45 port for IPMI remote management. There appears to be an ASPEED AST2400 IC on the motherboard, so we would expect the remote management to be fairly familiar.

Gigabyte did tell us that we should be getting access to a unit. We will be sure to get Linux-Bench running on one as soon as we can!
