Other Build Bits

Once we had the chassis, CPU, and motherboard aligned, we still had a few more bits to get together.

One of the quirks of using the SilverStone CS381 is that it uses an SFX or SFX-L power supply. These days, SFX PSUs are plenty powerful and quiet, but they are also more costly. Needing a small PSU in a big chassis like the CS381 seems counterintuitive.

As a quick note here, you do not have to use a SilverStone PSU with the CS381. We just did so to match.

Memory was easy. We are using 2x 32GB DDR4 ECC RDIMMs. We would have used 2x 16GB, but the 32GB modules were already installed. We see some people putting 128GB of memory in small ZFS servers like this. Even 64GB is overkill for most when running a few smaller VMs such as a Ubiquiti UniFi controller. Still, we had lots of extra DDR4-2133 and DDR4-2400 because most of the memory we are using these days is DDR4-2933 or DDR4-3200. Since it was there, and the slots were open, why not?

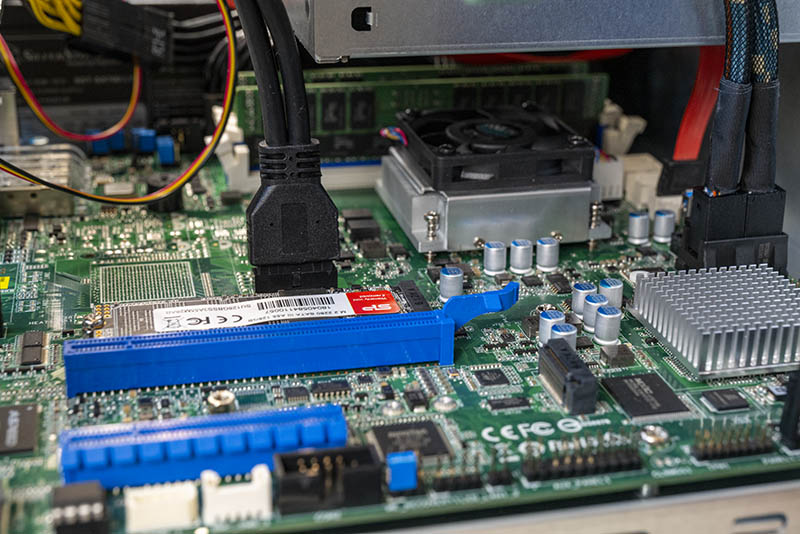

For boot media, we would normally use a SATA DOM, but we did not have an easy SATA DOM power source on the motherboard. Instead, we are using a small boot drive, which again saves cost and power.

For hard drives, we are mostly using Western Digital EasyStore shucks in 10TB capacities. We actually have a great reason for this, and it relates to the next series we are doing with the HPE ProLiant MicroServer Gen10 Plus after our Ultimate Customization Guide. If you think this is a cool build, just wait. An interesting point about our 10TB drives is that they are all from different purchase batches. They were manufactured on different days and in different months. We do this intentionally, even on small servers, as it helps mitigate the risk of a bad batch of drives.

We also added a SATA SSD (Intel DC S3610 480GB) simply because we had them here and figure we can use it for L2ARC/VM duties.

This was selected because it was what we had on-hand.

Building the FreeNAS TrueNAS Core ZFS mATX Appliance

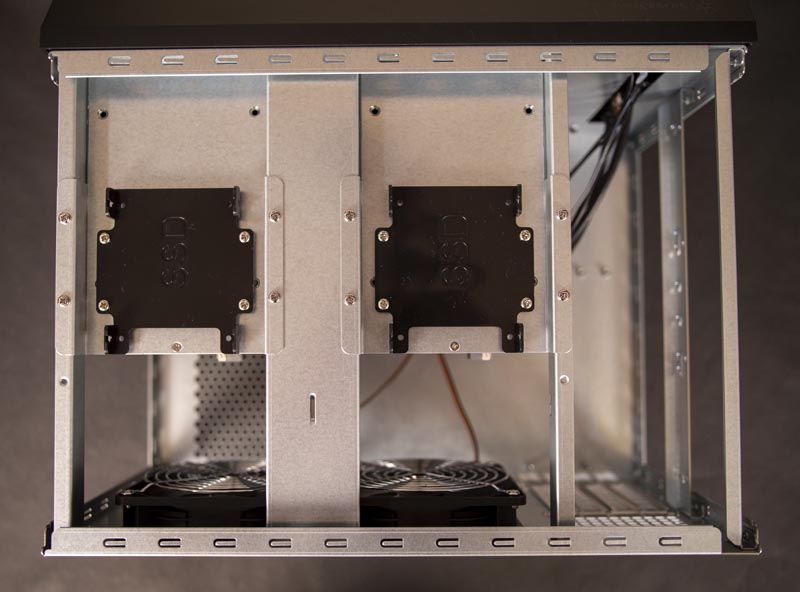

The build process was extremely simple. A nice feature of embedded boards like the one we are using is that we did not need to add a single PCIe card to get the drive connectivity or the 10GbE networking for the build.

We want to reiterate something from our original SilverStone CS381 Review: there are so many screws when building this platform. It is not difficult, but the sheer number of screws in this chassis feels almost oppressive. Still, it is a one-time deal.

Overall, the build process took about 90 minutes. That is not too long for a system like this, but it is also much longer than using a pre-built solution.

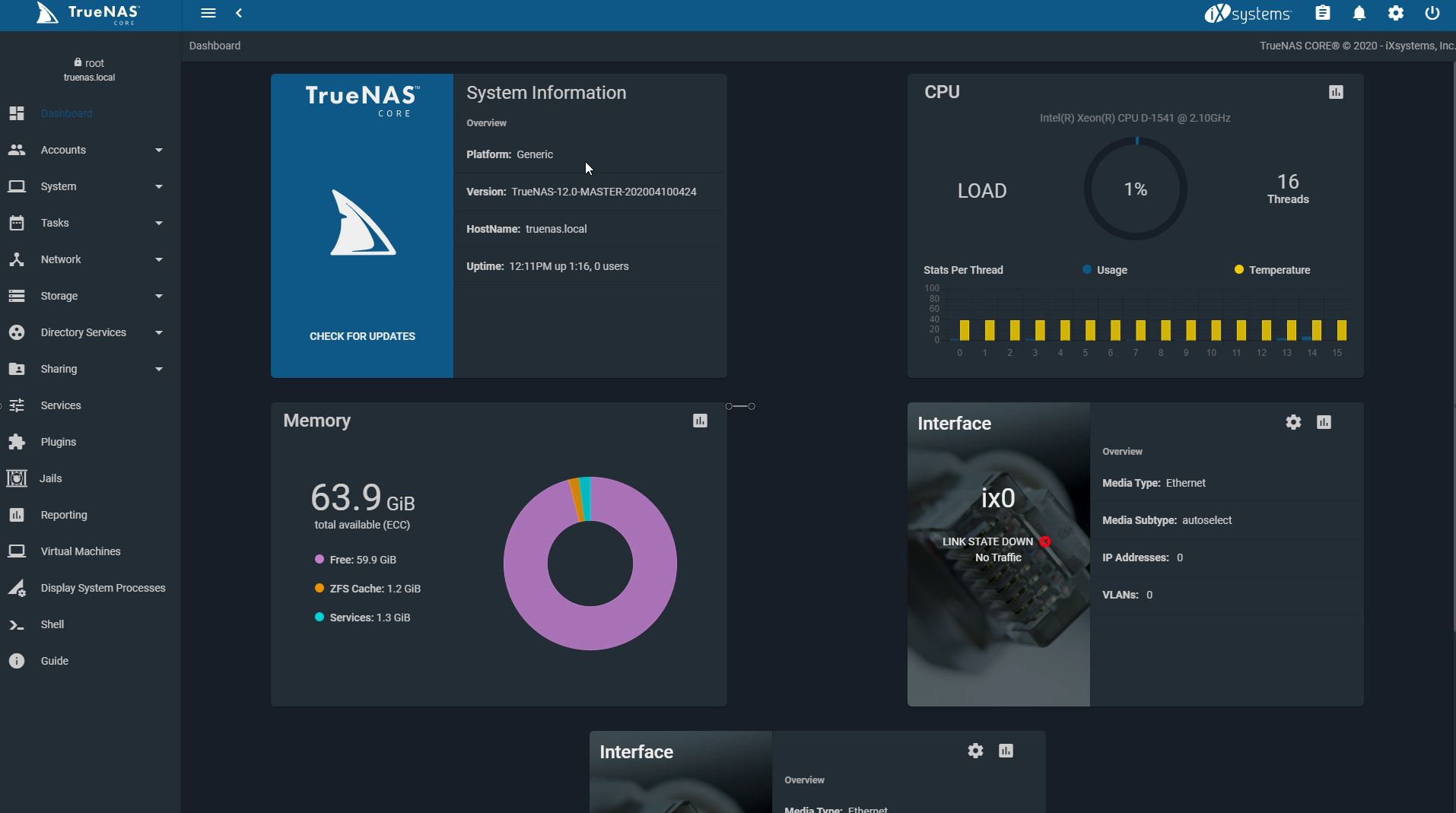

Getting TrueNAS Core Running

Luckily, this part was a breeze. One of the benefits of using embedded hardware that has been on the market for a long time is that everything installs. We could install virtually any OS and it would work out of the box.

We were originally going to have a big section here on how to set up TrueNAS Core on a system like this, especially given it will not be released for several weeks. Instead, the process was:

- Log into remote iKVM

- Load TrueNAS Core nightly build ISO

- Boot System

- Follow the handful of command prompts to install and set a default password

- Reboot

- Log in to TrueNAS Core

The longest operation, by far, was copying the OS files over the remote iKVM. The ASRock Rack platform we are using still has the AST2400 generation of BMC, and sometimes those are not the fastest. From there, setting up a RAID-Z2 array and network shares was very easy.

Again, this is the advantage of using a proven platform for storage. Everything just works out of the box. After the system booted, we set up our array and SMB sharing. Within 5-10 minutes, we were transferring files over the 10GbE network port.
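As a rough illustration of what RAID-Z2 costs in capacity, here is a minimal Python sketch. It assumes a single vdev of eight 10TB drives (the drive count is an assumption for illustration) and ignores ZFS metadata, padding, and TB/TiB conversion, so real usable space will be lower. The function name `raidz_usable_tb` is hypothetical.

```python
def raidz_usable_tb(drive_count: int, drive_tb: float, parity: int = 2) -> float:
    """Rough usable capacity of one RAID-Z vdev, before any ZFS overhead.

    parity=2 models RAID-Z2: two drives' worth of space goes to parity.
    """
    if drive_count <= parity:
        raise ValueError("a RAID-Z vdev needs more drives than parity devices")
    return (drive_count - parity) * drive_tb


if __name__ == "__main__":
    # Assumed layout: eight 10TB drives in a single RAID-Z2 vdev.
    raw = 8 * 10.0
    usable = raidz_usable_tb(8, 10.0)
    print(f"raw: {raw:.0f} TB, approx. usable: {usable:.0f} TB")
```

Under those assumptions, two of the eight drives' worth of space is spent on parity, which is the price of surviving any two drive failures.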

While we had plenty of opportunities to push boundaries, instead we had something that was super simple to set up. If you generally understand NAS basics, you can go from bare system to storing data very fast without using a CLI. Synology, QNAP, and others have more polished interfaces that hide some of the less attractive bits such as the mid-1990s themed FreeNAS installer, but in terms of actual difficulty, this was probably a 1 out of 10. The FreeNAS/TrueNAS Core teams have done a great job making some of the directional decisions that greatly enhanced the user experience here.

Next, we are going to discuss power consumption before getting to some final thoughts.

They should sell a FreeNAS MiniXXL with this setup for those that need more than Atom but still want a smaller FF.

Stop trying to make me subscribe to watch your content. It is stupid and doesn’t work on mobile.

We do not have subscribe-to-watch filters. Everything is on YouTube or the STH main site without a registration wall.

Any performance issues with semi-disregarding the generally accepted wisdom/paradigms of the TrueNAS/FreeNAS gurus of recommending 1 GB of RAM per TB of storage? (Any benefits at all of running 128 GB over 64 GB?)

@Mark Dotson,

that 1GB RAM per 1TB storage is only partially true. Look at the official hardware requirements/recommendations for FreeNAS: https://www.freenas.org/hardware-requirements/

In short (and not considering any RAM requirements of any other services running on your FreeNAS box), 8GB RAM is minimum and sufficient for 8 drives. Then, for any additional drive, an additional minimum of 1GB per drive is recommended. Note that those requirements/recommendations are not based on a RAM-per-storage-capacity metric, but rather based on RAM-per-drive.

Note that additional RAM can and will be utilized by the ZFS ARC for increased performance. Like with any other cache, how much of a performance gain a particular cache size will bring depends significantly on the usage scenario(s) that define the cache access patterns and the tiered cache hierarchy configuration (ARC and L2ARC).
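To make the difference between the two rules of thumb discussed here concrete, the following Python sketch compares them. It is only an illustration of the arithmetic as stated in these comments (8GB for up to 8 drives plus 1GB per extra drive, versus 1GB per TB of storage); the function names are hypothetical and neither is an official sizing tool.

```python
def ram_by_drive_count_gb(drive_count: int, base_gb: int = 8,
                          base_drives: int = 8, gb_per_extra_drive: int = 1) -> int:
    """Per-drive rule: base_gb covers the first base_drives drives,
    then gb_per_extra_drive is added for each drive beyond that."""
    extra_drives = max(0, drive_count - base_drives)
    return base_gb + extra_drives * gb_per_extra_drive


def ram_by_capacity_gb(raw_tb: float, gb_per_tb: float = 1.0) -> float:
    """Per-capacity rule of thumb: gb_per_tb of RAM for each TB of storage."""
    return raw_tb * gb_per_tb


if __name__ == "__main__":
    # Example layouts (assumed): 8 and 12 drives of 10TB each.
    for drives, tb_each in [(8, 10), (12, 10)]:
        per_drive = ram_by_drive_count_gb(drives)
        per_tb = ram_by_capacity_gb(drives * tb_each)
        print(f"{drives} x {tb_each}TB: per-drive rule {per_drive}GB, "
              f"per-TB rule {per_tb:.0f}GB")
```

With large modern drives the two rules diverge by roughly an order of magnitude, which is why the choice of rule matters so much for build cost.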

What a coincidence!

I’m just waiting for delivery of the last four disks. Should arrive in the next hour or two.

My build:

CS381

450W Seasonic FOCUS SGX, Full Modular, 80PLUS Gold

X11SSH-CTF

E3-1260L V5 (also 45W, fewer cores than the D-1451, but higher per core clock)

64GB ECC

8 * DC520 (mix of SE/ISE,SAS/SATA so they’re from different mfg batches)

PMC NV1604 Flashtec NVRAM (if I can ever get it to work – it’s been EOLed so no control s/w) :(

Another option for the motherboard would be the Intel D-21xx based boards. The TDP is higher, but performance per core is better. You make up for the higher TDP with future expansion options or more flexibility now when you consider the boards it is used on, like the X11SDV-8C-TP8F from Supermicro. Lower price and you get 2x SFP+, 2x 10GBase-T, 4x 1GBase-T, SATA DOM, M.2 NVMe, PCIe x8 and x16 slots, and it’s VMware certified. Perfect for a FreeNAS, SOHO/lab firewall/router, and room for a home automation guest or other lightweight options.

I’m running the X11SDV-8C-TP8F in the CS381 chassis. Adapting a CU cooler to fit was the most difficult part of the build, as the CPU sits partly under the drive trays.

How do you like it so far? I just finished building mine. Using a Plink web2208 rackmount case. Still waiting on new drives but I threw my old ones in it and booted it about 30 minutes ago. Haven’t had time to get ESXi on it yet. Project for tomorrow.

Dr. Madness said: “8GB RAM is minimum and sufficient for 8 drives.”

What is your source for this info regarding RAM amounts?

Perhaps someone should advise their TrueNAS/FreeNAS/ZFS experts they’ve had it wrong for years…

They (the TrueNAS folks) seemed pretty clear that their RAM recommendation was 8 GB minimum plus 1 GB of RAM per TB of storage capacity, not simply the ‘number of drives’ or ‘add one GB for each drive above 8’ (which no one has EVER uttered or implied in the TrueNAS forums in the 4 years I’ve been reading them). I’m also reasonably certain there would logically be more RAM overhead involved in managing and correcting issues with eight 16 TB drives vs. eight 80-100 GB drives from 12 years ago, for instance…

EDIT: I do see Dr. Madness’ recommendations now listed officially as the minimal RAM recommendations on the TrueNAS page. Seeking clarification as to why and when the recommendation was so drastically lowered… (Certainly makes TrueNAS a more affordable solution, hardware wise, if only 8 GB or 16 GB is sufficient now instead of 128 GB)

Do you need a storage controller that supports JBOD for ZFS? Or create 8 RAID-1 arrays on the LSI controller?

1GB of ram / TB is only if you plan on using dedupe. I’ve got 128GB of ram in a box with 96TB Usable (144TB RAW), however that is overkill (I got a GREAT deal on the RAM). When I was running 64GB it was more than enough as well, my machine never struggles. IF you run DEDUPE, then yes it’s recommended, otherwise 32-96GB (depending on what you want to do with the box)

It’s more cost-effective to buy a complete server these days. I had actually considered building my own but ended up buying an HPE server and building around it.

An HPE ML110 Gen10 with an 8 core Xeon Silver 4208 and 16GB of RAM is only $850:

https://www.provantage.com/hpe-p10812-001~7HEWY349.htm

STH review: https://www.servethehome.com/hpe-proliant-ml110-gen10-review/

Another 4 bay LFF drive cage is $105 (comes with cabling):

https://www.provantage.com/hpe-869491-b21~7HPE931F.htm

Another 16GB RDIMM is $175 (I know you can get memory much cheaper, but for this exercise I’m using real deal HPE labeled memory):

https://www.provantage.com/hpe-815098-b21~7HPE92F8.htm

You can get 8 real HPE drive sleds on eBay for around $60.

https://www.ebay.com/itm/LOT-OF-4-651314-001-HPE-TRAY-FOR-3-5-SAS-SATA-DRIVE-TRAY-DL160-DL380p-G8-G9/133331123957 (I have actually bought from this vendor before)

Likewise, you can get iLO Advanced on eBay for $30:

https://www.ebay.com/itm/HPE-iLO-Advanced-License-LIFETIME-GUIDE-2-3-4-5-ALL-Servers-FAST/253721355479

If you don’t want to use the built-in SATA ports, I bought a Broadcom/LSI 9400-16i HBA for $275 on eBay, though of course older cards are even cheaper.

Apples to apples, that’s $1,220, or just a tad under $1,500 with an HBA, for a much better system with a warranty and support. Even if you paid full retail for iLO Advanced instead of getting it on eBay you still come out way ahead.
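For anyone who wants to check the math, the totals above can be reproduced with a few lines of Python. The prices are the ones quoted in this comment; the labels and variable names are just for illustration.

```python
# Prices in USD as quoted in the comment above.
PARTS_USD = {
    "HPE ML110 Gen10 (Xeon Silver 4208, 16GB)": 850,
    "Additional 4-bay LFF drive cage": 105,
    "Additional 16GB HPE RDIMM": 175,
    "8 HPE drive sleds (eBay)": 60,
    "iLO Advanced license (eBay)": 30,
}
HBA_USD = 275  # optional Broadcom/LSI 9400-16i HBA (eBay)

base_total = sum(PARTS_USD.values())
total_with_hba = base_total + HBA_USD

if __name__ == "__main__":
    for name, price in PARTS_USD.items():
        print(f"{name:45s} ${price:>5,}")
    print(f"{'Total without HBA':45s} ${base_total:>5,}")
    print(f"{'Total with HBA':45s} ${total_with_hba:>5,}")
```

The base configuration comes to $1,220 and the HBA brings it to $1,495, matching the figures quoted.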

I also just built a FreeNAS box with a D1541D4U-2O8R. For me, the excellent price and feature set of that board made it a no-brainer.

I originally planned on going with a C3758 based board, but there was none with SFP+ ports other than the A2SDI-H-TP4F for more than 2x the price.

I ended up getting a tiny 128 GB M.2 2230 for $30 as a boot drive.

I went with 4x 16GB DDR4-2400 RDIMMs. It seems to be true on this board that if you only populate 2 RDIMMs the RAM only comes up as DDR4-2133. (Still waiting on the extra 2 sticks to verify)

The only thing that I haven’t decided is whether I need an L2ARC, and if so, do I go with another M.2 NVMe (only PCIe 2.0 x1) or a SATA SSD?

I built this same setup for my first NAS. I purchased the MB from Newegg for $299.00, a steal, with only 32GB of RAM. It runs my Plex and UniFi software with ease. Next step is more RAM and surveillance software. Great article and now I can show some friends. They said it was overkill, but that’s what we do, right?

I’m thinking of doing a build in this case myself, but I’m concerned about the HDD temps. Does anyone know how high they are during normal usage?

The Silverstone CS381 seems like a decent case. Better airflow than most, especially if you need the hot swap drives.

I prefer the Fractal Design Node 804. More and larger fans. It can still take full size PCI cards and full size ATX power supplies. It puts the board in a separate airflow pattern than the power supply and drives. A bit unique on form factor since it’s a big (almost) cube.

https://www.fractal-design.com/products/cases/node/node-804/

What a joke, NAS with $1300 motherboard but the HDD shucks…

Did you flash the LSI 3008 into IT mode for this setup?

Hello, I’m planning my first FreeNAS build. I’m a colorist and need a shared storage solution to work directly out of.

I’ve been planning a build around the ASRock X570D4U-2L2T. However, after reading this article I found a really cheap D1541D4U-2T8R on eBay and was wondering if it would be a better option to get that instead. It’s a really old design, though, and I don’t know if it makes sense to buy that in 2021…

What would you recommend?

Did you ever measure the fan noise? How did that turn out?