The AMD Instinct MI300X

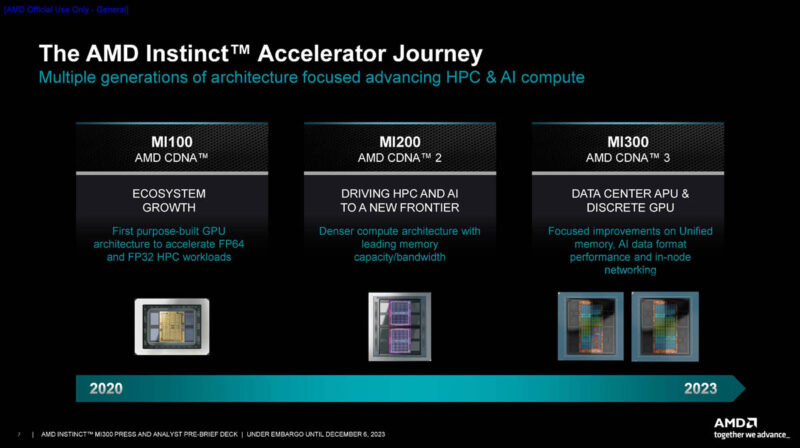

We are starting with the AMD Instinct MI300X, the GPU variant. AMD has been on this path for some time, beginning with the MI100 several years ago.

We have included AMD's strategic pillars slide in this article for those who are interested.

Some time ago, AMD split its GPU architecture into RDNA for graphics and CDNA for compute. We are now on CDNA 3, which focuses more on AI than previous generations. While we still call these GPUs, realistically, they are GPU-style compute engines.

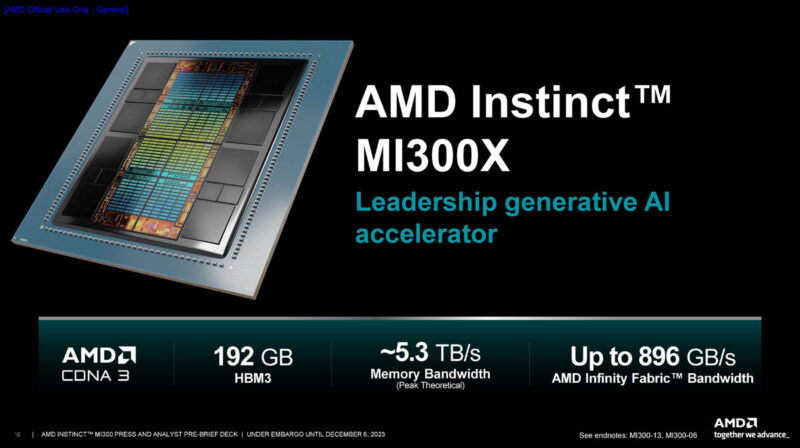

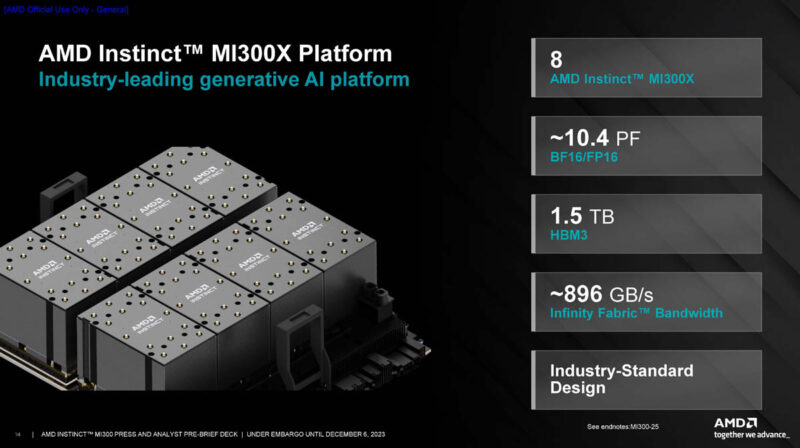

The AMD Instinct MI300X is the company’s big GPU. It has 192GB of HBM3 per accelerator, along with a large amount of memory bandwidth and Infinity Fabric bandwidth. These figures are clearly chosen to be competitive with NVIDIA, and AMD is leaning into the comparison.

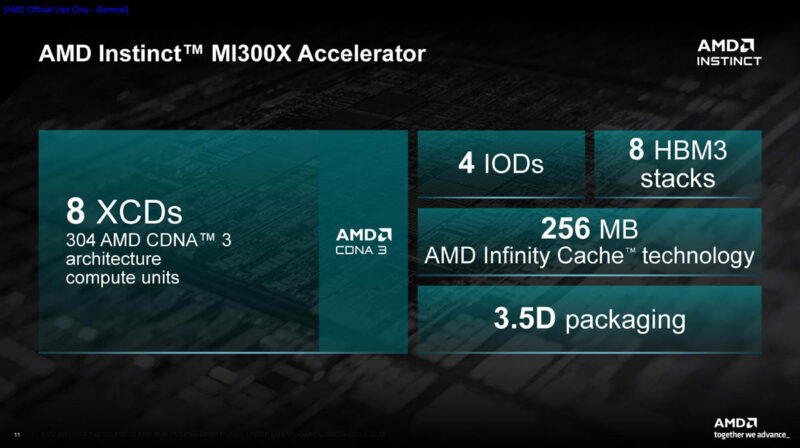

This is not just a GPU; it is a technological marvel. There are 8 XCDs for compute, 4 IO Dies, 8 HBM3 stacks, 256MB of cache, and 3.5D packaging, all of which we will cover in more detail in our architecture and packaging sections.
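As a quick sanity check on the memory figures, dividing the 192GB per accelerator across the eight HBM3 stacks gives the capacity each stack provides. This assumes capacity is split evenly across the stacks, which is our assumption rather than something stated on AMD's slides:

$$
\frac{192\ \text{GB}}{8\ \text{HBM3 stacks}} = 24\ \text{GB per stack}
$$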

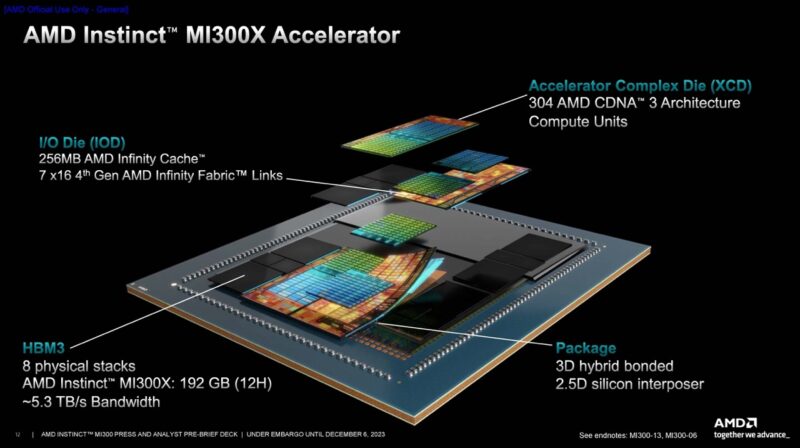

To show what this all looks like, here is AMD’s chiplet stack diagram, which illustrates just how much is going on in this package.

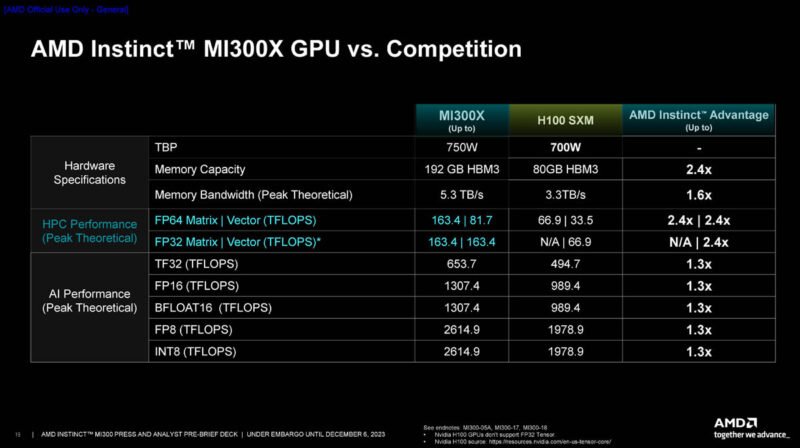

AMD’s topline numbers put this at roughly 2.4x an NVIDIA H100 in many key HPC compute metrics. On the AI side, the advantage is around 1.3x. Perhaps the big message here is that it is faster than an H100 (probably closer to an H200) in AI while offering more memory. With 153 billion transistors, it is a big chip.

AMD puts eight of these OAM modules onto a UBB to build an 8-way GPU system. The OAM platform was designed as a hyper-scale alternative to NVIDIA’s proprietary SXM form factors. It also does not use NVSwitch chips. These modules consume more power than the SXM5 modules NVIDIA uses, but that gap is realistically offset once NVSwitch power consumption is counted on the NVIDIA side.
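The memory capacity advantage at the platform level is simple arithmetic. This minimal sketch multiplies the 192GB per MI300X by the eight OAMs on a UBB. For comparison, it assumes 80GB per GPU on an 8-GPU HGX H100, which is our assumption and not a figure from AMD's slides. It only counts HBM capacity and says nothing about bandwidth, compute, or interconnect.

```python
# Back-of-the-envelope HBM capacity for an 8-GPU baseboard.
# From the article: 192 GB of HBM3 per MI300X, eight MI300X OAMs per UBB.
# Assumption (not from AMD's slides): 80 GB of HBM per H100 SXM5 GPU,
# with eight GPUs on an HGX H100 board.

MI300X_HBM_GB = 192
H100_SXM5_HBM_GB = 80  # assumed comparison figure
GPUS_PER_BOARD = 8


def board_memory_gb(per_gpu_gb: int, gpus: int = GPUS_PER_BOARD) -> int:
    """Total HBM capacity summed across every GPU on one 8-way baseboard."""
    return per_gpu_gb * gpus


mi300x_board = board_memory_gb(MI300X_HBM_GB)
hgx_h100_board = board_memory_gb(H100_SXM5_HBM_GB)
per_gpu_ratio = MI300X_HBM_GB / H100_SXM5_HBM_GB

if __name__ == "__main__":
    print(f"MI300X 8-GPU UBB: {mi300x_board} GB")          # 1536 GB
    print(f"HGX H100 8-GPU:   {hgx_h100_board} GB")        # 640 GB
    print(f"Per-GPU capacity ratio: {per_gpu_ratio:.1f}x")  # 2.4x
```

That works out to roughly 1.5TB of HBM3 across an 8-way MI300X platform. Under the 80GB-per-H100 assumption, the per-GPU capacity ratio is 2.4x.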

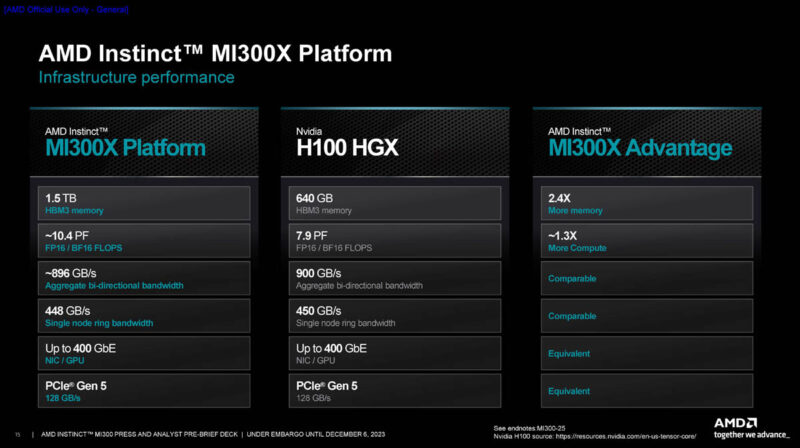

AMD does not call out the fact that, unlike NVIDIA, it is not using NVSwitches, but against the NVIDIA HGX H100 8-GPU system, it is very competitive.

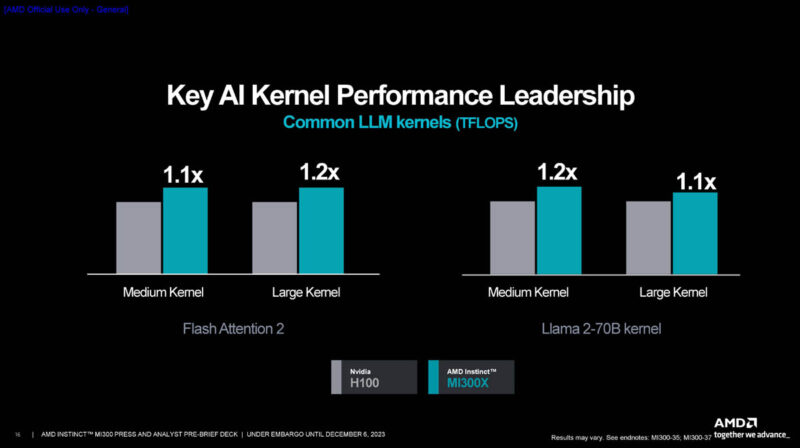

AMD says that, head-to-head, it is faster than NVIDIA when running AI workloads.

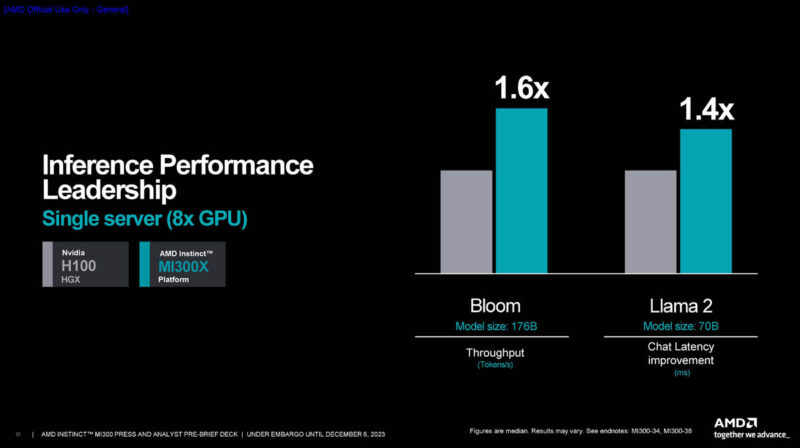

AMD also says it is significantly faster at inference, which is the bigger application.

At the platform level, AMD says it is about as fast as NVIDIA. At the same time, we need to be realistic here. NVIDIA does not rely on a single cherry-picked result; it has many different proof points on the training side.

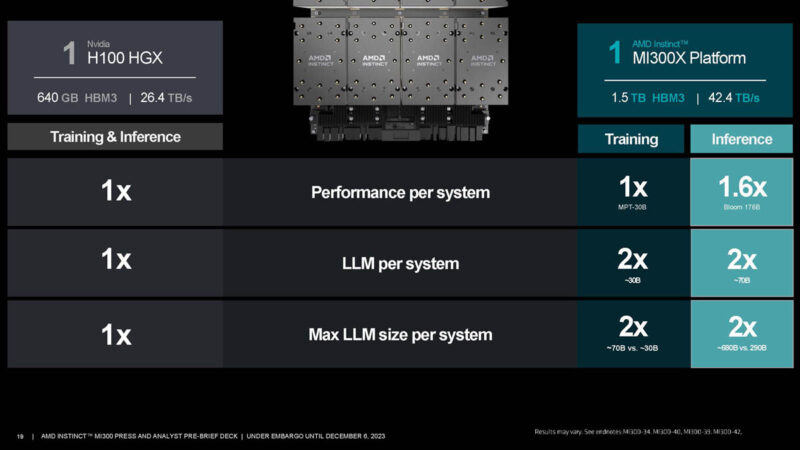

Here is AMD’s slide comparing against the HGX H100. We have no idea why AMD calls it the "H100 HGX." If you want to learn more about NVIDIA’s platforms, check out our NVIDIA DGX versus NVIDIA HGX: What is the Difference article.

AMD has a number of OEMs signed up to deliver MI300X systems. This is an opportunity for leaders in the NVIDIA AI space, like Supermicro, QCT, and Wiwynn, to offer new solutions. For traditional laggards in the NVIDIA AI space, like Lenovo and Dell, it is a chance to get into a market segment early.

AMD also has several cloud partners. Microsoft was on stage at the MI300 launch event but is not on these slides. Microsoft previously announced that the MI300X would be available in Azure, so perhaps the slides list only new customers. Microsoft will have its preview VMs available starting today.

Oracle Cloud was also on stage as a partner but is not on the slide either.

Next, let us get into the MI300X architecture. We will cover the MI300A on a subsequent page.
