We’re down to our third and final chipmaker keynote of the day. Closing out a busy day for press conferences is AMD, who this year gets the honor of holding CES’s official opening keynote. The subject of AMD’s keynote, like so many others this year, will be a broad focus on AI, with CEO Dr. Lisa Su presenting AMD’s vision for AI solutions for both consumer and enterprise customers.

AMD CES 2026 Keynote Live Coverage Preview

With a wide portfolio of chips these days, AMD has no shortage of products they can talk about in an AI-themed discussion. Besides big iron chips like the Instinct series, AMD has specialty accelerators, and even their bread & butter client SoCs have AI capabilities these days via their NPUs. So there’s plenty of latitude here to present products.

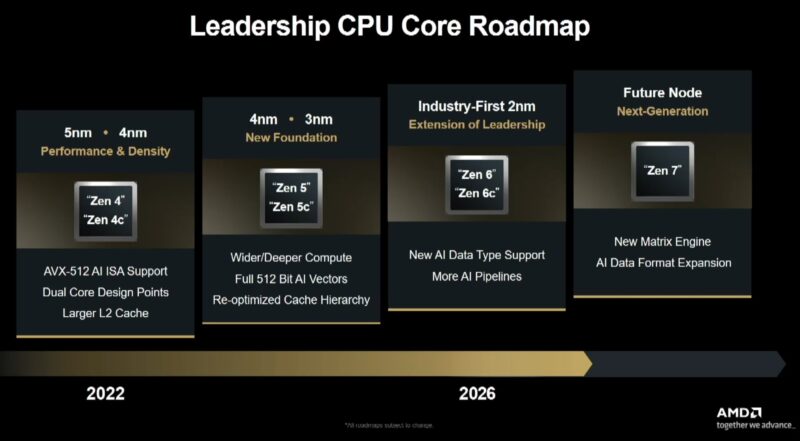

With that said, AMD is largely mid-generation on the bulk of its product line. Zen 5 is mid-cycle; so is RDNA 4, for that matter. So we’re not coming into CES 2026 expecting a ton of new consumer silicon from AMD. Enterprise is a bit more nebulous; AMD’s roadmaps are already locked in place, but as we saw with NVIDIA’s keynote earlier, it’s not too outlandish to announce or launch your enterprise hardware before it’s shipping in volume.

The calendar hold for AMD’s keynote is for 1 hour. Factor in the usual CES/CTA wrap-around material, and this should be a pretty brisk presentation. Lisa Su will be helming it, though it would be unusual for her to not hand off some individual segments to her lieutenants.

AMD CES 2026 Keynote Coverage Live

It’s 6 minutes past AMD’s scheduled start time, and we’re still waiting for things to get started. Hopefully this is just a brief delay.

And here we go! Starting with the CES/CTA intro materials.

CES 2026: Innovators Show Up

As is usually the case, the CTA president – currently Gary Shapiro – opens up the CES opening keynote.

“To introduce a leader – one of the bastions of CES”

And here’s Lisa.

“Every year I love coming to CES to see the latest and greatest tech.”

“We have a completely packed show tonight.”

“My theme for tonight is ‘you ain’t seen nothing yet’.”

And with that, Lisa is getting started.

“AMD technology touches the lives of billions of people every day”

AI is the #1 priority at AMD. AI is going to be everywhere. And it’s going to be for everyone.

AMD is building the compute foundation to make that future real.

AMD thinks AI use can grow to 5 billion (or more) active users within 5 years.

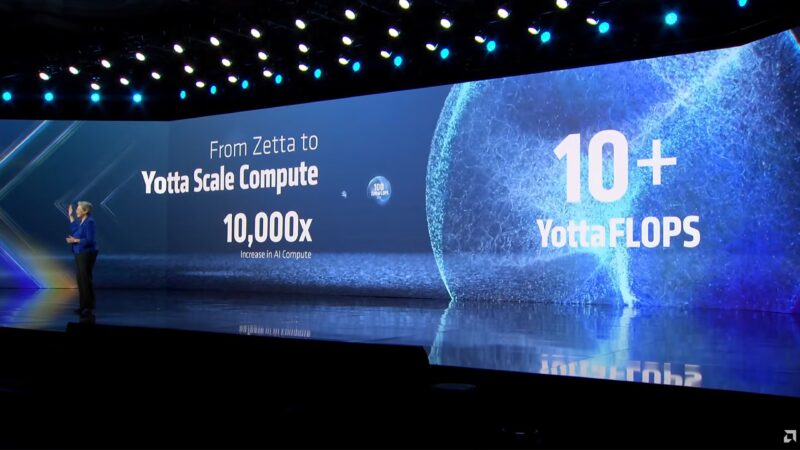

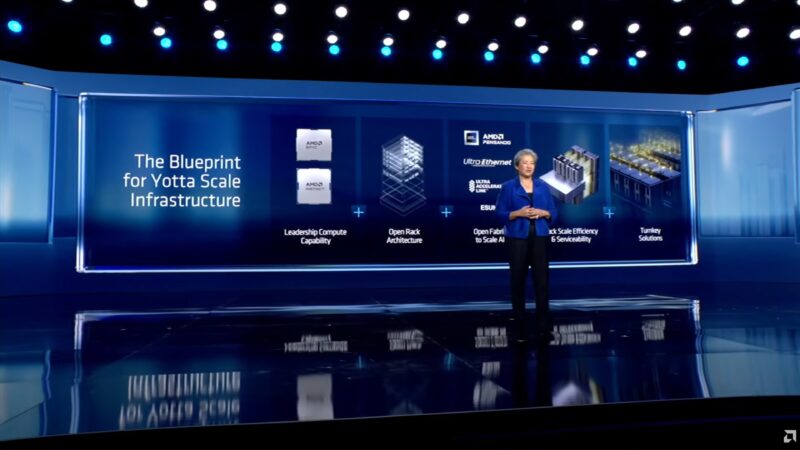

To enable AI everywhere, AMD needs to increase the world’s compute capacity over 100x over the next 5 years. To over 10 YottaFLOPS.
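As a quick back-of-the-envelope check on those figures, the sketch below works out what a 100x increase over 5 years would imply. The only inputs are the 100x and 10 YottaFLOPS numbers quoted in the keynote; the assumption of steady compound growth, along with the variable names, is ours for illustration and not something AMD presented.

```python
# Inputs taken from the keynote figures
growth_factor = 100      # "over 100x"
years = 5                # "over the next 5 years"
target_flops = 10e24     # 10 YottaFLOPS (1 yotta = 1e24)

# Assumption for illustration: growth compounds at a steady annual rate
annual_multiplier = growth_factor ** (1 / years)

# Implied starting point if the target is 100x today's capacity
implied_baseline_flops = target_flops / growth_factor

print(f"Implied annual growth: ~{annual_multiplier:.2f}x per year")
print(f"Implied baseline: ~{implied_baseline_flops / 1e21:.0f} ZettaFLOPS")
```

Under that steady-growth assumption, the target works out to roughly 2.5x more compute every year, starting from a baseline of about 100 ZettaFLOPS.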

To make all of this happen you need powerful computing hardware everywhere – both at the edge and in servers. And that means using the right kind of compute for each workload. Tuned for the application used.

Diving into specific hardware topics, Lisa is starting with the cloud.

Cloud computing is where the bulk of AI compute is taking place. Especially with the need for central, powerful systems for training.

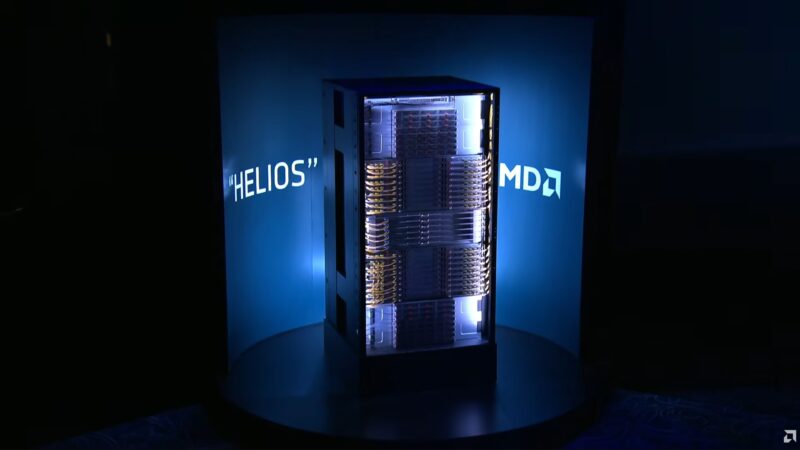

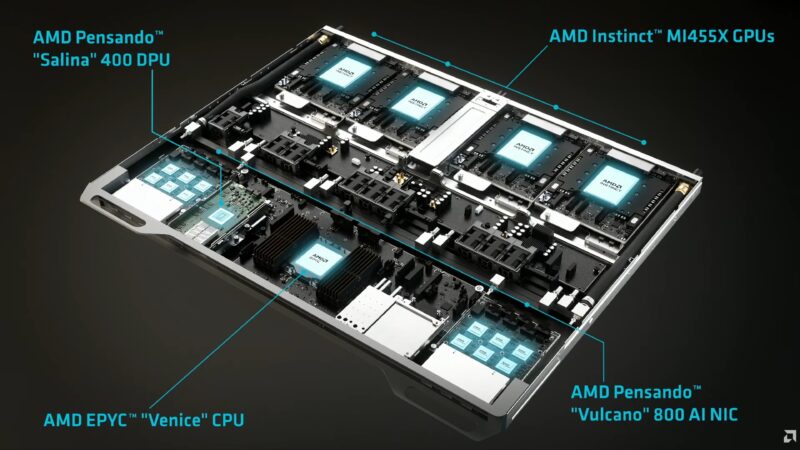

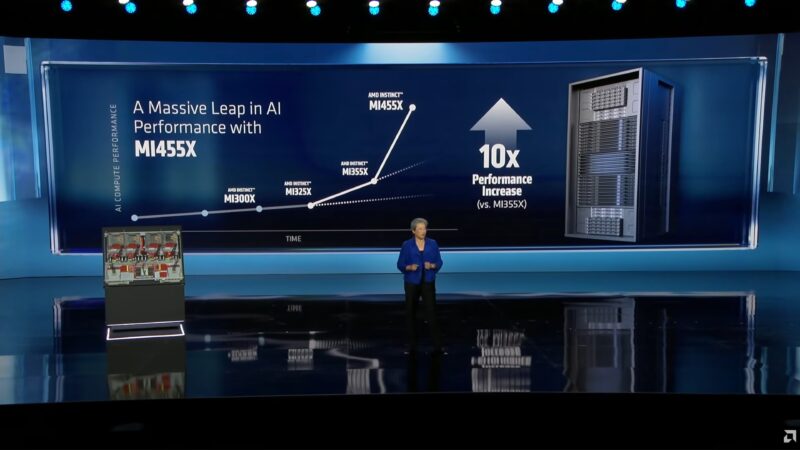

And this brings us to Helios, AMD’s next-generation rack-scale platform. As well as AMD’s Instinct MI400 series of accelerators.

Helios has 72 GPUs in a single rack, scaling out to thousands of GPUs over multiple racks. All while making use of AMD’s processors and their Pensando networking tech.

“Is that beautiful or what?”

Helios is a double-wide rack that weighs nearly 7000 pounds.

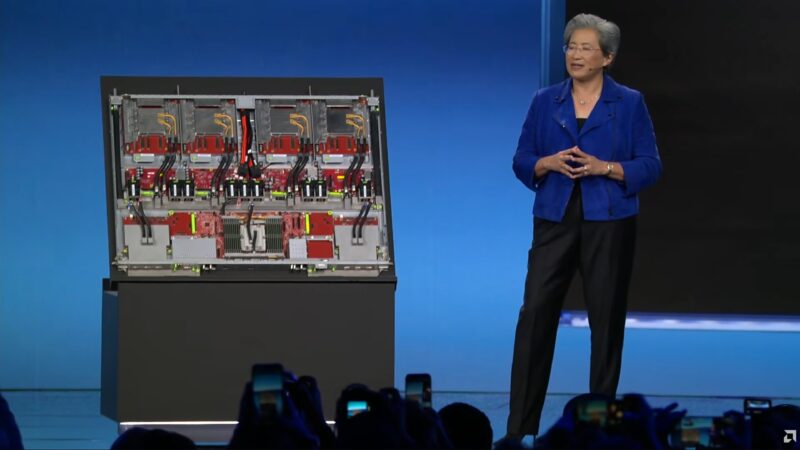

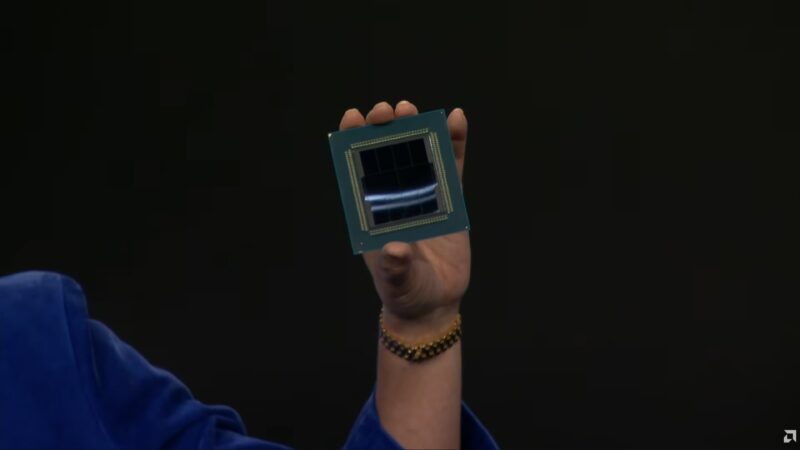

A look at the Helios compute tray. And holding up one of the massive MI455X GPUs that goes in it.

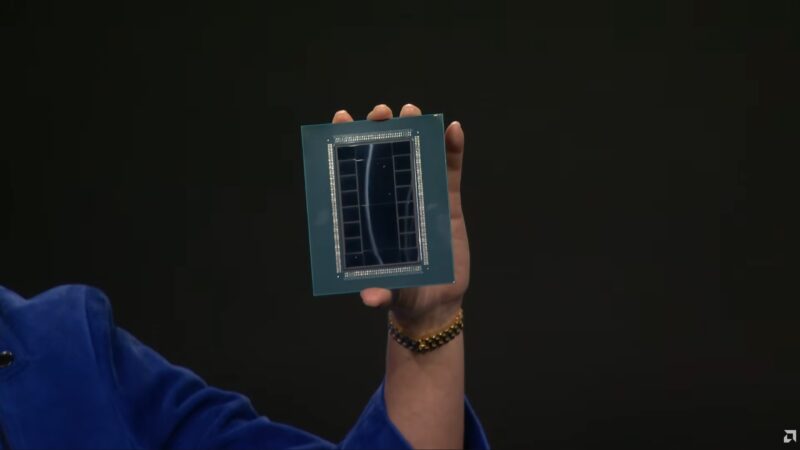

Lisa has a Zen 6 Venice chip to show off as well.

Each Helios rack has more than 18,000 CDNA compute units, 31TB of HBM4 memory, and 43 TB/second of aggregate scale-out bandwidth.
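For a sense of scale, the rack-level numbers can be divided across the 72 GPUs mentioned earlier. This is only a rough sketch: it assumes the resources are split evenly, uses the rounded totals quoted on stage, and the variable names are ours, so the results are approximations rather than official per-GPU specifications.

```python
# Rack-level figures quoted in the keynote
gpus_per_rack = 72
compute_units_per_rack = 18_000  # "more than 18,000", so treat this as a floor
hbm4_tb_per_rack = 31

# Simple per-GPU averages, assuming an even split across all GPUs
cus_per_gpu = compute_units_per_rack / gpus_per_rack
hbm4_gb_per_gpu = hbm4_tb_per_rack * 1000 / gpus_per_rack  # decimal TB -> GB

print(f"Compute units per GPU: at least {cus_per_gpu:.0f}")
print(f"HBM4 per GPU: ~{hbm4_gb_per_gpu:.0f} GB")
```

That comes out to at least 250 compute units and a little over 430 GB of HBM4 per GPU, with the usual caveat that rounded rack totals only give ballpark per-device numbers.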

Helios is exactly on track to launch later this year. AMD expects it will set the new benchmark for AI performance.

With more powerful hardware, developers can build bigger and better AI models.

Now on stage, Greg Brockman, President of OpenAI, to talk about how they use AMD’s hardware.

Greg wants to move from being an impersonal service with a text box to something people use for important moments in their lives. He thinks this will be the year we see enterprise agents take off.

Greg wants more compute (again). Agentic workflows require far more computational power, as they can run for an extended period of time as they invoke other agents.

And now he’s talking about various cases where ChatGPT was used for medical advice.

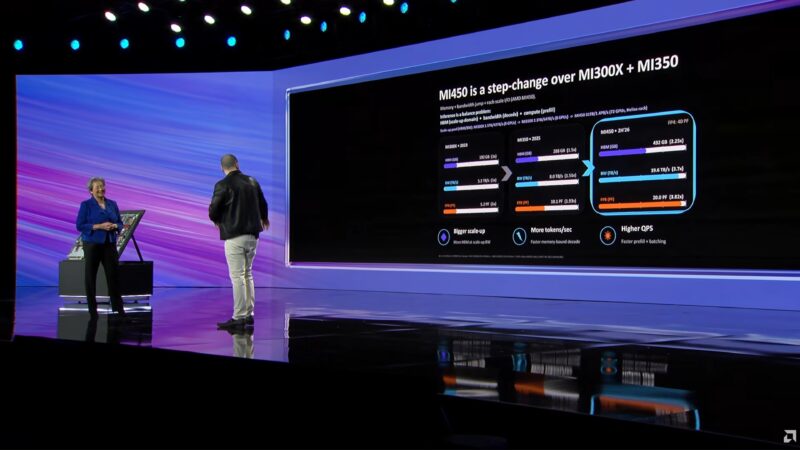

A slide comparing MI450 to MI300/MI350 that was put together by ChatGPT.

What does the world look like in a few years? Greg thinks that GDP growth itself will be constrained/boosted by the amount of computational power that is available. Not only providing services to end-users, but optimizing processes that already exist today. “Hard problems.”

And that’s OpenAI.

Back to Lisa and AMD’s Data Center hardware.

As well as MI455X there will be MI440X for 8-way configurations. And MI430X for sovereign compute and classical HPC.

“AMD is the only company delivering openness across the entire stack”

Bringing the discussion to software and AMD’s ROCm platform.

Now on stage, Amit Jain of Luma AI.

Amit is discussing Luma’s advanced models. And rolling a short video comprised of clips made with their generative AI video models.

Luma is working with everyone from individuals to large enterprises. Luma has found that control comes from intelligence, and not just prompting. As well as the need to be able to edit video using AI.

“2026 will be the year of agents”

Giving small teams the power of Hollywood studios through agentic AI for video.

Today 60% of Luma’s inference workloads run on AMD cards.

TCO and inference economy are critical to Luma’s needs. Their AI models require a massive amount of compute/tokens.

Luma is expanding their AMD partnership to the tune of 10x as much as before.

Amit sees video models as still being in their early days. But in time, they want to get to accurate physical simulations. And with that, those models can become the backbone of robotics.

And that’s Luma.

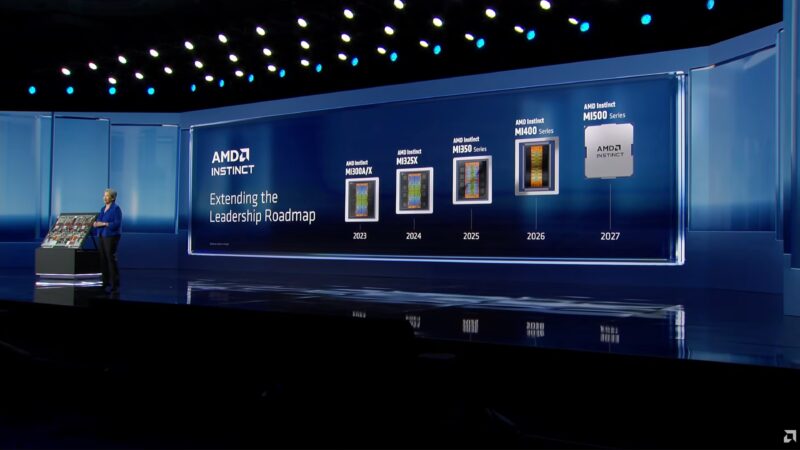

And with AMD knocking on the door of delivering MI400 accelerators, here’s a fresh roadmap out to MI500.

MI500 is based on AMD’s CDNA6 architecture, built on a 2nm process, and paired with HBM4e memory.

And that’s the cloud/enterprises. Now over to PCs.

Rolling a video of various AI-accelerated PC applications, with Lisa narrating from the stage.

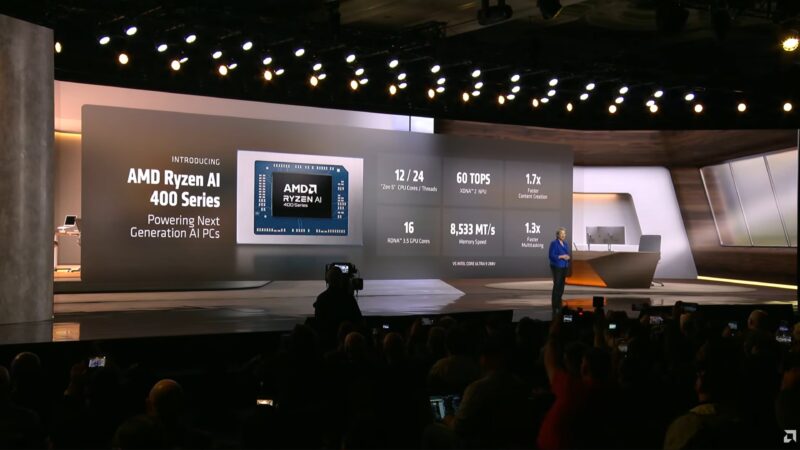

Announcing the Ryzen AI 400 series. For mobile and desktop.

This is a refresh lineup using AMD’s existing Strix Point and Krackan Point silicon used in the Ryzen AI 300 series.

Now on stage, Ramin Hasani of Liquid AI.

“We are not building foundation models, we are building liquid models”

Liquid AI wants to deliver frontier-quality models on local devices.

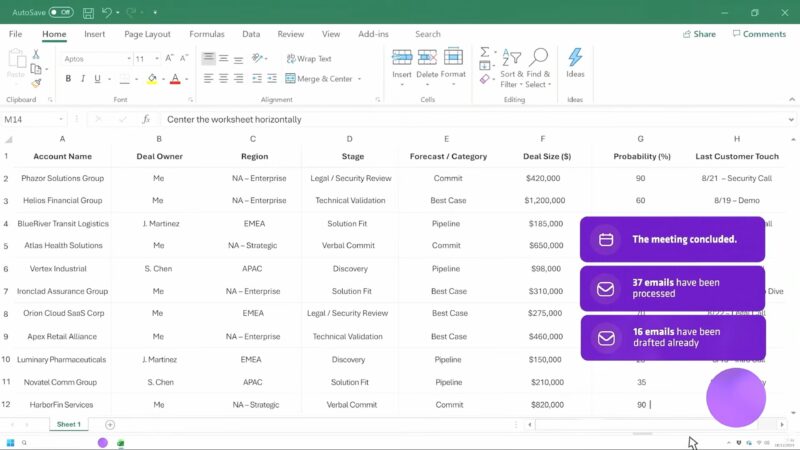

And rolling a video of using LFM to attend a meeting for you.

“This is going to be the year of proactive agents”

Liquid is collaborating with Zoom to bring these kinds of features to their platform.

And that’s Liquid.

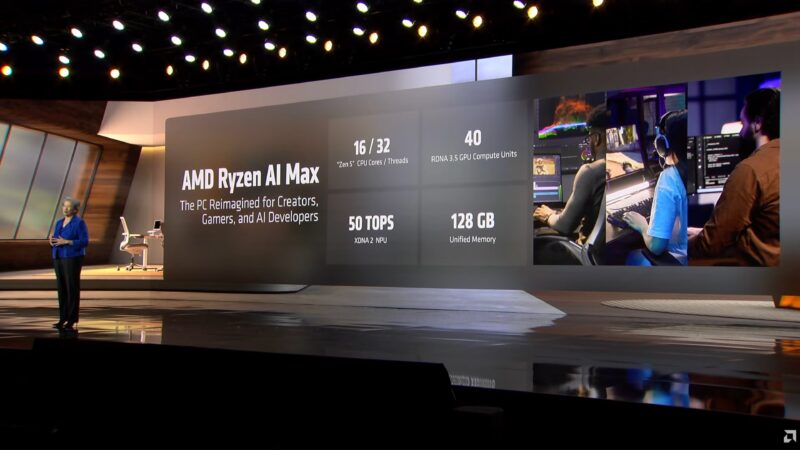

Pivoting over to Ryzen AI Max, aka Strix Halo, AMD’s high-end SoC.

Ryzen AI Max has been very popular with AI developers due to its large local memory capacity.

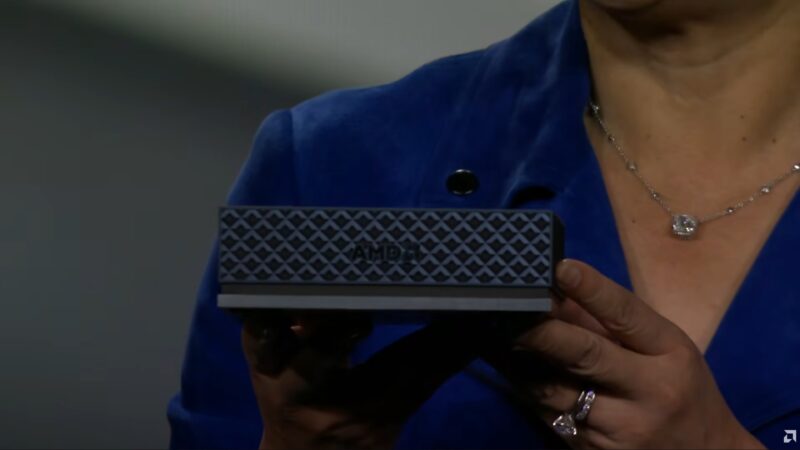

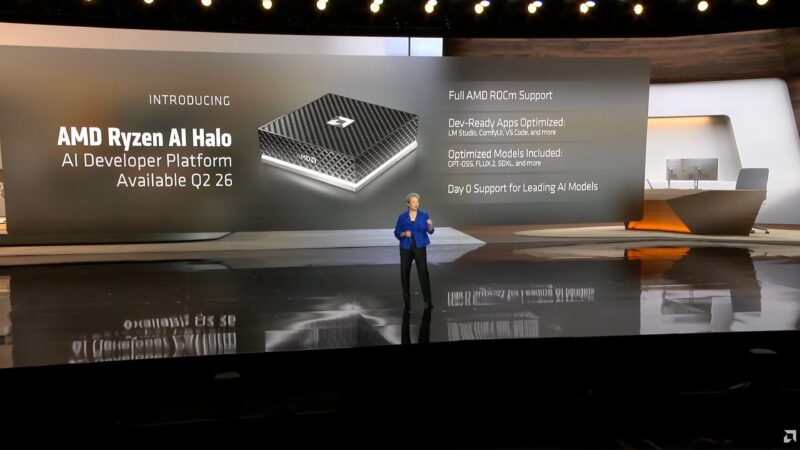

Announcing Ryzen AI Halo, a reference platform for local AI development.

A Ryzen AI Max box from AMD, preloaded with AMD’s ROCm software stack and other tools. A DGX Spark competitor. Available in Q2.

And now over to gaming.

And now on stage, Dr. Fei-Fei Li of World Labs.

Li wants to give AI spatial intelligence.

As for how this is connected to gaming, World Labs wants to use generative AI to scan in a world from images, rather than having to literally scan every inch of an area to map it.

Demoing how World Labs’ AI models were used to make a 3D model of AMD’s offices.

What used to take months of work can be reduced to minutes.

Dr. Li is eager to see platforms like MI400 hit the market, so that they can create even larger worlds. And to have them react quickly enough for an interactive experience.

And that’s World Labs.

Shifting again, this time to health care.

And rolling a video on the subject. How computers are being used to power and improve health care. Genome research, molecular simulation, robotic surgeries, etc.

AMD tech is already at work in health care. It has been for a long time.

This is apparently a personal passion of Lisa Su.

Now for a group chat on health care. Joining Lisa are Sean McClain of Absci, Jacob Thaysen of Illumina, and Ola Engkvist of AstraZeneca.

With today’s computational resources, it becomes possible to engineer biology. Absci wants to have AI cure baldness and endometriosis.

AMD apparently invested in Absci a year ago.

AI isn’t just about productivity, but innovation.

For AstraZeneca, the big focus is on drug discovery and simulation. Finding new drugs and checking to see if they work before they ever make it to lab testing.

As for the AMD connection? The amount of data that modern tech can generate – and the amount of data AI and other tools can analyze – will unlock new techniques and cures in biology and medicine.

And that’s a wrap on the healthcare fireside chat.

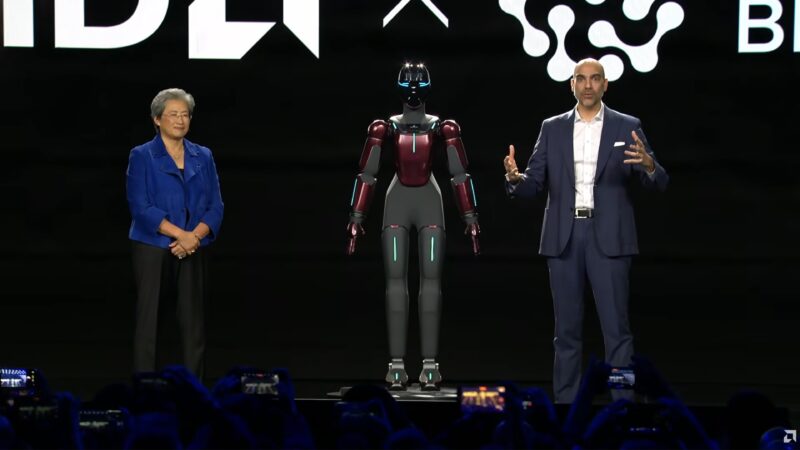

Now on to Physical AI.

“Physical AI is one of the toughest challenges in technology”

This leverages all of AMD’s hardware. CPUs, dedicated accelerators and more.

Now on stage, Daniele Pucci of Generative Bionics.

“Human-centric physical AI”

Generative Bionics’ first generation humanoid robot is ready to be released.

The Gen 1 robot has touch sensors. Touch is a key focus of Generative Bionics’ research and technology.

Manufacturing will start in the second half of 2026.

And that’s Generative Bionics.

One more subject shift, this time to space.

“AMD technology is powering critical missions today”

Now on stage, John Couluris of Blue Origin.

Blue Origin wants to move heavy industry off of the Earth – for the benefit of the Earth.

“Space is the ultimate edge environment”

So Blue Origin needs hardware that can survive in space, making heavy use of AMD’s hardened, high-temperature embedded hardware.

Blue Origin is using AMD’s Versal hardware for their next flight computer. A design that will eventually be used to land astronauts on the moon.

“Every employee at Blue Origin has access to AI tools”

Edge AI will help future explorers optimize future exploration by collecting and processing data for them ahead of time.

And that’s Blue Origin.

Now on to the final chapter of the night: science and supercomputers.

Recapping the various supercomputers that AMD is currently powering, such as Frontier and El Capitan.

Now working with the US DOE on the Genesis Mission program for supercomputers. This will result in the Lux and Discovery supercomputers.

Now on stage, Michael Kratsios, Science Advisor to the President of the United States.

The administration wants to double US R&D productivity within a decade.

The Genesis Mission will be leveraging AI to accomplish this. And they want to bring in even more federal resources.

One of the other goals of the project is to create high quality data sets for further research and simulation.

3 strategic priorities for the administration: remove barriers to innovation and accelerate R&D, get AI infrastructure and energy production right, and finally use AI in education.

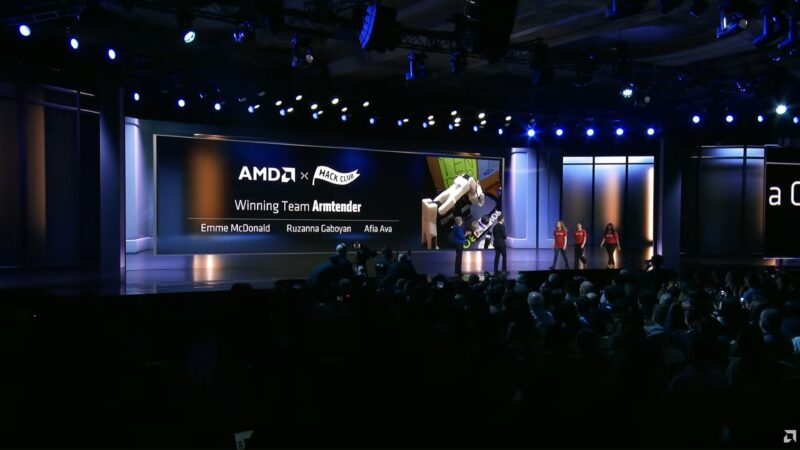

Hackathon winners “Hack Club” built a Ryzen-powered AI barista: Armtender. They did not have any previous AI programming experience. AMD is also giving each of the club members a $20K grant.

And with that, Lisa is ready to wrap things up.

“AI is different”

AMD is going to deliver both high performance hardware and an open ecosystem in order to bring AI everywhere and for everyone.

And that’s a wrap! Thank you for joining us for our CES 2026 live blog coverage.