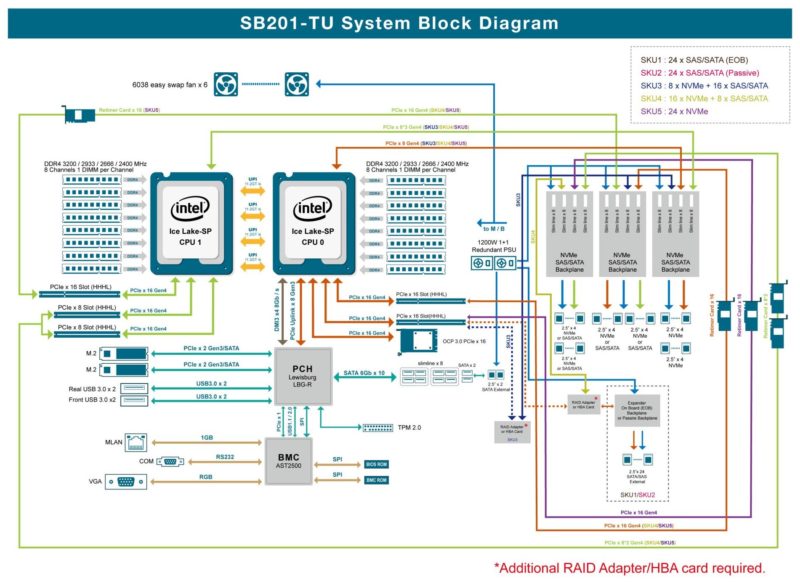

AIC SB201-TU 2U Block Diagram

AIC has an awesome block diagram for this server:

This is perhaps one of the most interesting platforms we have seen. AIC has a number of different storage options and is depicting five different SKU storage configurations on this diagram. We are reviewing SKU5 for those who are wondering.

This system uses 96 PCIe Gen4 lanes just for the front NVMe storage, so the block diagram gets complex quickly.
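To put the 96-lane figure in perspective, here is a rough back-of-the-envelope sketch. It assumes 24 front bays at x4 lanes each (which is what 96 lanes works out to) and uses the PCIe Gen4 signaling rate of 16 GT/s per lane with 128b/130b encoding. The function names are ours for illustration, and the result is a raw link ceiling, not a measured number.

```python
# Rough PCIe Gen4 lane budget for the front NVMe bays.
# Assumptions (not measured): 24 drives, x4 lanes per drive.
PCIE_GEN4_GT_PER_S = 16.0   # transfers per second per lane, in GT/s
ENCODING = 128 / 130        # 128b/130b line encoding overhead


def lane_gbytes_per_s(gt_per_s=PCIE_GEN4_GT_PER_S, encoding=ENCODING):
    """Raw one-direction bandwidth of a single lane in GB/s."""
    return gt_per_s * encoding / 8


def front_storage_budget(drives=24, lanes_per_drive=4):
    """Return (total lanes, raw aggregate GB/s) for the front bays."""
    lanes = drives * lanes_per_drive
    return lanes, lanes * lane_gbytes_per_s()


lanes, raw_gbytes = front_storage_budget()
nic_gbytes = 100 / 8  # a single 100GbE port expressed in GB/s

print(f"front bay lanes: {lanes}")
print(f"raw PCIe ceiling: {raw_gbytes:.1f} GB/s")
print(f"one 100GbE port:  {nic_gbytes:.1f} GB/s")
```

The gap between the raw PCIe ceiling and a single 100GbE port is why the network, not the drives, tends to be the limit in a file server role.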

AIC SB201-TU Performance

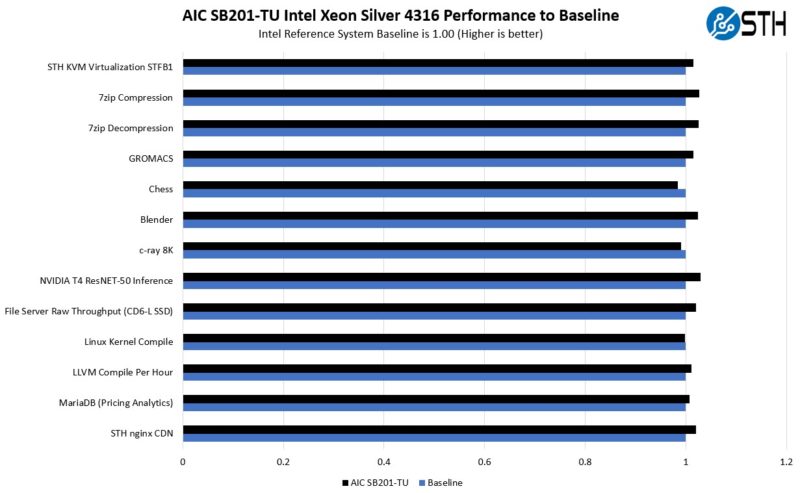

Testing this system was a bit different because we wanted to stay within AIC's 165W TDP CPU recommendation. As such, we only had two Intel Xeon Silver 4316 CPUs to test with. We have done most of our Ice Lake testing with Xeon Platinum and Gold CPUs, so we had a limited set of CPUs to choose from for this system.

There was some variability, but the Intel Xeon Silver 4316 performed reasonably well in this chassis.

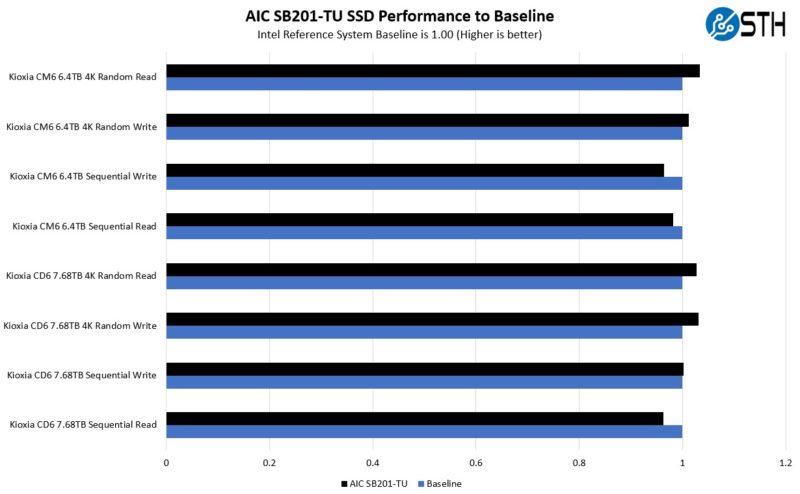

The next question was how SSDs perform in this chassis versus our 3rd Gen Intel reference platform.

Overall, that was about what we would expect. There was a bit of variability, but we were within +/- 5%.

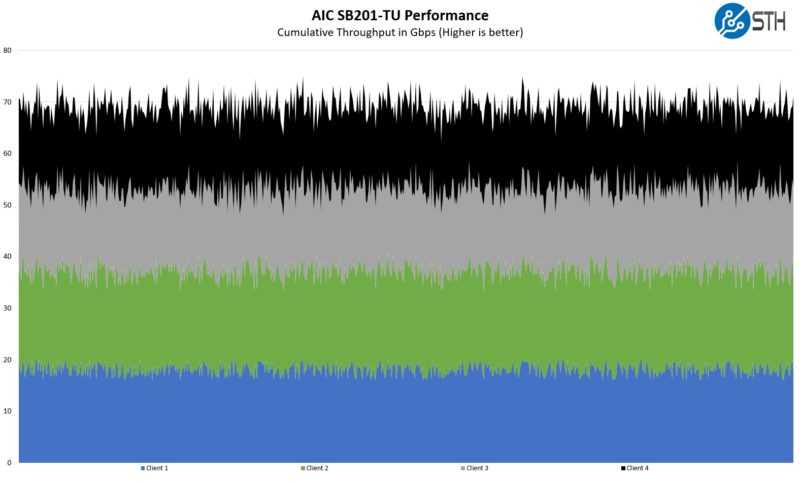

Then came the next look, running over a network in a file server configuration. We had an NVIDIA ConnectX-5 OCP NIC 3.0 card installed in our AIC Ubuntu environment and then had four clients connected via an Arista DCS-7060CX-32S 32x 100GbE switch. We then created drive sets for each client and saw how fast we could pull data from each set.

The clients were all 64-core Milan generation nodes, but we saw something really interesting just running sequential workloads. There were certainly peaks and valleys, but two of the six drive sets being accessed by clients appeared to be notably slower, by ~1-2Gbps. We realized this happened when we were accessing drives attached to CPU1. The OCP NIC 3.0 slot is on CPU0, so that traffic has to cross the UPI link between the sockets, and the Xeon Silver CPU has lower memory speeds as well as a lower UPI speed. Still, there is a lot of tuning left to get the full performance out of this configuration, and one easy step would be to use higher-end 165W TDP CPUs that we did not have.
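For anyone who wants to check this kind of NIC-to-drive locality on their own Linux system, here is a minimal sketch that reads the `numa_node` attribute sysfs exposes for PCI devices. It is not the tooling we used for the review. The helper names and the `enp1s0f0` interface name are hypothetical, and some platforms report `-1` when locality is unknown, which the sketch treats as "no answer".

```python
from pathlib import Path


def numa_node(device_dir):
    """Return the NUMA node sysfs reports for a PCI device, or None."""
    try:
        node = int((Path(device_dir) / "numa_node").read_text().strip())
    except (OSError, ValueError):
        return None
    # The kernel reports -1 when it does not know the locality.
    return node if node >= 0 else None


def remote_drives(nic_iface, sysfs_root="/sys"):
    """Return (nic_node, NVMe controllers on a different node than the NIC)."""
    root = Path(sysfs_root)
    nic_node = numa_node(root / "class" / "net" / nic_iface / "device")
    nvme_dir = root / "class" / "nvme"
    remote = []
    if nic_node is not None and nvme_dir.is_dir():
        for ctrl in sorted(nvme_dir.glob("nvme*")):
            node = numa_node(ctrl / "device")
            if node is not None and node != nic_node:
                remote.append(ctrl.name)
    return nic_node, remote


if __name__ == "__main__":
    # "enp1s0f0" is a placeholder; substitute the real interface name.
    nic_node, remote = remote_drives("enp1s0f0")
    print(f"NIC NUMA node: {nic_node}")
    print(f"NVMe controllers on the other socket: {remote}")
```

Controllers listed as remote are the ones where data has to cross the socket interconnect to reach the NIC, which matches the drive sets that came in slower in our testing.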

Next, let us get to power consumption.

Sure would be nice to see more numbers, less turned into baby food. For instance, where are the absolute GB/s numbers for a single SSD, then scaling up to 24? Or even: since 24 SSDs are profoundly bottlenecked on the network, you might claim that this is an IOPS (metadata) box, but that wasn’t measured.

The whole retimer card – cable complex looks very fragile and expensive, it’s hard to believe this was the best solution they could come up with.

The VGA port placement is a mystery. They use a standard motherboard I/O backplate, so they could have used a cable to place the VGA port there (like low-end low-profile consumer GPUs usually do).

The case looks a little too long for the application. Very interesting server, not sure if it’s in a good way, but at least the parts look somewhat standard.

Reviews are objective but useful

We get 100GBPS read and 75GBPS write speed on a 24-bay all-NVMe server. Ultra-high-throughput server with network connectivity up to 1200GbE.

Would love for you to take a look.