At AMD Financial Analyst Day 2025, the company was very focused on AI, but it also offered a new nugget on its next-generation server CPU. The 2026-era AMD EPYC Venice will, as one might expect, offer more performance and more cores. Now we have a bit more on the new design.

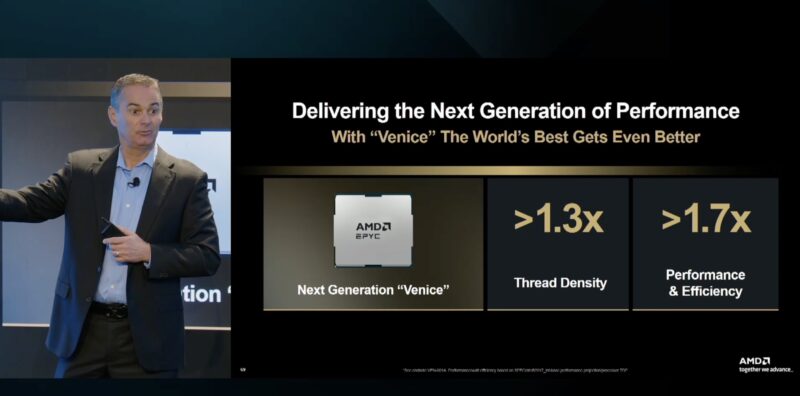

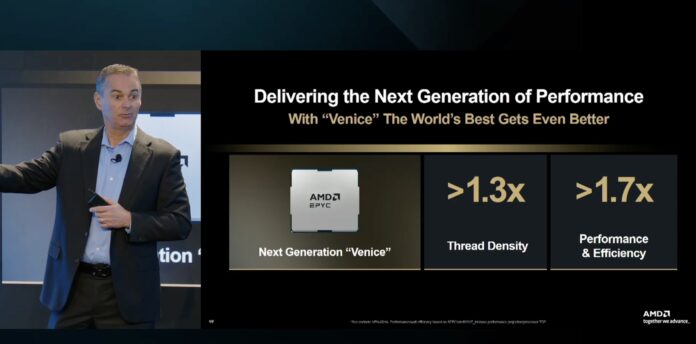

AMD EPYC Venice 2026 with 1.3x Thread Density and 1.7x Performance

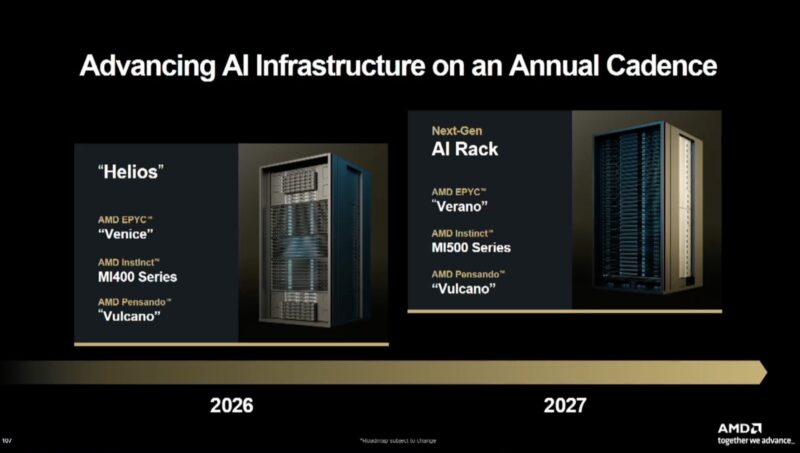

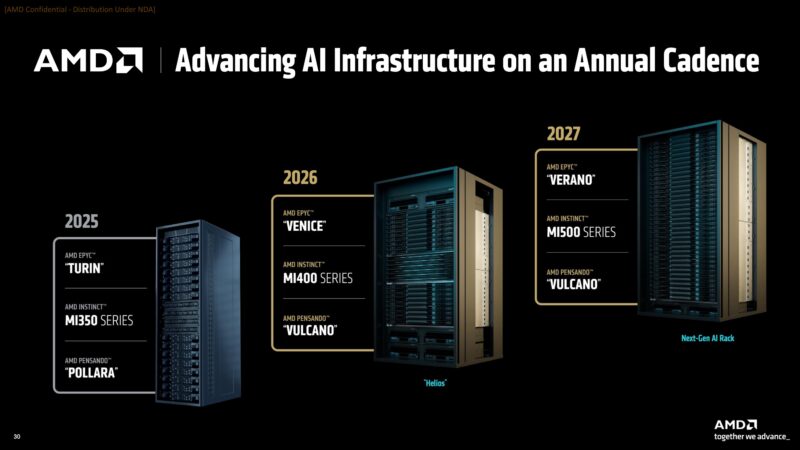

AMD’s big revenue driver in 2026 and 2027 in the data center is set to be AI. The AMD MI450 Helios rack is a major part of this.

The company also showed its MI500 rack, which has previously appeared on its roadmaps for 2027. Notably, components like power shelves are no longer shown at the front of the rack. That makes sense, as NVIDIA is also removing non-essential components from its racks in future generations.

AMD has already said that it is going to be using a next-generation AMD EPYC “Venice” as part of Helios, and then Verano in 2027. Helios is coming to market in Q3 of 2026, so Venice needs to be ready to launch by then, even if it is just for the AI racks.

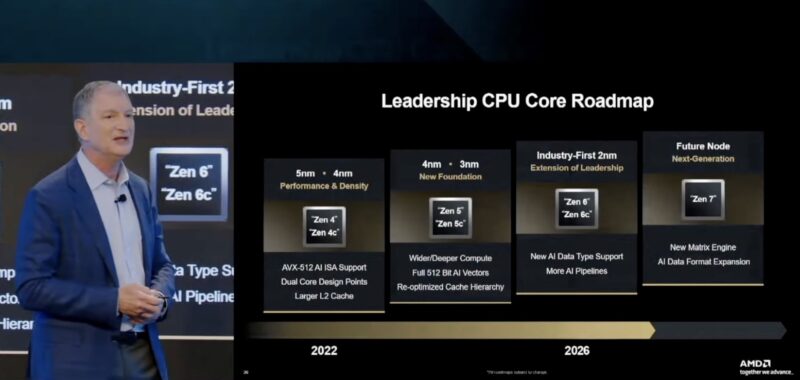

AMD also said that its next-generation Zen 6 and Zen 6c (AMD EPYC Venice will be using this next-generation architecture) are going to be built on TSMC 2nm. AMD is also talking about new AI data type support and more AI pipelines for this generation, and even more AI for 2027’s Zen 7. Notably, Zen 7c is not on this slide.

AMD has shown this before, but it expects over 1.3x thread density (roughly the equivalent of going from 192 cores to 256 cores) and 1.7x performance and efficiency.
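As a quick sanity check on that parenthetical, here is a minimal sketch of the arithmetic. The 192 and 256 core counts come from the paragraph above; the two-threads-per-core figure is our assumption for illustration (it cancels out of the ratio either way), and none of this is an AMD-published calculation.

```python
from fractions import Fraction

# Core counts taken from the article's rough estimate.
current_cores = 192
next_cores = 256

# Assumption for illustration: the same threads per core in both generations.
threads_per_core = 2

current_threads = current_cores * threads_per_core
next_threads = next_cores * threads_per_core

# Exact ratio of thread counts between the two generations.
thread_density_ratio = Fraction(next_threads, current_threads)

print(f"{current_threads} -> {next_threads} threads, "
      f"ratio {float(thread_density_ratio):.2f}x")
```

A 192 to 256 core step works out to a 4/3 ratio, which lines up with AMD's "over 1.3x" wording, provided threads per core stay constant across generations.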

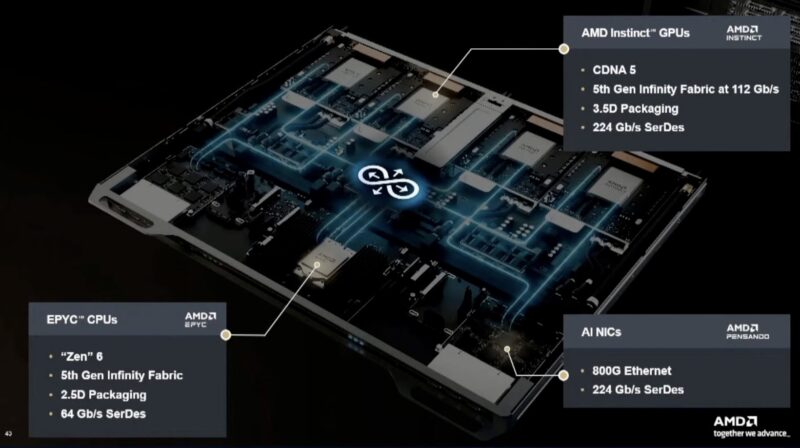

Another interesting nugget is that AMD discussed its SerDes interconnect. 224G SerDes are coming, but this slide seems to be another data point suggesting that 448G will be a copper/optical transition point. We have heard many in the industry say 448G is when co-packaged optics will make a lot of sense, so it is interesting to see this here.

AMD showed Venice in a Helios compute tray. We can see the 224G SerDes on the Instinct GPUs and Pensando NICs. AMD is also saying that we should get 5th Gen Infinity Fabric and expect the EPYC Venice chips to use 2.5D packaging.

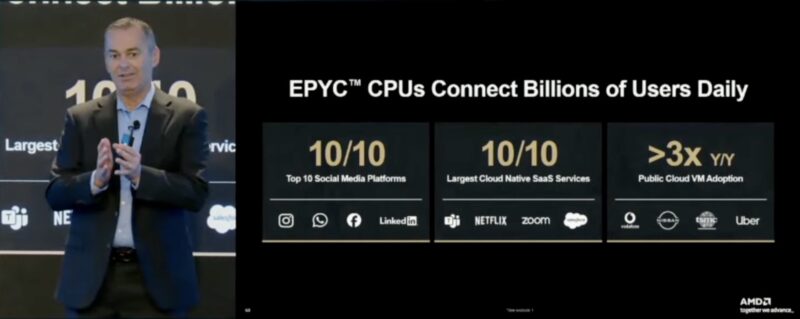

Regarding the AMD EPYC progress to date, AMD stated that it has a target of achieving a 50%+ server CPU market share. Beyond that, here is something striking. AMD has now converted 10 of 10 social media platforms and 10 of 10 large SaaS organizations. While this may seem minor, I can remember a time when some organizations flat out did not want to deploy EPYC. When we showed Facebook Meta AMD EPYC North Dome CPU and Platform Details in 2021, Meta had been a heavily Intel Xeon shop, so it was news that AMD had won at Meta.

Another big growth point was that AMD is seeing 3x year-over-year public cloud adoption by large customers picking EPYC. If you take a second and think about what that means competitively, we know Arm is getting share with hyperscalers’ chips and with NVIDIA servers. If AMD is also growing like this, that is quite impressive and competitively not a great sign for Intel.

Final Words

The server CPU market is not growing as fast as the AI GPU market. At the same time, current designs call for CPUs to be sold alongside GPUs and NICs, so as those GPU clusters come online, we expect to see CPU sales follow. For those who just enjoy technology, we expect AMD EPYC Venice in 2026 with PCIe Gen6, more cores, and more memory bandwidth.

Intel has Clearwater Forest and Diamond Rapids on deck, but we went into why its new CEO’s comments are causing concern at OEMs about those products in the Substack.

Am I wrong here or is it actually correct that they put a capital B instead of small b after 40MB/s, 112MB/s, 224MB/s on the high-speed SerDes interconnect slide? That's a major mistake in an official presentation to investors, if wrong.

I believe you are correct, it should be Gb not GB, but it also appears that the standard notation used by Synopsys is just G, so it would be 224G. I would bet that the marketing person who made the presentation is so used to the PCIe and memory standards that are in GB/s that they either didn’t know or weren’t thinking about the fact that networking is in Gb/s.

Is the SerDes slide explicitly just networking, or is it semi-implicitly also about PCIe? I mean, a PCIe 6.0 x4 slot and a QSFP56 interface aren’t really *that* different once you subtract off all of the power and auto-negotiation features — they’re both 4-lane serial ports that use PAM4 signaling, but one is 64 Gbps per lane and the other is 56 Gbps per lane.

Should we be looking forward to CPO on CPUs in the PCIe 8.0 or 9.0 range?

OT: Is AMD running out of codenames? I remember putting together an AMD Athlon 64 3400 gaming rig in 2005... That CPU had the codename Venice.