AMD has a new AI NIC, dubbed the AMD Pensando Pollara 400 AI NIC. This is a new Ultra Ethernet Consortium-ready NIC at 400GbE speeds. We previously covered the AMD Pensando Pollara 400 UltraEthernet RDMA NIC launch last year, but now we have more details.

This is being done live at Hot Chips, so please excuse typos.

AMD Pollara 400 Details at Hot Chips 2025

Here is the overview of the NIC. This may seem like a competitor to NVIDIA ConnectX-7, but it offers something a bit different.

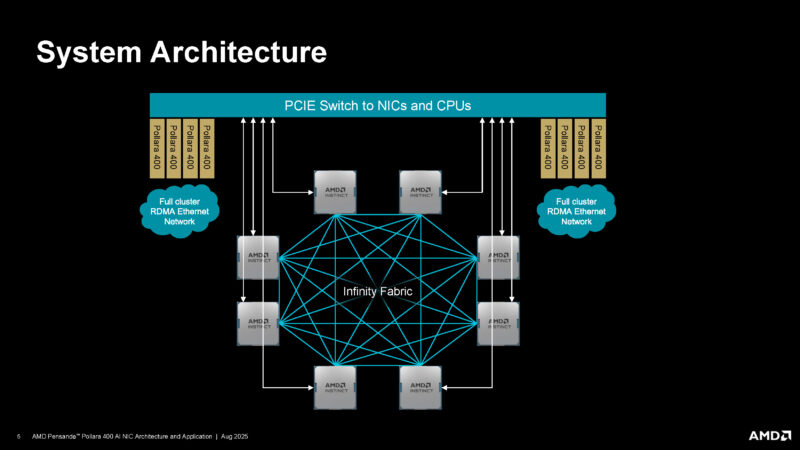

Since this is an AI NIC, we can see what AMD envisions for it. Whereas NVIDIA is pushing PCIe switches out, AMD is embracing them and sees its GPUs and these Pollara 400 NICs in a 1:1 ratio in systems like the Gigabyte G893-ZX1-AAX2 or the ASUS ESC A8A-E12U we recently reviewed (and one that we will review later this week on STH).

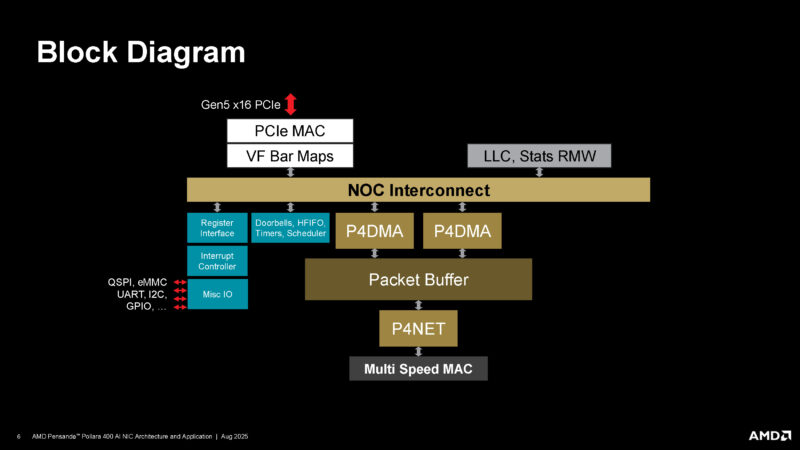

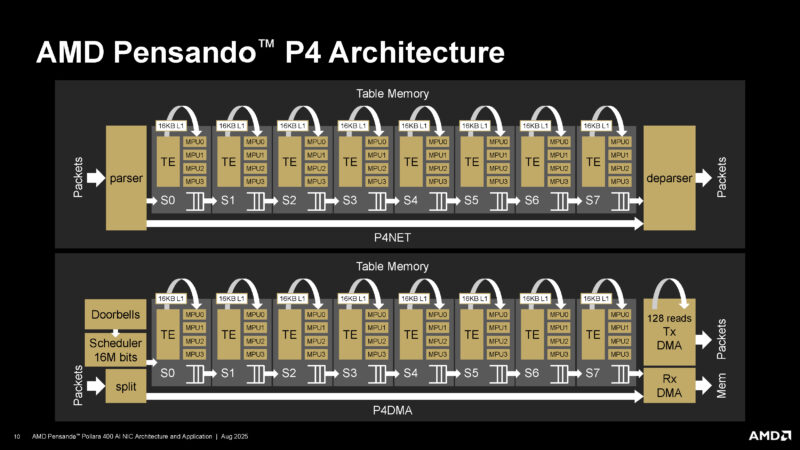

Here is the block diagram for the part. Notably, AMD does not have a PCIe switch and is using P4 for programmability.

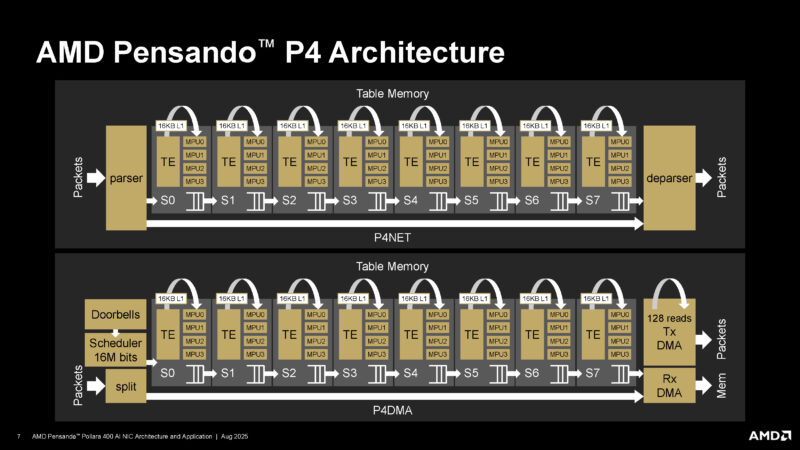

There we go, here is the P4 architecture. P4 is designed to build programmable packet pipelines. We also see this on the Intel IPU line that was designed for Google as an example, so P4 is not an AMD-only feature.

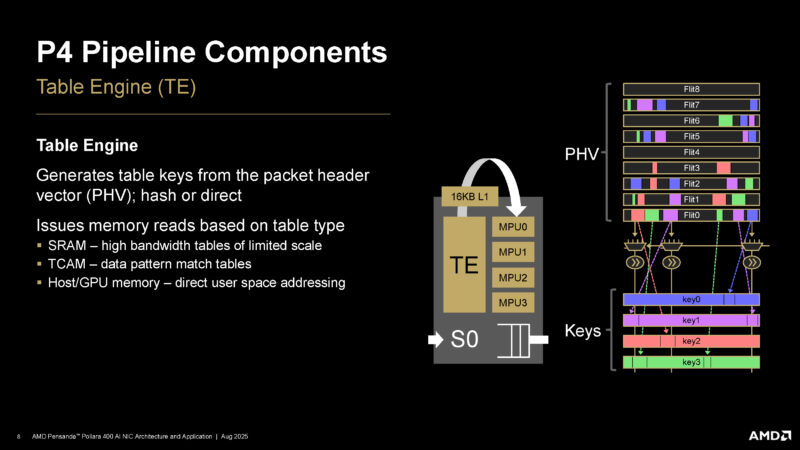

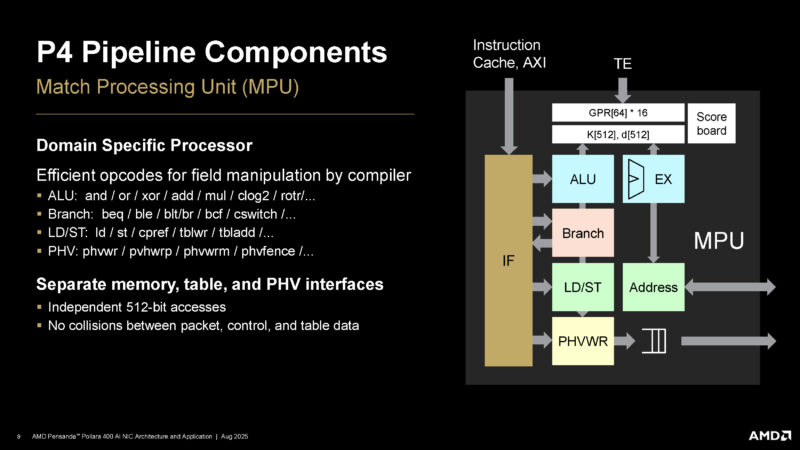

AMD is now going into the P4 pipeline components. The first is the Table Engine or TE. This generates table keys and issues memory reads.

There is also a match processing unit or MPU. Often in networking, you are trying to pick out traffic flows based on patterns that are matched in the packets.
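To make the split between those two roles a bit more concrete, here is a minimal Python sketch of a generic match-action stage. This is not AMD's implementation and it is not P4 code. The `Packet` fields, the `FLOW_TABLE` contents, and the helper names are all hypothetical, chosen only to show a key being built, a table entry being read, and an action being applied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int


# Hypothetical flow table: lookup key -> (action name, action parameter).
FLOW_TABLE = {
    ("10.0.0.1", "10.0.0.2", 4791): ("forward", 3),
    ("10.0.0.9", "10.0.0.2", 4791): ("drop", None),
}


def build_key(pkt):
    """Table-engine-like step: derive the lookup key from header fields."""
    return (pkt.src_ip, pkt.dst_ip, pkt.dst_port)


def lookup(table, key):
    """Table-engine-like step: the 'memory read' that fetches the entry."""
    return table.get(key, ("default", None))


def apply_action(pkt, entry):
    """MPU-like step: act on the packet based on the matched entry."""
    action, param = entry
    if action == "forward":
        return f"forward {pkt.dst_ip} via port {param}"
    if action == "drop":
        return "drop"
    return "send to default path"


def process(pkt, table=FLOW_TABLE):
    """Run one packet through key build, table read, and action."""
    return apply_action(pkt, lookup(table, build_key(pkt)))


print(process(Packet("10.0.0.1", "10.0.0.2", 4791)))
```

In real hardware these steps are pipelined and the tables live in on-chip or external memory, but the key, lookup, action flow is the same basic idea.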

Now we are back at the P4 architecture diagram we saw earlier.

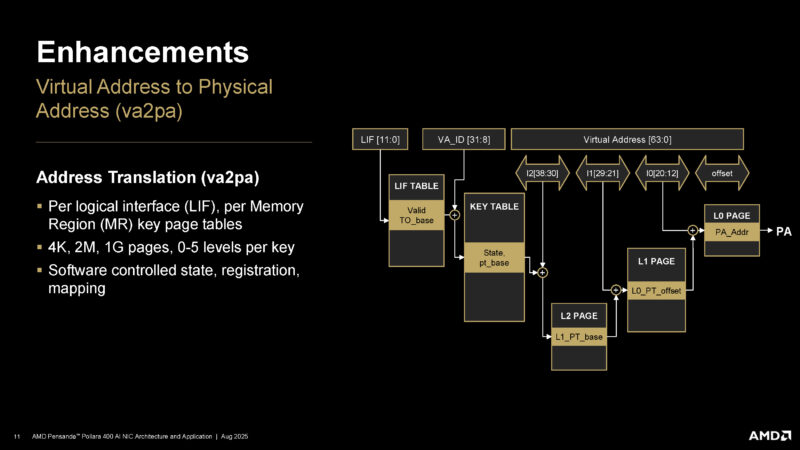

There are enhancements for things like the virtual address to physical address translation.

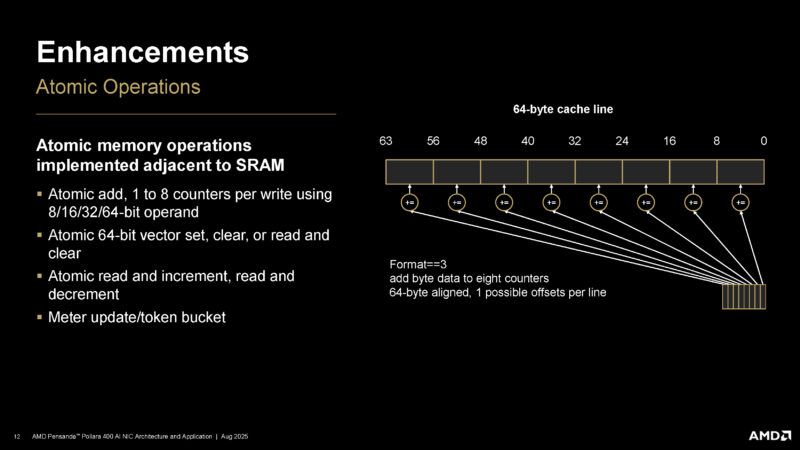

There are also atomic memory operations.

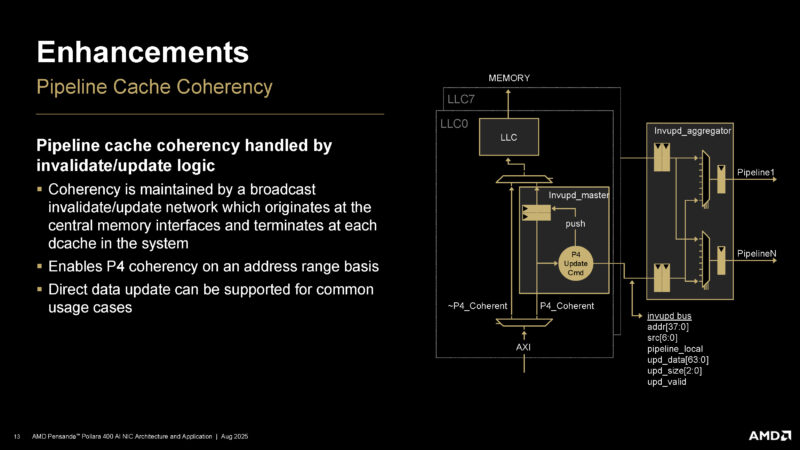

AMD also has pipeline cache coherency.

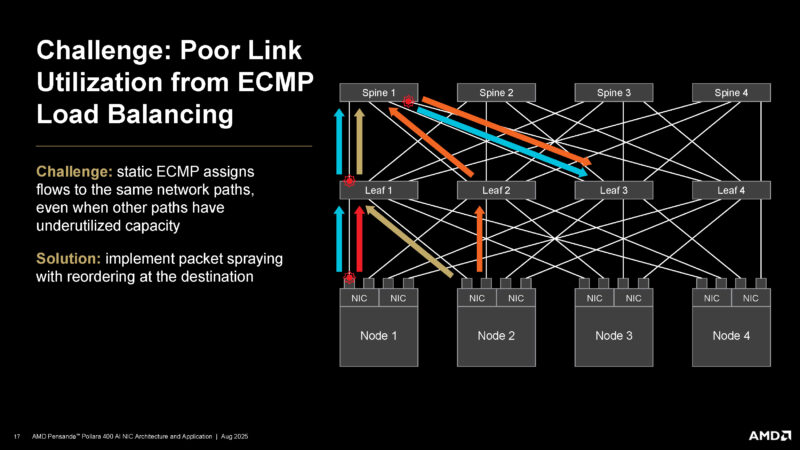

In AI scale-out networks (East-West) you see that there are a number of challenges.

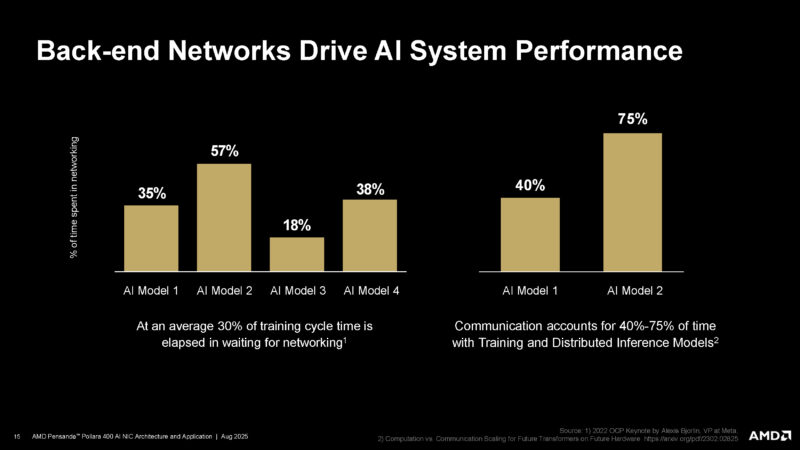

AMD is showing how networking has a direct impact on system performance. That matters when you have a 1:1 NIC-to-GPU ratio, since the network can hold back the much more costly GPUs.

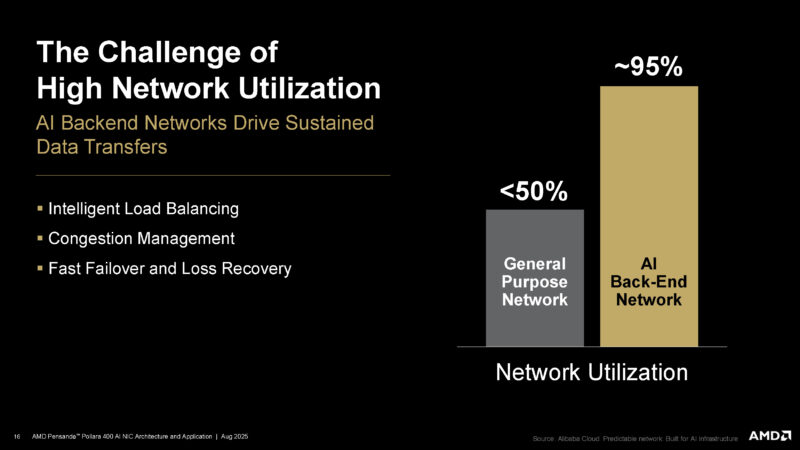

As part of AI networks, there can be high utilization, which is driving the need for faster switches and NICs.

Links that are presenting issues can slow systems. As a result, packet spraying and reordering are becoming more common.

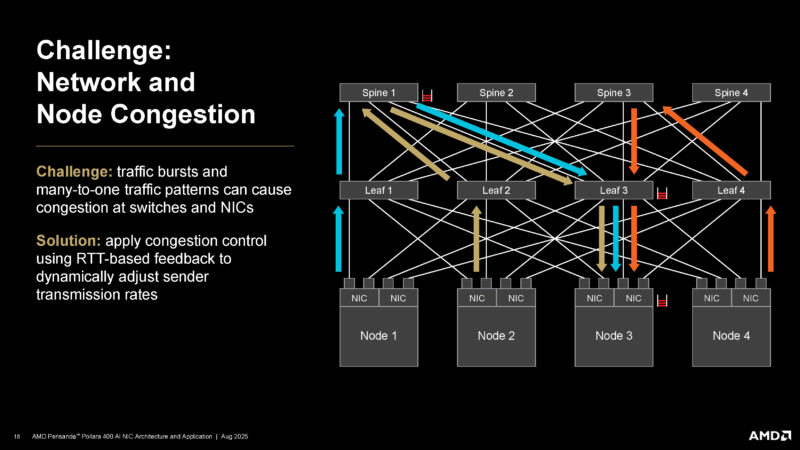
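As a rough illustration of spraying and reordering, here is a small Python sketch. It assumes a simple round-robin spraying policy and a receiver that buffers packets until they can be delivered in sequence. Both `spray` and `ReorderBuffer` are hypothetical names for this example and do not reflect how the Pollara 400 handles out-of-order arrivals.

```python
def spray(packets, num_paths):
    """Assign each packet to a path round-robin (one simple spraying policy)."""
    paths = [[] for _ in range(num_paths)]
    for i, pkt in enumerate(packets):
        paths[i % num_paths].append(pkt)
    return paths


class ReorderBuffer:
    """Deliver payloads in sequence order even if packets arrive out of order."""

    def __init__(self):
        self.next_seq = 0   # next sequence number we are allowed to deliver
        self.pending = {}   # seq -> payload for packets that arrived early

    def receive(self, seq, payload):
        """Accept one packet; return the payloads that are now deliverable."""
        self.pending[seq] = payload
        delivered = []
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered


# Spread four (seq, payload) packets across two paths.
paths = spray([(0, "a"), (1, "b"), (2, "c"), (3, "d")], num_paths=2)

# Suppose the second path is faster, so its packets arrive first.
rb = ReorderBuffer()
for seq, payload in paths[1] + paths[0]:
    print(seq, rb.receive(seq, payload))
```

Spraying keeps any one slow link from stalling a whole flow, at the cost of the receiver having to cope with packets that show up in a different order than they were sent.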

At the network and node level there can be congestion. AMD has a congestion control mechanism.

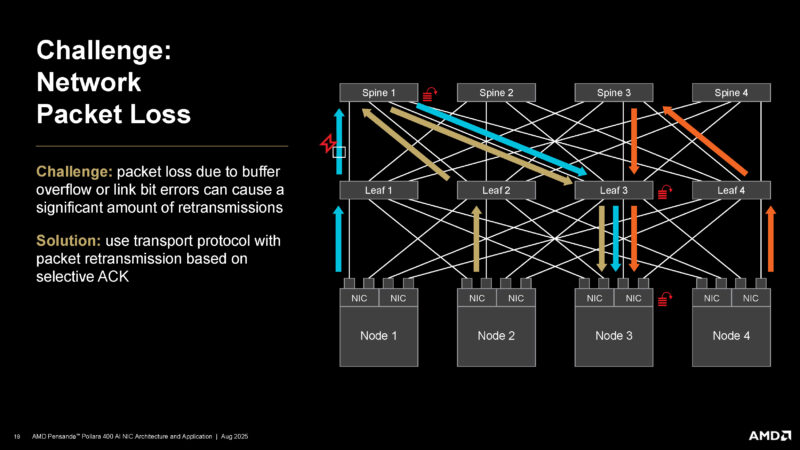

Packet loss can happen in large, complex AI networks, and you do not want that to derail training jobs.

As a result, the Ultra Ethernet Consortium is addressing these challenges using Ethernet. UEC is not just about NICs. It is also a big influence on new switch chips, helping to build an ecosystem around solving these problems.

AMD says that its NIC is UEC ready.

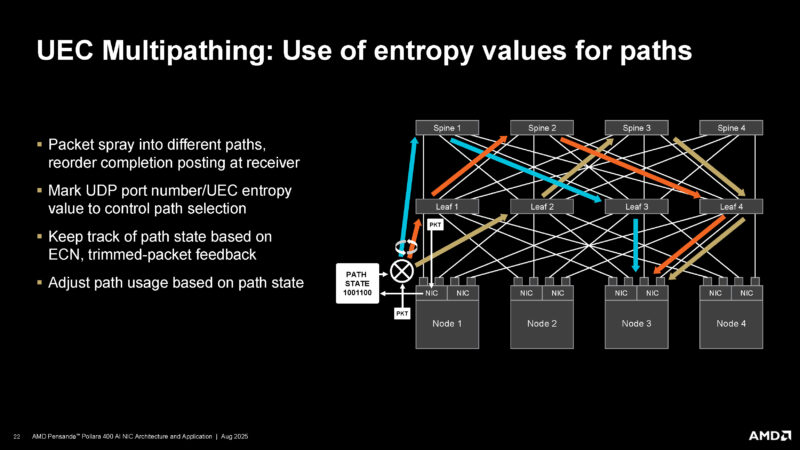

UEC has multipathing to help solve many of the challenges shown earlier.

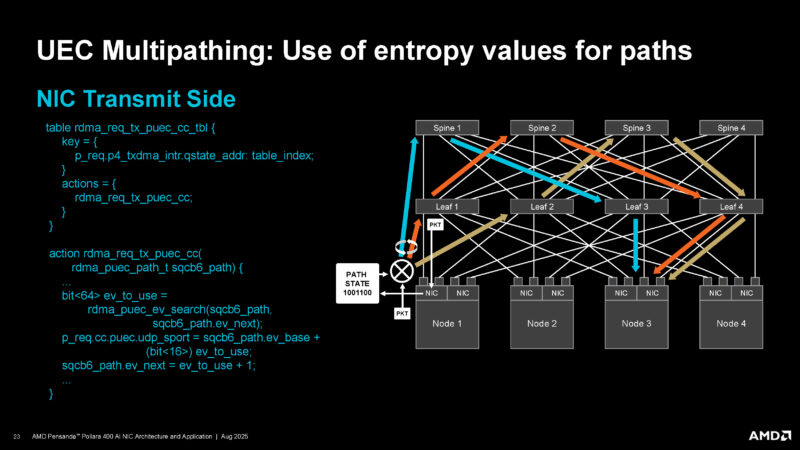

Here is how the entropy values for paths work on the transmit side.

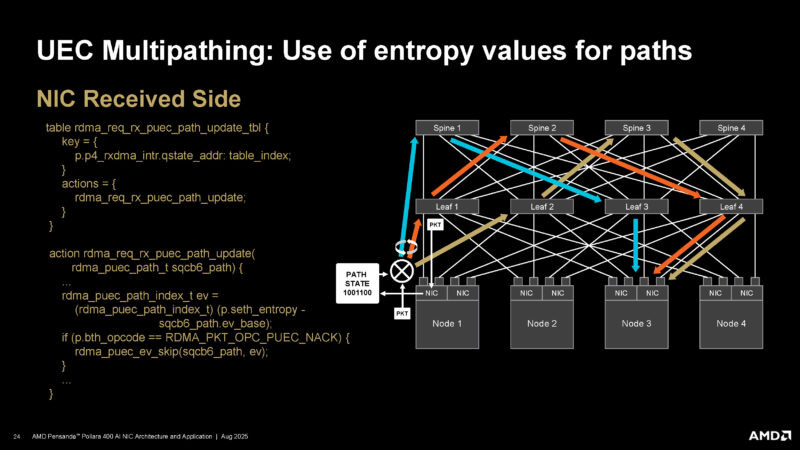
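The general idea behind an entropy value can be sketched in a few lines of Python. Switches commonly pick among equal-cost paths by hashing packet header fields, so if the sender varies one hashed value per packet, packets from the same flow land on different paths. The hash function (CRC32), the key layout, and the `pick_path` helper below are assumptions made for illustration. They are not taken from the UEC specification or from AMD's slides.

```python
import zlib


def pick_path(flow, entropy, num_paths):
    """ECMP-style choice: hash the flow identity plus an entropy value."""
    key = f"{flow}|{entropy}".encode()
    return zlib.crc32(key) % num_paths


def paths_used(flow, entropies, num_paths):
    """Set of distinct paths a flow touches for the given entropy values."""
    return {pick_path(flow, ev, num_paths) for ev in entropies}


flow = ("10.0.0.1", "10.0.0.2")

# A fixed entropy value pins the flow to a single path.
print(paths_used(flow, [7], num_paths=4))

# Varying the entropy value lets the same flow use several paths.
print(paths_used(flow, range(16), num_paths=4))
```

The useful property is that the sender controls path diversity without the switches needing to know anything about the flow beyond the fields they already hash.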

Here is what it looks like on the receive side.

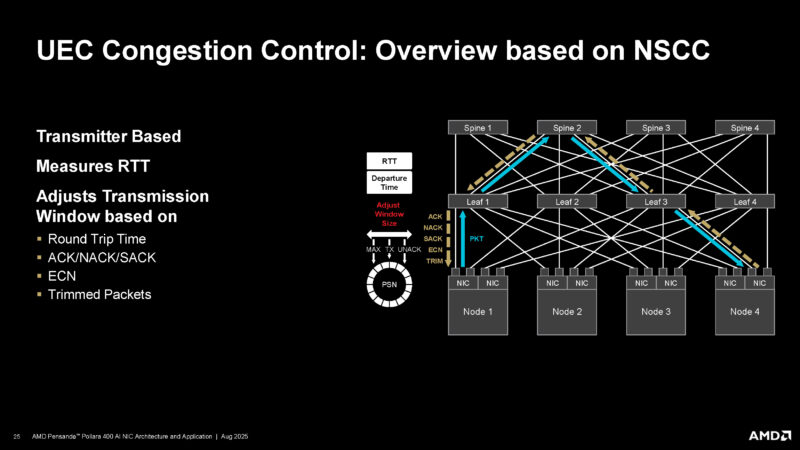

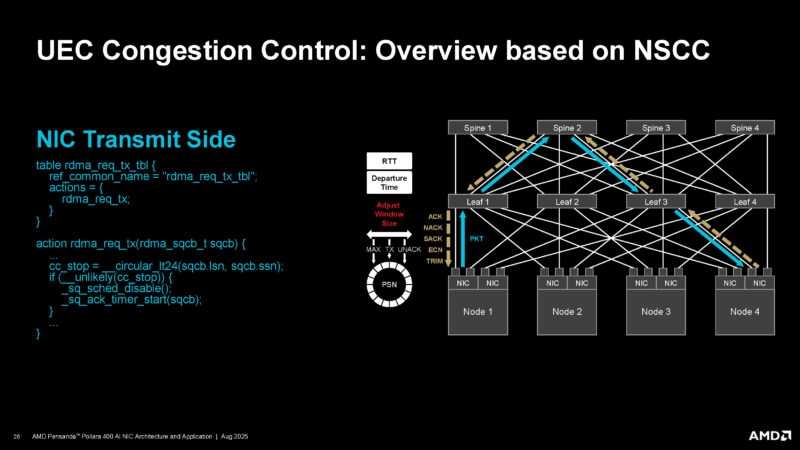

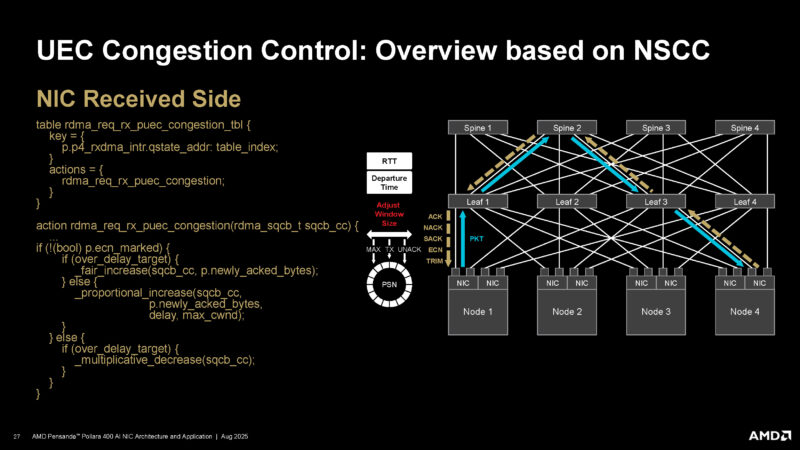

UEC also has congestion control.

Here is the NIC transmit side of the UEC congestion control.

Here is the NIC receive side of the UEC congestion control.

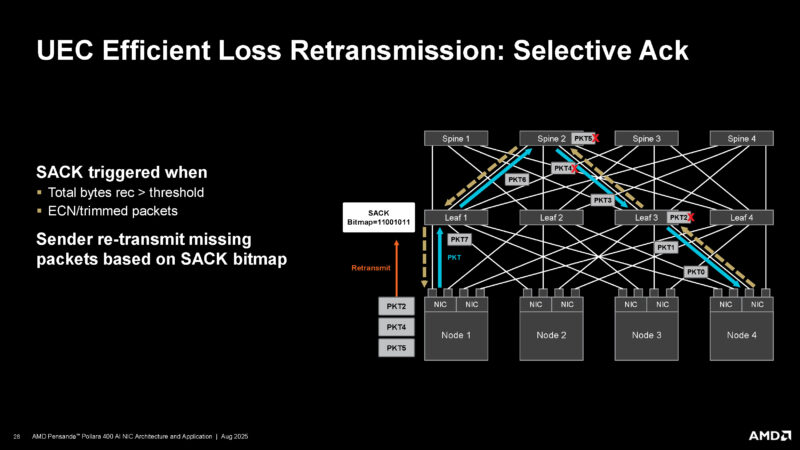

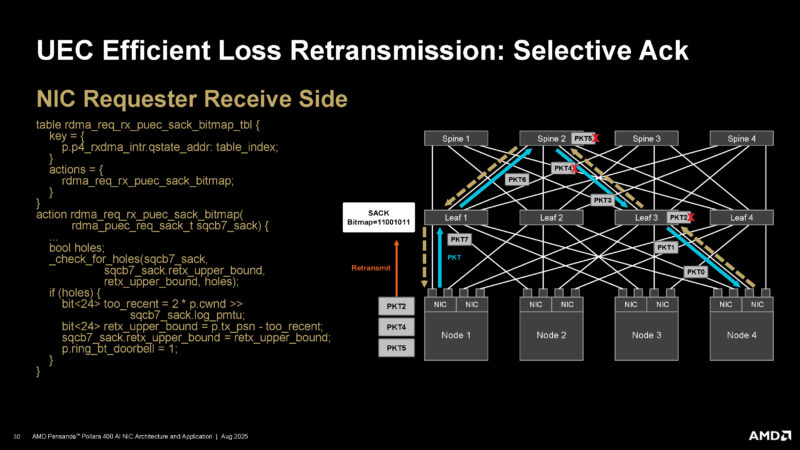

There is also a retransmit feature with selective Ack, or SACK.

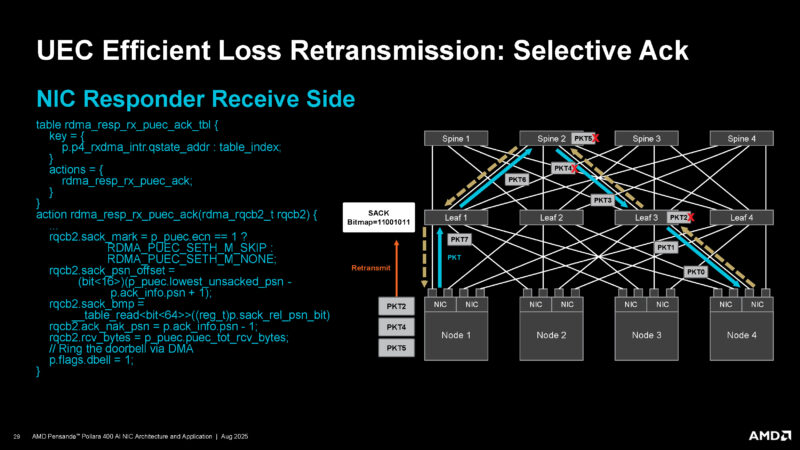
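For a sense of why selective acknowledgment helps, here is a minimal Python sketch. It assumes the receiver reports a bitmap of which sequence numbers in a window have arrived, and the sender resends only the gaps. The bitmap layout and the `build_sack` and `to_retransmit` helpers are hypothetical and are not the UEC wire format.

```python
def build_sack(received, base, window):
    """Receiver side: bitmap of which seqs in [base, base + window) arrived."""
    return [(base + i) in received for i in range(window)]


def to_retransmit(sack_bitmap, base, highest_sent):
    """Sender side: resend only the gaps at or below the highest seq sent."""
    return [
        base + i
        for i, got in enumerate(sack_bitmap)
        if not got and base + i <= highest_sent
    ]


# Packets 0..6 were sent; 2 and 5 were lost in the network.
received = {0, 1, 3, 4, 6}
bitmap = build_sack(received, base=0, window=8)
print(to_retransmit(bitmap, base=0, highest_sent=6))
```

With a go-back-N style scheme, the sender in this example would resend everything from packet 2 onward. With selective acknowledgment it only resends packets 2 and 5.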

Here is the UEC SACK on the NIC receive side:

Here is the requester side:

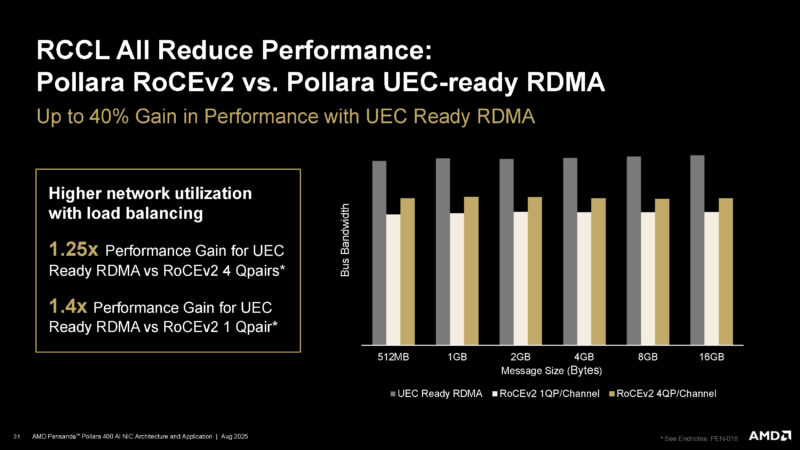

AMD also says that RCCL, its counterpart to NVIDIA NCCL, can perform better with UEC-ready Pollara 400 NICs.

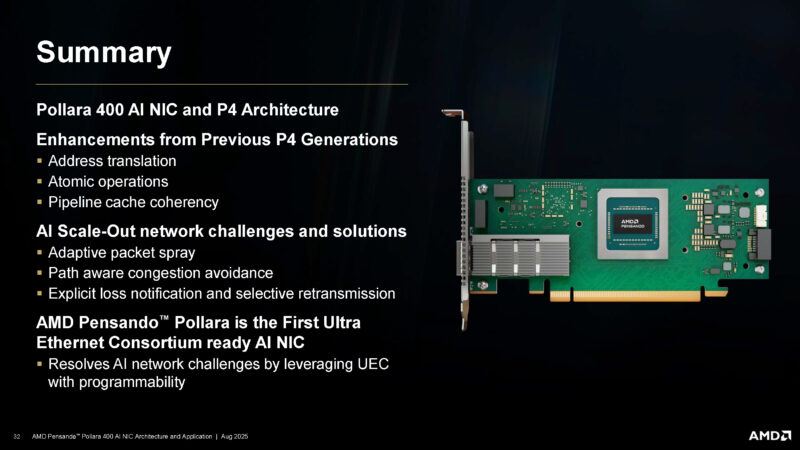

Here is the Pollara 400 AI NIC summary.

The summary seems to be that UEC is good, and Pollara uses P4.

Final Words

Overall, this is an exciting NIC. We have been dealing with a lot of ConnectX-7 400GbE cards recently as we have been building this Keysight CyPerf traffic generator. Hopefully we will be able to use this in our NIC reviews as well.

With all these patches over Ethernet just so it can mimic Infiniband, why not just use the real deal – Infiniband ?

Is this just another ritual dance to please the “Gods of Free Choice” in the “Open Market” show ?

@NotReally Me

So you can have single Ethernet infrastructure, and not dual Ethernet+InfiniBand. Also InfiniBand hardware is basically dominated by Mellanox which was acquired by NVIDIA, so the rest of the industry wants to break away from it.

@Kyle:

IB Hardware market is dominated by Mellanox/nVidia for a good reason – they are kicking everyone’s arse.

They won’t solve that problem by reinventing the wheel.

@NotReally Me

So what do you want them to do? Grovel under NVIDIA’s iron grip? That’s good only for one party – NVIDIA itself. Everyone else loses in this arrangement, including consumers.

They are doing the right thing – a consortium pushing open standards over an already entrenched Ethernet.

@Kyle:

If they chose to rebel, I’d expect them to start with a clean slate and stop trying to patch existing Ethernet.

@NotReally Me

“rebel”… Now I know you’re either a troll or a hardcore NVIDIA shill. In either case it’s not worth my time to argue further with you.

It’s a troll, they don’t know wtf they’re talking about.

Also, is it clear that these are suitable for ai? XD