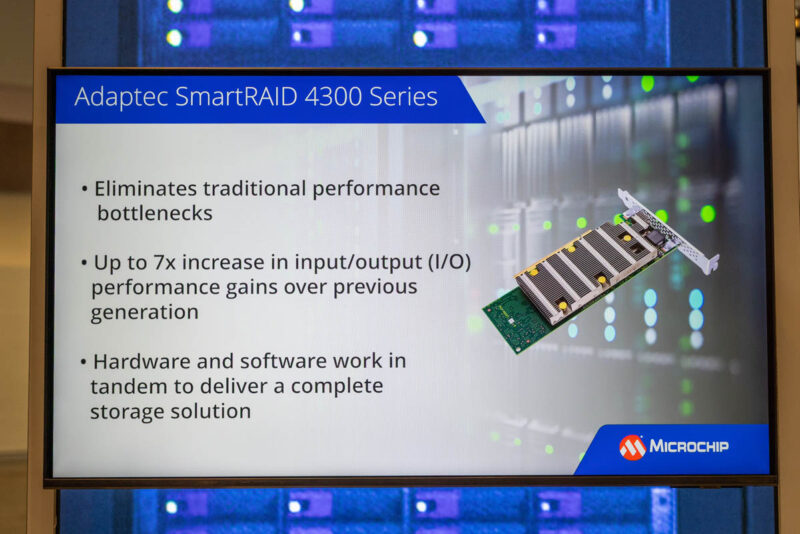

This week at FMS 2025, we saw the Microchip Adaptec SmartRAID 4300. This is a new generation of NVMe RAID controller that takes a different approach: unlike Microchip/Adaptec’s previous-generation RAID controllers, there is no drive connectivity on the device itself.

Microchip Adaptec SmartRAID 4300 A New Era of NVMe RAID Controller Without Drive Connectivity

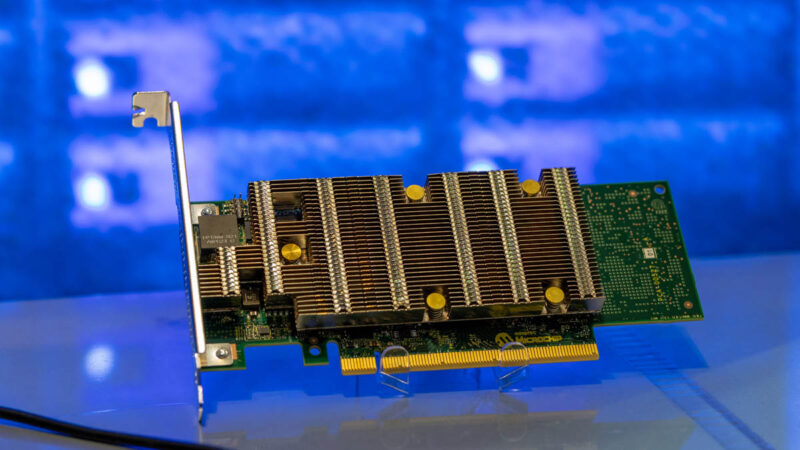

Here is a quick look at the SmartRAID 4300 and you can see a big heatsink, but no MCIO or other connectors for NVMe SSDs.

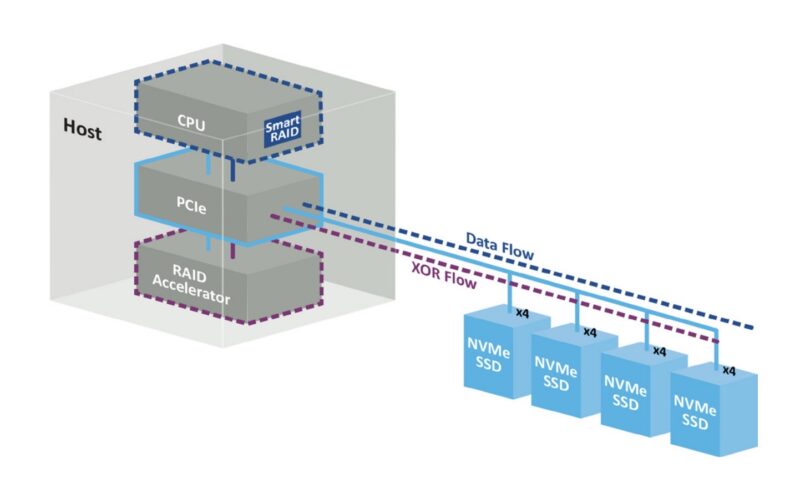

Instead, this is a RAID solution that runs on the host CPU, with the data flowing directly to the SSDs. The XOR parity calculations are done on the Adaptec SmartRAID 4300, and the resulting parity is then distributed to the drives.
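To make the parity step concrete, here is a minimal Python sketch of RAID 5-style XOR parity. It only illustrates the math. The function names `xor_parity` and `rebuild_missing` are our own, and this says nothing about how Microchip actually implements the offload on the card.

```python
from functools import reduce


def xor_parity(blocks):
    """Return the byte-wise XOR of equal-length data blocks."""
    if not blocks:
        raise ValueError("need at least one block")
    length = len(blocks[0])
    if any(len(b) != length for b in blocks):
        raise ValueError("all blocks must be the same length")
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks, parity):
    """Recover one lost block by XOR-ing the survivors with the parity."""
    return xor_parity(list(surviving_blocks) + [parity])


# Hypothetical three-drive stripe plus one parity block.
stripe = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_parity(stripe)

# Pretend the second drive failed and rebuild its block from the rest.
recovered = rebuild_missing([stripe[0], stripe[2]], parity)
assert recovered == stripe[1]
```

The same XOR that produces the parity also rebuilds a lost block, which is why a single accelerator can handle both writes and rebuilds.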

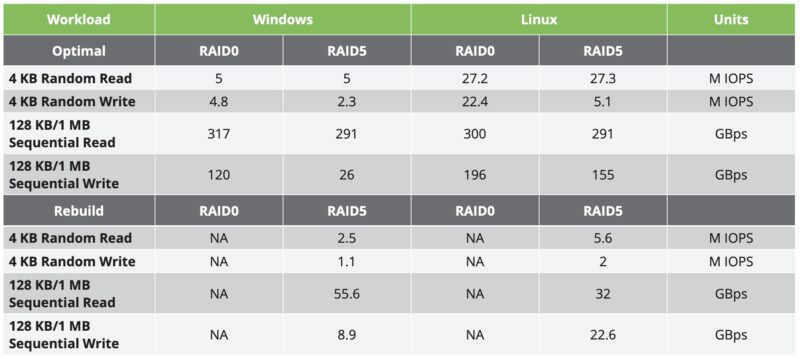

Here is what Microchip is quoting in terms of performance, with only 5M 4K random read IOPS in Windows, but over 27M in Linux. Keep in mind that this is a PCIe Gen4 x16 controller and not a Gen5 device.

The idea is to get the RAID controller out of the direct path to the NVMe SSDs in order to improve performance, rather than being a mandatory bump in the wire. Most traditional NVMe RAID controllers had a PCIe endpoint at the controller, then they had to handle the downstream NVMe devices. Microchip is now removing that stop for all data.
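A rough back-of-the-envelope check shows why staying out of the data path matters. The sketch below assumes 4K means 4096 bytes, takes the 27M IOPS figure quoted above at face value, and estimates link bandwidth from the PCIe Gen4 rate of 16 GT/s per lane with 128b/130b encoding, ignoring packet overhead. The helper name `pcie_bandwidth_gb_s` is hypothetical.

```python
def pcie_bandwidth_gb_s(gt_per_s, lanes):
    """Approximate one-direction link bandwidth in GB/s.

    Assumes 128b/130b encoding (PCIe Gen3 and later) and ignores
    packet and protocol overhead, so real throughput is lower.
    """
    return gt_per_s * lanes * (128 / 130) / 8


GEN4_GT_PER_S = 16.0  # PCIe Gen4 signaling rate per lane

# The card's own host link: PCIe Gen4 x16.
card_link = pcie_bandwidth_gb_s(GEN4_GT_PER_S, 16)

# Data rate implied by the quoted Linux 4K random read number.
iops = 27_000_000
block_bytes = 4096  # assumption: "4K" means 4096 bytes
iops_throughput = iops * block_bytes / 1e9

print(f"Gen4 x16 link:  {card_link:.1f} GB/s")        # 31.5 GB/s
print(f"27M 4K IOPS:    {iops_throughput:.1f} GB/s")  # 110.6 GB/s
print(f"Ratio:          {iops_throughput / card_link:.1f}x")  # 3.5x
```

Under these assumptions, the quoted read rate is several times what a single Gen4 x16 slot could carry, so the data cannot all be passing through the card.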

Final Words

This is not exactly a new approach in the industry. Companies like GRAID, Pliops, and so forth have placed their parity offload accelerators on a PCIe bus, skipping the drive connections on their cards. Each approach is different, but at a high level, this seems to be the way of RAID controllers going forward. Also interesting is that, with this setup, you would ideally want the SmartRAID 4300 on the same CPU as the NVMe SSDs to avoid socket-to-socket links. A benefit, aside from performance, is that with modern CPUs a single RAID controller can cover many SSDs that each get their own PCIe x4 link to the system.

Overall, this was neat for the STH team to see at FMS this week.

Why in hell doesn’t Microchip sell their PCIe switches on a PCIe card with connectors for U.2/U.3 NVMe disks?

WTF’s the use of it if it has an old PCIe 4 interface?

What did the rest of the system from the benchmarks look like? How fast would software RAID in Linux (which has issues, but it’s potentially very fast) run without their card?

The slide says “eliminates traditional performance bottlenecks.” But wait, how old do the systems and CPUs have to be to have those traditional bottlenecks?

While traditional RAID still has uses in datacenters with uninterruptible power, my question is whether this device can accelerate ZFS as well?

Eric Olson,

Since NVMe storage easily saturates PCIe lanes, that puts a limit on how many drives you can attach to a RAID board.

So this is VROC that requires an additional card to work?

Is there a typo in the units in the Workload table? The stated throughput greatly exceeds the 31.5 GBps of even a x16 PCIe 3.0 link.

Also the separate “accelerator” card DOUBLES the PCIe transactions crossing the bus, as PCIe is a one-to-one architecture. First every byte needs to be sent to the extra card, and then sent separately to the drive interface. Doesn’t seem a good way to conserve scarce PCIe lanes, either.

Sorry, quoted PCIe 3.0 when I wanted to quote 4.0. Doesn’t make much difference as 4.0 is only double 3.0, not the many multiples shown in the table. Other performance comments remain valid.

Hey! That’s right, PCIe4 x16 has a bandwidth of 31.51 GB/s and the table claims reads at 300.

Without testing by a third party, how can one choose between snake oil, smoke and mirrors?

My understanding is that the Adaptec card provides a UEFI boot feature; after the computer boots, it does nothing when the array is configured as RAID 0. 300GB/s is possible when the computer has 12-channel interleaved DDR5 and 24 PCIe Gen5 NVMe drives in RAID 0.

The other problem, which hopefully will be rectified with a new card, is explained by Adaptec on their website:

“Microchip’s Adaptec storage adapters offer two encryption capabilities. The first is Controller-Based Encryption (CBE) and the second is Self-Encrypting Drive (SED) support. Controller-Based Encryption (CBE) is a comprehensive encryption solution offered on most Adaptec RAID adapters, but we now also offer SED if your current system is not compatible with an Adaptec® maxCrypto™ CBE-enabled adapter or you need an HBA encryption solution.”

With CBE you can encrypt *regular* (lower cost, unencrypted, non-SED) SSDs and HDDs at no extra cost, potentially saving a thousand dollars per drive. The SmartRAID-3162-8i/e supports RAID levels 0, 1, 5, 6, 10, 50, 60, 1 ADM, and 10 ADM.

Too few RAID levels and the lack of CBE were an oversight.

This should be in a chiplet form factor and placed in the same fabric as the CPU, Cache and Memory Controller.

It appears no one here knows how GRAID works, so saying it’s like GRAID led to a lot of questions and confusion. Please refer to the dataflow picture in the article.

The data written to the NVMe SSDs never goes to the RAID accelerator, only the XOR calculations that determine which bits get written to which drive. Likewise, it cannot encrypt what does not flow through it, and relies on SED drives if you want that.

If data flowed through it, it would be limited to x16 of Gen4, or Gen5 for the next one, which is 1/10th of its actual performance. Same answer for why it doesn’t have PCIe switches on the card: the PCIe link to the CPU would be a massive bottleneck.

I do not doubt its performance, as it matches GRAID and I’ve seen that tested.

No, it does not support ZFS. ZFS is soft RAID; this is halfway there, but targeted only at NVMe drives. This is a niche that not many are targeting: traditional RAID with mind-blowing performance for in-box data.