Today, just as many at STH are starting to celebrate 4th of July Eve, Dell and CoreWeave announced that they have delivered a new NVIDIA GB300 NVL72 rack.

Dell and CoreWeave Show Off First NVIDIA GB300 NVL72 Rack

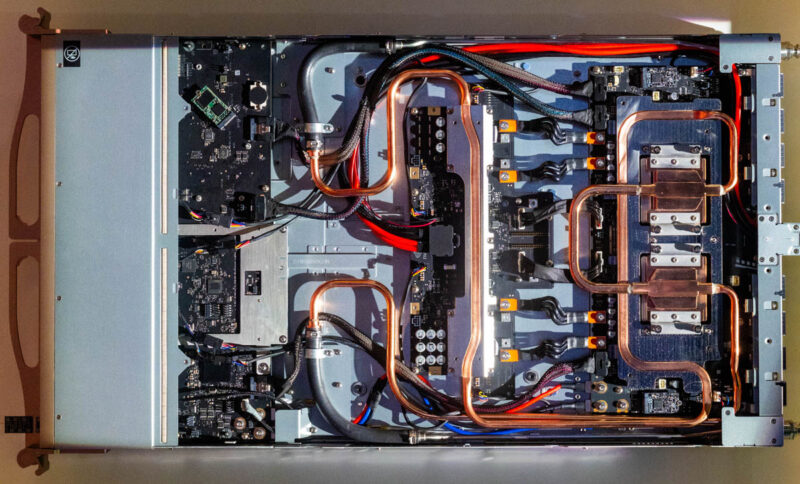

The installation at CoreWeave uses the Dell PowerEdge XE9712, and a Vertiv CDU is visible at the bottom of the rack. The EVO logo on the racks is a nod to Switch data centers, and given the single-rack containment design, these are unlikely to be the highest power density racks that facility can support. Those EVO racks are designed to scale up to approximately 2MW of power each, with 250kW (0.25MW) of that air-cooled and 1.75MW direct-to-chip liquid-cooled.
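As a quick check on the EVO figures above, here is a minimal sketch of the air versus liquid split. The only inputs are the approximate 2MW, 0.25MW, and 1.75MW numbers quoted for the EVO design; nothing else is assumed.

```python
# Cooling split for an EVO rack, using the approximate figures quoted above.
TOTAL_MW = 2.0      # approximate per-rack design ceiling
AIR_MW = 0.25       # air-cooled portion
LIQUID_MW = 1.75    # direct-to-chip liquid-cooled portion

liquid_share = LIQUID_MW / TOTAL_MW   # fraction of the heat load handled by liquid
liquid_to_air = LIQUID_MW / AIR_MW    # liquid capacity per unit of air capacity

print(f"air + liquid: {AIR_MW + LIQUID_MW} MW")        # 2.0 MW
print(f"liquid share: {liquid_share:.1%}")             # 87.5%
print(f"liquid-to-air ratio: {liquid_to_air:.0f}:1")   # 7:1
```

In other words, roughly seven-eighths of the design envelope assumes direct-to-chip liquid cooling.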

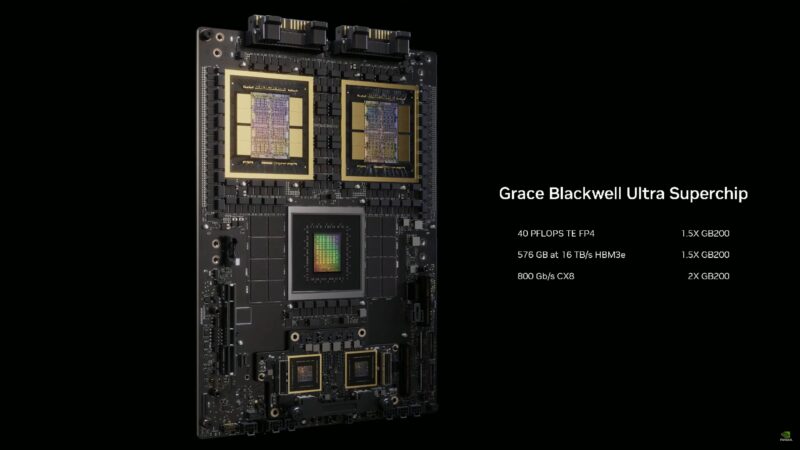

GB300, or Grace Blackwell Ultra, adds more compute and more memory, so the NVL72 platform has roughly 21TB of GPU memory.
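The ~21TB figure can be reproduced from the GPU count. This sketch assumes 288GB of HBM3e per Blackwell Ultra GPU (a per-GPU number not stated in the article) and leaves out the Grace CPUs' own memory.

```python
# Rough HBM total for a GB300 NVL72 rack.
GPUS_PER_RACK = 72        # the "72" in NVL72
HBM_GB_PER_GPU = 288      # assumption: HBM3e capacity per Blackwell Ultra GPU

total_gb = GPUS_PER_RACK * HBM_GB_PER_GPU
total_tb = total_gb / 1000  # decimal terabytes, as vendors usually quote capacity

print(f"{total_gb:,} GB ≈ {total_tb:.1f} TB")  # 20,736 GB ≈ 20.7 TB
```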

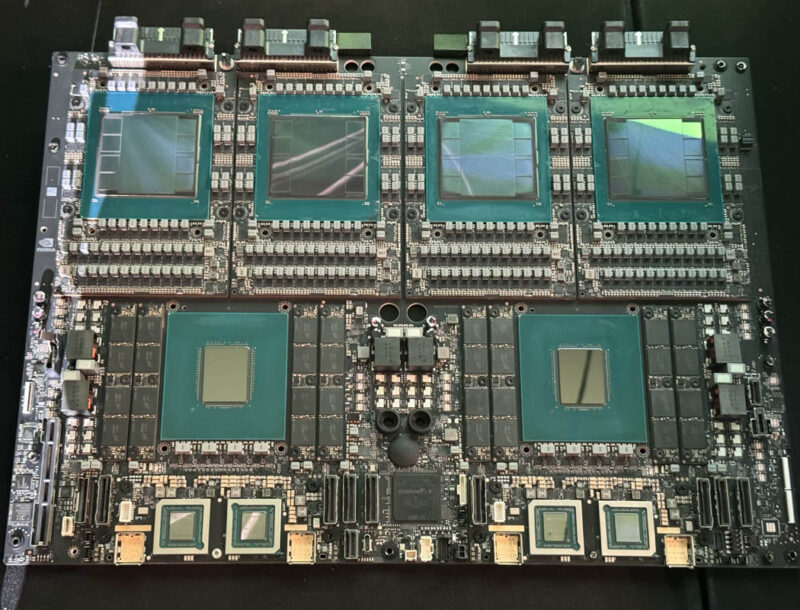

Here is a GB300 NVL72 board we saw back at GTC. The design has been modified somewhat between then and production.

We also saw a GB300 NVL72 NVLink switch tray at GTC 2025.

CoreWeave said it is using ConnectX-8 NICs with NVIDIA Quantum-X networking, so CoreWeave is going with InfiniBand rather than Ethernet for its East-West scale-out network.

Final Words

There are still many GB200 NVL72 installations going on right now, and our sense is that this one is more of an early rack. Still, if you start from something like $3.7M for a GB200 NVL72 rack and add a premium for GB300, which brings more liquid cooling and bigger accelerators, this is one expensive rack.
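To put the ~$3.7M figure in per-GPU terms, here is a small sketch. The GB300 premium is not given anywhere in the article, so `premium_fraction` is a hypothetical knob and the values looped over below are illustrative only, not estimates.

```python
# Per-GPU share of a rack price, using the rough ~$3.7M GB200 NVL72 figure.
RACK_PRICE_USD = 3_700_000   # rough GB200 NVL72 rack price from the article
GPUS_PER_RACK = 72

def rack_price(base_usd: float, premium_fraction: float = 0.0) -> float:
    """Rack price after a percentage uplift; premium_fraction is hypothetical."""
    return base_usd * (1.0 + premium_fraction)

per_gpu = RACK_PRICE_USD / GPUS_PER_RACK
print(f"GB200 NVL72: ${per_gpu:,.0f} per GPU slot")  # $51,389 per GPU slot

# Hypothetical uplifts, purely to show the sensitivity (not real GB300 pricing).
for premium in (0.1, 0.2, 0.3):
    print(f"+{premium:.0%}: ${rack_price(RACK_PRICE_USD, premium):,.0f}")
```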

With that said, if you wanted to learn more about where Dell manufactures these systems, Patrick and the team took a tour Inside the Dell Factory that Builds AI Factories.

Is it just me, or is this getting a bit crazy? 2MW per rack! That is ~40kW per RU. What is the upper limit for power density?
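The ~40kW per RU figure follows from spreading 2MW evenly over the rack height. The height isn't stated; 50RU is what the ~40kW number implies, and 48RU is shown only for comparison.

```python
def kw_per_ru(rack_power_w: float, rack_units: int) -> float:
    """Average kW per rack unit if the load were spread evenly over the rack."""
    return rack_power_w / rack_units / 1000

RACK_POWER_W = 2_000_000  # 2MW per rack
for ru in (48, 50):  # assumed rack heights
    print(f"{ru}RU: {kw_per_ru(RACK_POWER_W, ru):.1f} kW per RU")
# 48RU: 41.7 kW per RU
# 50RU: 40.0 kW per RU
```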

If you are using C19 plugs, that still works out to ~11 C19 cables per RU! Are the PDUs water-cooled? :D
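The ~11 cords figure is consistent with an IEC C19 connector's 16A rating on a 230V circuit. The voltage is an assumption here (North American circuits at 208V would need a few more cords), and the sketch ignores derating.

```python
import math

# How many C19 cords a given load would need.
# Assumption: IEC C19 connector rated 16 A, fed from a 230 V circuit.
C19_AMPS = 16
SUPPLY_VOLTS = 230

def c19_cords_needed(load_w: float, volts: float = SUPPLY_VOLTS, amps: float = C19_AMPS) -> int:
    per_cord_w = volts * amps               # 3,680 W per cord at 230 V / 16 A
    return math.ceil(load_w / per_cord_w)   # round up: a partial cord is still a cord

print(c19_cords_needed(40_000))  # 11
```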

I hope for Dell’s sake that the payment terms are aggressive. CoreWeave is one outfit I would find pretty terrifying to ship millions of dollars’ worth of product to without cash in hand.

It’s an OCP-style rack: three-phase AC power in, then DC busbar distribution to the devices. No C19s here.
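Why a busbar instead of cords: the current at low DC voltage gets very large. This sketch compares per-phase AC current with busbar current for a ~120kW rack. The 415V line-to-line supply, unity power factor, 48V busbar voltage, and zero conversion losses are all assumptions for illustration, not figures from this rack.

```python
import math

# Current draw on three-phase AC input versus a DC busbar.
# Assumptions: 415 V line-to-line AC, unity power factor, 48 V DC bus, no losses.
def three_phase_amps(power_w: float, volts_ll: float, power_factor: float = 1.0) -> float:
    """Per-phase line current for a balanced three-phase load."""
    return power_w / (math.sqrt(3) * volts_ll * power_factor)

def dc_bus_amps(power_w: float, bus_volts: float) -> float:
    """Total current on a DC busbar."""
    return power_w / bus_volts

RACK_W = 120_000  # NVIDIA's ~120kW figure for an NVL72-class rack

print(f"AC per phase: {three_phase_amps(RACK_W, 415):.0f} A")  # 167 A
print(f"48 V busbar:  {dc_bus_amps(RACK_W, 48):.0f} A")       # 2500 A
```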

Still, you can see the power supplies in the picture, and there certainly aren’t 2MW of them. Also, even at 1,400W per chip, 1.75MW is 1,250 GB300s, and that doesn’t remotely fit in 50RU, plus the switch shelves. NVIDIA calls its equivalent rack a 120kW rack, and that’s more believable. I suspect when they say “these racks scale up to X” they don’t literally mean one rack by itself.
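The 1,250-chip figure is straightforward to reproduce, and comparing it with a single NVL72 makes the gap obvious. Inputs are the 1.75MW, 1,400W, 72-GPU, and ~120kW numbers already quoted in this thread.

```python
# How many accelerators would 1.75MW of liquid cooling imply?
LIQUID_W = 1_750_000
WATTS_PER_CHIP = 1_400     # per-chip figure from the comment
NVL72_GPUS = 72
NVL72_RACK_W = 120_000     # NVIDIA's ~120kW rack figure

chips = LIQUID_W / WATTS_PER_CHIP
print(f"{chips:.0f} chips at {WATTS_PER_CHIP} W")                 # 1250 chips at 1400 W
print(f"{chips / NVL72_GPUS:.1f}x the GPUs in one NVL72")         # 17.4x the GPUs in one NVL72
print(f"{NVL72_RACK_W / NVL72_GPUS:.0f} W per GPU slot, all-in")  # 1667 W per GPU slot, all-in
```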

It looks similar to Supermicro’s NVL72 GB200 rack (https://www.supermicro.com/datasheet/datasheet_SuperCluster_GB200_NVL72.pdf), which has 8x 1U 33 kW power shelves, each with 6x 5.5 kW PSUs. Supermicro calls it 133 kW total; since 8 × 33 kW = 264 kW installed, that presumably means N+N redundancy, with half the shelves as spares. So yeah, 120 kW sounds pretty reasonable.
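Working through the shelf numbers shows which redundancy scheme the 133 kW figure implies. The inputs are the 8 shelves, 6 PSUs per shelf, and 5.5 kW per PSU quoted above; the N+2 and N+N cases are illustrative assumptions, since the datasheet's redundancy scheme isn't quoted here.

```python
# Power-shelf arithmetic for the Supermicro GB200 NVL72 rack described above.
SHELVES = 8
PSUS_PER_SHELF = 6
PSU_KW = 5.5

shelf_kw = PSUS_PER_SHELF * PSU_KW   # 33 kW per 1U shelf
installed_kw = SHELVES * shelf_kw    # all shelves combined

def usable_kw(active_shelves: int) -> float:
    """Capacity if only `active_shelves` carry load and the rest are spares."""
    return active_shelves * shelf_kw

print(f"installed: {installed_kw:.0f} kW")        # installed: 264 kW
print(f"N+2 (6 active): {usable_kw(6):.0f} kW")   # N+2 (6 active): 198 kW
print(f"N+N (4 active): {usable_kw(4):.0f} kW")   # N+N (4 active): 132 kW
```

Of the two cases, N+N (132 kW) is the one that lands next to Supermicro's 133 kW figure.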

This is still a *huge* amount of power by any standard, though.

Well, a huge amount of *electrical* power at least. It’s only a ~160 horsepower rack.
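The horsepower quip checks out. This sketch uses the standard mechanical horsepower of about 745.7 W and the 120 kW and 133 kW rack figures from the thread.

```python
# Convert rack power from kilowatts to mechanical horsepower.
WATTS_PER_HP = 745.7  # 1 mechanical (imperial) horsepower ≈ 745.7 W

def kw_to_hp(kw: float) -> float:
    return kw * 1000 / WATTS_PER_HP

for kw in (120, 133):
    print(f"{kw} kW ≈ {kw_to_hp(kw):.0f} hp")
# 120 kW ≈ 161 hp
# 133 kW ≈ 178 hp
```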

From a standard rack with 8x 1U 33kW shelves to a double-wide rack: going from ~120-133kW to 250kW today, with plans to run 2.5MW per rack (10x) in the future, I don’t see why it’s impossible if you are designing a data center and rack system to last into the future…

What’s impossible is the power generation required to feed a data center that tries for this density; it’s literally going to take nuclear. Assume a 100k sq ft data center (small, for the sake of argument) with 50 of these “doublewides”. Starting at 250kW each, that is 12.5MW of IT load, and at normal efficiencies you need roughly another 5MW to cool it, so you need nearby power generation of about 20MW, within reason. Yet 50 GB300 NVL72 racks is only 3,600 GPUs. xAI’s Colossus has 200k GPUs and already draws more power (280MW), just at lower density, and they have had problems getting that power.
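The facility estimate above can be laid out step by step. Every input is a number from the comment (50 racks, 250kW each, ~5MW of cooling, 72 GPUs per rack, and the Colossus figures); the PUE line is just the ratio those numbers imply, and the total ignores lighting, distribution losses, and other overheads.

```python
# Facility power estimate for the hypothetical data center in the comment.
RACKS = 50
RACK_KW = 250          # per "doublewide" rack, starting point
COOLING_MW = 5.0       # the comment's cooling estimate
GPUS_PER_RACK = 72

it_mw = RACKS * RACK_KW / 1000       # IT load in MW
facility_mw = it_mw + COOLING_MW     # IT plus cooling only
pue = facility_mw / it_mw            # implied power usage effectiveness
gpus = RACKS * GPUS_PER_RACK

# xAI Colossus figures quoted in the comment
COLOSSUS_MW = 280
COLOSSUS_GPUS = 200_000

print(f"IT load: {it_mw} MW, facility: {facility_mw} MW, PUE ≈ {pue:.2f}")
# IT load: 12.5 MW, facility: 17.5 MW, PUE ≈ 1.40
print(f"GPUs: {gpus:,}")  # GPUs: 3,600
print(f"Colossus: {COLOSSUS_MW * 1e6 / COLOSSUS_GPUS:.0f} W per GPU, all-in")
# Colossus: 1400 W per GPU, all-in
```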

We are going to run out of water for cooling these, and power generation is going to limit the speed of deployment long before we run out of chips. Realistically, is a 700-1,400W chip really the sweet spot for efficiency? If it pulls 700W to reach, say, 2GHz clocks, I bet you could run it at 1.2GHz at 200W. BUT they are all chasing that brass ring, and with the pace of new NVIDIA chip releases you need to put each one to work as soon as possible in an attempt to be THE AI company; obsolescence within two cycles matters more than power usage.
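The efficiency argument can be made concrete with the comment's own hypothetical operating points (2GHz at 700W versus 1.2GHz at 200W). The sketch assumes throughput scales linearly with clock speed, which real workloads, especially memory-bound ones, often do not.

```python
# Perf-per-watt comparison using the comment's hypothetical operating points.
# Assumption: throughput scales linearly with clock speed.
def perf_per_watt(clock_ghz: float, watts: float) -> float:
    return clock_ghz / watts

fast = perf_per_watt(2.0, 700)   # 2 GHz at 700 W
slow = perf_per_watt(1.2, 200)   # 1.2 GHz at 200 W

chips_for_same_work = 2.0 / 1.2                   # slow chips needed per fast chip
power_for_same_work = chips_for_same_work * 200   # watts to match one fast chip

print(f"efficiency gain: {slow / fast:.1f}x")                    # efficiency gain: 2.1x
print(f"chips needed: {chips_for_same_work:.2f}x")               # chips needed: 1.67x
print(f"power to match: {power_for_same_work:.0f} W vs 700 W")   # power to match: 333 W vs 700 W
```

The trade-off the comment points to shows up directly: matching one fast chip takes about 1.67x as many slower chips, each of which has to be bought and housed.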

Also, what I don’t understand: data center physical size is not really a constraint compared with price, so why chase density so hard? Cable length limitations? (30m is roughly a limit for co-packaged optics, but you can extend that.) At these sizes the data center will be power-limited, not density-limited. Building a large building near power is the easy part.