One of the most popular SAS 6.0Gbps / SATA III controllers for enthusiasts is the IBM M1015. In its native form, it is a very capable host bus adapter (HBA) based on the LSI SAS2008 chip. With its low price on sites such as eBay (see here for an eBay search for the M1015) and an enthusiastic community that has learned to unlock many features, it has quickly become a go-to choice for low-cost SATA III connectivity. This series of articles was written by Pieter Schaar, perhaps best known as the person behind laptopvideo2go.com. He has been a regular contributor in the STH forums and has been detailing what he has learned in threads such as these. I do want to note that these modifications are not endorsed by this site, LSI, IBM or others, and the information herein is purely for educational purposes. If you do encounter problems, you are solely responsible for the consequences.

There are now a few parts to this piece including:

- IBM ServeRAID M1015 Part 1: Getting Started with the LSI 9220-8i

- IBM ServeRAID M1015 Part 2: Performance of the LSI 9220-8i

- IBM ServeRAID M1015 Part 3: SMART Passthrough on the LSI 9220-8i

Performance

The performance results below were obtained with the following setup:

- ASRock P55 Deluxe 3 Motherboard

- Xeon X3470 ES CPU @ 2.93GHz

- 16GB 1333MHz DDR3 RAM

- nVidia Geforce GTX465 (modded to GTX470)

- IBM M1015 SAS/SATA controller

- Windows 7 x64 Ultimate

- LSI9211 Firmware package P11 (v7.21.00.00)

- LSI9211 Driver v2.00.49.00

- LSI9240 Firmware Package v20.10.1.0061

- LSI9240 driver v5.1.112.64

- MegaRAID Storage Manager Software v11.06.00.03.00

- 4x OCZ Solid3 60GB SSD (Firmware v2.15) SATA3 (6Gbps)

- HD Tach v3.0.4.0

- ATTO v2.46

- AS SSD v1.6.4237.30508

- Anvil v1.0.31 beta9

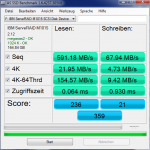

To test the throughput of the M1015 I attached 4x 60GB OCZ Solid3 SSDs (SATA3, 6Gbps), which are rated at 450MB/s write and 500MB/s read. I used them either in a 4-drive RAID0 array for maximum speed, or in a 4-drive RAID5 array for redundancy and to check the parity-generating speed of the LSI SAS2008 ROC.
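As a rough yardstick for the charts, the rated drive speeds above give a best-case ceiling for a 4-drive RAID 0 array. The sketch below is only that arithmetic: it assumes perfectly linear scaling and ignores controller, bus and benchmark overhead. The function names are made up for illustration.

```python
def ideal_raid0_mb_s(drives: int, per_drive_mb_s: float) -> float:
    """Best-case RAID 0 throughput: every drive streams at its rated speed."""
    return drives * per_drive_mb_s


def scaling_efficiency(measured_mb_s: float, drives: int, per_drive_mb_s: float) -> float:
    """Fraction of the ideal figure that a measured result achieves."""
    return measured_mb_s / ideal_raid0_mb_s(drives, per_drive_mb_s)


DRIVES = 4
RATED_READ_MB_S = 500   # rated read speed quoted for the OCZ Solid3
RATED_WRITE_MB_S = 450  # rated write speed quoted for the OCZ Solid3

print(ideal_raid0_mb_s(DRIVES, RATED_READ_MB_S))   # 2000
print(ideal_raid0_mb_s(DRIVES, RATED_WRITE_MB_S))  # 1800
```

Feeding a measured benchmark result into `scaling_efficiency` shows how close an array gets to that ceiling.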

I tested with 4 of the better-known benchmarks to give an overall view of how they perform: HDTach, ATTO, AS SSD, and Anvil. Each does a particular thing well, so with 4 of them I hoped I covered all bases. I also tested the Intel ICH10R controller on the ASRock motherboard, as this can take 6x SATA drives. I did not test the Marvell 9128 also included on the motherboard, as this can only take 2 drives.

Looking at the above, the RAID 0 performance is very respectable. The bottom charts are of the OCZ Solid 3 by itself on the LSI9240, Intel ICH and Marvell 9128 controllers. Adding more drives to the array scaled the performance very nicely, as four SSDs nearly achieve 4x single-drive performance in RAID 0. The Intel P55's ICH10R was very good at the higher queue depths, but the IBM M1015 in LSI 9240 mode was king of overall speed. Intel will be able to overtake these figures once their chipsets support more than 2 devices at 6Gbps; currently the P55 chipset can only run at SATA2 speeds, with a maximum throughput of 3Gbps. Also, the LSI9240 of course needs no CPU help to do its RAID functions, as can be seen in the HDTach charts, whereas Intel relies solely on the CPU to do the calculations.

Another revelation is the very lackluster performance of the Marvell 9128. It is really a joke for it to call itself a SATA3 controller, as the SATA2 Intel controller ran circles around it. LSI9240 RAID 5, on the other hand, did very well in the read tests; obviously the LSI SAS2008 ROC can pull data off all 4 disks at once. BUT when it comes to writing data to the disks, the performance is terrible, to say the least. Looking at the LSI spec sheets for both the LSI2008 and LSI2108, the controller is hardware-assisted software RAID, so I would have thought it would perform better. I can only say AVOID these cards for RAID5, unless you have, say, a video server where only reads are done. Any writing will just frustrate the user no end with its subpar performance. If someone knows why this is, please let me know; I’ve tried all the tricks that I know of.

Ed. The reason for this slow write performance is that the card does not have a battery- or capacitor-backed write cache. See Differences between Hardware RAID, HBAs, and Software RAID for a quick primer on why this happens with certain solutions. The short answer is that without the write cache, the controller gets bogged down trying to write less sequential bits of data across the drives. This is prevalent in RAID 5 and RAID 6 writes.
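To make the RAID 5 write cost concrete, here is a minimal Python sketch of XOR parity on a single stripe. It is an illustration of the general RAID 5 scheme, not of the LSI firmware: the block contents and the helper name `xor_blocks` are made up, and real controllers work on much larger blocks. It shows that changing one data block without a cache to gather a full stripe needs two reads (old data, old parity) and two writes (new data, new parity).

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a 4-drive RAID 5 set: three data blocks plus one parity block.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(*data)  # full-stripe write: parity needs no reads at all

# Partial-stripe write: only block 1 changes (read-modify-write).
new_block = b"ZZZZ"
old_block = data[1]                                         # read 1: old data
old_parity = parity                                         # read 2: old parity
new_parity = xor_blocks(old_parity, old_block, new_block)   # cheap XOR
data[1] = new_block                                         # write 1: new data
parity = new_parity                                         # write 2: new parity

# The shortcut gives the same parity as recomputing over the whole stripe,
# and any single lost block can still be rebuilt from the others.
assert parity == xor_blocks(*data)
assert xor_blocks(data[0], data[2], parity) == data[1]
```

The XOR itself is trivial; the extra reads and writes around it are what a write cache would normally absorb by collecting small writes into full stripes.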

Speaking of which, disk cache was turned off for all tests on the M1015, as this affects write speeds in RAID0 drastically.

From mediocre to rather good, but this made no difference for RAID5 performance. The lack of memory available to the ROC seems to be the issue: the ROC cannot process any data and store it, so I assume parity needs to be handled on the fly.

Next up we will look at the SMART attributes of the IBM ServeRAID M1015 in different modes and compare that to the Intel and Marvell controllers.

You seem to have never heard of the problem with writes to RAID5 where, if a partial stripe is being written, the whole stripe must be read first, then parity computed, then written back. That’s what slows it down, not the parity calculation.

Amateurs shouldn’t write articles.

So how does anyone ever get to write an article as we all started as amateurs ?

I most certainly don’t know all, and I write as I see it.

What you say is true but just as amateur as me ?

The controller can read from the SSDs at 1500+MB/s in RAID5

So the stripe is read in the blink of an eye.

Then the parity is calculated.

Theoretically the controller can write to the SSDs in RAID0 at approx. 1200MB/s (the parity has already been calculated in the previous step)

So if overall we are getting 70MB/s sequential, 8MB/s 4k, the only conclusion I can come to is that the parity calculation = SLOW

I can’t quite work out why the LSI9240 is so slow at parity calculating, whereas an equivalent HighPoint model with no cache does so much better.

I may just complete another part to this series, how many articles does it take to become a novice? ;)
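A back-of-envelope check of the figures in this reply, as a Python sketch. It assumes the read and write phases run strictly one after the other at the sequential rates quoted (1500MB/s and 1200MB/s) with a free parity step, which is a simplification: it ignores per-operation latency and small-transfer behaviour. The function name is made up for illustration.

```python
def serial_read_then_write_mb_s(read_mb_s: float, write_mb_s: float) -> float:
    """Effective rate if each megabyte is first read, then written, with no overlap."""
    seconds_per_mb = 1 / read_mb_s + 1 / write_mb_s
    return 1 / seconds_per_mb


estimate = serial_read_then_write_mb_s(1500, 1200)  # rates quoted in the reply
observed = 70                                       # sequential RAID5 write quoted in the reply

print(round(estimate))                # 667
print(round(estimate / observed, 1))  # 9.5
```

Under these assumptions, raw read and write bandwidth alone would still allow several hundred MB/s, so the gap to the observed 70MB/s has to come from something this simple model leaves out.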

“So how does anyone ever get to write an article as we all started as amateurs ?”

By studying what professionals write? Kinda obvious, duh.

When I have no clue about something, I don’t write my braindump as what could be mistaken as an authoritative source of knowledge, possibly leading people to absorb my half-knowledge and spreading it. It would just dumb down everything down the road. But that’s just me.

I still don’t get what you are on about ?

Is the article just plain wrong, or my style of writing ?

LSI9240 RAID5

Read speed = fast

Write speed = fast

So when real life performance is slow what else is to blame but the speed the Parity is calculated

What would a professional conclude from it ?

I base what I write on what I see. I see lackluster performance, I write about it. It’s not the pretty text you may expect from TomsHardware

I may not ever get to work for a Publishing Firm, but this is a blog ? where you get to have your say on matters ?

I don’t get paid for it, and it might be of use to those looking at a LSI based controller, thinking RAID5 will do well as it can do it.

And of course you are allowed your say on the matter, as it’s a blog after all.

Anyway, you have given me the enthusiasm to write about the IBM M5015 now, and it does do RAID5 very well.

Well, I’ve found the article helpful and informative. Professionals are not always right (look at this banking mess we are in at the moment). Some people are unable to offer constructive “positive” criticism; they were good points but, in my opinion, poorly communicated. Good luck on your quest Pieter :-)

RAID5 How long time 4k check?

im 9750 4i user 4k check time very slow…

@Person and @ Pieter

It is painfully obvious the pair of you are “Gurus” and as such, you spend your days Trolling the web looking (not) to add beneficial feedback but instead to show your immaturity and lack of knowledge (and experience).

Don’t misunderstand, you have a partially valid point about potential performance issues; however, your (amateur) feedback is also only one of many potential causes for performance issues during RW IO operations. There are many factors, with the author’s (and yours) being two of them. It is extremely unlikely (impossible) to identify, list and discuss all of the potential factors in such a very short article.

For reference I will add two more. Read Modify Write latencies, Mixed Storage, Sequential Vs Random, Cluster Sizes, Native Sector Size(s) / Mixed Sector Sizes, Buffered Vs Unbuffered IO operations, Write-Back Vs Write-Through, Command Queue / Queue Depth, Cache Size, Drives & FW, Operating System Platform, OS Bugs, and the list goes on almost forever, etc. etc. etc..

In short, the author has written a good article but, as with all articles, it is not intended to be the End-All / Be-All definitive reference. You must (Read and Study More) to gain additional understanding. Thank you to the Author for this article (and good luck to “Person and Pieter” in their life).