Micron 9400 Pro 30.72TB Performance

Our first test was to see sequential transfer rates and 4K random IOPS performance for the Micron 9400 Pro. Please excuse the smaller-than-normal comparison set. In the next section, you will see why we have a reduced set. The main reason is that we swapped to a multi-architectural test lab. We actually tested these in 17 different processor architectures spanning PCIe Gen4 and Gen5. Still, we wanted to take a look at the performance of the drives.

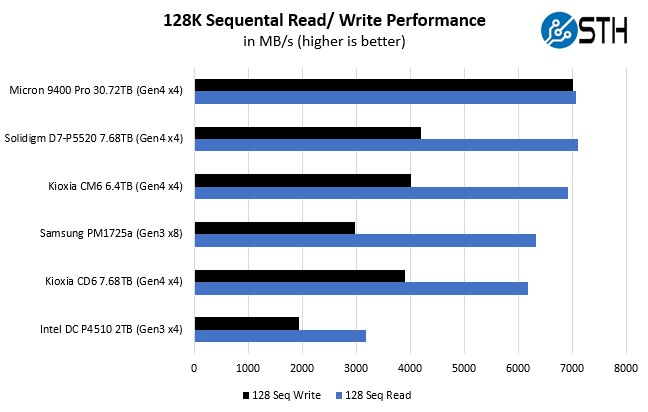

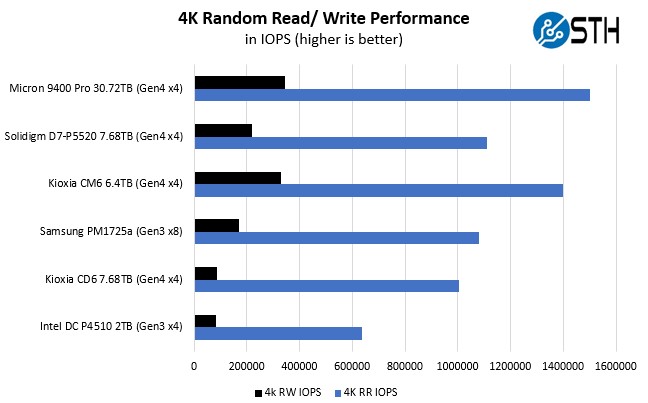

Overall, the sequential and 4K random read/write numbers were about what we would expect from a modern NVMe SSD and close to the rated speeds.

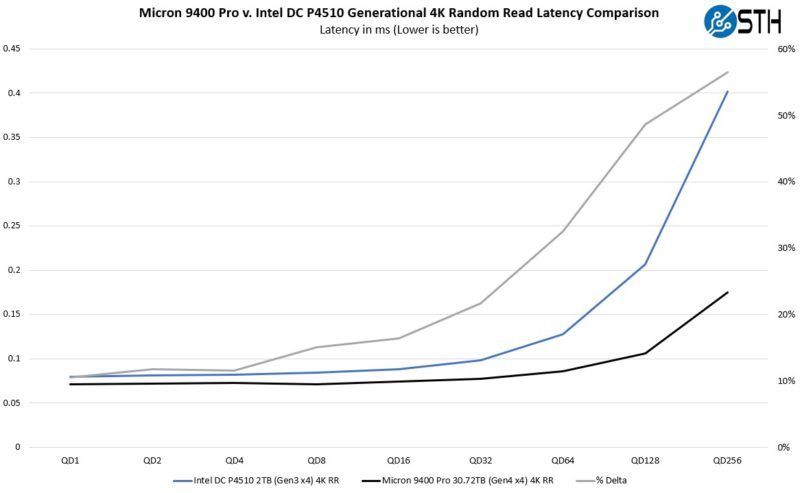

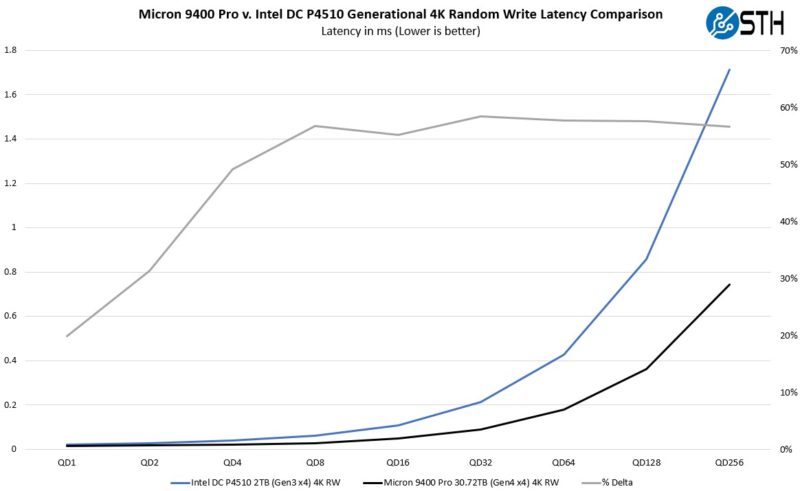

To put that into perspective, given our theme of modern drives like the Micron 9400 Pro going into modern server platforms that target a 2:1 to 4:1 consolidation ratio, here is the Intel DC P4510, a popular, later drive of the 2018-2020 era, compared to the Micron 9400 Pro:

When we look at read latency, we see 10-20% lower latency at lower queue depths, scaling into the 50-60% range at the higher queue depths. A 50% improvement in latency over the previous generation means the latency is half. That is an enormous gain.

Looking at the write side of the equation, the performance is even better, with 50% lower latency even at QD4. That is absolutely stunning. This is the reason we put the percentage delta in these charts: the QD4 improvement may seem small because the much higher QD256 latency on the older drive skews the chart’s scale.
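As a rough illustration of why the charts carry a percentage delta, the sketch below computes both the absolute and the relative latency change at two queue depths. The latency values are hypothetical placeholders chosen to mirror the shape described above, not measurements from our testing, and the function names are our own.

```python
def latency_reduction_pct(old_us: float, new_us: float) -> float:
    """Percent reduction in latency when moving from old_us to new_us."""
    if old_us <= 0:
        raise ValueError("old_us must be positive")
    return (old_us - new_us) / old_us * 100.0


def remaining_fraction(reduction_pct: float) -> float:
    """Fraction of the old latency that remains after a percent reduction."""
    return 1.0 - reduction_pct / 100.0


# Hypothetical (old, new) latencies in microseconds, keyed by queue depth.
# These are illustrative placeholders, NOT measured results.
samples = {4: (100.0, 50.0), 256: (2000.0, 900.0)}

for qd, (old_us, new_us) in samples.items():
    absolute = old_us - new_us
    percent = latency_reduction_pct(old_us, new_us)
    print(f"QD{qd}: {absolute:.0f} us lower, {percent:.0f}% reduction")
```

With these placeholder numbers, the absolute gap at QD256 is more than twenty times the gap at QD4, so a linear chart axis is dominated by the high queue depth bars even though the percentage reductions are similar.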

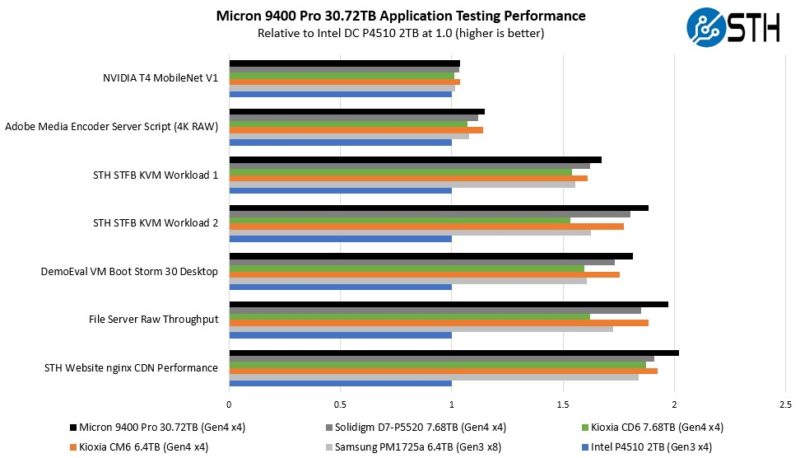

Micron 9400 Pro 30.72TB Application Performance Comparison

For our application performance testing, we are still using AMD EPYC. We have all of these tests working on x86, but we do not have all of them working on Arm and POWER9 yet. The Micron 9400 Pro performed very well, even taking top honors in one test.

As you can see, there is a lot of variability here in terms of how much impact the Micron 9400 Pro has on application performance. Let us go through and discuss the performance drivers.

On the NVIDIA T4 MobileNet V1 script, we see very little performance impact, but we see some. The key here is that the performance of the NVIDIA T4 mostly limits us, and storage is not the bottleneck. Here we can see a benefit to the newer drives in terms of performance, but it is not huge. That is part of the overall story. Most reviews of storage products are focused mostly on lines, and it may be exciting to see sequential throughput double from PCIe Gen3 to PCIe Gen4, but in many real workloads, the stress on a system is not solely in the storage.

Likewise, our Adobe Media Encoder script times the copy to the drive, then the transcoding of the video file, followed by the transfer off of the drive. Here, we have a bigger impact because we have some larger sequential reads/writes involved, but the primary performance driver is still the encoding speed. The key takeaway from these tests is that if you are mostly compute-limited but still need to go to storage for some parts of a workflow, the SSD can make a difference in the end-to-end workflow.

On the KVM virtualization testing, we see heavier reliance upon storage. The first KVM virtualization Workload 1 is more CPU limited than Workload 2 or the VM Boot Storm workload, so we see strong performance, albeit not as much as the other two. These are KVM virtualization-based workloads where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker. We know, based on our performance profiling, that Workload 2, due to the databases being used, actually scales better with fast storage and Optane PMem. At the same time, if the dataset is larger, PMem does not have the capacity to scale, and it is being discontinued as a technology. This profiling is also why we use Workload 1 in our CPU reviews. Micron’s blistering random IOPS performance is really helping here. On Workload 2, and the VM Boot Storm, we see the performance of the new drives really shine. Micron’s story around the 9400 Pro is not just that it is a fast SSD but that it enables the consolidation of servers in terms of performance and capacity.

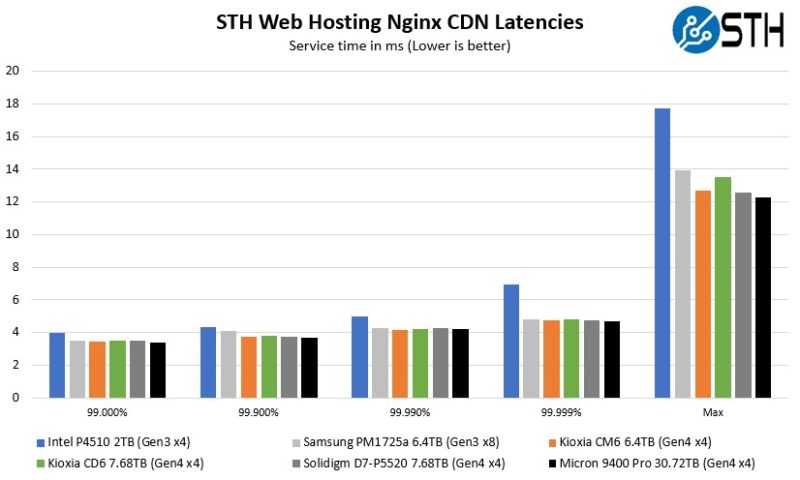

Moving to the file server and nginx CDN, we see much better QoS from the new Micron 9400 Pro 30.72TB versus the PCIe Gen3 x4 drives. Perhaps this makes sense if we think of an SSD on PCIe Gen4 as having a lower-latency link as well. On the nginx CDN test, we are using an old snapshot and access patterns from the STH website, with caching disabled, to show what the performance looks like in that case. Here is a quick look at the distribution:

Here the Micron 9400 Pro 30.72TB drive performed extremely well, topping the charts. We ended up with more throughput as well as lower latency and lower tail latency on an application that is very important to us.

Now, for the big project: we tested these drives using every PCIe Gen4 architecture and all the new PCIe Gen5 architectures we could find, and not just x86, nor even just servers that are available in the US.

Is Dell using these drives? It’d be nice to get 4 drives instead of 8 or 16 so it increases airflow. That’s something we’ve noticed on our R750 racks that if you use fewer larger drives and give the server more breathing space they run at lower power. That’s not all from the drives either.

I’m enjoying the format of STH’s SSD reviews. It’s refreshing to read the why not just see a barrage of illegible charts that fill the airwaves since Anandtech stopped doing reviews.

That multi uarch testing is FIRE! I’m going to print that out and show our team as we’re using many of those in our infra

I enjoyed seeing the tests for the different platforms. As the Power 9 was released in 2017, it’s interesting how it holds up as a legacy system. My understanding is that IBM’s current Power10 offering targets workloads where large RAM and fast storage play a role. In particular, the S1022 scaleout systems might be a good point of comparison.

At the other end of the spectrum, I wonder which legacy hardware does not benefit significantly from the faster drive. For example, would a V100 GPU server with Xeon E5 v3 processors benefit at all from this drive?

Micron 9400 en Fuego!

30TB over PCIe 4.0 should take around 1h. So, a 7 DWPD drive in this class would have to be writing at full speed for ~7h per day to hit the limit. I’m sure workloads like this exist, but they’d have to be rare. You probably wouldn’t have time for many reads, so they’d be write-only, and you probably couldn’t have any sort of diurnal use patterns, or you’d be at 100% and backlogged for most of the day with terrible latency. So, either a giant batch system with >7h/day of writes, or something with massive write performance but either no concern about latency, or the ability to drop traffic when under load. Packet sniffing, maybe?

Given the low use numbers on most eBay SSDs that I’ve seen, you’d need a pretty special use case to need more than 1 DWPD with drives this big.

That’s 1 hr to fill 30TB using sequential writes, which are faster. The specs for DWPD are 4K random since those are what kill drives. If the writes are all sequential, SSDs have much higher endurance. So the 1hr number used is too conservative.
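For anyone who wants to redo the fill-time arithmetic from this thread, here is a minimal sketch. It assumes a sustained sequential write rate of 7.0 GB/s, which is a round figure picked for illustration rather than a number from the review, and it reuses the hypothetical 7 DWPD rating from the comment above. The function names are made up for this example.

```python
def hours_to_fill(capacity_tb: float, write_gb_per_s: float) -> float:
    """Hours needed to write the full drive capacity once (decimal TB and GB)."""
    seconds = (capacity_tb * 1e12) / (write_gb_per_s * 1e9)
    return seconds / 3600.0


def write_hours_per_day(capacity_tb: float, write_gb_per_s: float, dwpd: float) -> float:
    """Hours per day of full-speed writing needed to reach a DWPD rating."""
    return dwpd * hours_to_fill(capacity_tb, write_gb_per_s)


CAPACITY_TB = 30.72      # drive capacity from the review title
ASSUMED_WRITE_GBPS = 7.0  # assumption: sustained sequential write rate
HYPOTHETICAL_DWPD = 7.0   # the hypothetical rating from the comment above

one_fill = hours_to_fill(CAPACITY_TB, ASSUMED_WRITE_GBPS)
per_day = write_hours_per_day(CAPACITY_TB, ASSUMED_WRITE_GBPS, HYPOTHETICAL_DWPD)
print(f"One full drive write: {one_fill:.2f} h")
print(f"Full-speed writing needed for {HYPOTHETICAL_DWPD:.0f} DWPD: {per_day:.2f} h/day")
```

Under that assumed rate, one full drive write takes a bit over an hour, so the "around 1h" estimate holds roughly. A slower sustained rate, such as a random-write workload, stretches both figures proportionally.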

How does one practically deploy these 30TB drives in a server with some level of redundancy? Raid 1/5/10? VROC?

@Scott: “You probably wouldn’t have time for many reads”

I am not sure what you mean. PCIe is full duplex and you can transfer data at max speeds in both directions simultaneously. As long as the device can sustain it, that is. I would assume reading is a much cheaper operation than writing, so in a device with many flash chips (like this one) that provide enough parallelism you should be able to do plenty of reads even during write-heavy workloads.

@Flav you would most likely deploy these drives in a set using a form of Copy-On-Write (ZFS) or storing multiple copies with an object file system or simply as ephemeral storage. At this capacity RAID doesn’t always make sense anymore as rebuild times become prohibitively long.

This is a superior read. TY STH

Great read. I’ve managed to get my hands on an 11TB Micron 9200 ECO, which I’m using in my desktop and while it’s an amazing drive, it gets really toasty and needs a fan blowing air directly at it, which makes sense, as the datasheet specifically requires a continuous airflow along the drive.

What I’d like to say with all that is that I think it would be really useful if you could include some power consumption figures for all of these tests, as the idle power is generally not published on the datasheets. All we get is a TDP figure, which is about as accurate as the TDP published for a CPU. If you used a U.2 to SFF-8639 cable, you could measure the current along the 2-3 voltage rails, which I think would be fairly simple to do.

Blah blah blah. How much are these mofos gonna cost? Looking forward to these drives being the norm for the consumer market at a low price, to be honest. Then we can have a good laugh at the price we used to have to pay for a 1TB SSD, like in the early 90s with 750MB HDDs costing £150+.

In general STH should have more focus on power consumption!

They are astonishingly affordable for what they are. $4,400 on CDW.com. Going to be loading the boat on these things.

How are you connecting the 9400 U.3 drives? I just bought a 7450 U.3 and can’t get it to work with a PCIe 3.0 x4 U.2 card, nor with an M.2 to U.2 cable. Both work fine with an Intel 4610 or Micron 9300 but not with the 7450. Just looking for a PCIe card or an adapter to test the 7450.