A few weeks ago we previewed the Intel Fortville dual 40GbE solution, along with some of Intel’s cards such as the XL710. With the recent release of Haswell-EP, Fortville is now available for public discussion.

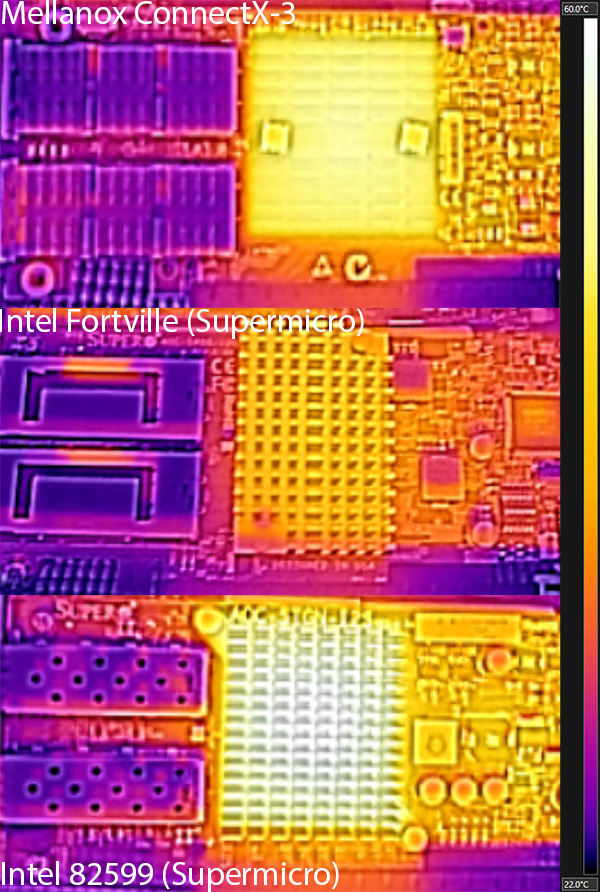

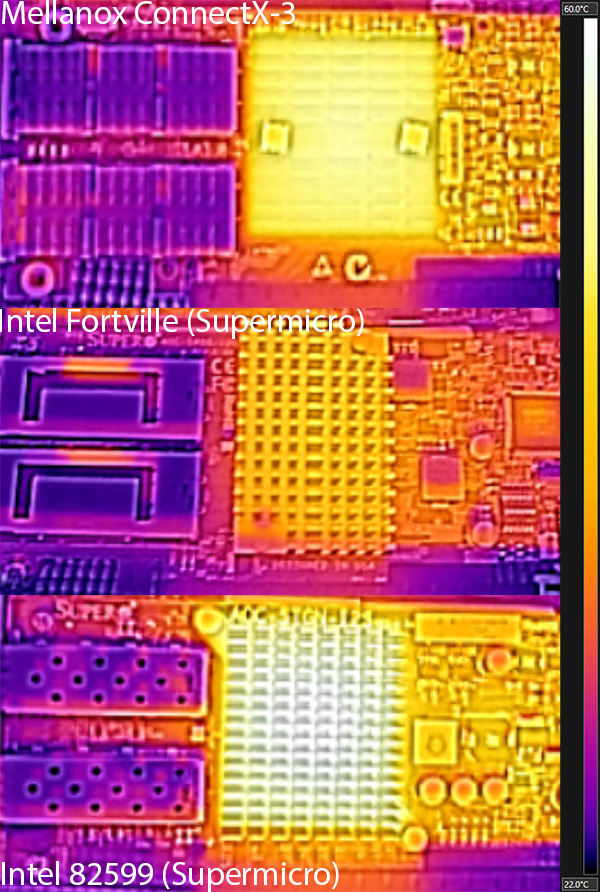

Here are three high-speed network controllers side by side. We used our FLIR Ex series 320×240 thermal imaging camera to snap a few pictures of the cards. Temperatures were normalized to our standard 22C to 60C range.
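Using one fixed temperature scale is what makes the three images comparable: if each picture were auto-scaled to its own hottest and coldest pixels, the same color could mean very different temperatures on different cards. The sketch below illustrates that idea under stated assumptions. It treats an image as a hypothetical 2D list of per-pixel Celsius readings, and the function name `normalize_temps` is illustrative; only the 22C and 60C bounds come from this article, and this is not how the FLIR software is necessarily implemented.

```python
# Minimal sketch of fixed-range thermal normalization (illustrative only).
# Assumption: an image is a 2D list of per-pixel temperatures in Celsius.
# The 22C and 60C bounds are the article's standard range.

T_MIN_C = 22.0
T_MAX_C = 60.0


def normalize_temps(pixels, t_min=T_MIN_C, t_max=T_MAX_C):
    """Map temperatures onto 0.0-1.0 using one scale shared by every image.

    Readings below t_min clamp to 0.0 and readings above t_max clamp to 1.0,
    so a given color always corresponds to the same temperature.
    """
    span = t_max - t_min
    return [
        [min(max((t - t_min) / span, 0.0), 1.0) for t in row]
        for row in pixels
    ]


# Example: 41C sits exactly halfway between 22C and 60C.
print(normalize_temps([[22.0, 41.0, 60.0], [15.0, 75.0, 31.5]]))
```

Clamping rather than rescaling keeps a hot card from compressing the colors of a cooler one, which is the point of a shared 22C to 60C range.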

In this mini round-up, the top card is a Mellanox ConnectX-3 VPI adapter that can run both FDR InfiniBand and 40GbE. In the middle is a new Supermicro Intel Fortville adapter. On the bottom is a legacy Supermicro 82599ES adapter (equivalent to the Intel X520). The images speak for themselves.

One can easily see that these new Intel Fortville-based adapters run cool.

At the Intel press day we heard that Fortville owes this largely to its new, smaller manufacturing process: Fortville’s controllers are 28nm parts. Since Intel’s own fabs run 32nm and 22nm processes, these seem to be built with TSMC as the foundry.

With a typical power draw of 3.6W and a 7W TDP, what we see in the thermal images makes sense. This new lower-power architecture is a game changer.

10GbE and 40GbE are now extremely low-power options. Many motherboards we have seen, such as those from Supermicro and Gigabyte, have integrated Intel X540 10GBASE-T controllers (some even have dual controllers for four 10GbE ports). The dual-port X540-T2 cards had a typical power consumption of 13.4W each with 2m cables. A dual-port 40GbE Fortville adapter offers the aggregate bandwidth of eight 10GbE ports, or the equivalent of roughly 54W of 10GBASE-T Ethernet (four 13.4W X540-T2 cards), for 3.6W. That has profound power and cooling impacts.
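The power comparison is simple arithmetic, sketched below with the figures quoted in this article (13.4W per dual-port X540-T2, 3.6W typical for Fortville). The key assumption, labeled in the code as well, is that "equivalent" means matching aggregate bandwidth: two 40GbE ports carry as much traffic as eight 10GbE ports. The helper names are hypothetical and exist only for this illustration.

```python
# Back-of-the-envelope power comparison using the article's quoted figures.
# Assumption: "equivalent" means equal aggregate bandwidth,
# i.e. 2 x 40GbE = 80Gbps = 8 x 10GbE. Helper names are hypothetical.

X540_T2_WATTS = 13.4          # typical power per dual-port X540-T2 card (2m cables)
X540_T2_PORTS = 2             # 10GBASE-T ports per X540-T2 card
FORTVILLE_TYPICAL_WATTS = 3.6 # typical power for the dual 40GbE Fortville part
FORTVILLE_40G_PORTS = 2


def tengbe_equivalent_ports(ports_40g):
    """Each 40GbE port carries the bandwidth of four 10GbE ports."""
    return ports_40g * 4


def x540_watts_for(ports_10g):
    """Power for enough whole dual-port X540-T2 cards to supply ports_10g ports."""
    cards = -(-ports_10g // X540_T2_PORTS)  # ceiling division
    return cards * X540_T2_WATTS


ports = tengbe_equivalent_ports(FORTVILLE_40G_PORTS)  # 8 ports
x540_watts = x540_watts_for(ports)                     # four cards
difference = x540_watts - FORTVILLE_TYPICAL_WATTS

print(f"{ports} x 10GbE via X540-T2: {x540_watts:.1f}W")
print(f"Same bandwidth via Fortville: {FORTVILLE_TYPICAL_WATTS:.1f}W")
print(f"Difference: {difference:.1f}W")
```

Rounding up to whole cards matters for port counts that are not a multiple of two; for the eight-port case it works out to four X540-T2 cards, about 53.6W, or roughly 50W more than the Fortville figure.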

For those wondering, I have been pestering manufacturers to find out when we will see Fortville integrated on motherboards. There are no firm timelines just yet, but it makes sense. We showed yesterday how the Intel Xeon E5-2699 V3 can consolidate two E5-2690-generation servers into a single machine. That much VM capacity will start to need additional networking bandwidth. Now that additional bandwidth has minimal power and cooling implications, new converged architectures will start to make sense.

I just $hat myself. Really? That is phenomenal. How did they do it? That looks cool enough to touch, in the 40C range (41C is the burn point, right?).

I tried building a box with six Intel X520s, and they were generating around 70W of additional load, as much as another low-power processor. Plus, you gotta keep NICs cool, or they can cause connection or system stability issues.

Sweet visual. I already have budget set aside for these in the October quarter.