The Gigabyte R280-G2O is a 2U server designed for GPU compute applications. Lately we have reviewed several GPU servers here at STH, from big 8-Way GPU machines to 4-Way GPU servers. Today we have the latest 3-Way GPU server from Gigabyte called the R280-G2O GPU/GPGPU rackmount server in the lab and ready for review.

Gigabyte R280-G2O GPU Server Key Features:

- Supports up to 3x double slot computing cards

- NVIDIA validated GPU platform: Supports 3x NVIDIA Tesla K80 GPU accelerators

- Supports AMD FirePro and Intel Xeon Phi cards

- Intel Xeon processor E5-2600 V3 product family

- 24x RDIMM/LRDIMM ECC DIMM slots

- 24x 2.5″ hot-swappable HDD/SSD bays – SAS card required to enable the drive bays

- Supports SATA III 6Gb/s connections

- 2x GbE LAN ports (Intel I350-BT2)

- 2x 1600W 80 PLUS Platinum 100~220V AC redundant PSU

- 2U form factor, height 87mm, width 430mm, depth 712.4mm

Storage provisions include 24x front-mounted 2.5” hot-swap bays with support for SATA III 6Gb/s connections. Networking is handled by 2x GbE LAN ports (Intel I350-BT2) and a remote management port. In addition, a mezzanine type T (Gen3 x8 bus) card slot is available for expansion cards at the back of the server.

Complete PCIe slots via riser cards are:

- 6x Full-length full-height slots

- 2x Half-length full-height slots

- 1x PCIe mezzanine type T (Gen3 x8 bus) slot

That is a solid number of expansion slots as this server is designed to make extensive use of PCIe devices. The installed MD90-FS0 motherboard includes all IR Power Design with Digital PWM and IR PowIRstage IC controllers, OS-CON Capacitors with a minimum service life of 50,000 hours and High-End Ferrite Core Chokes for ultra stable power delivery.

Close look at the R280-G2O GPU Server

The R280-G2O server comes in a heavy duty shipping box and weighs in at 39.68 lbs (18 kg) before GPUs and drives are loaded. The kit includes rails, two heat sinks and additional mounting hardware. There is also a driver DVD included in the kit.

Here we are looking at the front of the R280-G2O server. The front is divided up into three sections holding all 24x 2.5” hot-swap drive bays. Please note that a SAS card is required to enable the drive bays as the Intel PCH cannot support 24 bays.

At the back of the server we can see the rear exhaust ports for all 3x GPUs.

Across the bottom we find 2x 1600W Redundant Power Supplies. The middle IO area has 2x USB 2.0 ports, 2x USB 3.0 ports, 2x 1GbE LAN ports, 1x Remote Management port and a VGA connector.

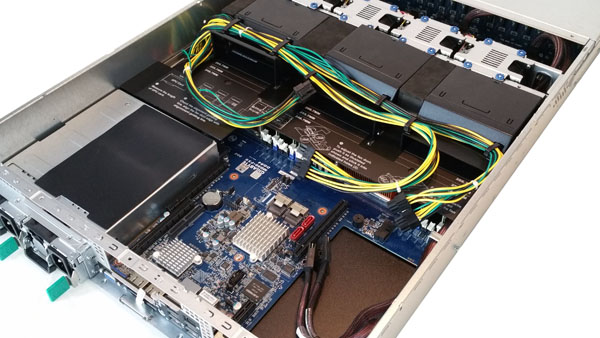

Here we have taken off the top lid to show the internal layout. Looking at the back of the server we can see the PCIe riser cards and power cables above the air-shroud.

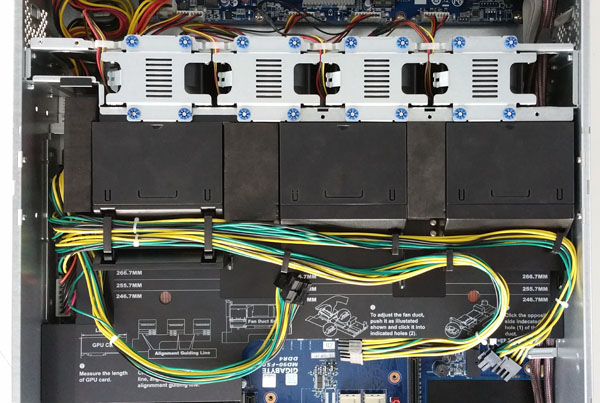

After removing the riser cards we can get a better look at the cooling shroud, which has a channel underneath to cool the CPU/RAM area. The top cooling area is broken down into three sections, one for each GPU.

Each of the three GPU cooling shrouds is adjustable so it can fit the different GPUs available on the market. Power to the GPUs is provided by power cables that connect to the motherboard on the left side.

The mid plane cooling bar houses four large 80x80x38mm (14,900rpm) fans which cool the server’s components. These fans are connected to the front backplane via headers.

They are easy to pull out and are cushioned well to keep vibrations to a minimum. However, the power cables must be disconnected from the backplane for complete removal, so these are not true hot swap fans. This would be a potential area for improvement in future iterations of the product.

At the left side of the server we have 2x 1600W Redundant 80 PLUS Platinum Power Supplies.

The power supplies are hot swap and a power supply can be replaced while the server is running.

On the front left side of the server there is a control panel with the typical functions found on most servers: Power and Reset buttons, ID button, System Status and LAN LEDs, and HDD Status LEDs.

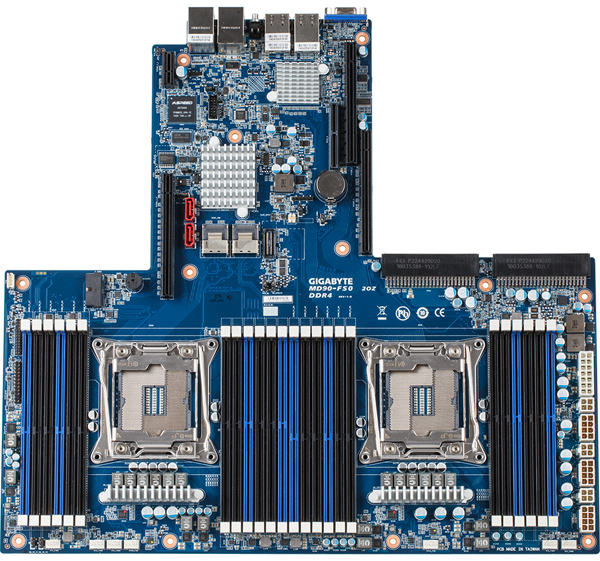

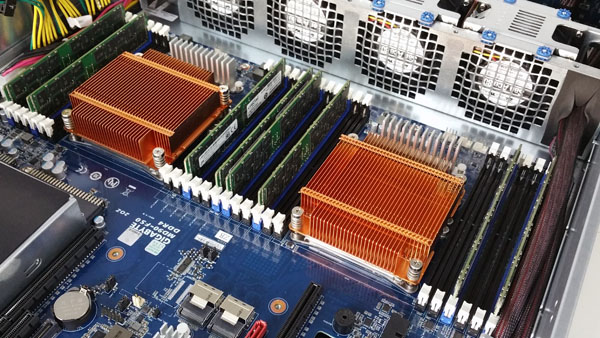

At the core of the R280-G2O GPU server is the MD90-FS0 motherboard. As one can see, this motherboard is a custom form factor specifically designed for this chassis and application.

Each of the 24x DIMM slots found on the MD90-FS0 motherboard supports ECC RDIMM/LRDIMM. Support includes single and dual rank RDIMM modules up to 32GB or quad rank LRDIMM modules up to 64GB, giving maximum capacities of 768GB and 1.5TB of DDR4 respectively. Down the center section are the expansion PCIe slots and on the right we find the PSU power connectors.
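
The capacity figures follow directly from the slot count and the largest supported module of each type. A minimal sketch of that arithmetic, using only numbers quoted in this review (the function name is ours, purely for illustration):

```python
# Maximum memory capacity from DIMM slot count and module size.
DIMM_SLOTS = 24       # DIMM slots on the MD90-FS0
RDIMM_MAX_GB = 32     # largest supported RDIMM module
LRDIMM_MAX_GB = 64    # largest supported LRDIMM module


def max_capacity_gb(slots: int, module_gb: int) -> int:
    """Total capacity when every slot holds a module of the same size."""
    return slots * module_gb


rdimm_total = max_capacity_gb(DIMM_SLOTS, RDIMM_MAX_GB)
lrdimm_total = max_capacity_gb(DIMM_SLOTS, LRDIMM_MAX_GB)

print(f"RDIMM:  {rdimm_total} GB")
print(f"LRDIMM: {lrdimm_total} GB ({lrdimm_total / 1024} TB)")
```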

The power supplies also plug directly into the motherboard eliminating a separate power distribution board. The power distribution board tends to be a high-failure rate part so eliminating this extra piece of PCB can aid in server reliability.

Configuring the Gigabyte R280-G2O

Next we install the CPUs, RAM and GPUs into the R280-G2O GPU server. We were very happy to find that Gigabyte supplies this server with copper heat sinks, which we feel perform better than aluminum heat sinks.

The CPUs are located directly behind the main cooling assembly, which provides optimal cooling for these components.

Looking at the back of the server now we see the PCIe slots that accept the riser cards. There are also two SFF-8087 connectors and cables that connect to the front drive bays. These each provide 4x SATA III 6.0Gb/s ports from the Intel C612 PCH. To enable additional drives on the 24-bay front panel, one will need to install an additional SAS/SATA HBA or RAID card.
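
To put numbers on how far the onboard ports go, here is a small sketch of the bay count. It assumes each onboard SATA port drives exactly one front bay; the review does not describe the backplane wiring in more detail than that.

```python
# How many front bays the onboard SATA ports can drive.
FRONT_BAYS = 24
SFF8087_CONNECTORS = 2
PORTS_PER_CONNECTOR = 4   # each SFF-8087 connector carries four SATA III ports

# Assumption: one onboard port per bay, no port multipliers or expanders.
onboard_bays = SFF8087_CONNECTORS * PORTS_PER_CONNECTOR
bays_needing_hba = FRONT_BAYS - onboard_bays

print(f"Bays served by the C612 PCH: {onboard_bays}")
print(f"Bays needing an add-in HBA or RAID card: {bays_needing_hba}")
```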

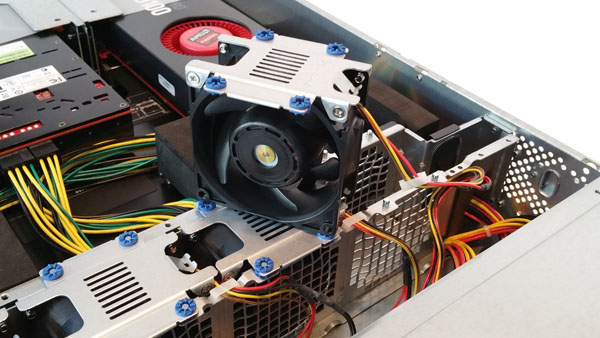

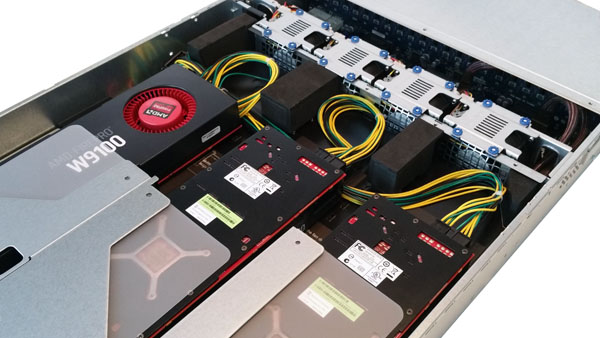

Turning to the GPU installation, Gigabyte uses riser cards to orient full height cards in the 2U chassis.

With the riser card on the left side removed, installing the GPU is rather straightforward. On this riser card we can see that two PCIe slots are available for expansion cards.

The riser card on the right side provides PCIe slots for two additional GPU cards, which are also straightforward to install.

After we have finished installing the CPUs, RAM and GPUs we take a final look at the system, all set up and ready to begin testing. We recommend connecting the power cables to the GPUs before reinstalling the riser cards into the server.

Here we have all three GPUs and riser cards installed back into the server. For the W9100s that we used in our test setup, we needed to remove the GPU cooling shroud for them to fit. Even so, we still have ample air flow from the front cooling bar to keep the GPUs cool.

Looking at the back of the server now we can see that the W9100s have top mounted cooling fans. We thought that the GPU on the far right might have heating issues, but in our tests we found it performed just fine. If we had been using cards like the S9150s that we have used in other tests, we would need to use the GPU cooling shroud to direct air flow into the GPUs, as S9150s do not have active cooling.

The server is all set up and ready to go now, so let us take a look at the BIOS and remote management.

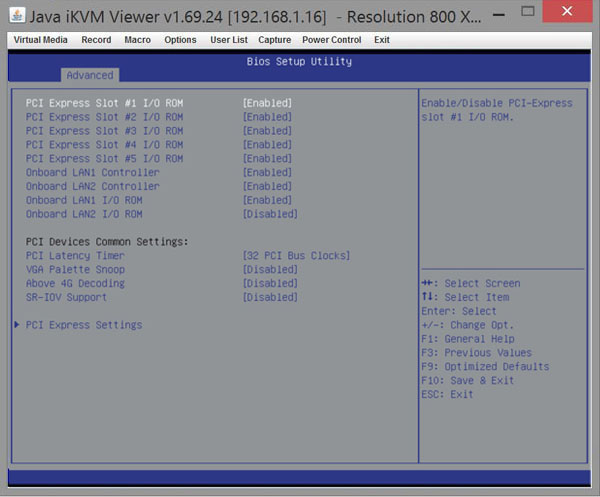

BIOS

We have previously covered the Gigabyte BIOS solution and find that it works well.

Looking at the BIOS we can see how it reports the PCIe slots. Each of the slots used for the GPUs is PCIe x16 and the 2x expansion slots are x8 slots.
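
As a rough sanity check on the slot widths, the sketch below totals the PCIe lanes for the slots described in this review. The 40 lanes per CPU figure is an assumption based on the Xeon E5-2600 V3 family and is not stated in this review. How the slots are split between the two CPUs is also not covered here, so the lanes are simply pooled.

```python
# PCIe lane budget for the slots described in this review.
LANES_PER_CPU = 40   # assumption: Xeon E5-2600 V3 figure, not from this review
CPU_COUNT = 2

# slot group -> (number of slots, lanes per slot)
slots = {
    "GPU x16": (3, 16),
    "expansion x8": (2, 8),
    "mezzanine type T x8": (1, 8),
}

lanes_used = sum(count * width for count, width in slots.values())
lanes_available = LANES_PER_CPU * CPU_COUNT

print(f"Lanes used by listed slots: {lanes_used}")
print(f"Lanes available (pooled):   {lanes_available}")
print(f"Spare lanes:                {lanes_available - lanes_used}")
```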

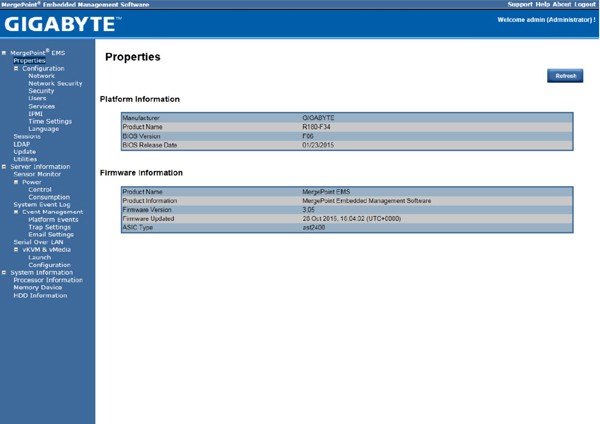

Remote Management

For remote management, simply enter the IP address of the server into your browser and log in.

The login information for the Gigabyte R280-G2O GPU server is:

- Username: admin

- Password: password

Remote management also reports the temperature of each CPU/GPU, along with the other readings typical of server remote management solutions.

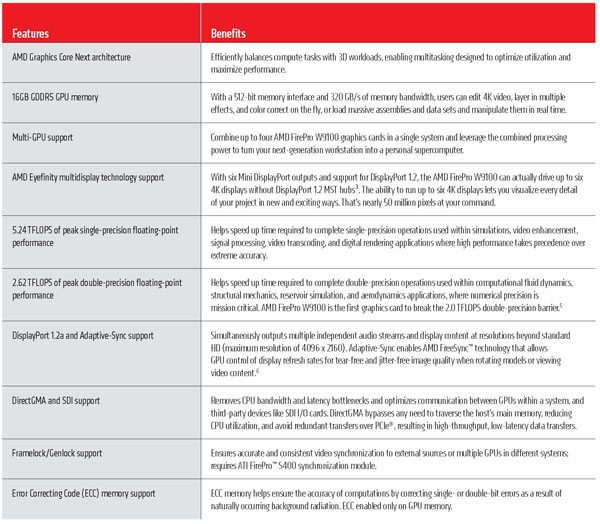

AMD FirePro W9100 Professional Graphics GPUs

For this review we will be using AMD FirePro W9100 GPUs. These are professional workstation graphics cards, but they can also be used in compute systems.

Key AMD FirePro W9100 Cooling/Power/Form Factor Specs:

- Max Power: 275W

- Bus Interface: PCIe 3.0 x16

- Slots: Two

- Form Factor: Full height/ Full length

- Cooling: Active cooling

We often see the W9100 in professional workstations for graphics and media applications. These GPUs have 6x Mini DisplayPort outputs and can support resolutions of 4096×2160. They also have 2,816 stream processors (44 compute units), which makes this a strong card for compute workloads.

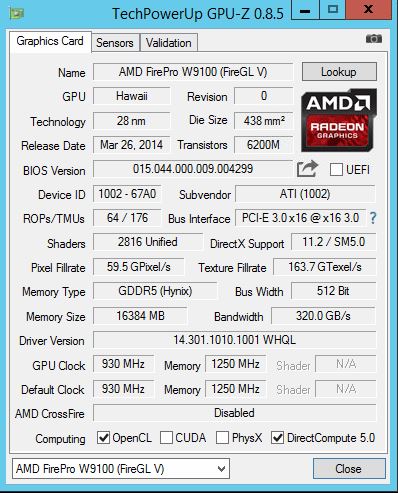

This is how GPU-Z reports these cards.

Test Configuration

The Gigabyte R280-G2O GPU Server does not include a DVD drive for installing software, so in our case we used a USB DVD drive to install Windows Server 2012 R2 and ran our Ubuntu disk directly off of the USB drive. We had no issues getting an OS installed on the system. Drivers were taken directly from Gigabyte’s website.

- Processors: 2x Intel Xeon E5-2650L V3 (12 cores each)

- Memory: 8x 16GB Crucial DDR4 (128GB Total)

- Storage: 1x SanDisk X210 512GB SSD

- GPUs: 3x AMD FirePro W9100 Professional Graphics GPUs

- Operating Systems: Ubuntu 15.04 and Windows Server 2012 R2
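
For readers who want to compare this setup against their own, the totals implied by the list above can be sketched as a small record. The class and field names are hypothetical and exist only for this illustration. The values come from the configuration list and the 24 DIMM slots in the spec.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewConfig:
    """Hypothetical container for the test configuration listed above."""
    cpus: int
    cores_per_cpu: int
    dimms: int
    gb_per_dimm: int
    gpus: int
    dimm_slots: int = 24   # slots on the MD90-FS0

    @property
    def total_cores(self) -> int:
        return self.cpus * self.cores_per_cpu

    @property
    def total_memory_gb(self) -> int:
        return self.dimms * self.gb_per_dimm

    @property
    def empty_dimm_slots(self) -> int:
        return self.dimm_slots - self.dimms


config = ReviewConfig(cpus=2, cores_per_cpu=12, dimms=8, gb_per_dimm=16, gpus=3)
print(f"Cores: {config.total_cores}")
print(f"Memory: {config.total_memory_gb} GB")
print(f"Empty DIMM slots: {config.empty_dimm_slots}")
```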

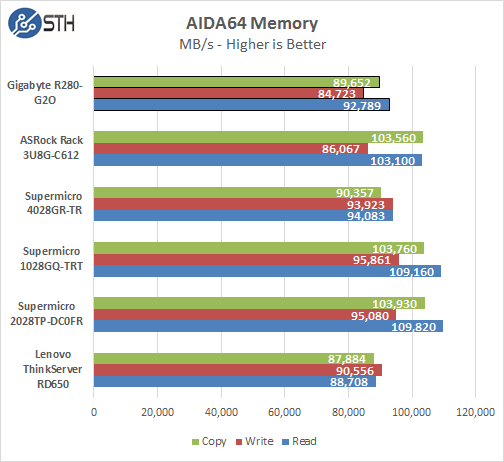

AIDA64 Memory Test

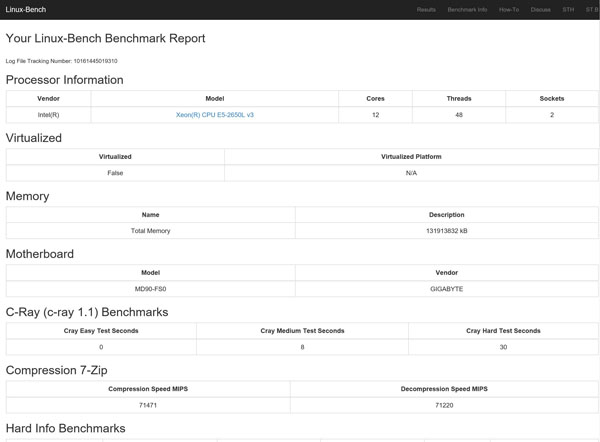

Linux-Bench Test

We ran the server through our standard Linux-Bench suite using Ubuntu as our Linux distribution. Linux-Bench is highly scripted and very simple to run. It is available to anyone, so you can compare these results with your own systems and with reviews from other sites. See Linux-Bench.

An example run of Linux-Bench on this system can be found here: Gigabyte R280-G2O GPU Server Linux-Bench Results

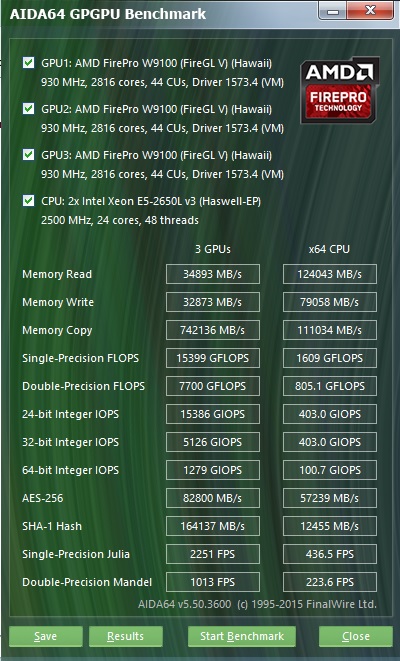

AIDA64 GPGPU Test

For our GPU testing we use AIDA64 Engineer to run the provided GPGPU tests, which can run on all three GPUs and the CPUs.

The first test we ran was the AIDA64 GPGPU benchmark. This test sees every GPU and CPU present in the system and goes through a lengthy benchmark run. Our combined single-precision performance came in at 15,399 GFLOPS, or roughly 15.4 TFLOPS!

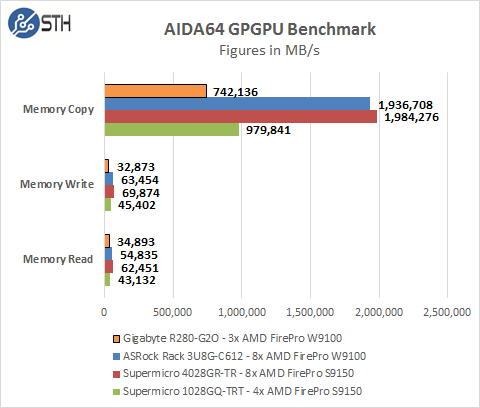

Memory Read: Measures the bandwidth between the GPU device and the CPU, effectively measuring the performance the GPU could copy data from its own device memory into the system memory. It is also called Device-to-Host Bandwidth. (The CPU benchmark measures the classic memory read bandwidth, the performance the CPU could read data from the system memory.)

Memory Write: Measures the bandwidth between the CPU and the GPU device, effectively measuring the performance the GPU could copy data from the system memory into its own device memory. It is also called Host-to-Device Bandwidth. (The CPU benchmark measures the classic memory write bandwidth, the performance the CPU could write data into the system memory.)

Memory Copy: Measures the performance of the GPU’s own device memory, effectively measuring the performance the GPU could copy data from its own device memory to another place in the same device memory. It is also called Device-to-Device Bandwidth. (The CPU benchmark measures the classic memory copy bandwidth, the performance the CPU could move data in the system memory from one place to another.)
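
All three figures are bandwidths: bytes moved divided by elapsed time. As a rough illustration of the CPU-side memory copy case only, here is a minimal timing sketch. It is not AIDA64's method: it is single threaded, it counts each byte once, and the function name and buffer size are our own choices, so expect results far below a tuned benchmark.

```python
import time


def copy_bandwidth_gbs(size_bytes: int = 64 * 1024 * 1024, repeats: int = 5) -> float:
    """Best-of-N host memory copy bandwidth in GB/s (decimal gigabytes)."""
    src = bytearray(size_bytes)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst = bytes(src)              # one full copy of the buffer
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)
    # Guard against a zero reading on very coarse timers.
    return size_bytes / max(best, 1e-12) / 1e9


print(f"Host memory copy: {copy_bandwidth_gbs():.2f} GB/s")
```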

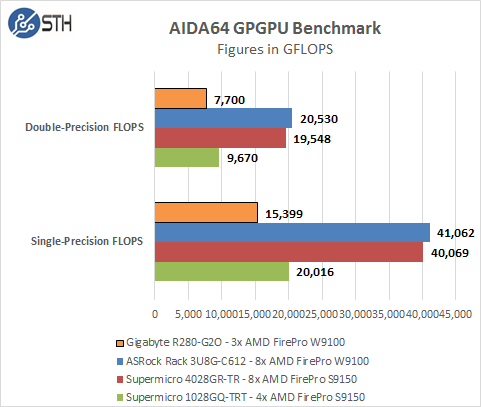

Single-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with single-precision (32-bit, “float”) floating-point data.

Double-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with double-precision (64-bit, “double”) floating-point data. Not all GPUs support double-precision floating-point operations. For example, all current Intel desktop and mobile graphics devices only support single-precision floating-point operations.
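
Because a MAD counts as two floating-point operations, a theoretical single-precision peak can be estimated from the stream processor count and the engine clock. The sketch below uses the 2,816 stream processors quoted earlier. The 0.93 GHz clock is an assumption that is not stated in this review, so treat the result as a ballpark only. Note that the measured 15,399 GFLOPS figure also includes the CPUs, so it is not a like-for-like comparison.

```python
# Theoretical single-precision peak from MAD throughput.
STREAM_PROCESSORS = 2816           # per W9100, as quoted in this review
FLOPS_PER_MAD = 2                  # one multiply plus one add
ASSUMED_CLOCK_GHZ = 0.93           # assumption: not stated in this review
GPU_COUNT = 3
MEASURED_COMBINED_GFLOPS = 15_399  # AIDA64 result, GPUs and CPUs together


def peak_gflops(stream_processors: int, clock_ghz: float) -> float:
    """Peak GFLOPS if every stream processor retires one MAD per clock."""
    return stream_processors * FLOPS_PER_MAD * clock_ghz


per_gpu = peak_gflops(STREAM_PROCESSORS, ASSUMED_CLOCK_GHZ)
all_gpus = per_gpu * GPU_COUNT

print(f"Per-GPU peak:  {per_gpu:,.0f} GFLOPS")
print(f"3-GPU peak:    {all_gpus:,.0f} GFLOPS")
print(f"Measured:      {MEASURED_COMBINED_GFLOPS / 1000:.1f} TFLOPS combined")
```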

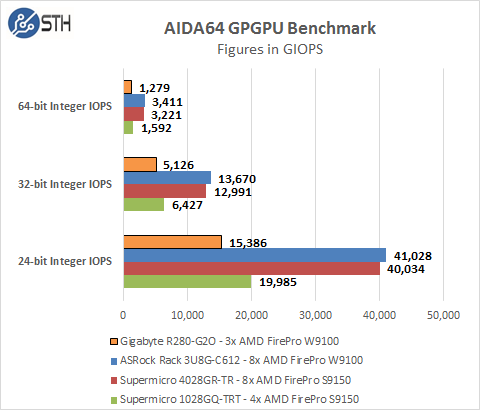

24-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 24-bit integer (“int24”) data. This special data type is defined in OpenCL on the basis that many GPUs are capable of executing int24 operations via their floating-point units, effectively increasing the integer performance by a factor of 3 to 5, as compared to using 32-bit integer operations.

32-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 32-bit integer (“int”) data.

64-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 64-bit integer (“long”) data. Most GPUs do not have dedicated execution resources for 64-bit integer operations, so instead they emulate the 64-bit integer operations via existing 32-bit integer execution units. In such cases 64-bit integer performance can be very low.

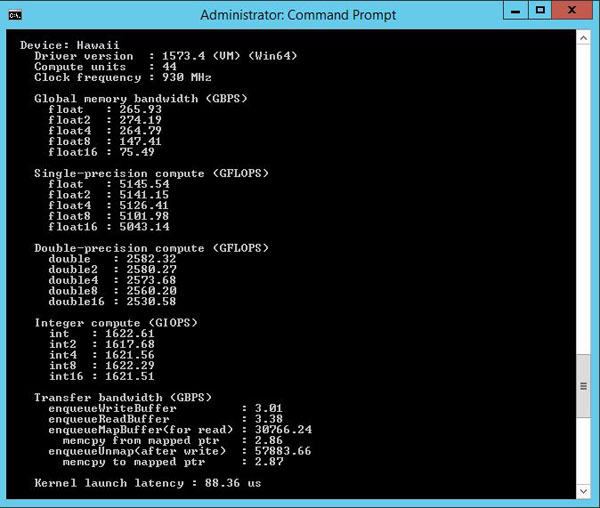

clPEAK Benchmark

We also ran the clPEAK benchmark on the system and saw numbers right where we expected them to be. This test runs on only one GPU at a time, so the screen shown was repeated three times, once per GPU.

The W9100 GPUs tested here show improved performance in single-precision and integer tests vs the S9150s we tested before. However, the S9150s have better performance in memory bandwidth and double-precision compute.

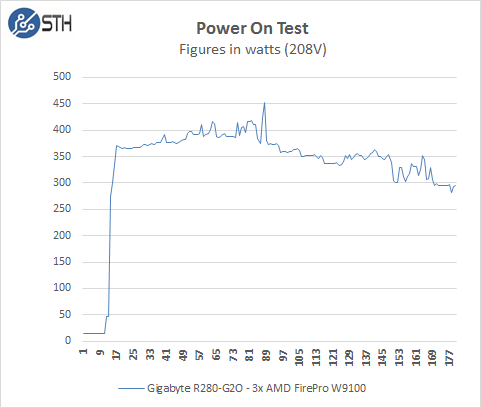

Power Tests

For our power testing needs we use a Yokogawa WT310 power meter which can feed its data through a USB cable to another machine where we can capture the test results. We then use AIDA64 Stress test to load the system and measure max power loads.

We start with the power needs of the system when it is turned off. The R280-G2O pulls ~14 watts to keep remote management on. When the power button is pressed, the system goes through its boot process, initializing various components before the OS starts. Our test system jumps to ~375 watts very quickly, peaks at ~452 watts and finally settles down to ~300 watts at idle. Depending on what the system is doing and on BIOS settings, this can increase. For our tests we use default BIOS settings and make no changes to them.

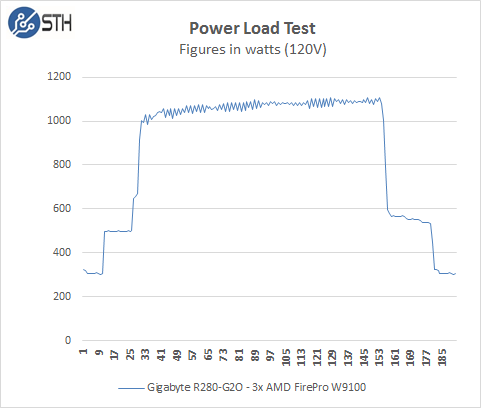

Fully Loaded Stress Tests Power Use

For our tests we use the AIDA64 Stress test, which allows us to stress all aspects of the system. We start with our system at idle, pulling ~305 watts, and press the stress test start button. You can see power use quickly jumps to 1,000 watts and continues to climb until we cap out at ~1,100 watts.
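
With redundant supplies, a single PSU has to carry the whole load if its partner fails, so it is worth checking the peak draw against one unit's rating. A minimal sketch using the approximate figures measured in this review (the helper name is ours):

```python
# Peak draw versus the rating of a single redundant PSU.
PSU_WATTS = 1600     # rating of each supply
IDLE_WATTS = 305     # approximate idle draw before the stress test
PEAK_WATTS = 1100    # approximate peak draw under the stress test


def load_fraction(draw_watts: float, capacity_watts: float) -> float:
    """Share of the supply's rated capacity that the draw represents."""
    return draw_watts / capacity_watts


peak_load = load_fraction(PEAK_WATTS, PSU_WATTS)
headroom_watts = PSU_WATTS - PEAK_WATTS

print(f"Idle load on one PSU: {load_fraction(IDLE_WATTS, PSU_WATTS):.1%}")
print(f"Peak load on one PSU: {peak_load:.1%}")
print(f"Headroom at peak:     {headroom_watts} W")
```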

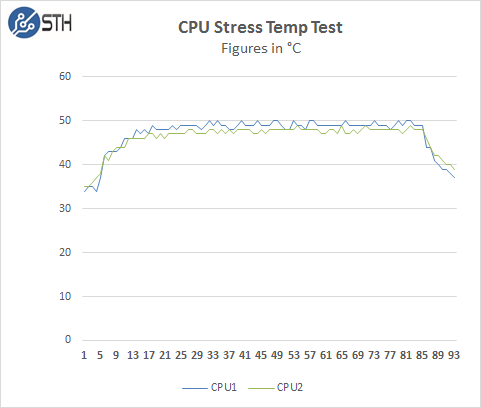

Thermal Testing

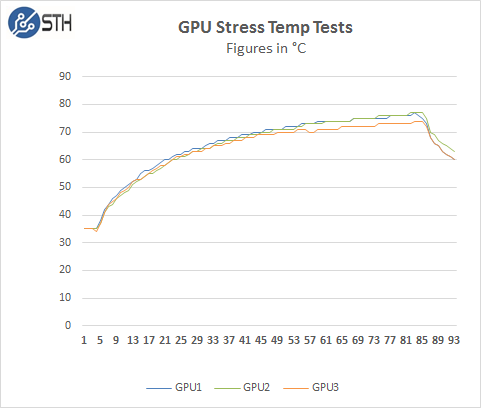

To measure temperatures, we used the HWiNFO sensor monitoring functions and saved that data to a file while we ran our stress tests.

Now let us take a look at how heat from the stress test loads was handled by the cooling system in the R280-G2O. This shows that the server’s 4x 80x80x38mm (14,900rpm) cooling fans are very powerful and cool the CPUs with their copper heat sinks very well.

Here we see the temperatures of the GPUs while the stress test is running. Just as with the CPUs, the server’s 4x 80x80x38mm (14,900rpm) cooling fans really do a nice job of keeping all 3x W9100s nice and cool under full load.

Conclusion

We have looked at several GPU servers now, from large 4U 8x GPU systems down to a 1U form factor 4x GPU system. Each type of system has its merits depending on how it will be deployed.

The Gigabyte R280-G2O GPU Server fits an interesting deployment model and has many advantages. The first is its low power draw compared to some of the other systems. This will work out very well if your rack has limited power capacity and the server must fit in with other server types. It also allows you to scale out by adding more GPU servers, again with minimal power draw, and still get high TFLOPS of computational power spread across several nodes, which might have advantages vs one large node. The next feature we like is the large number of storage bays at the front of the server. Out of the box these require a SAS storage card, and it would be nice if the server included onboard SAS options as well. The bays allow one to build additional storage I/O locally, which can be used either to hold datasets or as storage for scale out file systems. We also found performance to be right on par with other solutions we have looked at recently.

We did however find installing GPUs to be somewhat cumbersome, but this only needs to be done at the time of install and access should not be a problem if any cards need to be replaced. The air-shroud is also a bit cumbersome to work with, but it does provide good air-flow for the CPUs and GPUs and keeps the system cool. However, if you need to access the CPU/RAM area later, all of this must be removed to get to that area.

We did find the Intel Xeon E5-2650L V3s to be a good choice for this system. They have a high core count and are low power, which helps to keep temps down while still having enough horsepower to handle all the tasks needed when running this machine.
