When we first left Amazon AWS in favor of colocation, we did so with our eyes wide open. We projected that going colocation would save STH over $10,000 in two years. The actual number ended up closer to $9,200 due to Amazon’s falling prices and a few modeling misses on our part. Over the next few weeks we are embarking on a more ambitious plan to expand our colocation capabilities. With over two years of experience now, I thought it was time to provide some perspective.

Hardware – the great and not so good

We built our colocation setup on a bootstrap budget. Back when Dell PowerEdge C6100s were plentiful and relatively efficient, they were our boxes of choice. Since then we have decommissioned one of them due to the great hardware failure of 2014, when that chassis saw all of our Kingston E100 400GB drives fail within about a minute. These are the ridiculous drives that have 800GB of NAND onboard and 50% OP. That experience was terrible, and now we keep backups on no fewer than six different disks. Excessive? Possibly, but we are not letting that happen again and are taking steps to ensure it does not. More on that in a bit.
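Overprovisioning (OP) is quoted two different ways, which is why "800GB of NAND" and "50% OP" can look inconsistent at first glance. Here is a minimal sketch of both conventions, assuming the only difference between raw NAND and user-visible capacity is spare area (it ignores the GB vs. GiB gap and any other reserved blocks); the function name is hypothetical:

```python
def overprovisioning(raw_gb: float, user_gb: float) -> tuple[float, float]:
    """Return spare area as a fraction of raw NAND and as a fraction of user capacity."""
    spare_gb = raw_gb - user_gb  # NAND that is hidden from the host
    return spare_gb / raw_gb, spare_gb / user_gb

# Kingston E100 400GB figures from the text: 800GB raw NAND, 400GB exposed to the host.
of_raw, of_user = overprovisioning(800, 400)
print(f"{of_raw:.0%} of raw NAND, {of_user:.0%} of user capacity")
```

Measured against raw NAND, the E100 reserves 50%; measured against user capacity, the other common convention, the same drive is 100% overprovisioned.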

In terms of performance, we do not have to deal with “noisy neighbors” on our systems, and performance is extremely consistent. Whereas STH suffered segfaults on AWS due to low disk I/O in 2012 and 2013, this has never been an issue with our all-SSD architecture. We control how much resource contention there is across the entire stack, including CPU, drives and network.

We got an excellent deal on a Supermicro Intel Xeon E5 Fat Twin and this has become the main hosting box. The E5 series is extremely fast and power efficient.

Bottom line: We still use a Dell C6100, but with the Intel Xeon D-1540 now providing about as much performance as a dual L5520 server at less than half the power consumption, it is time to move on to newer generations.

Network – pfSense is awesome

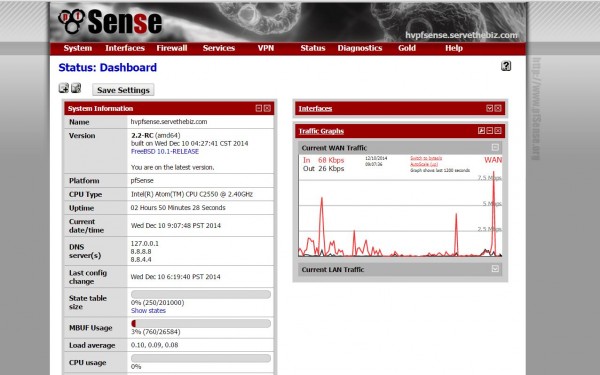

To keep costs down, we use pfSense as our main network router/firewall. pfSense is absolutely awesome. The only issue we have had with pfSense was one time when the firewall got a little overzealous and locked us out of the site. Less than ideal. The best part about pfSense for anyone building a similar setup is that the router, firewall, DNS, DHCP, NAT and other features can all be configured through the web GUI. We initially used two nodes, each with a single Intel Xeon L5520 processor. Since then we have migrated to Supermicro A1SRi-2758F motherboards, which provide four 1GbE NICs and Intel QuickAssist to accelerate VPN traffic.

Aside from these, our two HP V1910-24g switches have worked extremely well. They went into the colocation facility and have not had an issue since installation. That is exactly what one would want to see with a switch.

Bottom line: We will continue to use pfSense going forward, as it has worked very well for us.

Remote hands – lower costs than we expected

Since we colocate with Fiberhub in Las Vegas, NV, we do not have easy physical access to our hardware. Even so, paying for remote hands has cost us under $200 over the past two years.

There is one huge caveat here: we made two visits to our servers to do the hands-on work ourselves. Las Vegas is extremely convenient because it is easy to fly to from just about everywhere. We also attend shows there, such as CES, that bring us to Vegas on a regular basis. The low costs in Las Vegas plus the ability to schedule quick remote hands sessions have worked out well for us.

Bottom line: These are costs that need to be considered. Costs vary widely by datacenter and region, so we will factor them in going forward.

Cost savings – it worked

Using deals found in the STH “Great Deals” forum, we were able to buy new, upgraded hardware at only a small premium over our previous hardware. We were diligent about selling off unused hardware, so our net hardware differential over the past two years has been about $440. We still have six primary compute nodes, two machines for pfSense and an additional Atom node for miscellaneous uses. The remote hands costs plus new hardware did add up, but, much as we could have on AWS, we were able to upgrade our hardware while keeping costs reasonable.

AWS did introduce no-upfront reserved instances, which would have been the go-to option, but a 1-year term seems to be the way to go given the rate at which AWS rolls out new instance types.

One cost item we did miss was shipping. We ended up building many of the boxes ourselves and then shipping them to the colo facility. That added almost $200 to our operational costs.
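Tallying the incidental colo costs mentioned in this post gives a rough sense of their scale. This is a minimal sketch: the figures are the approximate values from the text ("under $200" for remote hands, "about $440" net hardware, "almost $200" shipping) rounded to whole numbers, and the category keys are illustrative labels, not line items from an actual invoice:

```python
# Approximate two-year incidental costs mentioned in this post, in USD.
# Values are rounded stand-ins for "under $200", "about $440" and "almost $200".
incidental_costs = {
    "remote_hands": 200,
    "net_hardware_upgrades": 440,
    "shipping": 200,
}

total = sum(incidental_costs.values())
per_month = total / 24  # spread evenly over the two-year period

for item, cost in incidental_costs.items():
    print(f"{item:>22}: ${cost:,}")
print(f"{'total':>22}: ${total:,} (about ${per_month:.0f}/month)")
```

Two of the three figures are upper bounds, so the real total is slightly lower than this sketch reports.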

Bottom line: We saved over 60% by going with colocation instead of AWS. AWS’s published cost-savings comparisons assume new hardware; savvy teams can save a bundle with colo.

What is next

We are sold on colocation. It saved us an enormous amount of money over the past two years, and the consistent performance has been excellent. One item we excluded from our $9,200 savings figure is that we ended up running significantly more VMs, particularly development VMs, than we expected. We had budgeted for four production VMs and two development VMs. Hosting the Linux-Bench application added two more production VMs and three development VMs, and we also run an additional development VM for testing various other functions. With colocation, adding that capacity was a few clicks away with no real cost impact. Our $383/month savings would go up significantly if we accounted for these new instances.
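The $383/month figure is the $9,200 two-year savings spread over 24 months, and the VM counts above show how far actual usage outgrew the original budget. A minimal sketch of both calculations, using only numbers from this post; the function name is hypothetical, and it deliberately does not price the extra VMs, since no per-instance AWS rate is given here:

```python
def monthly_savings(total_usd: float, months: int) -> float:
    """Average savings per month over the measurement period."""
    return total_usd / months

# Two-year savings figure from this post.
print(f"${monthly_savings(9_200, 24):.0f}/month")  # $383/month

# VM counts from this post: what we budgeted vs. what we actually run.
budgeted = {"production": 4, "development": 2}
added = {"production": 2, "development": 3 + 1}  # Linux-Bench VMs plus one extra test VM
running = {kind: budgeted[kind] + added[kind] for kind in budgeted}
print(running, sum(running.values()), "VMs vs.", sum(budgeted.values()), "budgeted")
```

Because the savings figure was computed against six budgeted VMs and we now run twice that many, pricing the extra instances at AWS rates would push the monthly figure well above $383.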

We have a big series coming on how we are changing our colocation strategy, and expect more in the not-too-distant future. The forums will have quite a bit of preview information, so sign up there and feel free to ask questions.