Equinix SV11 in Action: Housing the NVIDIA DGX B200 SuperPOD

Diving deeper into SV11, this data center is primarily designed to house the newest AI systems and hyperscale clusters. This means being able to accommodate the massive power and cooling needs of a rack of GPUs. NVIDIA has a cluster here that we are calling “the” NVIDIA DGX B200 SuperPOD because it is the cluster that runs many of the global GTC demos you see when something is running remotely, and Jensen is on stage presenting. Although you rarely get to see the physical system, we have certainly seen its outputs on stage.

Comprised of eight NVIDIA GB200 NVL72 racks and associated hardware, the SuperPOD is the first level of scaling out clustering in NVIDIA’s ecosystem.

NVIDIA has enormous GPUs clusters that it uses to develop and support its products. This cluster is a bit different because it is specifically located at the Equinix Silicon Valley campus. With the extensive connectivity to many carriers and the presence of many large organizations on campus, this cluster is also used for proof-of-concept work with partners and customers. Since so many have resources on the campus, those fiber lines we showed you earlier help provide connectivity to this SuperPOD.

Equinix is also NVIDIA’s global deployment partner for SuperPODs, so this Silicon Valley installation allows NVIDIA to validate the SuperPOD it before replicating it at other customer sites and Equinix data centers elsewhere.

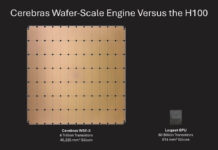

As the NVIDIA DGX B200 SuperPOD requires liquid cooling, this was also a critical factor for NVIDIA in terms of hosting needs. As part of the SuperPOD design, NVIDIA opted for a high-density layout to enable short copper runs between servers, thereby avoiding optical runs and the power penalty associated with additional optical transceivers. As a result, liquid cooling is a necessity when you look at a few racks that can use up to 1MW of power.

Liquid cooling is a more efficient cooling option overall. With the higher heat density of water (and other liquids) versus air, NVIDIA (and Equinix) can spend less power on cooling.

In the video, we had Charlie Boyle, VP, DGX Systems at NVIDIA. Charlie is one of my favorite folks to talk to since he explains not just what is in a system, but the why behind it.

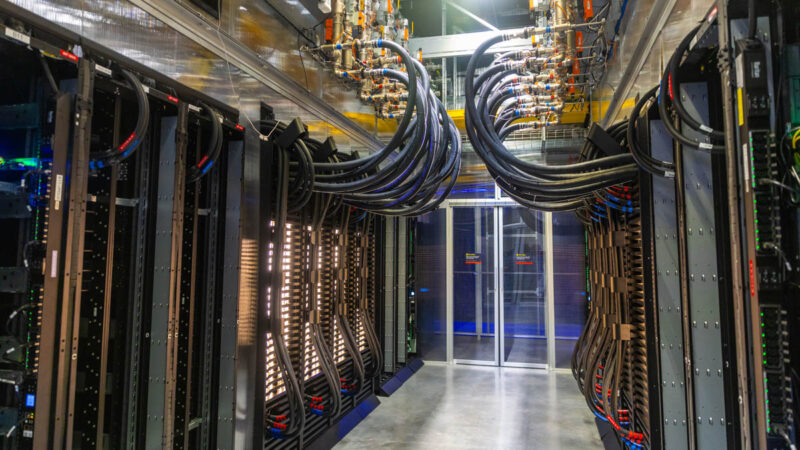

For example, in the photo above, Charlie explains why the liquid-cooling system for the cluster uses hoses rather than welded pipes.

He was telling me that to get the correct bend in a welded pipe was not an issue to have done by a skilled tradesman once, but getting thousands of them perfect for large-scale AI clusters would unlikely.

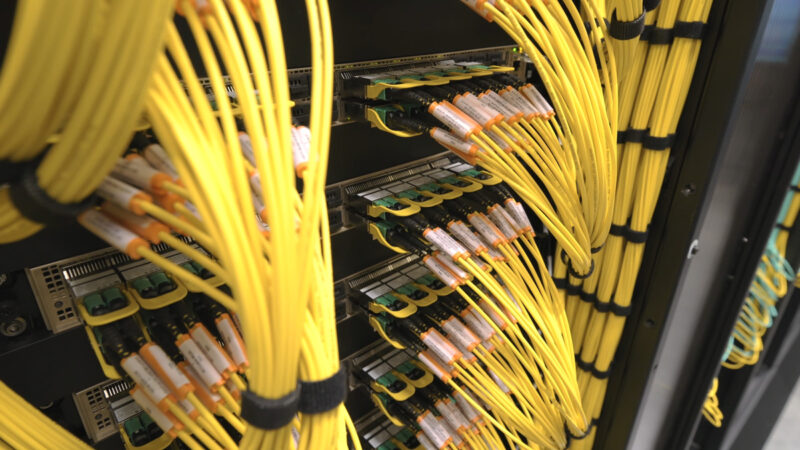

Also, because this was STH, it was interesting to see the networking and other components. Folks often talk about the GPUs, but really it is the interconnects that turn a single GPU package into a large AI cluster that is driving today’s AI boom.

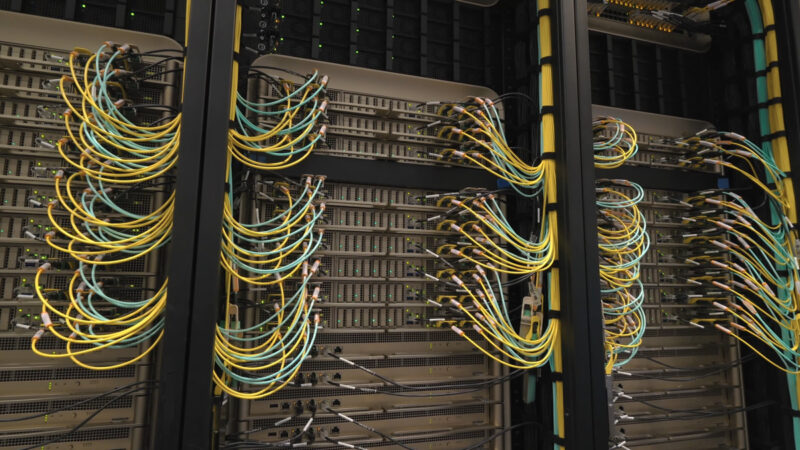

Since I know everyone likes to see fiber, here is the rear of the SuperPOD.

NVIDIA has multiple storage vendors colocated with this SuperPOD, as it is used for POC work.

We spotted both NetApp and DDN storage gear here.

Folks often overlook that, even though the main focus is on the GPU compute blades and interconnects, AI clusters also have traditional compute nodes next to them, often with lots of networking.

Something that is obvious when walking through the SuperPOD versus the floor of SV1 is how this entire cluster was co-designed to work together with very specific requirements, but also much higher density. It is a sharp contrast to the density of what is running in SV1.

You gotta visit the One Wilshire meet-me room sometime! (And: awesome!)

Super stellar video sth

Those less technical stories usually refer to open evaporative cooling, used at many hyper scalers, where they use swamp coolers and then humid air enters the servers via air cooling and is then discharged. They often air cool their ai servers

I have fond memories of working on servers and networking in SV1! Being there felt like being in the absolute center of the coolest part of the Internet. Equinix data centers like SV1 had style, and lots of it – shapes, patterns, colors, and just gobs of cool everywhere. Most other data centers were sterile, horrible places to be.