Sabrent has been selling customized Apex cards for some time. These types of cards are especially popular with folks doing things like professional rendering, editing, and so forth where having lots of fast local storage is useful, so long as it is also high-capacity. Now, Sabrent has a version of the Apex X16 PCIe Gen5 card that uses sixteen of the Sabrent Rocket 5 M.2 NVMe SSDs for 64TB of storage and over 50GB/s of sequential read performance.

Sabrent Apex X16 Rocket 5 Destroyer 64TB PCIe Gen5 Card Shown

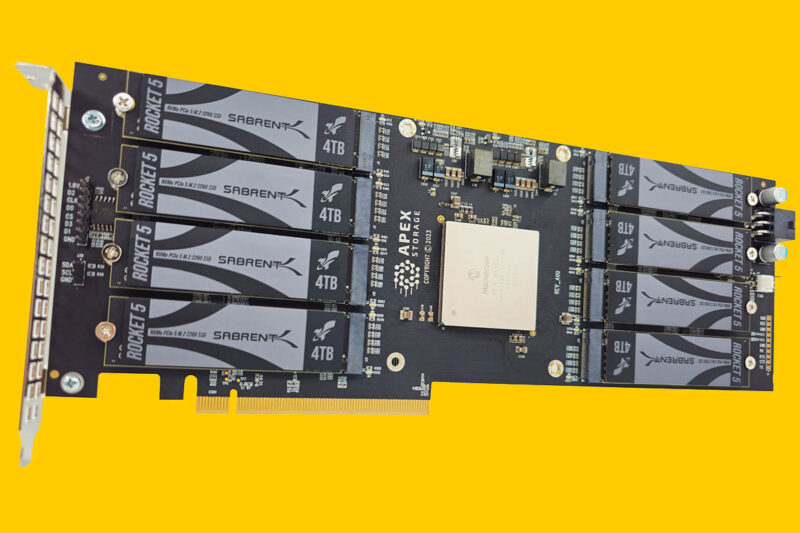

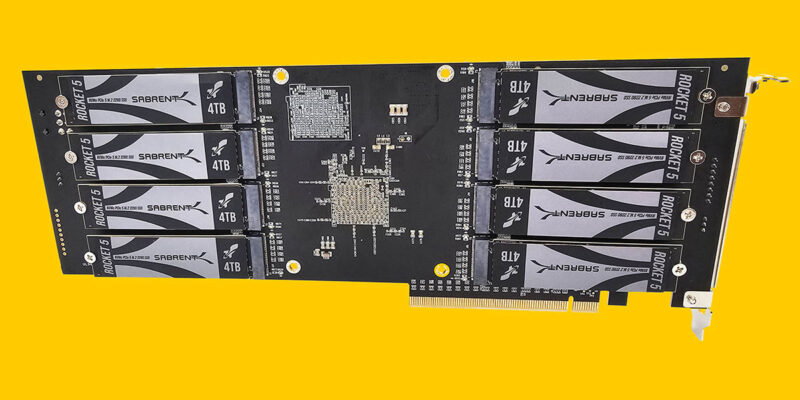

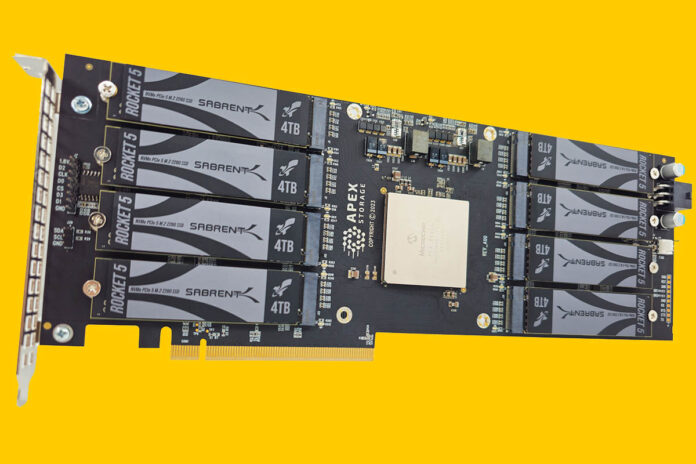

Looking at the Apex Storage card, we can see that it is massive. This is not going to fit in smaller cases, but if you have a big Threadripper Pro, Xeon W, or similar workstation then there should be room.

There are eight 4TB NVMe SSDs on the front and eight on the rear of the card. 16x 4TB is 64TB total capacity.

One of the other really interesting points is that the card is not using a Broadcom PCIe switch. Instead, it is using the Microchip Switchtec PFX 84xG5 PM50084. Microchip’s Switchtec line was built to provide an alternative to Avago/Broadcom’s PLX switches after the price increases of the 2016 era. It is cool to see the alternative used here even though solutions from companies like HighPoint Technology use the Broadcom PLX switches.

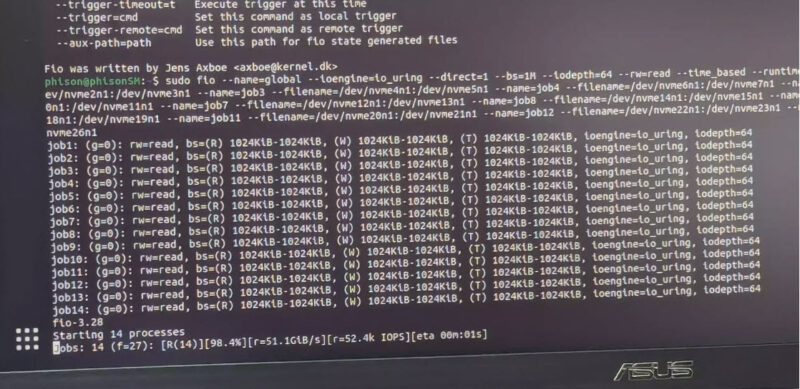

Since these are PCIe switches, multiple drives can aggregate bandwidth to the PCIe Gen5 x16 link. In the fio benchmark shot shown above, we can see over 50GB/s of throughput. Also, since this is a PCIe switch architecture, we do not get RAID functions onboard. We can see the screenshot above is simultaneously hitting multiple drives instead of a single volume. Still, it is very impressive.
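To put the 50GB/s figure in context, here is a rough sketch of the link math. It assumes each of the sixteen M.2 slots is a PCIe Gen5 x4 link, which this article does not confirm, and it only accounts for 128b/130b line encoding, not packet or protocol overhead. The function and variable names are ours, for illustration only.

```python
# Raw per-lane transfer rates in GT/s for PCIe generations using 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s after line encoding."""
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


DRIVES = 16
LANES_PER_DRIVE = 4  # assumption: each M.2 slot is wired as Gen5 x4

upstream = link_gb_per_s(5, 16)                            # host-facing x16 link
downstream = DRIVES * link_gb_per_s(5, LANES_PER_DRIVE)    # sum of drive-facing links
oversubscription = downstream / upstream

measured = 50.0  # GB/s, the "over 50GB/s" fio result shown above
utilization = measured / upstream

print(f"Upstream Gen5 x16:  {upstream:.1f} GB/s")
print(f"Downstream total:   {downstream:.1f} GB/s")
print(f"Oversubscription:   {oversubscription:.1f}:1")
print(f"50GB/s is {utilization:.0%} of the upstream link")
```

Under those assumptions, the drive side of the switch has about four times the bandwidth of the host link, so the x16 slot is the ceiling no matter how fast the individual drives are.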

Final Words

In many ways, solutions like these are very fun, albeit expensive. The 4TB Sabrent Rocket 5 is $729 at the time of writing. That means we have drives worth almost $11.7K plus the cost of the card. At some point, it might be easier to use a cheaper card and something like the Solidigm D5-P5336 61.44TB SSD to get more capacity per dollar. With all of this said, it is still very cool to see these big cards.
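As a quick check on that drive cost, the arithmetic can be sketched as below. It uses only the $729 per-drive price quoted above and leaves out the card itself, since we do not have a price for it here.

```python
DRIVE_COUNT = 16
DRIVE_CAPACITY_TB = 4
DRIVE_PRICE_USD = 729  # 4TB Sabrent Rocket 5 price at the time of writing

total_capacity_tb = DRIVE_COUNT * DRIVE_CAPACITY_TB
total_drive_cost = DRIVE_COUNT * DRIVE_PRICE_USD
cost_per_tb = total_drive_cost / total_capacity_tb  # drives only, card excluded

print(f"Capacity:   {total_capacity_tb} TB")
print(f"Drive cost: ${total_drive_cost:,}")
print(f"Per TB:     ${cost_per_tb:.2f}")
```

Swapping in the price and capacity of a single large enterprise SSD gives a like-for-like cost per TB comparison.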

If you just want to buy the 4TB SSD, then you can find them on Amazon (Affiliate link.)

Bandwidth seems severely capped, though I suppose it always was going to be with a limit of 63GB/s on a 16-lane link. Still, dropping from 63 to 50 is a bit much, no?

I do wonder whether it’s still worth it to use so many M.2 drives instead of going for four U.2/U.3 devices at 16TB each. That could saturate a 16-lane PCIe connection equally well, but would be easier to cool, as it’s less dense instead of being a double-sided PCIe card and there would be no need for an additional PCIe switch, assuming the motherboard supports bifurcation.

You can get Crucial P3 Plus 4TB gen3 SSD for ~$250 – each ssd is max 5GB/s.

80GB/s max…I doubt you’d see much of a performance drop at all.

$4k in ssd…don’t know if that switch chip supports mixed pcie gens but if it doesn’t …you’d drop to 16GB/s in x16 gen3.

Just saying it might not be worth it to do gen5 nvme versus gen3 considering the over-provisioned bandwidth.

Yeah, I think that this is a bit of an odd application of 16 NVMe drives. You can get better capacity elsewhere, though with lower bandwidth admittedly, or up to 4x the throughput through 4x x4 NVMe PCI-E cards and bifurcation. You’re likely to be using something like Threadripper if you need such large amounts of scratch space rather than consumer platforms where you only have one x16 PCIe 5.0 slot.

At hypothetical rates of 80GB/s you are going to be testing the limits of your CPU’s load/store abilities, so the exact way that the tests are performed is likely to have a major impact, as would things like LBA format to minimize I/O completion callbacks and that sort of thing.

No indication in the article if this card will “downshift” in a slower speed (per PCIe lane, not raw lane count) PCIe slot.

In any case the design already looks heavily oversubscribed so what’s a little more oversubscription between friends?

This board has great density, but I wonder what’s the need for such a device.

On Xeon W and TR Pro, bifurcation makes the additional PLX chip somewhat superfluous, and 16 SSDs would soon become a failure rate nightmare.

Maybe with much higher TBW SSDs that might work, but often such devices are 22100, therefore not fitting on such card.

To me it seems like an interesting exercise for enthusiast machines, without much use in other setups. Except, maybe, for mounting a bunch of AI TPUs or other m.2 adapters which might not starve from the capped bandwidth.

Hello,

Is there any indication this could be used with older generation 8TB M.2 NVMe SSDs? Having a 128TB card may be more useful for read-intensive applications.

Capacity? The point of this card is not capacity, it’s bandwidth.

Sixteen drives aggregated without redundancy…

Funny device. No one will put this toy in a DC, and home users / pro users will be better off with 15/30/60TB NVMe drives, which are way cheaper than that. When you use this much throughput you will be in DC/enterprise grade anyway.

It would be great to see a review and benchmarks comparing all of the high speed solutions, including the GRAID SupremeRAID SR-1010 (which is much faster, and supports RAID).