Today, NVIDIA and AWS announced a multi-generational partnership to integrate NVLink Fusion chiplets into future AWS AI racks and chip designs. Since acquiring Annapurna Labs in 2015, AWS has designed its own Graviton CPUs, Nitro networking cards, and AI accelerators. As a result, it has its own stack that is separate from NVIDIA's, even while it buys many NVIDIA GPUs. Going forward, AWS will integrate NVIDIA technology into its custom silicon stacks, which is a big deal.

NVIDIA NVLink Fusion Tapped for Future AWS Trainium4 Deployments

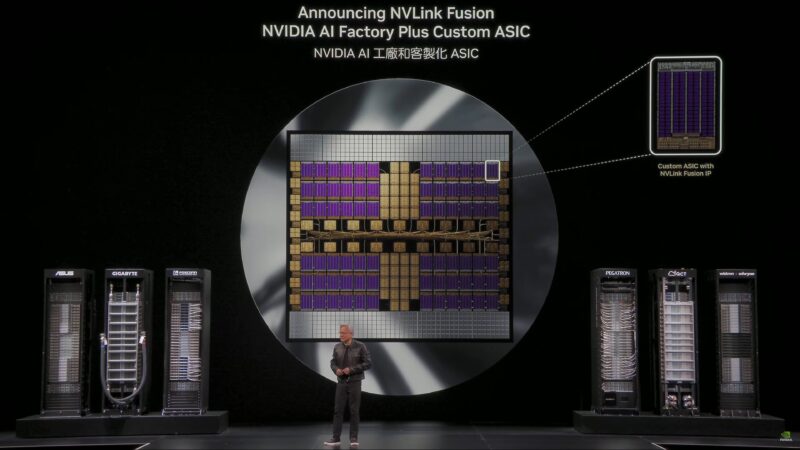

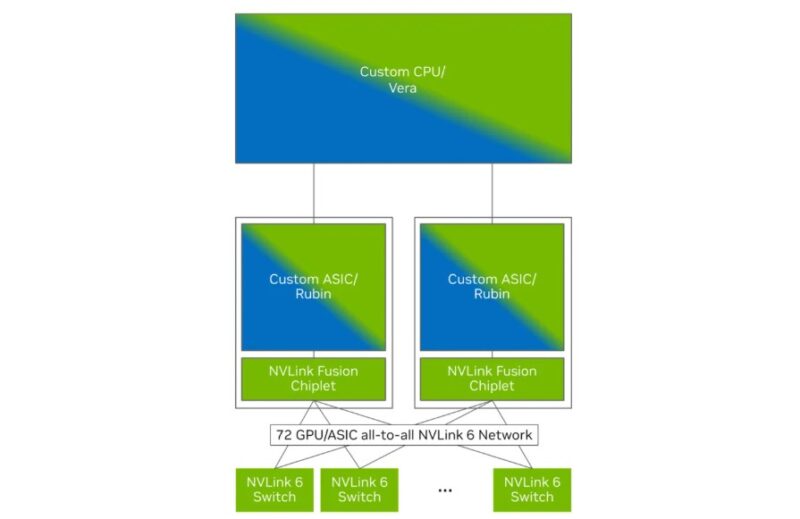

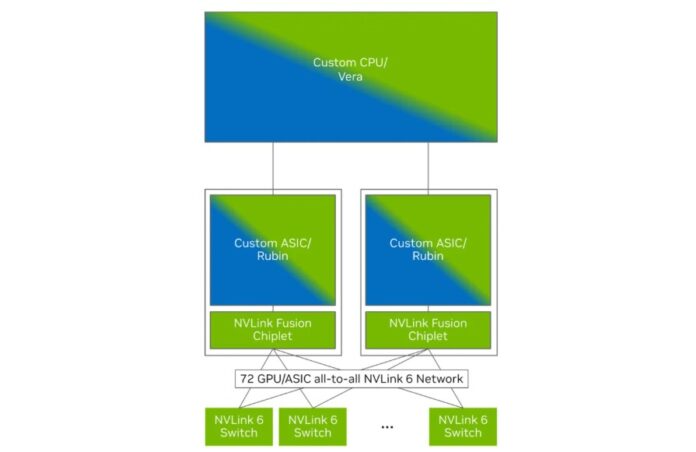

The idea behind NVLink Fusion is that NVIDIA can sell an IP block that allows other chips to communicate using NVIDIA NVLink.

NVIDIA announced a number of partners, and others, such as Arm, have joined recently.

From the release, we see that “AWS is designing Trainium4 to integrate with NVLink 6 and the NVIDIA MGX rack architecture, the first of a multigenerational collaboration between NVIDIA and AWS for NVLink Fusion.” (Source: NVIDIA)

This is an interesting deal for both companies. AWS can use a rack architecture for its own custom silicon similar to the one it uses for its NVIDIA NVL72 racks.

NVIDIA, in turn, gets its technology built into a hyperscaler's custom silicon effort. It has the opportunity to sell both the NVLink Fusion chiplet and NVLink Switches into racks built around non-NVIDIA CPU, GPU, and NIC silicon.

Final Words

Perhaps the most interesting part of this is that AWS, while continuing with future Trainium generations, decided it was better to build on NVIDIA NVLink than to build its own communications protocol, switches, and rack infrastructure. Notably, AWS using NVLink also means that it will not be using Broadcom Tomahawk Ultra or other Ethernet-based switch silicon for its in-rack scale-up compute links, since it would be odd to use both technologies for the same purpose.

When the NVLink licensing came out (see e.g. https://www.nextplatform.com/2025/05/19/nvidia-licenses-nvlink-memory-ports-to-cpu-and-accelerator-makers/, and probably STH covered it too, but I can't find the article), the license only applied if you had an NVIDIA device at one end of the link, mostly for connecting NVIDIA accelerators. But this deal seems to be about connecting AWS's CPUs to AWS's accelerators. I'm wondering why NVIDIA is even doing this.

To sell NVLink switches between accelerators?

NVLink is proprietary tech. Getting it accepted into datacenters to compete with open Ethernet (Ultra Ethernet) alternatives would be an uphill battle if NVIDIA limited it to NVIDIA-only installations.

NVLink, like the InfiniBand tech NVIDIA picked up with Mellanox, needs open accessibility or it just becomes a dying proprietary standard versus Ethernet. IMHO it's better for latency-sensitive datacenter builds, but it isn't everywhere like Ethernet is. Then again, neither is Graviton/Nitro, so I guess it's a win-win for both teams.

One thing NVLink has mandated is support for coherent traffic, which enables features like a flat memory space across scale-up nodes. That means really, really big systems. It also means Amazon can integrate natively with NVIDIA's hardware stack while providing its own solution side by side. This gives Amazon the flexibility to reuse infrastructure: if its next Graviton chip is delayed, it can get a CPU from NVIDIA and drop it into the current system design to keep moving forward. Things are moving so fast that having a parallel Plan B is nearly impossible.

I would disagree that Ethernet won't be used in Amazon's systems. It will still be there, and at high speeds, just not emphasized, since it will serve as the primary network I/O rather than the coherent cluster fabric.