At GTC 2019, there were a number of great demos by both NVIDIA and its partners. One that NVIDIA specifically briefed the press on, with a live demonstration before the keynote session at San Jose State, was GauGAN. NVIDIA GauGAN is a model trained on a myriad of photos. Its application and output are quite amazing. Given a rough sketch of what a composer wants a scene to include, it is able to generate a scene that incorporates those elements.

NVIDIA GauGAN at GTC 2019

The NVIDIA GauGAN demo at GTC 2019 was immensely interesting. My friends and family all know that I have what can be termed “limited artistic skill.” Put more bluntly, I failed coloring in kindergarten and never recovered. The concept of creating a photorealistic scene from my drawing seemed farfetched to me before seeing this demo.

Here is the NVIDIA demo of GauGAN to give you an idea of what it can do:

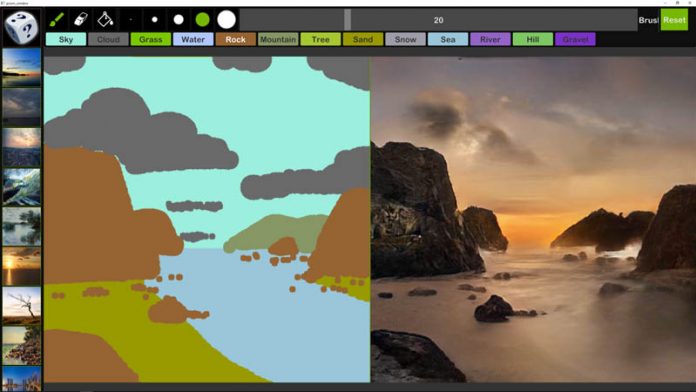

You compose a scene by marking what you want to see in different locations of the frame, and GauGAN fills in that scene. What is amazing goes beyond this basic capability. One can change the settings to different seasons or times of day, and the network changes the image output to match. For example, if you have a tree, GauGAN will give it leaves in the summer and just branches in the winter when the scene is covered in snow.

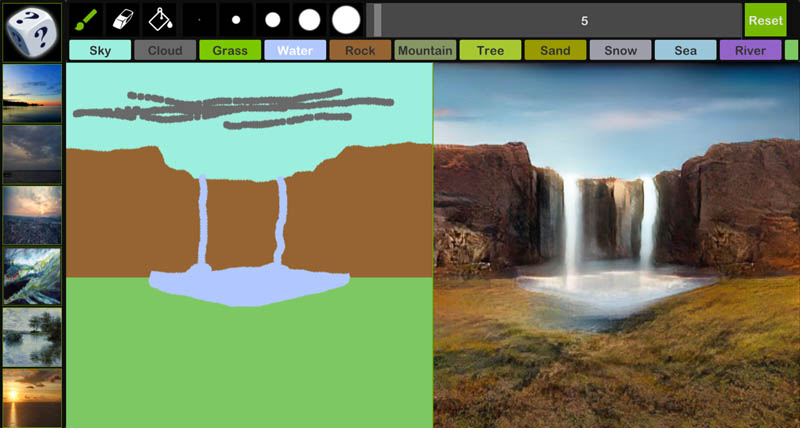

Here is an NVIDIA sample input and output.
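To make the input side of this more concrete, here is a minimal Python sketch of what "composing a scene" amounts to: a grid where each cell carries a label for what should appear there. This is only an illustration of the idea. The label names, the `blank_canvas`, `paint_rect`, and `label_counts` helpers, and the tiny canvas size are all hypothetical, and none of this is NVIDIA's code or API. The generation step that turns such a map into a photo is not shown.

```python
# Hypothetical labels for illustration; the article does not list GauGAN's real classes.
SKY, MOUNTAIN, LAKE, TREE = "sky", "mountain", "lake", "tree"


def blank_canvas(width, height, fill=SKY):
    """Create a height x width grid where every cell starts with the same label."""
    return [[fill for _ in range(width)] for _ in range(height)]


def paint_rect(canvas, label, top, left, bottom, right):
    """Paint a rectangular 'brush stroke' covering rows [top, bottom) and cols [left, right)."""
    for row in range(max(top, 0), min(bottom, len(canvas))):
        for col in range(max(left, 0), min(right, len(canvas[row]))):
            canvas[row][col] = label


def label_counts(canvas):
    """Count how many cells carry each label."""
    counts = {}
    for row in canvas:
        for label in row:
            counts[label] = counts.get(label, 0) + 1
    return counts


# Compose a rough scene: sky background, a mountain, a lake below it, a tree on the left.
canvas = blank_canvas(width=8, height=6)
paint_rect(canvas, MOUNTAIN, top=1, left=2, bottom=3, right=6)
paint_rect(canvas, LAKE, top=3, left=0, bottom=6, right=8)
paint_rect(canvas, TREE, top=2, left=0, bottom=4, right=1)

# Print the map using the first letter of each label per cell.
for row in canvas:
    print("".join(label[0] for label in row))
```

A wide brush here corresponds to painting large rectangles at once, while a finer brush would mean many smaller strokes and more precise region boundaries.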

I asked if I could try it myself to see if my poor artistic skills could be rectified, and I was told no. Still, we saw a live demo before the GTC keynote this week. The sample images may be the best examples, but based on what we saw in the live demo, they capture the essence of what GauGAN does fairly well.

Some of the finer details were a bit more spectacular. One example the company showed is that by adding a mountain and a lake element to the scene, GauGAN actually adds the mountain’s reflection to the lake’s surface. Changes like adding the lake, with its reflection, appeared within single-digit seconds of being made, not hours after the fact. Frankly, a lot of this happened faster than standard Adobe Photoshop filters get applied on a high-end workstation.

There were still a few rough edges. Some of the boundaries did not look perfect when using wide brush strokes. We were told there are still a few improvements to be made, and that using a finer brush would have yielded better results, albeit not at a pace fast enough for a live demo in front of an audience.

Learning More

You can learn more about GauGAN at the NVIDIA blog. I hope we get to actually use this at some point and it does not stay relegated to a research project.

So it's procedurally generated environments combined with MS Paint?

Haha, all I can think of is how awesome this would be for DnD night… Thanks Nvidia!

Super game