Microchip recently showed off its newest generation of SAS and NVMe expanders and adapters. The new Microchip 24G SAS solutions are designed for the PCIe Gen4 slots we will start to see in mainstream x86 servers with the AMD EPYC Rome launch next quarter. The new adapters and expanders are set to double SAS bandwidth alongside the doubling of PCIe bandwidth.

Microchip 24G SAS for PCIe Gen4 Servers

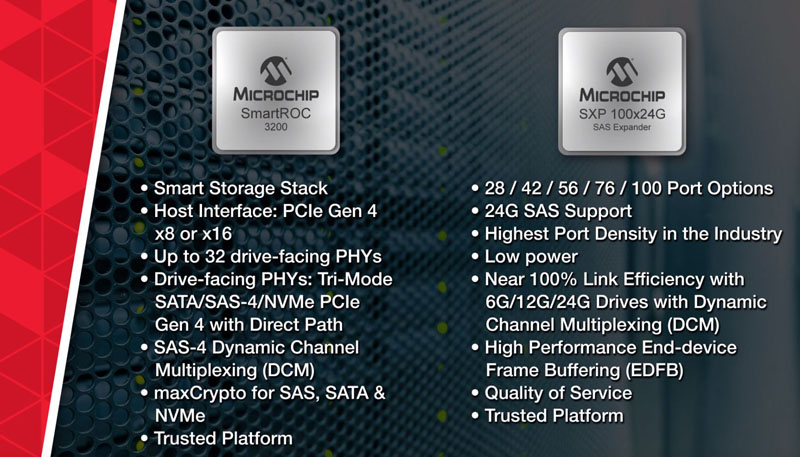

Microchip 24G SAS products are coming. Following the Microsemi acquisition, the company has a portfolio of SAS adapters and SAS expanders that it is readying for the 24G SAS generation. This includes its new Microchip SmartROC 3200 series of tri-mode adapters designed to work with SAS4, SATA, and NVMe. While SATA is stuck at 6Gbps, 24G SAS4 and NVMe storage will consume more bandwidth. As a result, the company offers tri-mode adapters as well as Dynamic Channel Multiplexing to get more bandwidth to SAS4 expander enclosures.

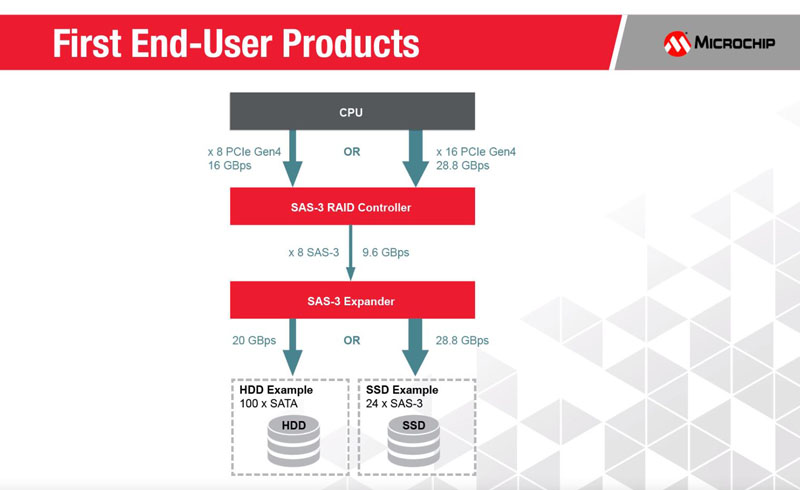

To show what will happen with the SAS3 generation, here is a diagram from the company. As you can see, a SAS3 controller on a PCIe Gen4 interface has more host bandwidth than eight lanes of SAS3 to an expander can provide. This holds even if the storage behind that SAS3 expander can deliver more than twice the bandwidth of eight SAS3 lanes.

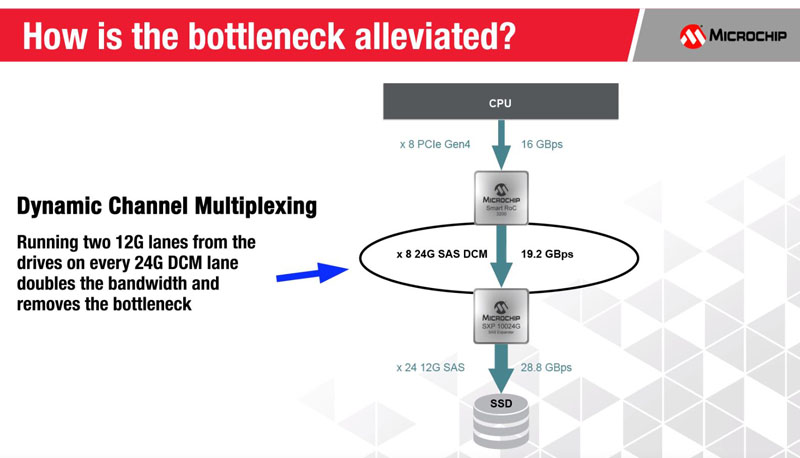

Using 24G SAS and Dynamic Channel Multiplexing, SAS expanders can uplink more bandwidth to the RAID controller or HBA, thereby more effectively using the PCIe Gen4 x8 link back to the server.

Over time, SAS4 SSDs will come out that can saturate the uplink with only eight drives. In the meantime, with today’s SAS3 SSDs and large hard drive arrays, one can alleviate the SAS bottleneck using new SAS4 solutions such as Microchip 24G SAS RAID controllers and SAS expanders.
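To put rough numbers on the bottleneck described above, here is a minimal Python sketch. The per-lane figures are our own back-of-the-envelope values derived from nominal line rates and encoding overhead, not numbers from Microchip, and the function names are hypothetical. The sketch ignores protocol overhead and does not model Dynamic Channel Multiplexing itself; it only compares the host link with the expander uplink.

```python
# Approximate usable bandwidth per lane in GB/s after line encoding.
# Assumed nominal values; real-world throughput will be lower.
PCIE_GB_PER_LANE = {
    3: 8.0 * 128 / 130 / 8,    # 8 GT/s with 128b/130b encoding
    4: 16.0 * 128 / 130 / 8,   # 16 GT/s with 128b/130b encoding
}
SAS_GB_PER_LANE = {
    "SAS3": 12.0 * 8 / 10 / 8,      # 12 Gb/s with 8b/10b encoding
    "SAS4": 22.5 * 128 / 150 / 8,   # 22.5 Gbaud with 128b/150b encoding
}


def pcie_bandwidth(gen, lanes):
    """Host-side bandwidth of a PCIe link in GB/s."""
    return PCIE_GB_PER_LANE[gen] * lanes


def sas_bandwidth(gen, lanes):
    """Bandwidth of a SAS wide port (controller to expander) in GB/s."""
    return SAS_GB_PER_LANE[gen] * lanes


def bottleneck(pcie_gen, pcie_lanes, sas_gen, sas_lanes):
    """Return which side limits throughput and the limiting bandwidth."""
    host = pcie_bandwidth(pcie_gen, pcie_lanes)
    uplink = sas_bandwidth(sas_gen, sas_lanes)
    side = "sas" if uplink < host else "pcie"
    return side, min(host, uplink)


if __name__ == "__main__":
    for sas_gen in ("SAS3", "SAS4"):
        side, limit = bottleneck(4, 8, sas_gen, 8)
        print(f"PCIe Gen4 x8 with 8x {sas_gen}: limited by {side} at ~{limit:.1f} GB/s")
```

Under these assumptions, eight SAS3 lanes (about 9.6 GB/s) fall well short of a PCIe Gen4 x8 link (about 15.75 GB/s), while eight SAS4 lanes move the limit back to the PCIe side.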

Final Words

24G SAS is not here yet. The initial plugfests are just kicking off this year. Still, we expect that as the PCIe Gen4 server ecosystem becomes more robust, we will see 24G SAS products come to market.

On my workstation I use two PCIE3 x8 12G SAS/SATA RAID Controllers (Areca 1883ix-24) built around the LSI ROC, along with five 12G RAID Expanders. Two of the expanders are integrated, one onto each of the two 12G Areca RAID Controller boards, and are not active (spare). The other three are stand-alone, active 12G RAID Expanders (two Intel RES3FV288 and one Adaptec 82885T) connected via external 12G cables. I interpret this PCIE4 bus/port hardware path as a means to finally provide near full bandwidth to both 12G RAID Controllers simultaneously, as the two RAID Controllers regulate the operations of 81 storage bays (56 2.5″, 25 3.5″) populated with 72 drives.

As for having more available bandwidth from the 12G RAID Expanders than a single PCIE3 x8 bus/port can provide, I will state that the extra Expander bandwidth is not wasted, and is thus wanted and appreciated. I use only RAID 5 SSD and HDD configurations with 6G storage devices, and the extra 12G RAID Expander bandwidth beyond the PCIE3 bus/port is fully consumed during a single heavy, or multiple light, RAID5 Vol. to RAID5 Vol. transfers. I look forward to using two 24G ROCs on a PCIE5 or higher bandwidth bus using 12G storage devices.

Getting PCIE4 at this stage is perhaps too late to invest in for systems configured like mine when mated to 24G RAID controllers. There will be little bandwidth headroom remaining on the PCIE4 x8 bus once the first 24G RAID Controller/Expander set is installed, resulting in a two 24G RAID Controller configuration time sharing bandwidth, just like PCIE3 x8 with two 12G RAID Controllers.

To expand the available bandwidth, I would like to see future RAID Controllers evolve to a PCIE x16 electrical lane edge connector configuration.

Park McGraw

Correction to 1st post: thinking of PCIE5, I stated RAID5 when I should have stated RAID10 (groups of 4 devices).

On my workstation I use two PCIE3 x8 12G SAS/SATA RAID Controllers (Areca 1883ix-24) built around the LSI ROC, along with five 12G RAID Expanders. Two of the expanders are integrated, one onto each of the two 12G Areca RAID Controller boards, and are not active (spares). The other three are stand-alone, active 12G RAID Expanders (two Intel RES3FV288 and one Adaptec 82885T) connected via external 12G cables. I interpret this PCIE4 bus/port hardware path as a means to finally provide near full bandwidth to both 12G RAID Controllers simultaneously, as the two RAID Controllers regulate the operations of 81 storage bays (56 2.5″, 25 3.5″) populated with 72 drives + spares.

As for having more available bandwidth from the 12G RAID Expanders than a single PCIE3 x8 bus/port can provide, I will state that the extra Expander bandwidth is not wasted, and is thus wanted and appreciated. I use only RAID10 SSD and HDD configurations with 6G storage devices, and the extra 12G RAID Expander bandwidth beyond the PCIE3 bus/port is fully consumed during a single heavy, or multiple light, RAID10 Volume to RAID10 Volume transfers. I look forward to using two 24G ROCs on a PCIE5 or higher bandwidth bus using 12G storage devices attached to RAID10 Volumes.

Getting PCIE4 at this stage is perhaps too late to invest in for systems configured like mine when mated to 24G RAID controllers. There will be little bandwidth headroom remaining on the PCIE4 x8 bus/port once the first 24G RAID Controller/Expander set is installed, resulting in a two 24G RAID Controller configuration time sharing bandwidth, just like PCIE3 x8 with two 12G RAID Controllers.

To increase the available bandwidth for the RAID Controller (ROC), I would like to see future RAID Controller boards evolve to a PCIE x16 electrical lane edge connector configuration.

Park McGraw

I think the interesting thing is the potential for meaningful nvme support.

Currently, $1,000 LSI raid cards can handle only 4 nvme drives (or many more sas3 drives), with 2:1 oversubscription of pcie bus. 2:1 is probably ok for a raid array, as writes use more drive bandwidth and less bus bandwidth, but you wouldn’t want to exceed 2:1.

At the same time, the limit of just 4 drives makes this barely useful as an nvme raid controller. You’d be better off just bifurcating or pcie-switching the pcie slots to get a bigger drive count, and just deal with the downsides of software raid or no raid at all.

A pcie4 device with 2-4x the bus bandwidth can make a big difference here. 8-16 nvme drives with a 2:1 bus oversubscription makes hardware raid a much more useful solution in an nvme world.
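As a rough check of the arithmetic in this comment, here is a minimal Python sketch. It assumes each nvme drive is PCIe Gen3 x4, that today's card sits in a Gen3 x8 slot, and that two to four times the bus bandwidth corresponds to Gen4 x8 and Gen4 x16. These are illustrative assumptions rather than anything stated by a vendor, and the function names are hypothetical.

```python
# Bandwidth expressed in "Gen3 lane equivalents": one Gen4 lane carries
# roughly twice what a Gen3 lane does (assumed nominal ratio).
GEN3_LANE_EQUIV = {3: 1, 4: 2}


def oversubscription(drives, host_gen, host_lanes, drive_gen=3, lanes_per_drive=4):
    """Ratio of total drive-side bandwidth to host slot bandwidth."""
    drive_bw = drives * lanes_per_drive * GEN3_LANE_EQUIV[drive_gen]
    host_bw = host_lanes * GEN3_LANE_EQUIV[host_gen]
    return drive_bw / host_bw


def max_drives(host_gen, host_lanes, max_ratio=2.0, drive_gen=3, lanes_per_drive=4):
    """Largest drive count that stays at or below the given oversubscription ratio."""
    host_bw = host_lanes * GEN3_LANE_EQUIV[host_gen]
    per_drive_bw = lanes_per_drive * GEN3_LANE_EQUIV[drive_gen]
    return int(max_ratio * host_bw // per_drive_bw)


if __name__ == "__main__":
    print(oversubscription(4, host_gen=3, host_lanes=8))  # 4 drives on Gen3 x8
    print(max_drives(host_gen=4, host_lanes=8))           # Gen4 x8 at 2:1
    print(max_drives(host_gen=4, host_lanes=16))          # Gen4 x16 at 2:1
```

With these assumptions, four Gen3 x4 drives on a Gen3 x8 card come out at 2:1, and holding that ratio allows 8 drives on Gen4 x8 or 16 on Gen4 x16.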

Software raid 5 with nvme provides a pretty poor experience, presumably because the drives are often spread out between both cpu sockets, and you pay a heavy numa penalty. As well, a faster write commit latency from supercap-backed raid ram means you don’t have to wait for raid 5 calculations AND the data being written to disk before your writes get acknowledged as completed. The raid card can commit the write before it has even begun the raid calculations, which can provide a big boost over software raid, even with faster raid levels like 1 or 10.

Keeping all the drives on one physical pcie slot also helps with numa issues — threads doing heavy i/o can be scheduled on the cpu that has the raid array attached to it.

Getting beyond 4 nvme drives in a “real” raid card will do a lot for disk performance moving forwards. Looking forward to it.

2021 now, almost 2 years later, and still no SAS-4 HBA/controllers on the market yet