Recently I had the opportunity to chat with Scott Tease, VP and GM of HPC and AI for Lenovo. The discussion was partly about STH’s plans to liquid cool our labs starting in 2022, but it was also a chance to catch up on the state of Neptune. Some of my favorite days of each year are when Scott shows me around Lenovo’s liquid-cooled solutions. At STH, we have been pushing our readers to get ready for 2022, when the threshold shifts and more systems will need liquid cooling, so it was important to catch up on Lenovo’s offerings. First, though, some really cool background Lenovo shared. Some of our readers skip background, but this is going to illustrate what is happening.

Lenovo on Why Liquid Cooling with Neptune

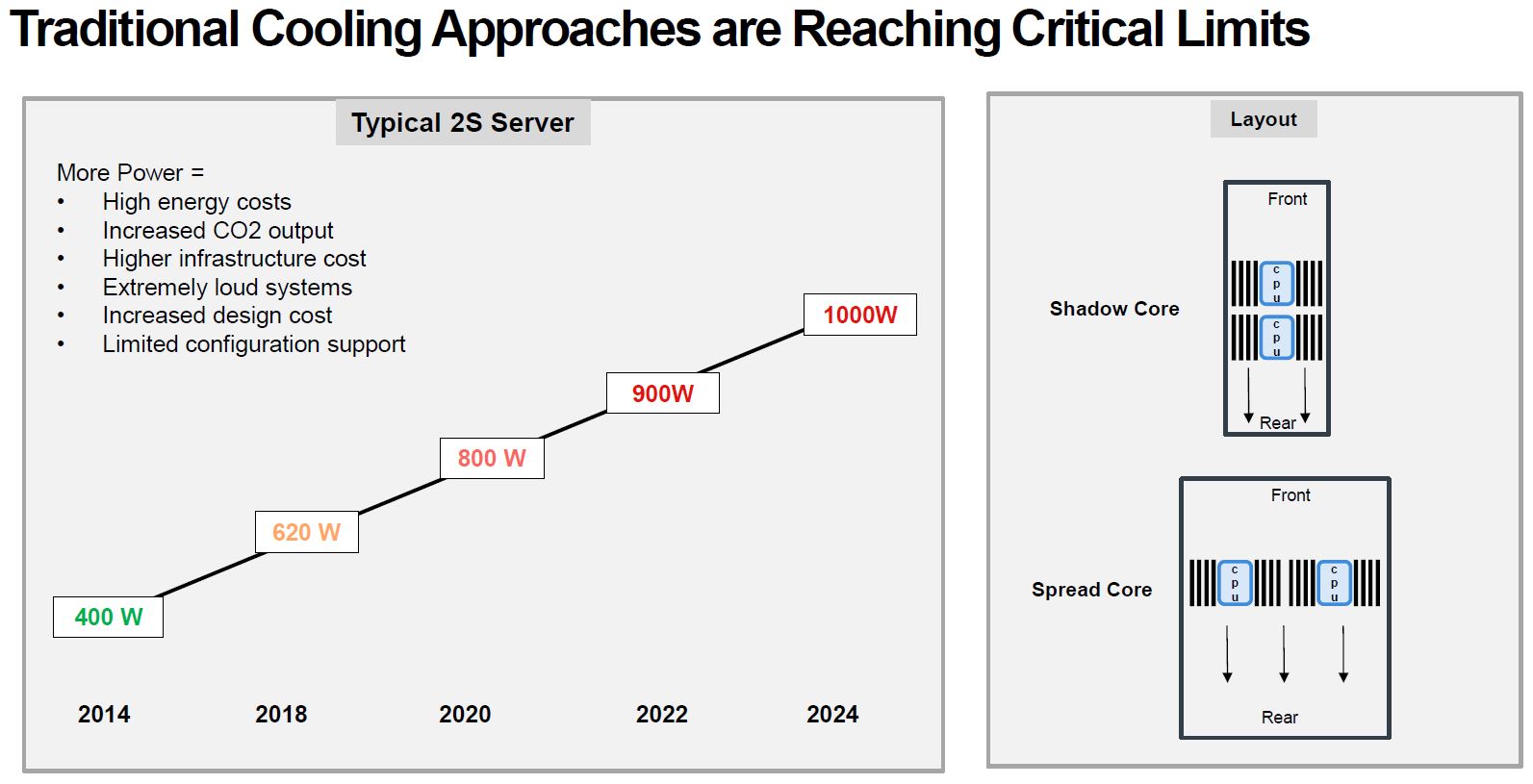

Some STH readers will have noticed a subtle change this year. We now regularly discuss the power of the servers we review in terms of not just what we observed for a single system, but also what a rack of those servers would consume. Lenovo is pointing to a trend we are seeing across vendors: dual-socket servers are now regularly pushing 800W+. To be frank, we already have a number of systems at 1kW per dual-socket server even without accelerators, and with GPUs we are frequently seeing well above 5kW per system.
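To put per-server numbers into rack terms, here is a minimal Python sketch of the arithmetic. The `rack_power_kw` helper, the 40-servers-per-rack count, and the overhead parameter are our own illustrative assumptions, not figures from Lenovo. Only the 800W and 1kW per-server values come from the discussion above.

```python
def rack_power_kw(servers_per_rack: int, watts_per_server: float,
                  overhead_watts: float = 0.0) -> float:
    """Estimate total rack power in kW.

    Assumes identical servers plus a fixed overhead (for example
    top-of-rack switches). All inputs are hypothetical planning numbers.
    """
    if servers_per_rack < 0 or watts_per_server < 0 or overhead_watts < 0:
        raise ValueError("inputs must be non-negative")
    return (servers_per_rack * watts_per_server + overhead_watts) / 1000.0


# Hypothetical rack of 40 x 1U dual-socket servers (assumed count).
for label, watts in [("800W dual-socket", 800.0), ("1kW dual-socket", 1000.0)]:
    print(f"{label}: {rack_power_kw(40, watts):.1f} kW per rack")
```

Even without accelerators, a full rack in this sketch lands in the 32kW to 40kW range, which is well beyond what many older facilities budget per rack.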

The challenge is that traditional 1U and 2U servers have to expel more heat. In half-width designs, such as the nodes used in popular 2U 4-node (2U4N) servers, the second CPU is heated by the exhaust air from the first, leading to severe thermal challenges.
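The preheating effect can be estimated with the standard energy balance for an airstream, ΔT = P / (ρ · V · c_p). The sketch below is only a rough illustration: it assumes all of the first CPU’s heat ends up in the air reaching the second CPU, uses typical room-condition values for air density and specific heat, and the 250W and 30 CFM figures are hypothetical rather than measurements from any system we tested.

```python
AIR_DENSITY_KG_M3 = 1.2      # approximate density of air at room conditions
AIR_CP_J_KG_K = 1005.0       # approximate specific heat of air
M3_S_PER_CFM = 0.000471947   # 1 cubic foot per minute in m^3/s


def cfm_to_m3_s(cfm: float) -> float:
    """Convert airflow from CFM to cubic meters per second."""
    return cfm * M3_S_PER_CFM


def air_temp_rise_k(power_w: float, airflow_m3_s: float,
                    density: float = AIR_DENSITY_KG_M3,
                    cp: float = AIR_CP_J_KG_K) -> float:
    """Temperature rise of an airstream absorbing power_w watts.

    Assumes steady state and that all of the heat goes into the air.
    """
    if airflow_m3_s <= 0:
        raise ValueError("airflow must be positive")
    return power_w / (density * airflow_m3_s * cp)


# Hypothetical example: a 250W CPU with 30 CFM passing through its heatsink.
rise = air_temp_rise_k(250.0, cfm_to_m3_s(30.0))
print(f"Air reaching the second CPU is roughly {rise:.1f} K warmer")
```

Whatever the exact numbers are for a given chassis, the second CPU starts with an inlet temperature that is higher by that rise, so it has less headroom before it throttles.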

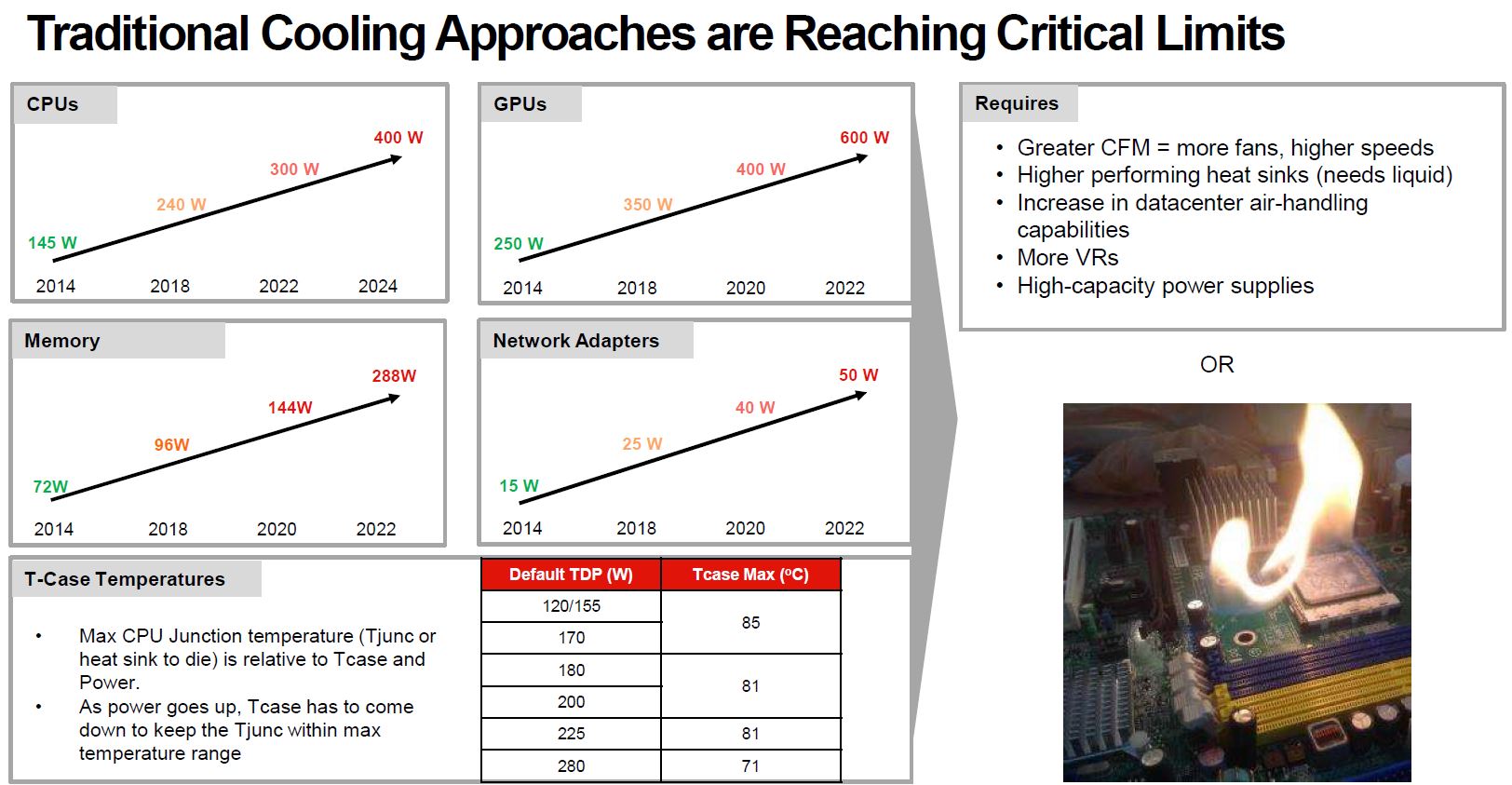

Lenovo has 300W CPUs in 2022, but let us be clear: STH has already heard of 300W CPUs as variants of current-generation products for specific customers. Going beyond CPUs, GPUs are moving from 500W, such as the 500W A100s we have tested on STH, to over 600W next year, and we expect multiple GPU vendors to be at 600W+ by then. Likewise, we are seeing memory and network adapters scale power consumption as well, especially with the new DPU devices.

As TDPs are increasing, Tcase limits are decreasing as well. For a server vendor, that means that as devices generate more heat, the server also needs to keep those devices at lower temperatures. Realistically, most of today’s devices will throttle when they get too hot, but thermal throttling means less performance, and that is why cooling is important.
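One way to see why rising TDP and falling Tcase compound each other is a simple lumped thermal-resistance model, where Tcase ≈ T_coolant + P × R. The sketch below computes the case-to-coolant resistance a cooling solution would need to hit. This is a simplification we are assuming for illustration, and the wattages, Tcase limits, and 35°C inlet temperature are hypothetical values, not vendor specifications.

```python
def required_thermal_resistance(tcase_max_c: float, inlet_c: float,
                                power_w: float) -> float:
    """Maximum case-to-coolant thermal resistance (degrees C per watt)
    that keeps a device at or below tcase_max_c.

    Uses the lumped model Tcase = inlet + power * R, which is an
    assumption made for illustration only.
    """
    if power_w <= 0:
        raise ValueError("power must be positive")
    if tcase_max_c <= inlet_c:
        raise ValueError("coolant is already at or above the Tcase limit")
    return (tcase_max_c - inlet_c) / power_w


# Hypothetical generations: power goes up while the Tcase limit goes down.
today = required_thermal_resistance(tcase_max_c=85.0, inlet_c=35.0, power_w=200.0)
future = required_thermal_resistance(tcase_max_c=75.0, inlet_c=35.0, power_w=300.0)
print(f"Today:  {today:.3f} C/W allowed")
print(f"Future: {future:.3f} C/W allowed")
```

In this made-up example the cooling solution has to be almost twice as effective for the future part, even though its power only went up by 50%. That is the squeeze that pushes vendors toward liquid.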

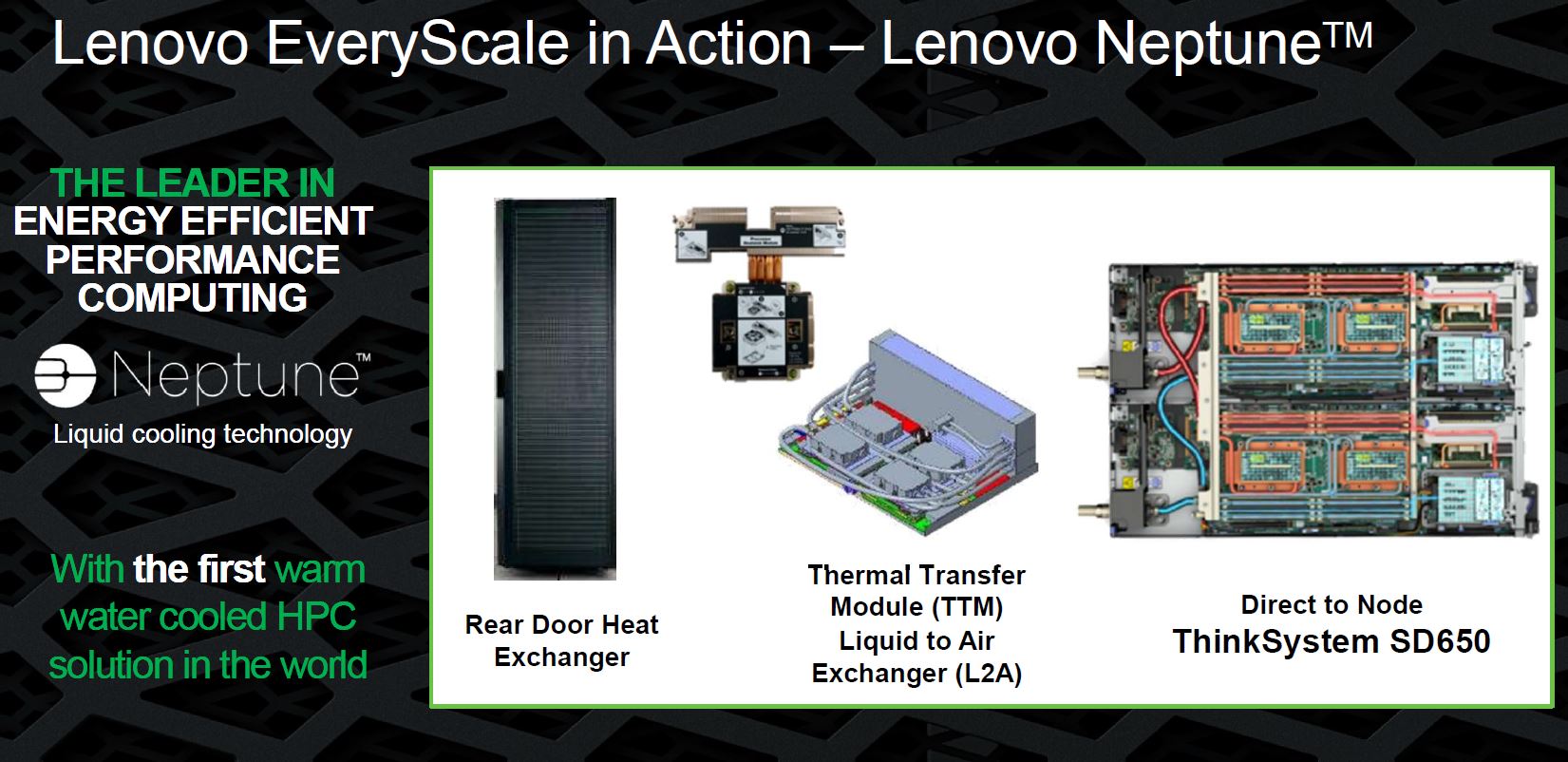

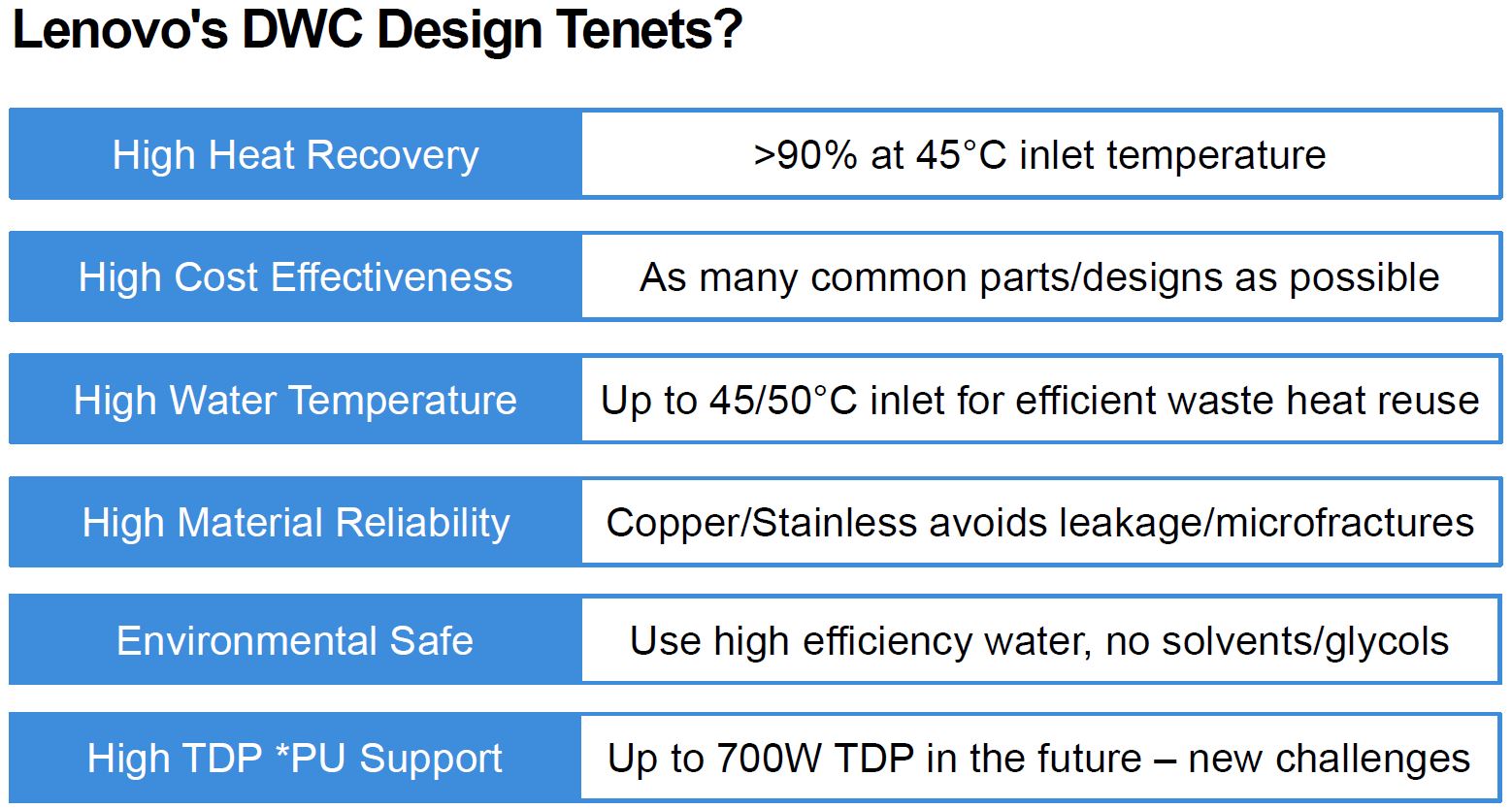

Lenovo’s answer is the Lenovo Neptune line. This encompasses a number of different technologies designed to keep high-density high-performance computing clusters cool and running at optimal performance. Lenovo is also looking to make water cooling easier to deploy by allowing higher temperature inlet water and also using the thermal energy stored in water to do other useful work.

With that, let us get to the SD650 V2 and SD650-N V2. When we previously covered the SD650-N V2, it was before Ice Lake’s formal announcement, so we only had renderings. Now there is actual hardware being deployed.

Lenovo SD650 V2 and SD650-N V2 Liquid Cooling with Neptune

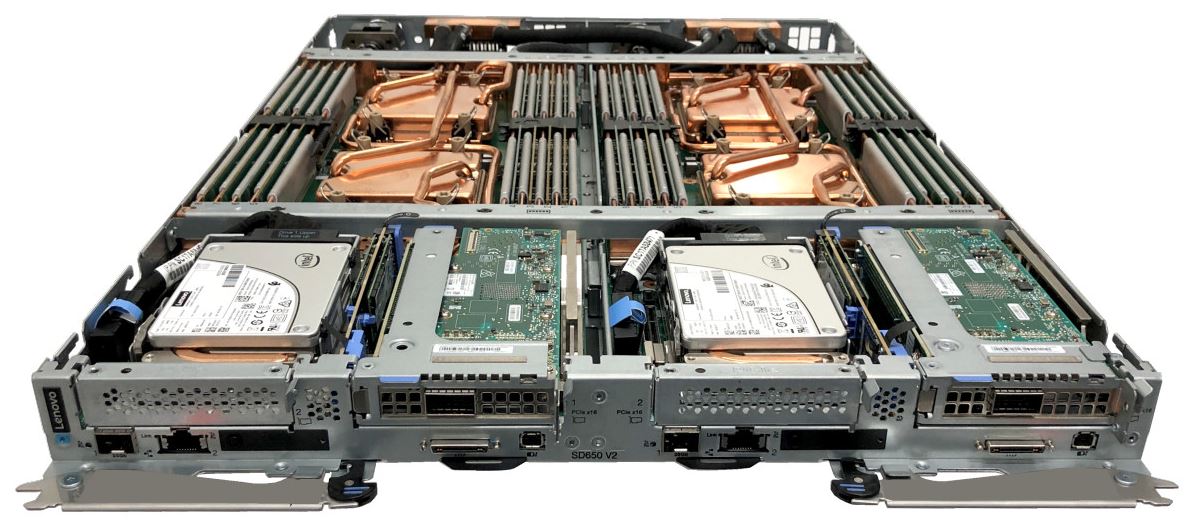

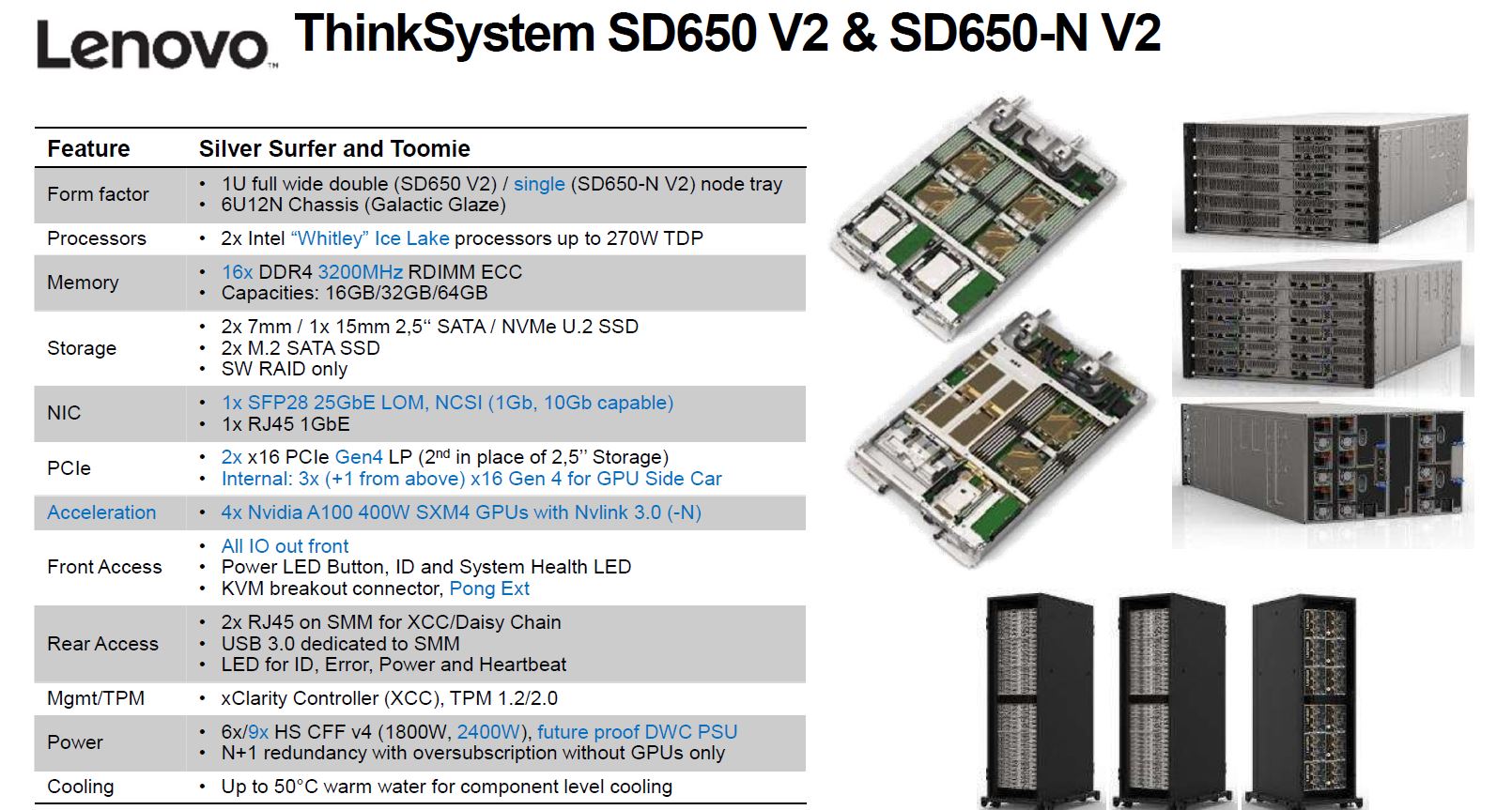

Making this easier for our readers, we are going to focus mainly on the SD650 V2. This is Lenovo’s two-node dual-socket server solution. For density purposes, this places four CPUs in 1U of rack space on a single tray.

The SD650-N V2, instead of being a two-node tray, is a single node with the second node’s space occupied by NVIDIA A100 SXM4 GPUs.

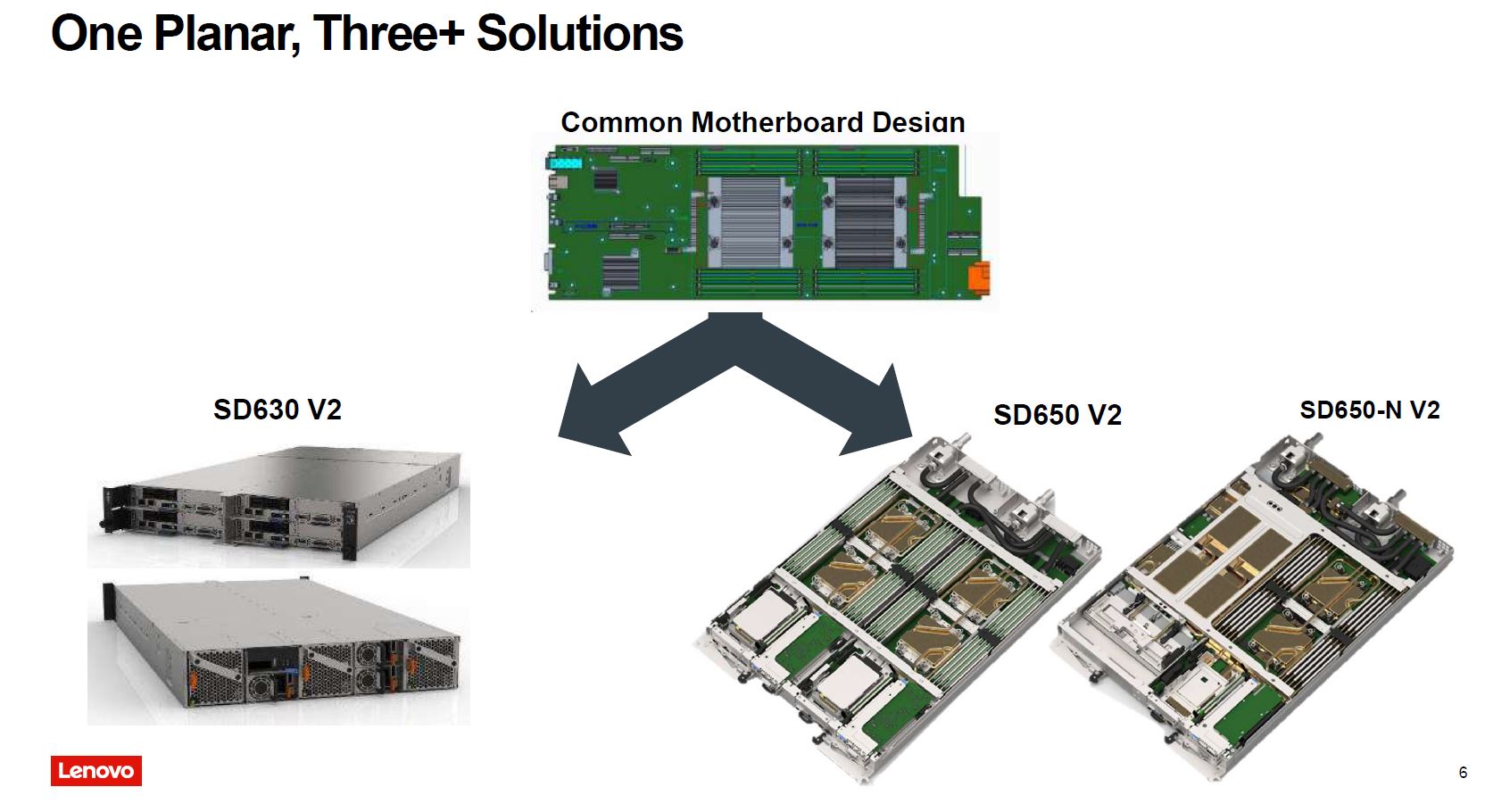

Lenovo’s goal is to utilize a common motherboard design across different products. This is something we have seen other vendors do as well. Using a single motherboard drives up volumes and helps drive firmware maturity and quality. That is why this is a common approach.

Here are the specs for the SD650 V2 and SD650-N V2. For reference on why this is V2, you can see our Lenovo ThinkSystem SD650 article for the first version. Some of the key differences are highlighted in blue, and they are big changes because the new systems are Intel Xeon Ice Lake systems.

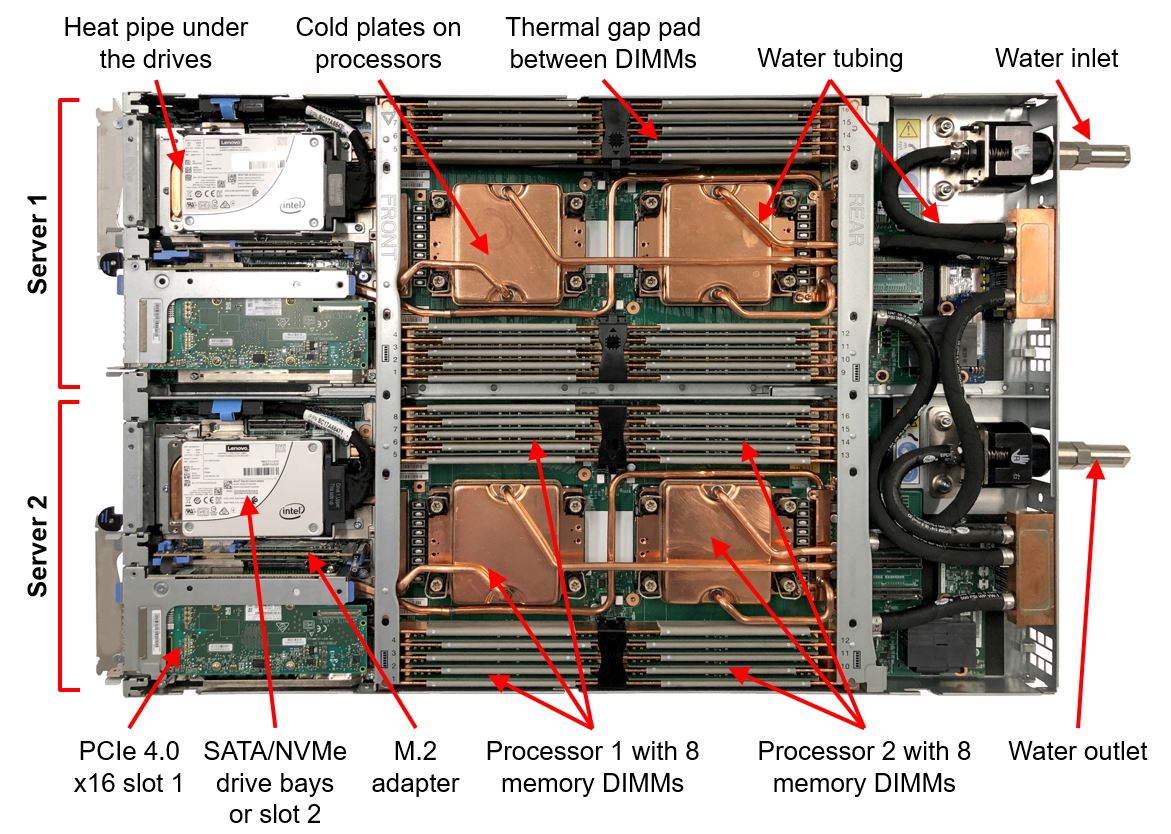

Lenovo’s solution is actually quite different from many others in the market. One may see this and think it is just another 2U4N-style server with water blocks on the CPUs and maybe the DIMMs. Lenovo is going further. It is also cooling the SSDs and the high-speed NIC, and it is using copper tubes in the chassis to minimize the potential for leaks. The net impact is that Lenovo is trying to evacuate all of the server’s heat using water. There is some radiant leakage since the server is made of metal and so forth, but one will not see fans on this server. Depending on how the water is handled outside of the nodes, liquid-cooled data centers with this degree of cooling are almost eerie. Instead of screaming fans and very hot aisles, facilities can get down to the relatively quiet murmur of pumps.

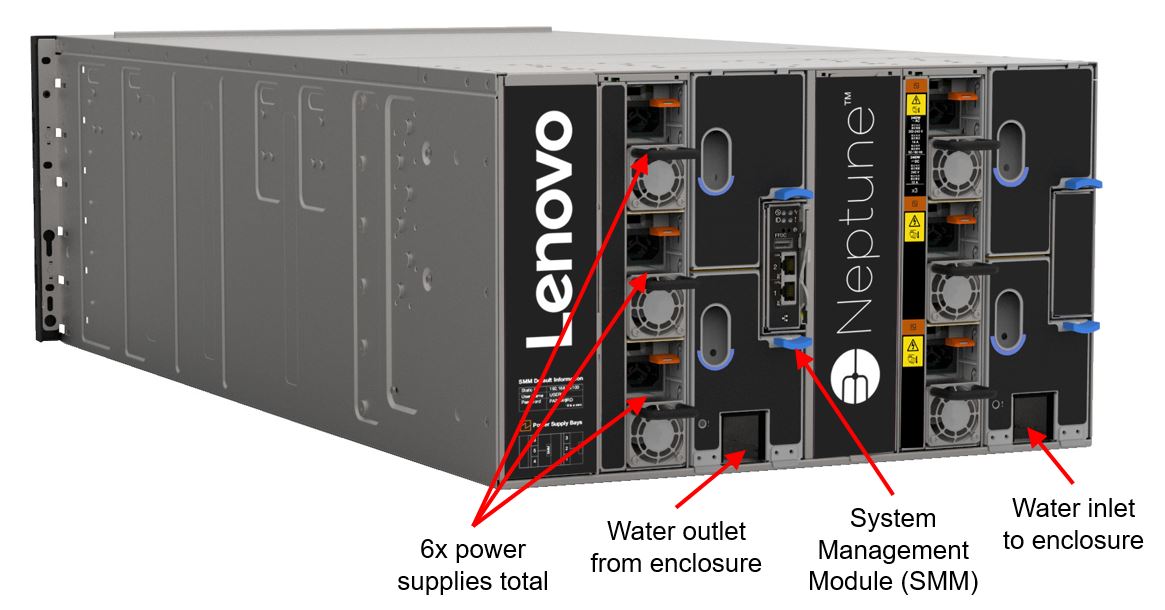

These SD650(-N) V2 nodes go into the Lenovo ThinkSystem DW612. Here, there are multiple redundant power supply blanks and then dedicated space for the water inlet and outlet.

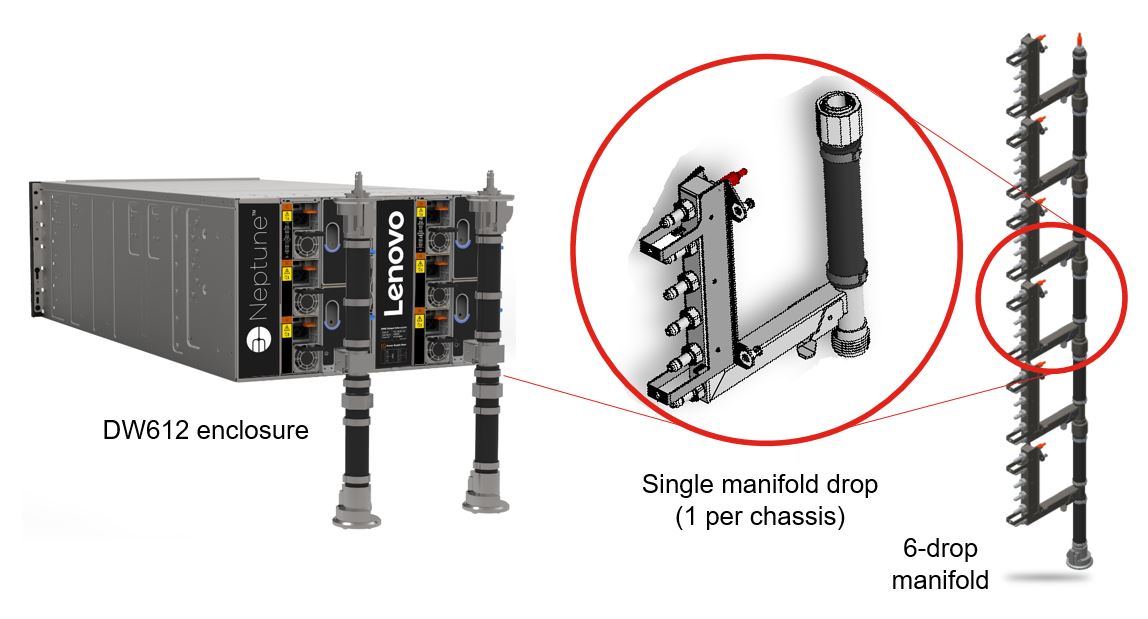

Each DW612 is connected via a manifold drop, and up to six can be put into a 42U rack, leaving some room for networking.

One fun fact I learned chatting with Scott this time is why this system looks different from many others, which tend to have many small quick disconnects at the front or rear of a chassis. The reason is its origin. Lenovo decided it needed liquid cooling, and the team that designed this solution went back to its mainframe roots and used that style of design for Neptune.

Final Words

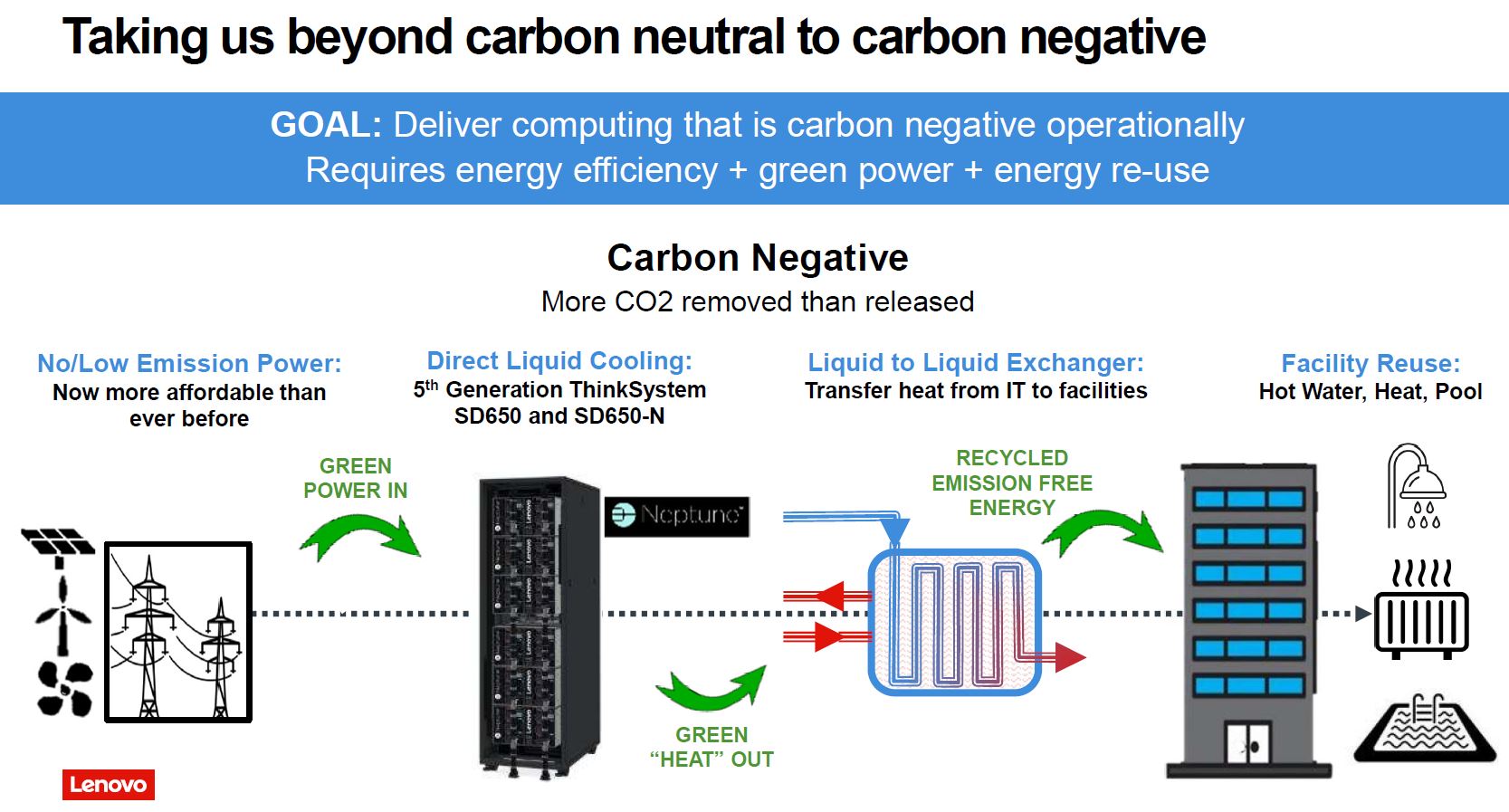

As part of Scott’s Lenovo Tech World 2021 talk, he discussed the more aspirational goal of this technology. With water being relatively non-toxic for those working in the data center, but also able to move a lot of heat, the goal could be to recycle that heat and replace oil and gas-based heat sources. Assuming those alternative heat sources are not overly efficient, the goal is to go beyond carbon neutral to carbon negative. This feels highly aspirational, but it is good to set lofty goals.

The bottom line is this: at STH we review servers from virtually every major vendor, and we review the components as well. Every systems and component vendor is talking about liquid cooling and the power delivery to these racks. It is something that we have been hearing for years, but starting in 2022 we are going to see it begin to bifurcate the market into those that can use higher density solutions and those that cannot.

You will notice that this year it has become more of a theme on STH, with our Liquid Cooling Next-Gen Servers Getting Hands-on piece as one example and much more coming. Lenovo has a nice solution, but no matter which vendor you use, if you run high-density compute you should be engaging in that conversation and forming a plan.

Liquid cooling a data center is such a wild concept to me, even though it makes total sense. This blade style approach is super interesting and I hope they come out with an AMD version as well. My biggest gripe with Cisco UCS B-series blades is that there are no AMD blade options. I’m sure there is some drivers reasoning behind it or maybe exclusive INTEL agreements in-place, still a pain though. Excited to see what STH liquid cooling looks like in the future. Keep up the great work!

The biggest issue I see is increasing the cost of the servers and racks, and all that copper tubing, distribution plates, and so forth, probably cannot be reused for a future refresh. If the servers cost enough to begin with, this may be less of an issue in percentage terms.

Is there any company providing liquid cooling for just a single rack? All of these liquid cooling solutions seem to be tailored to having a whole datacenter of infrastructure. In the past I have looked into liquid cooling to reduce noise of the servers in the office where I work, but I gave up when I couldn’t find anything professional – only PC enthusiast parts.

This summer I completed my capstone IT position: 9 years as a sys admin / data center engineer for a small/medium sized Manhattan based financial firm…Floating among several thousand Xeons and (eventually) EPYCs.

9 years ago standard 1U dual-socket pizza boxes, 4-core w/o HyperThreading Xeons, mostly full air cooled racks.

Then to 6-core HT enabled Xeon pizza boxes, still mostly full racks.

8 & 10-core Xeons led to 2U/4-node air cooled beasts, just started to have a bit less total server nodes per rack (iirc).

12-core Xeons was our first split from 100% air cooled (we added mineral oil vats. Quieter data hall, supposedly lower TCO, but a bit messy when doing maintenance and having to deal with slow motion oil leaks via cabling)…On the air cooled side, the 12 core models definitely had less servers per rack.

32-core EPYCs and even the oil filled vats couldn’t be completely filled with 2U/4N server chassis.

—

Not quite sure where we are going with “less is more” (less new servers to replace old ones given the higher power per server power budget).

If you have an older data center, with a modest power/cooling budget per rack, it would seem that at some point “less is less” (total rack compute power goes down when server generation N replaces generation N-1).

Can we bring back Dennard Scaling? Sigh.

@Gabriel, is it though?

Cisco, HP and Dell have shown an ability (though I wouldn’t necessarily call it a commitment) to put newer silicon into older blade style enclosures such as this one. I don’t think they’d put the amount of engineering into this they clearly have if it was a one-and-done solution.