NVIDIA has a custom networking module with 1.6Tbps of network capacity that does not use a standard PCIe slot, yet moves a ton of data. We first covered this module in our 2022 piece NVIDIA Cedar Fever 1.6Tbps Modules Used in the DGX H100. At the time, we had renders of the module used in NVIDIA DGX H100/H200 systems, but we did not have the actual modules. Thanks to the powers of STH, we now have a pair of the actual modules, albeit ones that have lived a rough life.


Inside the NVIDIA Cedar Module with 1.6Tbps of Networking Capacity

As a bit of context, NVIDIA makes its own custom networking modules for its DGX systems. Most of the HGX 8-GPU platforms you see use PCIe-based NICs, at least until the upcoming NVIDIA MGX PCIe Switch Board with ConnectX-8 for 8x PCIe GPU Servers and the HGX B300 NVL16. While NVIDIA has said that the Cedar modules are available to partners, most partners over the years have used PCIe NVIDIA ConnectX-7 NICs instead. The Cedar modules are different: they use a custom horizontal form factor, with cables running to the optical cages at the rear of the system.

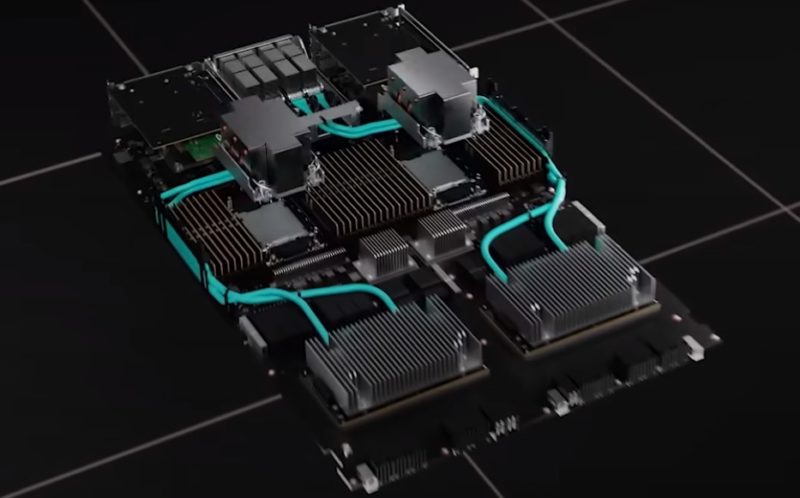

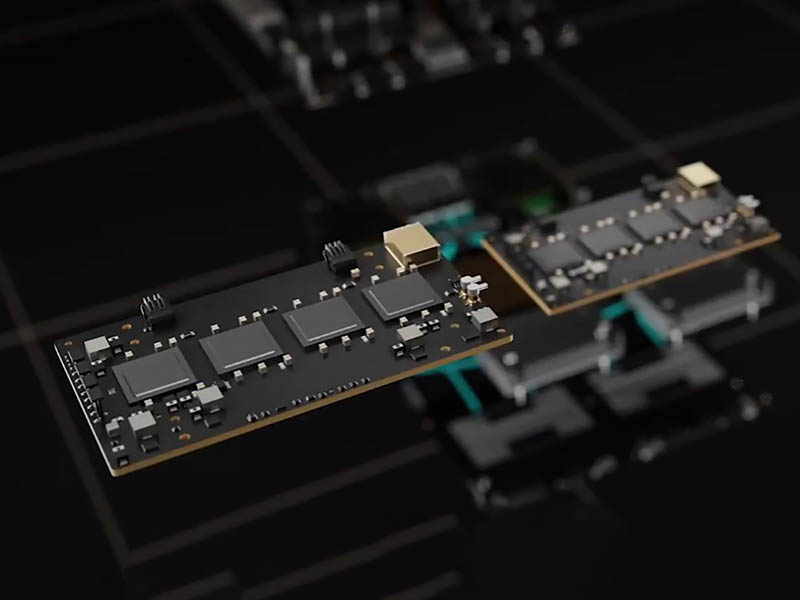

At first, the best look we got at the modules came from a screengrab of a promotional video. These images are clearly missing a lot of detail.

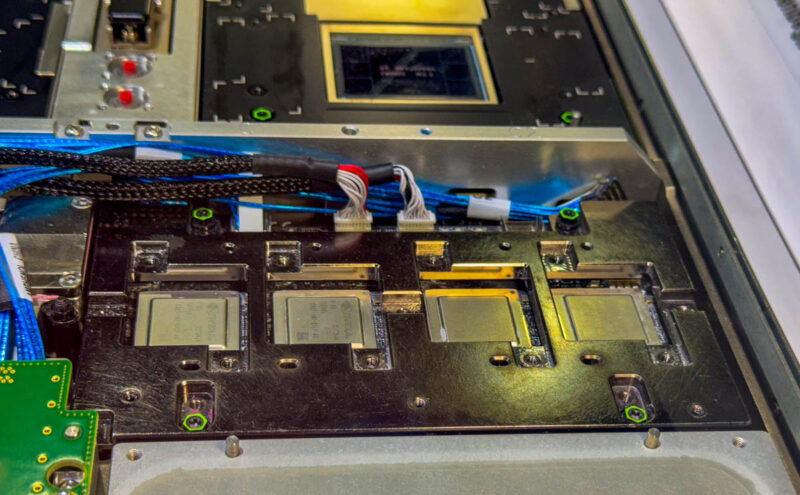

At SC24, we featured how Eviden Shows the Quad NVIDIA ConnectX-7 Cedar Module. There was, however, one challenge: those modules were installed in systems, which limited how closely we could look at them.

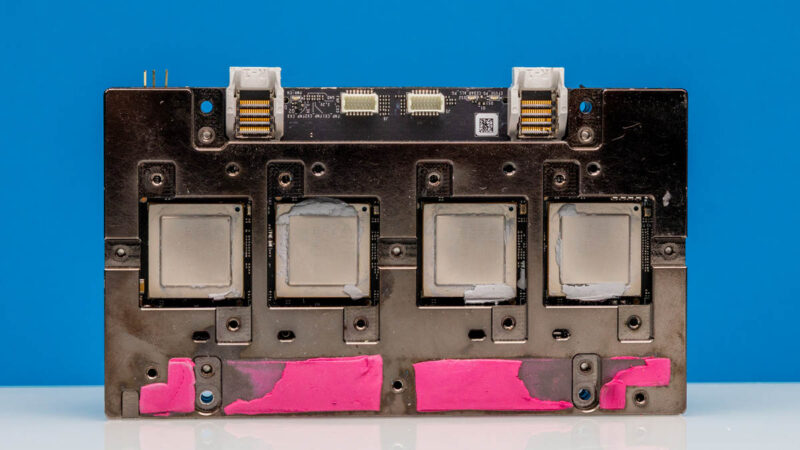

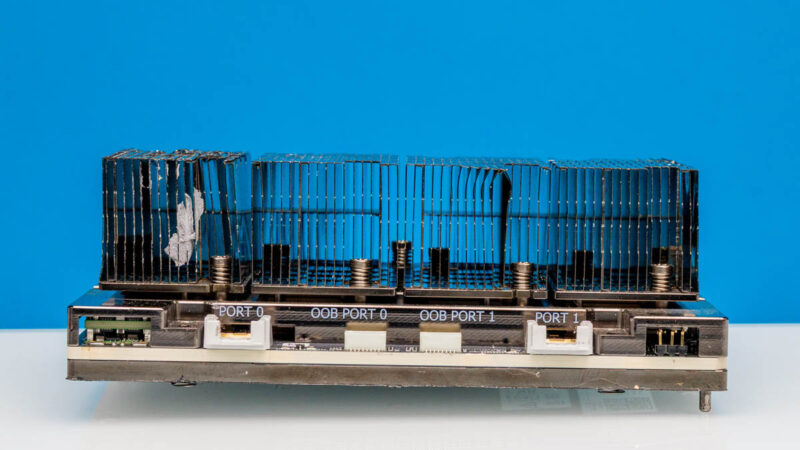

Now we have the actual modules. Here is the top with an attached heatsink for an air-cooled server. As you can see, these modules have lived a rough life, even though they were packaged well on their way to us.

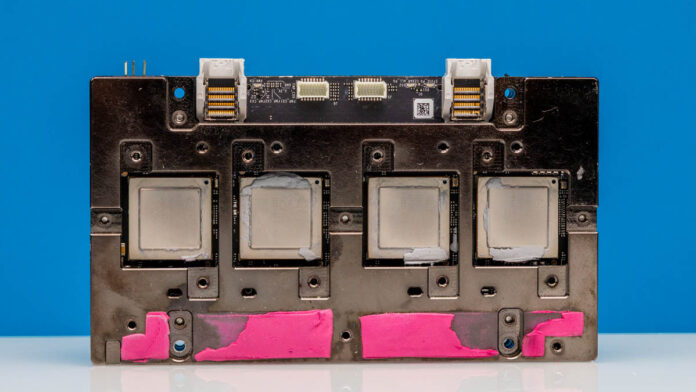

On the top face, we have four NVIDIA ConnectX-7 NICs.

Each of these is capable of 400Gbps of network throughput.
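The headline 1.6Tbps figure is simply four NICs multiplied by 400Gbps each. Here is a minimal Python sketch of that arithmetic. The constant and function names are hypothetical, the figures are raw link rates rather than measured throughput, and the two-module total assumes a system fitted with a pair of modules (eight NICs in total).

```python
# Sketch of the Cedar module bandwidth arithmetic. Names are hypothetical;
# values are raw per-link rates, not measured application throughput.
NICS_PER_MODULE = 4   # four ConnectX-7 NICs on the top face of the module
GBPS_PER_NIC = 400    # each ConnectX-7 NIC is capable of 400Gbps


def module_bandwidth_gbps(nics: int = NICS_PER_MODULE,
                          per_nic: int = GBPS_PER_NIC) -> int:
    """Total raw link capacity of one module, in Gbps."""
    return nics * per_nic


def to_tbps(gbps: int) -> float:
    """Convert Gbps to Tbps using decimal (SI) units."""
    return gbps / 1000


per_module = module_bandwidth_gbps()
# Assumption: a system that uses a pair of modules, i.e. eight NICs total.
per_pair = 2 * per_module

print(f"One module: {per_module} Gbps = {to_tbps(per_module)} Tbps")
print(f"Two modules: {per_pair} Gbps = {to_tbps(per_pair)} Tbps")
```

Keeping the per-NIC rate and NIC count as separate parameters makes it easy to rerun the same math for other NIC generations or module configurations.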

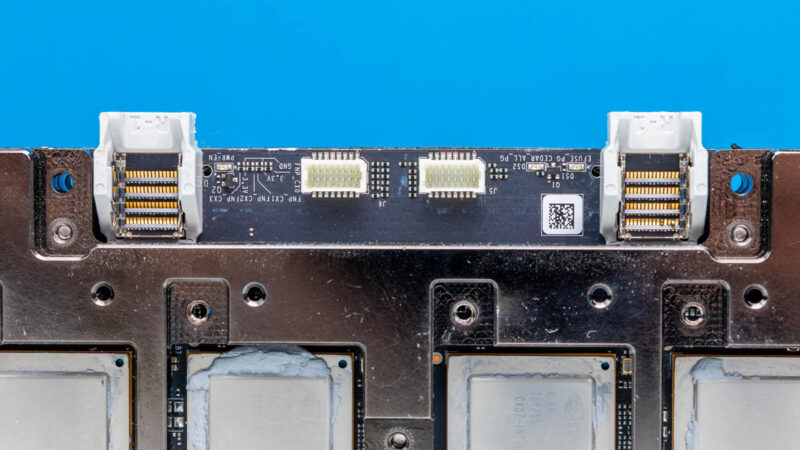

Something we rarely get to see is that NVIDIA has connectors for out-of-band (OOB) management as well as two cable headers for the cross-chassis cables.

Here are the ports from the top side. The OOB port connectors are designed for much lower-performance connectivity, which is why they are relatively simple.

Here is another angle of Port 0 and OOB Port 0.

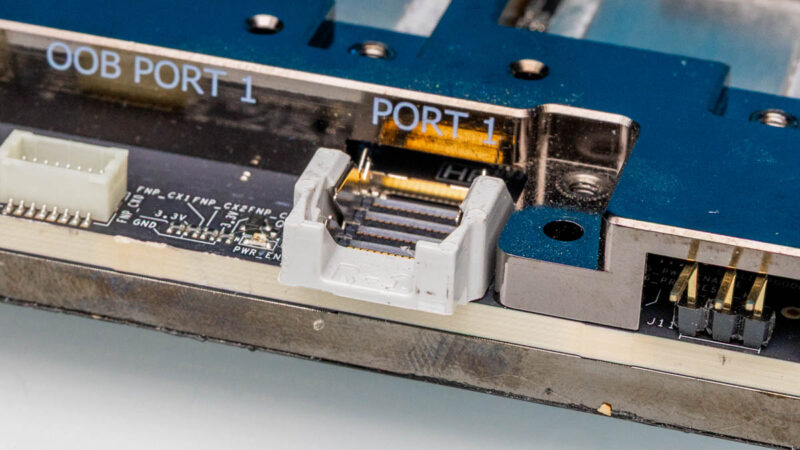

Here is OOB Port 1 and Port 1.

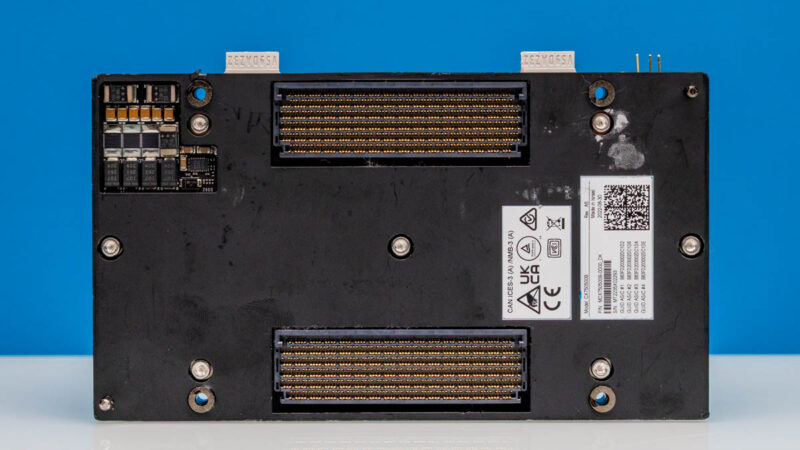

On the bottom, we have custom connectors.

These are in some ways reminiscent of the connectors on the bottom of SXM GPUs, the NVIDIA Grace Superchip, and NVIDIA Grace Hopper parts.

A big advantage of this design is that it is much more compact than using eight PCIe NICs. It is also easier to design heatsinks for these custom horizontal form factors in the context of a DGX. Perhaps more profound is that placing the NICs side by side in a horizontal orientation makes it much easier to put a single liquid cooling block over the module. That minimizes the number of cold plates and liquid cooling connections in a system.

Final Words

This is one that can simply be categorized as “neat”. These are very custom parts that only a few in the industry use, so a lot of folks have yet to see these modules. We had an opportunity to get a pair and pull off the coolers. Even though these are in a condition we would not want to deploy, we thought they were still interesting to share.

In the future, the HGX B300 largely makes this type of module less useful. We discussed how NVIDIA is doing the next step of integration with ConnectX-8 in our Substack.

