Today we are looking at the ICY DOCK ToughArmor MB852M2PO-B. This product converts an unused slimline optical drive bay into a set of two front-loading slots, each capable of housing a PCIe 4.0 NVMe M.2 SSD, and connects them to the host system via OCuLink. Much like our previous review of the MB840M2P-B, this is a niche product that I suspect will polarize our readers; some will immediately see a use for this product, and others will be scratching their heads wondering why such a device even exists. In our review, we are going to take a look at the unit, then discuss what we found when testing it.

ICY DOCK ToughArmor MB852M2PO-B Overview

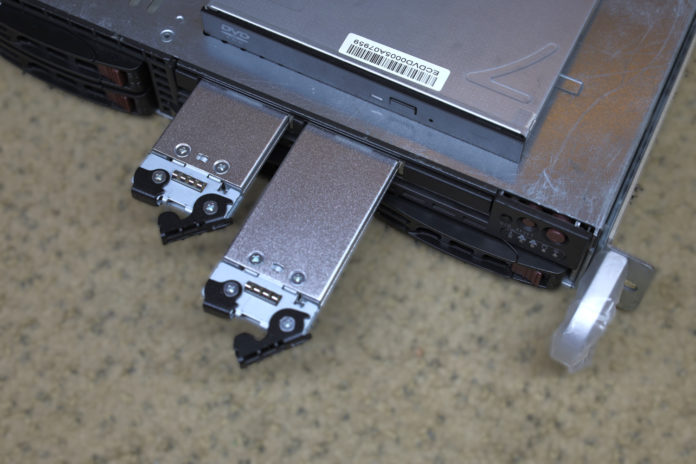

The MB852M2PO-B has a relatively simple design. The external dimensions of the enclosure mimic the dimensions and mount points of a slim optical disc drive.

I mounted the drive enclosure in a Supermicro 1U chassis, and as you can see, the ICY DOCK unit is slightly slimmer than the DVD drive I removed to make room for it.

Once installed, the MB852M2PO-B provides room for two drive sleds that are identical in design to the one in the MB840M2P-B. The sleds are mostly metal, with a sliding retention mechanism that holds an M.2 NVMe SSD of any size between 2230 (30mm) and 22110 (110mm).
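M.2 size codes encode the module's dimensions in millimeters: the first two digits are the width and the remaining digits are the length, so 2230 is 22mm × 30mm and 22110 is 22mm × 110mm. As a small illustration, the hypothetical helper below decodes a size code and checks it against the sled's 30mm to 110mm range; it assumes, as the review states, that any 22mm-wide module in that length range fits.

```python
import re

# Sled length limits from the review: 2230 (30mm) through 22110 (110mm).
SLED_MIN_LENGTH_MM = 30
SLED_MAX_LENGTH_MM = 110


def parse_m2_size(code: str) -> tuple[int, int]:
    """Split an M.2 size code such as "2280" or "22110" into (width_mm, length_mm).

    The first two digits are the module width; the remaining two or three
    digits are the module length.
    """
    match = re.fullmatch(r"(\d{2})(\d{2,3})", code)
    if match is None:
        raise ValueError(f"not an M.2 size code: {code!r}")
    return int(match.group(1)), int(match.group(2))


def fits_sled(code: str) -> bool:
    """Hypothetical check: 22mm wide and within the sled's length range."""
    width, length = parse_m2_size(code)
    return width == 22 and SLED_MIN_LENGTH_MM <= length <= SLED_MAX_LENGTH_MM
```

For example, `fits_sled("2280")` is true, while a 30mm-wide `"3042"` module is rejected on width.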

The SSD of your choosing simply fits into the caddy. After that, you slide the metal retention clip into place and then install the cover.

On the MB852M2PO-B, each sled has a corresponding OCuLink port on the rear of the enclosure. The enclosure also has a SATA power connector, which is required for operation.

Note that no OCuLink cables are included with the dock; they must be supplied by the end user.

Next, we are going to discuss what we found when testing the unit.

Hot-swap Support

Much like the MB840M2P-B, hot-swap support for the MB852M2PO-B depends on hot-swap NVMe support at the platform level. For example, my test bench is a desktop Ryzen system and does not support hot-swap/hot-plug functionality. Physically, the MB852M2PO-B supports hot-swap, but it only provides the physical interface that allows it; your system still has to support hot-swap for you to take advantage of it.

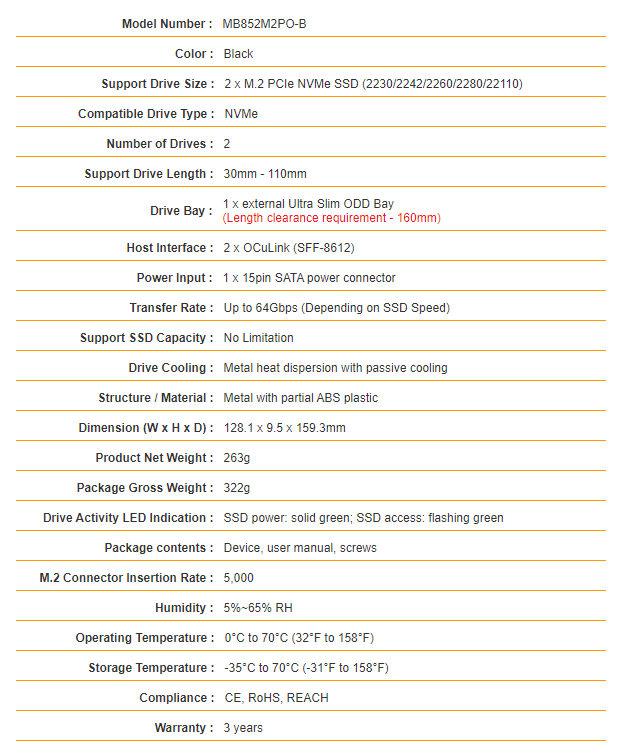

ToughArmor MB852M2PO-B Specs

ICY DOCK provides a product spec sheet for the ToughArmor MB852M2PO-B which you can see here:

In an improvement over the ToughArmor MB840M2P-B, the MB852M2PO-B officially lists PCIe 4.0 as supported. Back when I reviewed the MB840M2P-B, I had no problems with Gen4, but support was unofficial. As you might expect, PCIe 4.0 worked without issue on the MB852M2PO-B.

To connect the ToughArmor MB852M2PO-B to my system, which does not have native OCuLink interfaces, I tested both an M.2-to-OCuLink adapter and a PCIe-to-OCuLink adapter. Both worked fine, though ideally a device like this would use the OCuLink ports already present on your server. If you do need to purchase an adapter, ICY DOCK provides a list of officially compatible devices on its FAQ page.

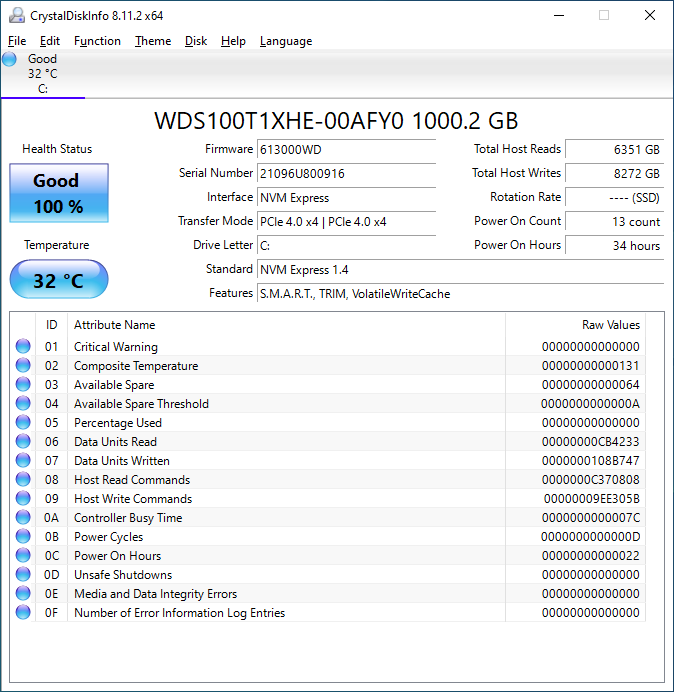

Performance

Since the ICY DOCK ToughArmor MB852M2PO-B is largely a physical interface converter with no significant logic onboard, we would not expect it to have any impact on actual performance. I ran some tests using both PCIe 4.0 and PCIe 3.0 SSDs, and noted no performance difference between drives installed in a ToughArmor sled and drives installed directly in the motherboard M.2 slots.

Final Words

The ICY DOCK ToughArmor MB852M2PO-B is a well-made niche product. Many legacy servers have unused optical drives or drive bays on the front, and something like this would easily allow for front-loading of NVMe drives for boot purposes rather than using an internal SATA DOM or similar.

Servers often have unused PCIe lanes or OCuLink ports internally, and the ToughArmor MB852M2PO-B lets those lanes provide useful functionality rather than sitting idle. Even if it is not used to boot the system, it could serve as fast removable storage for shuttling data between two systems that both have ICY DOCK enclosures accepting the same sled model. For example, those who need to move terabytes of data across town but cannot do so over a network can use this ToughArmor product to greatly increase SneakerNet efficiency. One good example is moving machine learning/AI datasets from field sensor aggregators to an ingestion node. Another is videographers who need to send copies of their data to editors and effects artists. Even if your system does not support hot-swap or hot-plug of NVMe drives, the time cost of a reboot could easily be recouped by the speed of a native PCIe 4.0 transfer.
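To put rough numbers on that trade-off, here is a back-of-the-envelope sketch. It computes the theoretical PCIe 4.0 x4 link ceiling from the 16 GT/s per-lane rate and 128b/130b encoding, then compares transfer times for a hypothetical 4TB dataset against 1GbE and 10GbE line rates. These are upper bounds that ignore protocol overhead; in practice the SSDs on either end (especially once a drive's write cache fills) will usually be the bottleneck, so treat the result as an order-of-magnitude comparison only.

```python
def pcie_link_bytes_per_sec(gt_per_s: float, lanes: int) -> float:
    """Theoretical one-direction PCIe ceiling, before packet/protocol overhead.

    PCIe 3.0 and 4.0 use 128b/130b encoding: 128 of every 130 bits carry data.
    """
    return gt_per_s * 1e9 * lanes * (128 / 130) / 8


def transfer_seconds(size_bytes: float, bytes_per_sec: float) -> float:
    """Idealized time to move size_bytes at a constant rate."""
    return size_bytes / bytes_per_sec


DATASET_BYTES = 4e12  # hypothetical 4 TB (decimal) dataset

links = {
    "PCIe 4.0 x4": pcie_link_bytes_per_sec(16.0, 4),
    "10GbE line rate": 10e9 / 8,
    "1GbE line rate": 1e9 / 8,
}

for name, rate in links.items():
    minutes = transfer_seconds(DATASET_BYTES, rate) / 60
    print(f"{name:>16}: {minutes:7.1f} min")
```

Under these assumptions the PCIe 4.0 x4 link works out to about 7.9 GB/s, so 4TB moves in roughly 8.5 minutes, versus about 53 minutes at 10GbE and almost 9 hours at 1GbE. A reboot measured in minutes is small by comparison.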

In the end, I think the MB852M2PO-B makes a bit more sense overall than the MB840M2P-B. At $178 on Amazon, the ICY DOCK ToughArmor MB852M2PO-B is somewhat expensive, but I think it will let server administrators make better use of resources already present in their servers. Buyers also need to factor in the cost of cables and, depending on the system, possibly adapters. If your servers have OCuLink ports and an unused or underutilized ODD bay, the MB852M2PO-B can expose some very useful additional functionality, and for that it gets my recommendation.

I like this way more than the 1x M.2 PCI-E thingy. I am really not sure what people are using optical drives for in servers these days. The only thing I could see being useful is a BD-R drive that supports writing 100+GB discs, as a pseudo-tape drive for archival and backup of backups.

Optical drives? Aren’t those related to 3.5″ diskettes?

We should ditch PCIe slots in favour of cabled OCuLink connections across the PC & server macro-ecosystem. It would make for smaller machines and better thermal management solutions.

$180 with no cables included definitely cooled my home lab enthusiasm a bit, and home labs are certainly one place where you'll find a lot of servers that shipped with an optical drive and not enough M.2.

I wonder if making a rather niche product to their standards (in my experience ICY DOCK doesn't make garbage) simply means it costs more than one would prefer, or if they are targeting people with real, professional, in-production servers looking to give them a late-life boost, for whom a couple hundred bucks is a relative pittance.

This is a pretty interesting product for giving some legs to otherwise old kit. Definite overkill for us homelabbers, but plenty of small businesses that are handled by one semi-tech-savvy person or a local IT guy could use this to give either their old kit or some refurbished kit a significant performance and longevity boost. Loads of Sandy Bridge- and Ivy Bridge-era servers that came with optical drives are still perfectly serviceable. I'd challenge the business case for doing this to an old server vs. buying a new one and consolidating, but if your business is already virtualised and on the minimum sensible number of nodes, it could be an option for redundant VM and/or DB storage.

I'm using it in my Dell PowerEdge and I must admit it is working perfectly fine for me. The drives aren't overheating like I initially thought they would, speeds are good, and it feels solid in hand. Regardless of the price tag, no other storage enclosure manufacturer has anything quite like this. I wish it were hot-swappable, but that depends more on the host hardware and the limitations of NVMe than on the unit itself. I hope it lasts me a long time.

Hmm. What everyone has to realize is that this is a bet on newer server systems with SAS 4.0/PCI-E 4.0 embedded controllers. The OCuLink interface is somewhat discouraging, as many people have not yet adopted newer technology such as OCuLink. For those who don't know, OCuLink is like USB-C for SAS 4.0/PCI-E 4.0, if you want to see it that way.

As for this cage, it's perfectly fine, but it is clearly not for the end consumer at this time. It is for those who have a newer system and want a clean build with easily accessible hot-swap M.2 bays.

I confess I don't know why you would need to hot-swap these little things right now, but enterprise workloads and server applications often find a way.

This is a nice little niche device, but nothing more. Good article though. Thanks for this writeup.