This week, Gigabyte announced the availability of a new line of multi-node servers. The company has made 2U 4-node servers for many years, but it is now moving into even higher-density designs based on both AMD EPYC and Intel Xeon. It has also shown off liquid-cooled blade server designs.

Gigabyte B363 Multi-Node Servers

The Gigabyte B363 was shown at Computex, and it is now officially coming to market. The B363 comes in both AMD EPYC 4005 / Ryzen 9000 series and Intel Xeon 6300 series versions, each fitting 10x single-socket nodes in 3U. The initial versions have dual 1GbE NICs, but let us just say there is, I think, a good reason for Gigabyte to offer faster onboard networking as well.

In the rear, Gigabyte has four power supplies for redundancy, along with shared chassis management NICs. A feature that is easy to overlook here is the set of OCP NIC 3.0 slots. There are three slots that can be used with multi-host NICs. Instead of consolidating networking and cabling with a chassis switch, one can use multi-host NICs, which reduce complexity by removing a layer of switching.

The Gigabyte B343-C40 is the AMD version, while the B343-X40 is the Intel version. Although the specs say the systems support three OCP NIC 3.0 multi-host adapters, the systems appear to have five slots.

For dedicated hosting companies, this deployment model is attractive because the nodes share power supplies, chassis, and cooling, which lowers the total CapEx needed to get nodes online.
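To make the shared-infrastructure point concrete, here is a rough component count in Python. The 3U, 10-node layout and the four shared power supplies come from the text above; the baseline of 10 standalone 1U servers with two redundant PSUs each is a hypothetical assumption for illustration, not a Gigabyte comparison.

```python
# Hypothetical comparison: 10 standalone 1U servers vs. one shared 10-node 3U chassis.
NODES = 10
STANDALONE_U = 1       # assumption: each standalone server is 1U
STANDALONE_PSUS = 2    # assumption: each standalone server has 2 redundant PSUs
CHASSIS_U = 3          # 10 nodes in 3U, from the text
CHASSIS_PSUS = 4       # four shared power supplies, from the text

def footprint(shared: bool) -> tuple[int, int]:
    """Return (rack units, power supplies) needed to bring NODES online."""
    if shared:
        return CHASSIS_U, CHASSIS_PSUS
    return NODES * STANDALONE_U, NODES * STANDALONE_PSUS

standalone_u, standalone_psus = footprint(shared=False)
shared_u, shared_psus = footprint(shared=True)
print(f"standalone: {standalone_u}U, {standalone_psus} PSUs")
print(f"shared chassis: {shared_u}U, {shared_psus} PSUs")
```

Under these assumptions, the shared chassis needs 3U and 4 PSUs versus 10U and 20 PSUs. Real savings depend on the actual standalone platform being replaced.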

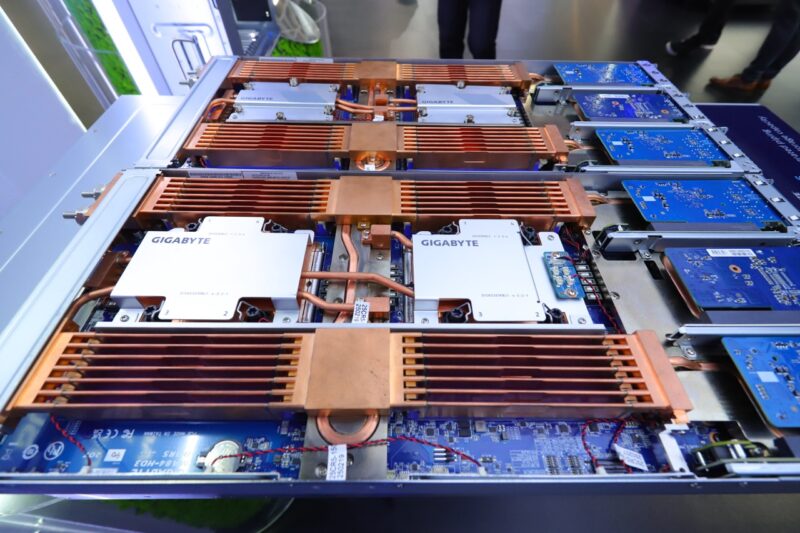

Gigabyte B683 6U Blade Servers at Computex 2025

At Computex 2025, Gigabyte showed a few early B683 blade servers. These are 6U, 10-node designs.

What makes these notable is not just that they are multi-node systems: the company is also working on liquid-cooled designs. In the prototype nodes, you can see not only CPU and memory liquid cooling, but also rear nozzles that connect to an internal manifold. That means each node does not need its own external warm and cool liquid connections to a rack manifold.

That may not seem like a big deal to some, but 20x 500W TDP CPUs in a 6U chassis would be around 10kW, putting total system power in line with, and sometimes denser than, modern air-cooled 8-GPU AI servers.
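The power figure above is simple arithmetic, sketched here in Python using only the numbers in the text (20 CPUs at 500W TDP in 6U). It counts CPU TDP only; memory, NICs, storage, pumps, and fans would add to the real chassis draw.

```python
# Back-of-the-envelope CPU power for one B683 chassis, using the article's figures.
CPUS = 20          # 20 CPUs across the 10-node, 6U chassis
CPU_TDP_W = 500    # per-CPU TDP used in the text
CHASSIS_U = 6

cpu_power_w = CPUS * CPU_TDP_W       # 10,000 W
cpu_power_kw = cpu_power_w / 1000    # 10.0 kW
kw_per_u = cpu_power_kw / CHASSIS_U  # about 1.67 kW per rack unit

print(f"{cpu_power_kw:.1f} kW total, {kw_per_u:.2f} kW/U (CPU TDP only)")
```

This prints `10.0 kW total, 1.67 kW/U (CPU TDP only)`, which is why removing that heat with air alone becomes difficult at this density.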

Final Words

We cannot go into more detail on unreleased products, but Gigabyte has more versions of these multi-node systems in the works. Given the popularity of multi-node form factors in the entry-level Intel Xeon and AMD EPYC markets, these make a lot of sense. Also, liquid-cooled blade servers are going to become a more mainstream option as AI data centers transition to liquid cooling in 2026.

Stay tuned to STH for more on these soon.

For the B343:

The reason there are only 3 usable OCP 3.0 slots in the back is simple. Each blade has a PCIe x4 link going to the OCP slots, so 10 blades need 40 lanes, which fit within the 48 lanes of 3 OCP x16 slots. Using more OCP slots would require redesigning both the motherboards and the connections to the chassis.
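The lane budget in this comment can be checked with a short Python sketch. It assumes, without confirmation from Gigabyte, that each x16 multi-host OCP NIC is split into x4 host links, so one NIC serves up to four blades; the in-order blade-to-slot mapping is a hypothetical illustration, not the documented wiring.

```python
# Sketch of the commenter's PCIe lane budget for the B343 (10 blades, 3 OCP x16 slots).
# Assumption: each x16 multi-host OCP NIC is split into x4 host links,
# so one NIC can serve up to 16 // 4 = 4 blades.
BLADES = 10
LANES_PER_BLADE = 4
OCP_SLOTS = 3
LANES_PER_SLOT = 16

def lane_budget() -> tuple[int, int]:
    """Return (lanes needed by all blades, lanes offered by the usable OCP slots)."""
    return BLADES * LANES_PER_BLADE, OCP_SLOTS * LANES_PER_SLOT

def assign_blades() -> dict[int, list[int]]:
    """Map blade indices to OCP slots in order, filling each slot's x4 host links."""
    hosts_per_slot = LANES_PER_SLOT // LANES_PER_BLADE
    mapping: dict[int, list[int]] = {slot: [] for slot in range(OCP_SLOTS)}
    for blade in range(BLADES):
        slot = blade // hosts_per_slot
        if slot >= OCP_SLOTS:
            raise ValueError("not enough OCP host links for all blades")
        mapping[slot].append(blade)
    return mapping

needed, offered = lane_budget()
print(needed, offered)   # 40 48
print(assign_blades())   # {0: [0, 1, 2, 3], 1: [4, 5, 6, 7], 2: [8, 9]}
```

Under this assumption, two x4 host links on the third NIC go unused, which fits the comment's point that more slots would add lanes no blade is wired to use.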

Also, the AMD version allows for on-blade networking with 2x 1GbE, 2x 10GbE, or 2x 25GbE, while the Intel version is limited to 2x 1GbE. Looking at the manual, it seems that both the AMD and Intel versions have an internal removable IO board, but Gigabyte didn't want to invest in the aging Intel platform to offer faster NIC options.

Are we really doing this? We are going to liquid-cool the entire data center?

I suspect this gen of AI servers is getting so much money thrown at it that, by God, America and apple pie, we WILL make the number bigger, the time shorter and the line go up, at any cost.

Maybe I’m just looking real hard at the “at any cost” side of the equation and not hard enough at the “line go up” side. I’m rarely tech-negative, but I just suspect that few of us will look back in 5 years and be proud.

The B363 is limited to 160 CPU cores / 20 memory channels / 40x 48GB DIMMs / 20 NVMe drives in 3U; that’s way lower than some EPYC 9005 or Xeon 6900 2U servers.
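The density gap in this comment can be made concrete per rack unit. In this Python sketch, the B363 totals are the commenter's figures (10 nodes × 16 cores and 2 memory channels each). The 2U comparison server is a hypothetical dual-socket EPYC 9005 system assumed to use 192-core CPUs with 12 memory channels per socket; it is an illustration, not a specific product.

```python
# Per-U density comparison behind the comment.
from dataclasses import dataclass

@dataclass
class Server:
    name: str
    rack_units: int
    cores: int
    memory_channels: int

    def cores_per_u(self) -> float:
        return self.cores / self.rack_units

    def channels_per_u(self) -> float:
        return self.memory_channels / self.rack_units

# Commenter's totals: 10 nodes x 16 cores, 10 nodes x 2 memory channels, in 3U.
b363 = Server("B363 (10 nodes)", rack_units=3, cores=10 * 16, memory_channels=10 * 2)
# Hypothetical 2U dual-socket EPYC 9005 box (assumed 192 cores, 12 channels per socket).
epyc_2u = Server("hypothetical 2U 2P EPYC 9005", rack_units=2,
                 cores=2 * 192, memory_channels=2 * 12)

memory_gb = 40 * 48  # 40 DIMMs x 48GB from the comment

for s in (b363, epyc_2u):
    print(f"{s.name}: {s.cores_per_u():.1f} cores/U, {s.channels_per_u():.1f} channels/U")
print(f"B363 memory: {memory_gb} GB")
```

This works out to roughly 53.3 cores/U for the B363 versus 192 cores/U for the assumed 2U box. Raw density is not what the multi-node design optimizes for, though; per-node isolation does not show up in these numbers.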

What’s the real advantage besides physical separation?

@Joel Nolan

Do it? Unfortunately, it’s already been done, and is being done. In Factorio fashion, “the data center must grow”. And also, “$$$$$$$$ line must go up”.