AMD EPYC 3451 Benchmarks

For this exercise, we are using our legacy Linux-Bench scripts, which help us see the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts. Starting with our 2nd Generation Intel Xeon Scalable benchmarks, we are adding a number of our workload testing features to the mix as the next evolution of our platform.

At this point, our benchmarking sessions take days to run and we are generating well over a thousand data points. We are also running workloads for software companies that want to see how their software works on the latest hardware. As a result, this is a small sample of the data we are collecting and can share publicly. Our position is always that we are happy to provide some free data but we also have services to let companies run their own workloads in our lab, such as with our DemoEval service. What we do provide is an extremely controlled environment where we know every step is exactly the same and each run is done in a real-world data center, not a test bench.

We are going to show off a few results, and highlight a number of interesting data points in this article.

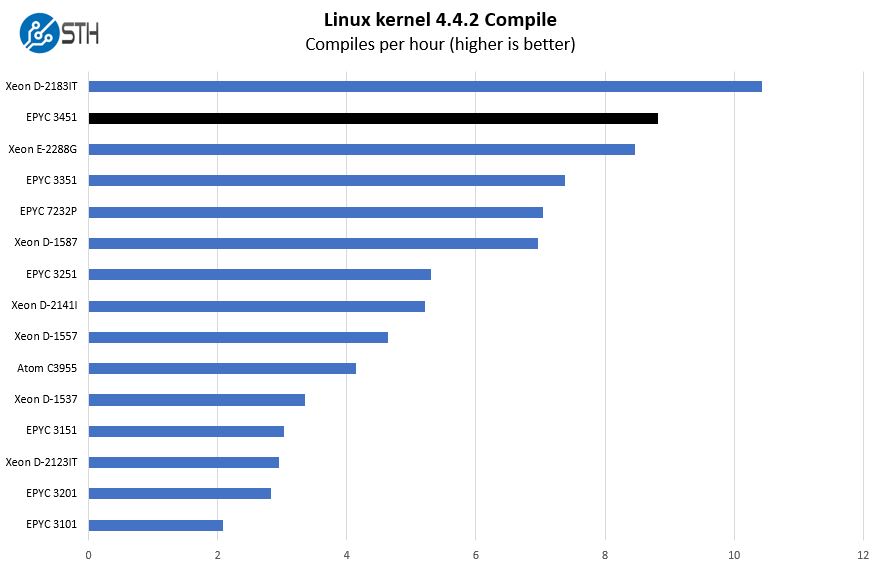

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take the Linux 4.4.2 kernel from kernel.org and a standard configuration file, then make the standard auto-generated configuration utilizing every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read.
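The conversion from a timed run to compiles per hour is simple arithmetic. As a minimal sketch, assuming the benchmark records the wall-clock time of one full compile in seconds (the function name and the 90-second figure below are illustrative assumptions, not part of Linux-Bench and not a measured result):

```python
def compiles_per_hour(elapsed_seconds: float) -> float:
    """Convert the wall-clock time of one compile into compiles per hour."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return 3600.0 / elapsed_seconds


# Hypothetical timing for illustration only: a compile that takes 90 seconds.
example_rate = compiles_per_hour(90.0)
print(f"{example_rate:.1f} compiles per hour")
```

Expressed this way, a higher number is better, which matches how most of the other charts read.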

We are adding several layers of results here. These include the Atom C3955, a 16-core Atom part, a Xeon E-2288G top-end Xeon E part, and an AMD EPYC 7232P part to show a delta to the mainstream socketed platforms. We have all EPYC 3000 SKUs except the EPYC 3255 which is an extended temperature part. In these results, we also have a number of higher-core count Xeon D-1500 and D-2100 parts for comparison.

As you can see, the EPYC 3451 is set to perform near the top of all of the charts we are about to show you.

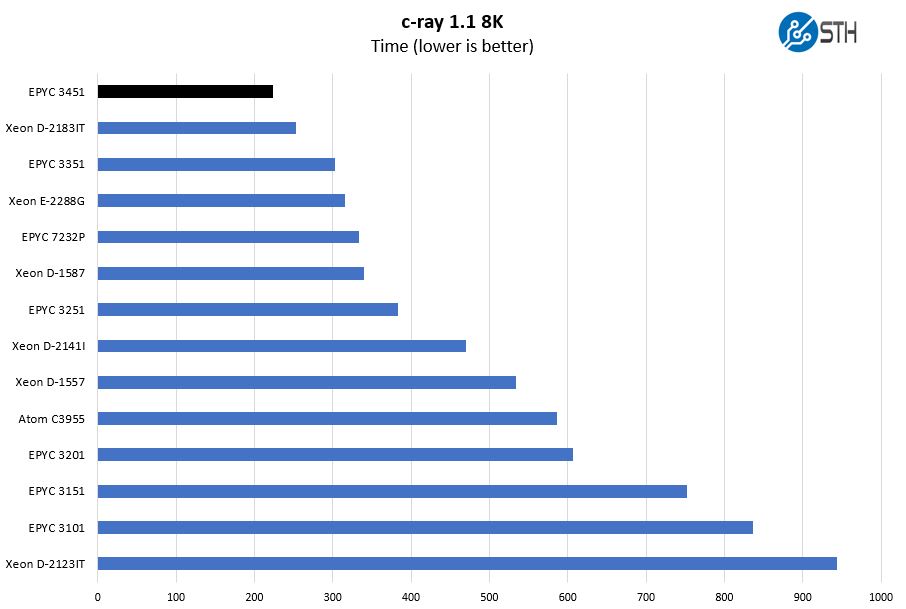

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads. We are going to use our 8K results which work well at this end of the performance spectrum.

AMD Zen and newer architectures perform well on these types of benchmarks. That is why AMD marketing uses them so heavily. We instead are going to use it to point out the scaling between the single and dual die parts and how much the new parts expand the performance range of the embedded series.

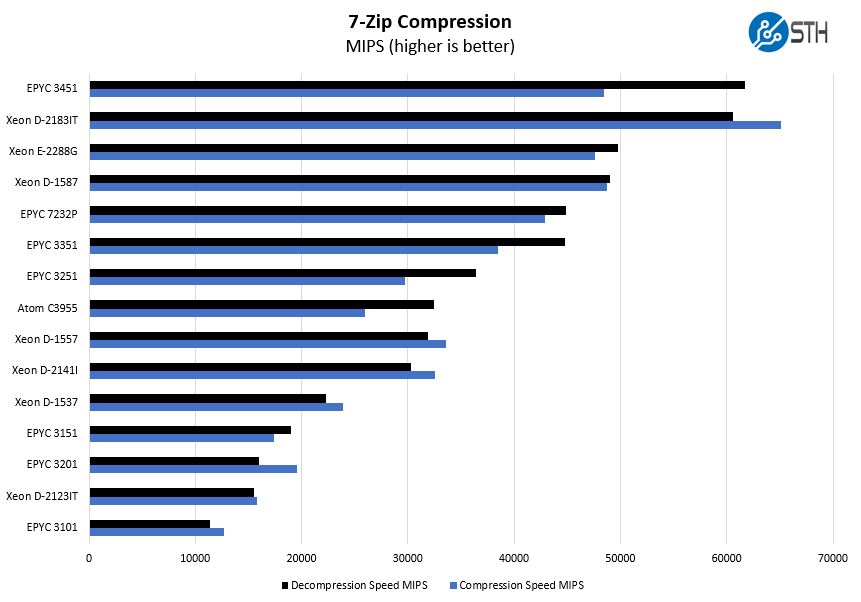

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

Here we see the EPYC 3451 rival the Xeon D-2183IT in terms of decompression speed. It falls behind in terms of compression speed. A strange quirk is that we always sort this chart by decompression speed, and have been doing so for 5+ years. As a result, every so often we get a chart like this where had we sorted on compression we would see a few results flip.
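To illustrate how the sort key changes the ordering, here is a small sketch. The CPU names and scores are placeholder values chosen only to show the effect; they are not measured results from this review, and the `SevenZipResult` type and `ranked` helper are hypothetical.

```python
from typing import NamedTuple


class SevenZipResult(NamedTuple):
    cpu: str
    compress_mips: int
    decompress_mips: int


# Placeholder values for illustration only, NOT measured results.
results = [
    SevenZipResult("CPU A", 50000, 62000),
    SevenZipResult("CPU B", 54000, 60000),
    SevenZipResult("CPU C", 40000, 45000),
]


def ranked(rows, key):
    """Return CPU names ordered from highest to lowest on the given field."""
    ordered = sorted(rows, key=lambda r: getattr(r, key), reverse=True)
    return [r.cpu for r in ordered]


by_decompress = ranked(results, "decompress_mips")
by_compress = ranked(results, "compress_mips")
print(by_decompress)  # CPU A ranks first on decompression
print(by_compress)    # CPU B ranks first on compression
```

With two parts that are close to each other, the top positions swap depending on which column drives the sort, which is the kind of flip described above.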

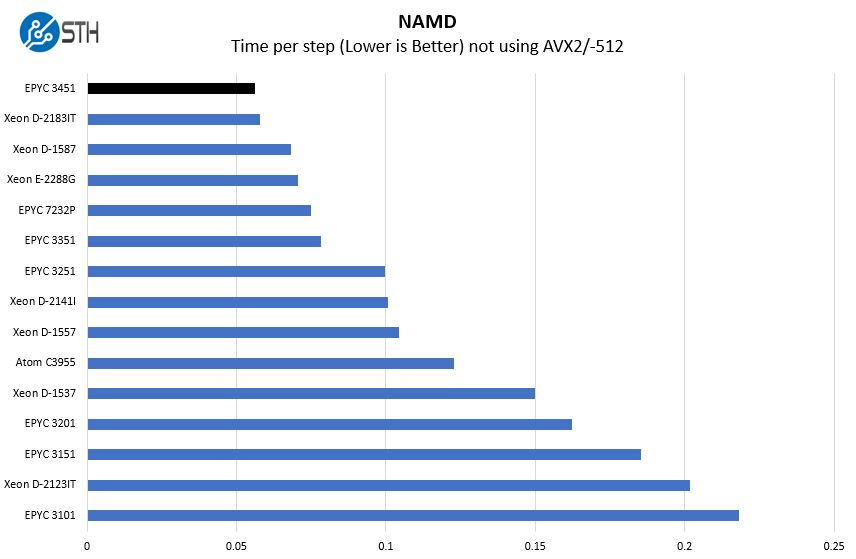

NAMD Performance

NAMD is a molecular modeling benchmark developed by the Theoretical and Computational Biophysics Group in the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign. More information on the benchmark can be found here. With GROMACS we have been working hard to support AVX-512 and AVX2 architectures. Here are the comparison results for the legacy data set:

When we take away specific AVX2 and AVX-512 optimizations, our NAMD results again show the dual-die parts perform extremely well, especially at 16 cores.

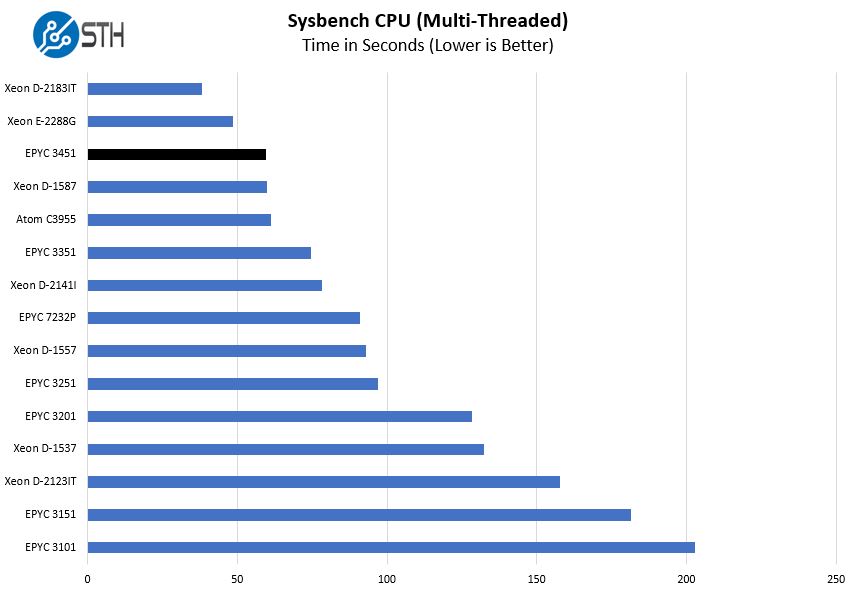

Sysbench CPU test

Sysbench is another one of those widely used Linux benchmarks. We specifically are using the CPU test, not the OLTP test that we use for some storage testing.

This was an interesting result where we saw the EPYC 3451 still perform better than the 16-core Xeon D-1587, but by a smaller margin.

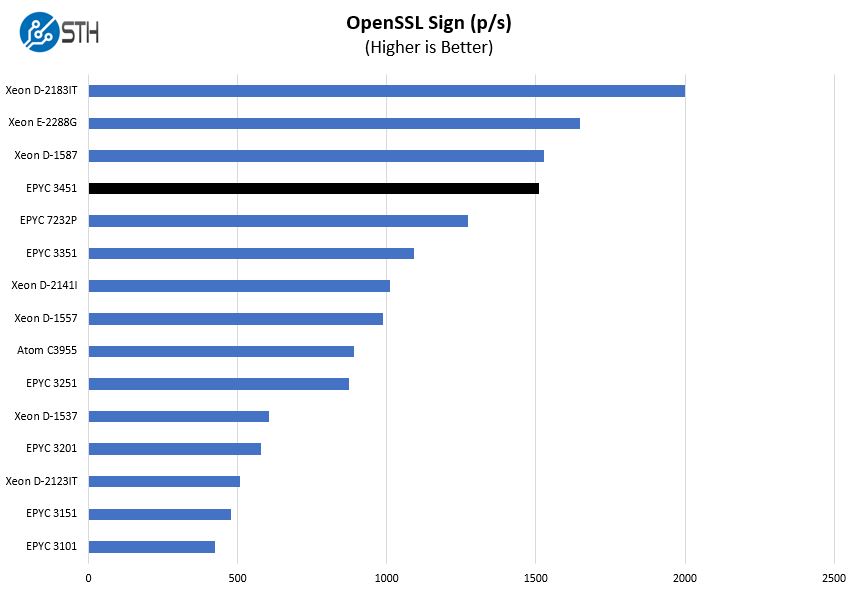

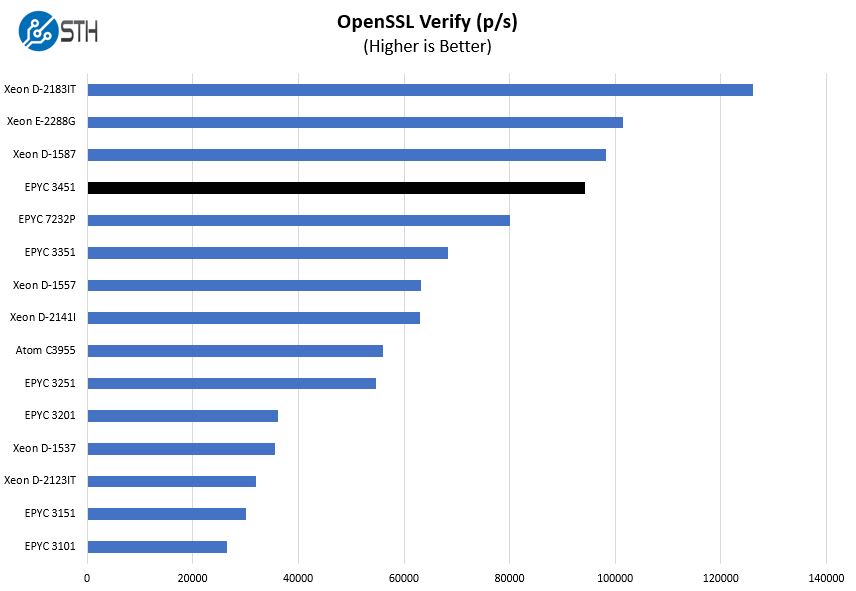

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important library in many server stacks. We first look at our sign tests:

Here are the verify results:

OpenSSL is foundational web technology. Here the Xeon D parts pull slightly ahead. We also wanted to point to the Intel Xeon E-2288G. The Xeon E-2288G has around the same TDP. It has much lower memory and PCIe bandwidth and only eight cores. Those eight cores have a maximum turbo boost clock of 5GHz which means these are high-speed cores. In many tests, it is actually very competitive.

That 8-core Xeon E-2288G is a $539 list price part, and one needs to purchase a PCH as well. AMD is delivering the EPYC 3451 with more cores, cache, and memory, more PCIe, a more compact BGA SoC footprint, and more 10GbE, all at a price that is only slightly higher. Xeon D parts in this performance range, meanwhile, sit on a completely different pricing scale.

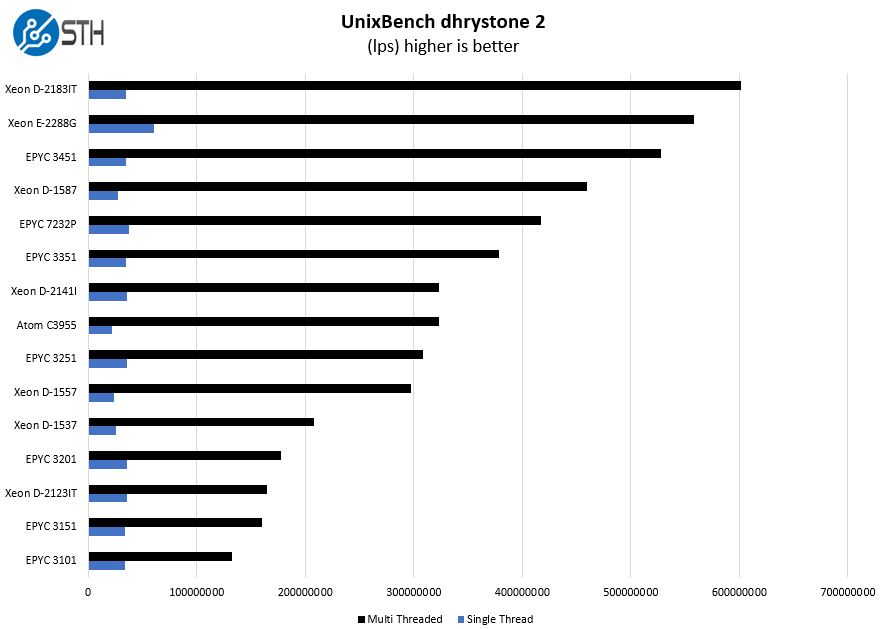

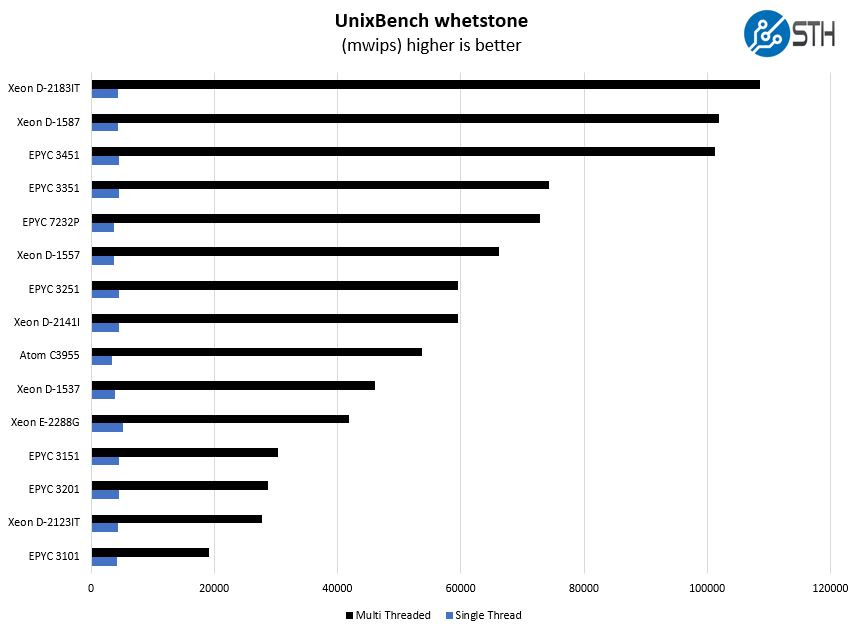

UnixBench Dhrystone 2 and Whetstone Benchmarks

Some of the longest-running tests at STH are the venerable UnixBench 5.1.3 Dhrystone 2 and Whetstone results. They are certainly aging; however, we constantly get requests for them, and many angry notes when we leave them out. UnixBench is widely used so we are including it in this data set. Here are the Dhrystone 2 results:

Here are the Whetstone results:

Here again, we see the Intel Xeon D-2183IT performs better than the EPYC 3451, but it is not by a large margin. If you are currently using the Xeon D 16-core parts, the EPYC 3451 provides a very similar level of raw performance.

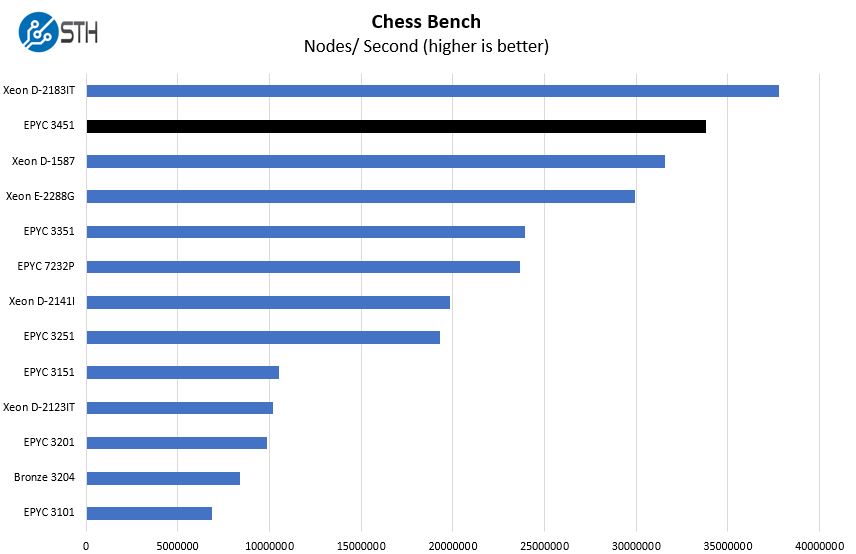

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and now use the results in our mainstream reviews:

On our chess benchmark, we see about the same stack ranking that we have seen throughout this article.

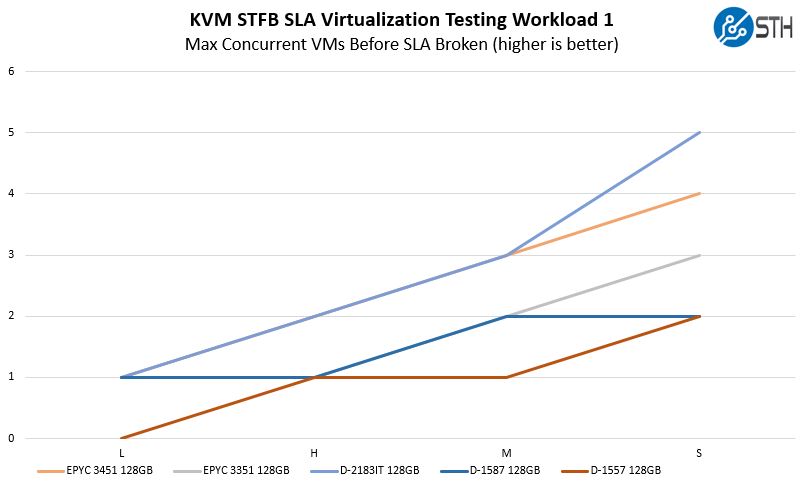

STH STFB KVM Virtualization Testing

One of the other workloads we wanted to share is from one of our DemoEval customers. We have permission to publish the results, but the application itself being tested is closed source. This is a KVM virtualization-based workload where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker.
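The customer application is closed source, so the following is only a sketch of the general shape of such a test, not the actual STFB harness. It assumes a hypothetical `meets_sla(count)` check that reports whether the work completes within the target SLA with `count` VMs online, and it assumes that adding VMs never improves SLA compliance.

```python
from typing import Callable


def max_vms_under_sla(meets_sla: Callable[[int], bool], vm_limit: int) -> int:
    """Return the largest VM count in 1..vm_limit that still meets the SLA.

    Stops at the first failing count, on the assumption that adding more
    VMs never makes a failing configuration pass. Returns 0 if even a
    single VM misses the SLA.
    """
    best = 0
    for count in range(1, vm_limit + 1):
        if not meets_sla(count):
            break
        best = count
    return best


# Stand-in check for illustration only: pretend the SLA holds up to 3 VMs.
def fake_meets_sla(count: int) -> bool:
    return count <= 3


print(max_vms_under_sla(fake_meets_sla, vm_limit=8))
```

In a real run the stand-in check would be replaced by launching the VMs and timing the work, repeated once per VM size.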

These systems really are designed to run smaller, less CPU heavy VMs. Still, we can see a fairly decent mix here for the AMD EPYC 3451 that aligns well to what we saw from lower-clock 16 core mainstream socketed processors. This is our first time showing these results on embedded parts. Part of the reason is that the chips are starting to get core counts high enough to really handle these STFB SLA Workload 1 VMs that are more CPU bound.

What was uniquely interesting here is that the lower-clocked Xeon D-1587 only handled two of the “small” VMs while the EPYC 3351 actually handled three. We also saw the Xeon D-2183IT and EPYC 3451 match each other for three of the four VM sizes, but the D-2183IT was able to push one extra VM on that small size test.

Next, we are going to discuss market positioning and impact before getting to our final words.

The market analysis vs Intel is great. I’d skip the benchmarks and go there after the first page.

Love to see these. Like you said, there needs to be more platforms. And someone to actually take advantage of all the expansion capability. I’d like to see Supermicro do a v.2 that does that…

I have wanted to see these AMD EPYC 3000 SoCs in products like Supermicro Flex-ATX boards since I first read about them in 2018.

We mainly use the X10SDV-4C-7TP4F (4-core Xeon-D) for edge storage servers, because these boards are small enough for short-depth 19″ cases that fit into short-depth network gear cabinets.

Furthermore, I like them for the low power consumption.

I know there are cheap NAS boxes that also fit into small racks; however, these Xeon-D Flex-ATX boards are far more flexible, reliable, and powerful (think of clients working while a RAID set needs to be scrubbed, rebuilt, backed up, and so on). It is possible to connect up to 16 SAS drives without requiring a SAS expander.

We also run mail servers, Samba as a directory controller, and FreeRADIUS for VPN and WiFi access management on them.

Why not mini-ITX? Because it is not possible to have a SAS controller and an SFP+ NIC on a mini-ITX board (only one PCIe slot!).

The Supermicro X10SDV-4C-7TP4F has both onboard and provides 2 PCIe slots for further expandability!

I have already seen mini-ITX boards with 10GBase-T interfaces. While this would allow a 10Gbit/s network connection together with a SAS HBA on a mini-ITX board, it is usually not very practical because a lot of 1G edge switches have 2 or 4 SFP+ ports. However, I don’t know a single 24-port 1G switch with a 10GBase-T interface. Furthermore, I think SFP+ is in general more flexible and cheaper than 10GBase-T. Therefore, I would really like to see mainboards for AMD EPYC 3000 series CPUs that expose the included 10Gbit/s networking as SFP+ ports.

I often think about using Supermicro Flex-ATX boards with higher core counts for small 3-node Proxmox HA clusters. However, the boards with 16-core Xeon-D CPUs are too expensive compared to single-socket EPYC 7000 servers. Furthermore, they are hardly available. The EPYC 3451 could be a game changer for this. We could probably fit 3 servers with EPYC 3451 CPUs in one single short-depth rack. With at least 4 SFP+ interfaces we wouldn’t even need a 10Gbit switch for the inter-cluster network connection, because we could simply connect every node to all the other ones directly!

I really hope I can read a review about a small AMD EPYC 3000 mainboard with SFP+ ports on STH soon.

Thanks to Patrick for his great work!

Why use such an old kernel (4.4) vs a newer (maybe have optimizations) kernel (5.x)?

Rob, that is just the software code that we are compiling. We are more likely to add another project such as chromium or similar as a second compile task. We are getting to the point where the kernel compile is so fast that we need a bigger project. That is even though we compile more in our benchmark than many do.

EPYC3451D4I2-2T

https://www.asrockrack.com/general/productdetail.asp?Model=EPYC3451D4I2-2T#Specifications

Nearly one year since this post and we’re unable to buy any platform rocking a 3451 :(

Getting close to 2 years, and I still cannot get a system with the 3451, or even other 3000 series parts, with all the 10Gbps PHYs and all the PCIe lanes exposed in a small form factor (for example, two or three OCP 3.0 connectors would be a nice option for a 25Gbps NIC). All the solutions I found expose only a very small subset of this SoC's functionality, and are super overpriced for what they provide. There is technically ASRock Rack, but I am still not able to order it, and it still uses an Intel NIC for networking, which is silly. I asked ASRock Rack and Supermicro, and they said they are “working” on it, but still nothing in sight.