The day 1 Keynote at Hot Chips 2025 is titled Predictions for the Next Phase of AI by Noam Shazeer. Aside from authoring the transformer paper, Attention Is All You Need. He has also brought a multitude of things to the world, such as improving spelling checks in Google Search. He later built a chatbot that Google refused to release, causing him to leave Google. He started Character.AI, which Google did a $2.7B deal for sometime after Google realized it needed its own chatbot. Noam is now a co-lead at Google Gemini.

Google Predictions for the Next Phase of AI

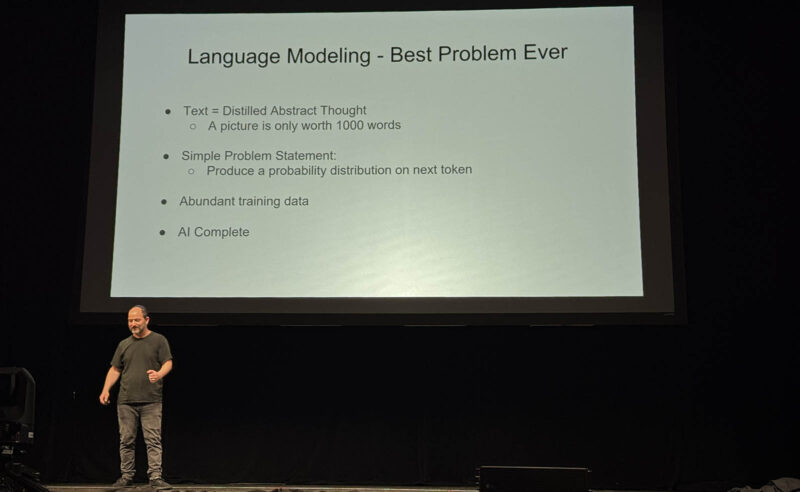

We are not going to go into the entire keynote, instead, we will let folks watch that if they missed it. I wanted to highlight a few key takeaways. First, Noam thinks that language modeling is the best problem ever. To the point, there is a slide and a portion of the talk dedicated to this concept. Over a week later, and it was awesome to see how much passion he had for the topic.

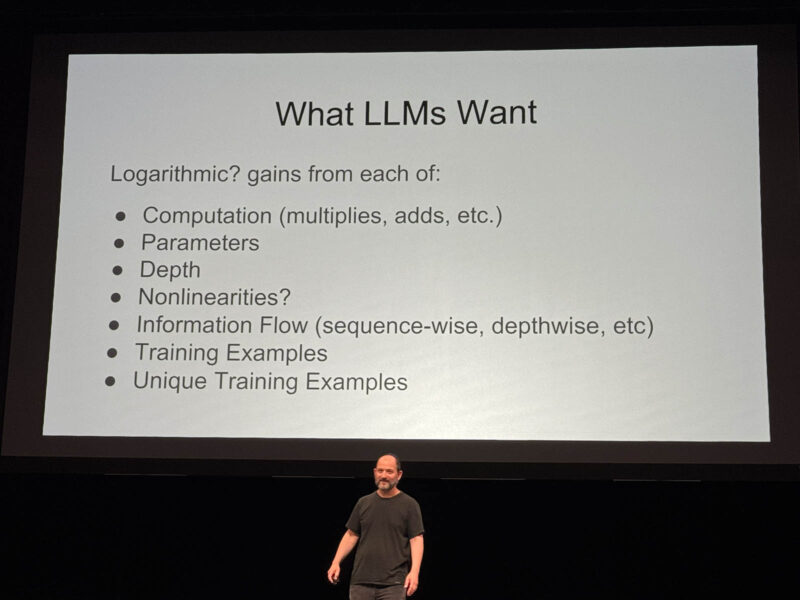

He then went into “What LLMs want.” That almost made me think about how we say “more cores more better.” He was focused more on how more FLOPS are more better. That is important because as we get more parameters, more depth, nonlinearities and information flow help the scale of the LLMs, but can require more compute. More good training data also helps create better LLMs.

He also went into how in 2015 it was a big deal to train on 32 GPUs, but a decade later it can be hundreds of thousands of GPUs. Another neat tidbit is that he said in 2018, Google built the compute pods for AI. This was a big deal because before this, often Google engineers were running workloads on a thousand CPUs but then they would slow down and do other things like crawl the web. Having the big machine dedicated to deep learning/ AI workloads allowed for an enormous amount of performance.

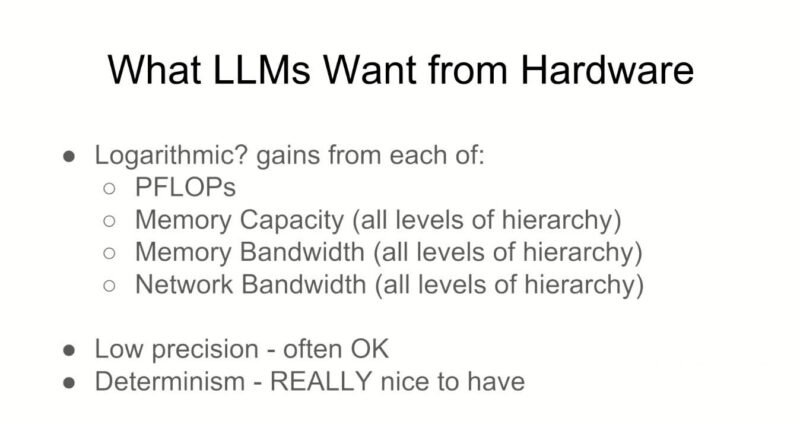

That brings us to the big slide at a chip conference, What LLMs Want from Hardware.

The funny takeaway from this slide, and perhaps I was in the minority receiving it like this, is that more compute, memory capacity, memory, bandwidth, and more network bandwidth were all important for driving future generations of AI models. On the “all levels of heirarchy” that is not just DDR5 capacity and bandwidth, but also HBM, and on chip SRAM. Lower precision to help drive better use of those four was also seen as a good thing in many cases. Determinism helps make programming better.

Final Words

Even after re-watching the keynote some time later, I still think that the message boiled down to bigger faster everything in clusters would lead to gains in LLMs. That is probably good for Google and a number of other companies. If you were wondering about the “Thank you for the supercomputers!” slide, that was because growing the accelerators, networks, and scale of clusters has directly led to the current wave of AI becoming more useful than what was trained on those 32 GPUs clusters back in the day.

Frankly, my big takeaway is that a luminary in the space thinks more compute will lead to better AI models. It was also really neat to see someone so passionate about language modeling.

I’m surprised that light-tailed network latency wasn’t mentioned as an AI training requirement. Maybe there are asynchronous workarounds, but then determinacy is also mentioned as “REALLY nice to have.”

I see two emerging points of view: One is that the current approach to AI will scale to super intelligence with improvements in hardware; the other is that the current approach has reached a point of diminishing returns and will not progress beyond hallucinating chatbots no matter how much hardware improves.

Personally I have no idea what will happen next. For example, there is a possibility that even better models and training techniques will be discovered that avoid another AI winter.

I’d be curious to see a plot of # of parameters and LLM accuracy vs Cost over the last decade.

Because I suspect a lot of the More is Better folk would be surprised at the diminishing returns.

@Intone Searching for what you asked about returns some useful answers: “plot of # of parameters and LLM accuracy vs Cost over the last decade”.

In particular, see: “How Much Does It Cost to Train Frontier AI Models?”, Published Jun 03, 2024, Last updated Jan 13, 2025, Authors: Ben Cottier, Robi Rahman, Loredana Fattorini, Nestor Maslej, David Owen

The latest models are far better than early models, but use over a few trillion parameters, while much earlier models didn’t crack a billion. Cost is dropping extremely fast if you measure bang/buck but a much bigger bang is always sought, so the amount spent increases as more GPUs (and other hardware) is interconnected at higher speeds.

Some things aren’t as obvious as others, like understanding finer nuances, or reducing hallucinations; suggesting that it ought to have been correct in the first place misunderstands the answers the tiny models provide (interesting, and sometimes correct, but wanting for some point(s)).

The realm of diminishing returns is more confined to things like: DLSRs with the greatest diminishing returns, cellphones (with their slightly better screen, at twice the cost), laptops have the lowest spread of diminishing returns due to much greater competition.

If your not buying for multi-trillion parameters you’re looking at an uncertain future.