At HPE Discover 2025, we saw the new Intel E610 NICs for low-power 10GBASE-T and 2.5GbE in person for the first time. We also saw something that can only be described as wilder: Intel had a double-width OCP NIC 3.0 form factor card with its new Intel E830 controller and eight 25GbE ports.

This is the New Double Wide NIC with 8x 25GbE Ports Using the Intel E830 NIC

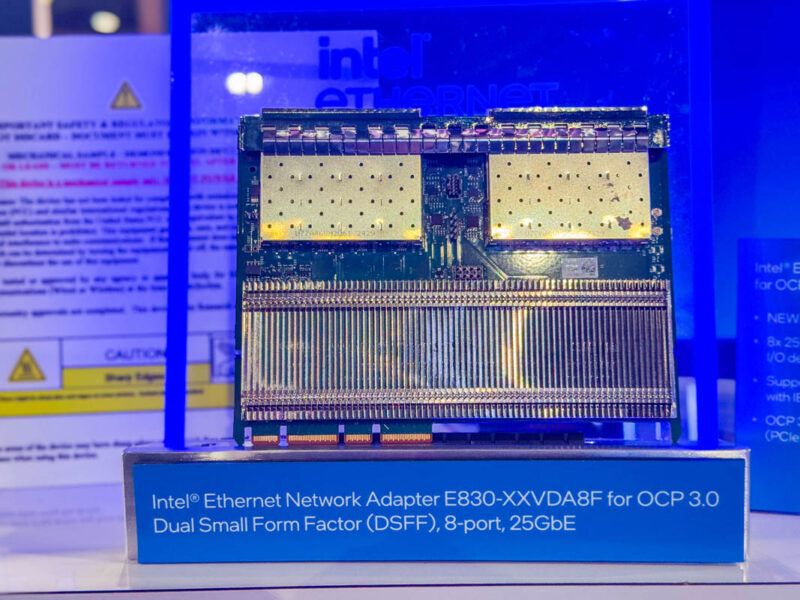

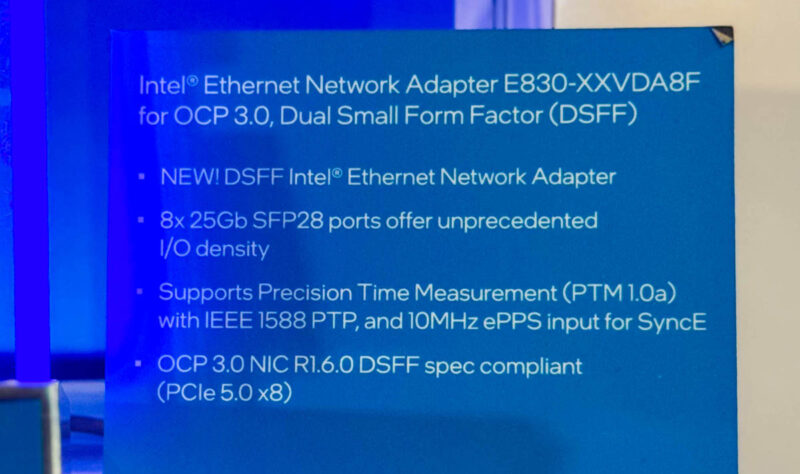

Feast your eyes on the aptly named Intel Ethernet Network Adapter E830-XXVDA8F for OCP 3.0 Dual Small Form Factor. You might think we made that up, but it is the name on the placard under this absolute unit of a card.

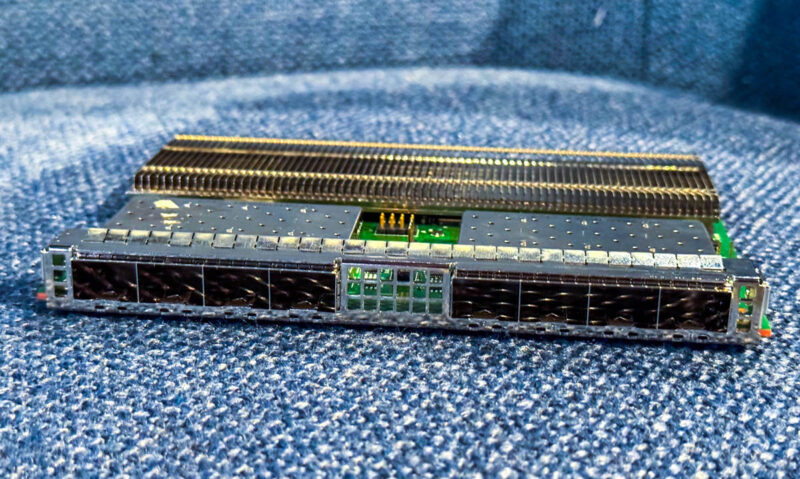

There are many things that are different about this card, but let us start with the neat part: there are eight SFP28 cages for 8x 25GbE. Another way to look at that is 200Gbps of network ports. The Intel E830 is the update to the Intel E810. While the E810 let you have two QSFP28 ports with up to 100Gbps of total bandwidth between them, the E830 allows for 200Gbps. For those newer to networking, the SFP28 cages below are arranged in sets of four. For 100GbE ports we would instead use QSFP28, where the Q means quad: each QSFP28 port carries four 25Gbps lanes. That gives you some idea of how the 200Gbps is presented from the controller to the ports, as eight 25Gbps lanes, the same lane count as two QSFP28 ports.
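For readers who want the lane arithmetic spelled out, here is a minimal Python sketch. It assumes the simple model described above, one 25Gbps lane per SFP28 cage and four 25Gbps lanes per QSFP28 cage, and it ignores encoding and protocol overhead. The constant and function names are illustrative only, not anything from Intel.

```python
# Illustrative lane arithmetic for an 8x SFP28 card (names are hypothetical).
SFP28_LANE_GBPS = 25  # assumption: one SFP28 cage = one 25Gbps lane
QSFP28_LANES = 4      # the "Q" in QSFP28: four 25Gbps lanes per cage


def aggregate_gbps(ports: int, gbps_per_port: int) -> int:
    """Total front-panel bandwidth for a set of identical ports."""
    return ports * gbps_per_port


sfp28_ports = 8
total = aggregate_gbps(sfp28_ports, SFP28_LANE_GBPS)  # 8 x 25 = 200 Gbps
qsfp28_port_gbps = QSFP28_LANES * SFP28_LANE_GBPS     # 4 x 25 = 100 Gbps per QSFP28
qsfp28_equivalent = sfp28_ports // QSFP28_LANES       # 8 lanes = 2 QSFP28 cages' worth

print(f"{sfp28_ports} x {SFP28_LANE_GBPS}GbE = {total} Gbps")
print(f"Same lane count as {qsfp28_equivalent} x QSFP28 ({qsfp28_port_gbps}GbE each)")
```

In other words, the eight SFP28 cages expose the same eight 25Gbps lanes that two 100GbE QSFP28 ports would, just one lane per cage.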

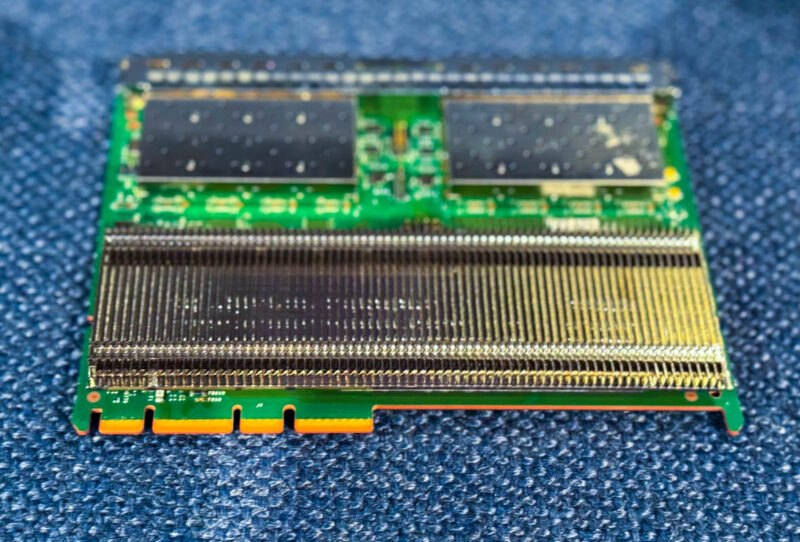

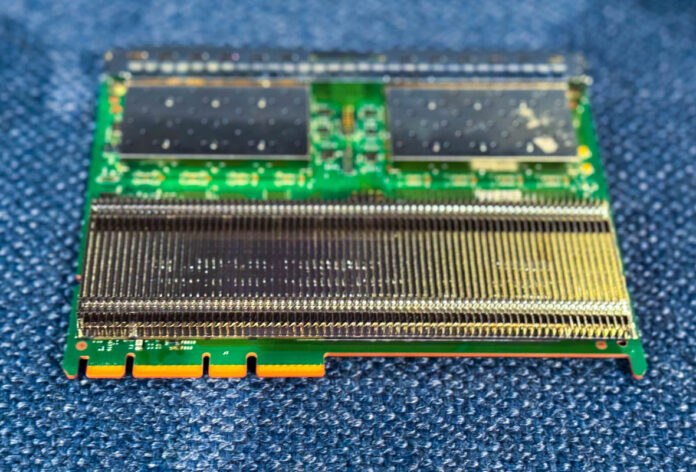

Of course, the above does not look like a standard PCIe card, or even a typical OCP NIC 3.0 SFF NIC of the kind used in just about every server, including HPE’s ProLiant Gen12 systems. At the same time, we can see the familiar connector here. What is going on is that this is a DSFF, or Dual Small Form Factor, card, but it only has one set of connectors, since 200Gbps of network bandwidth can be driven from a PCIe Gen4 x16 or a PCIe Gen5 x8 link. You do not need two sets of OCP NIC 3.0 x16 connectors on the card.
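A quick way to sanity-check the single-connector point is to compare raw PCIe link bandwidth against the 200Gbps of front-panel ports. The sketch below is a rough estimate, assuming the standard per-lane signaling rates (8, 16, and 32 GT/s for PCIe Gen3, Gen4, and Gen5) and 128b/130b line encoding. It ignores TLP and DLLP packet overhead, so real-world throughput will be somewhat lower. The helper name is hypothetical.

```python
# Rough per-direction PCIe bandwidth check (ignores packet/protocol overhead).
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}  # per-lane signaling rate in GT/s
ENCODING = 128 / 130                     # 128b/130b line code used by Gen3-Gen5

NIC_GBPS = 200  # 8 x 25GbE front-panel bandwidth


def pcie_link_gbps(gen: int, lanes: int) -> float:
    """Raw per-direction link bandwidth in Gbps after line encoding only."""
    return GT_PER_S[gen] * ENCODING * lanes


for gen, lanes in [(4, 16), (5, 8), (4, 8), (3, 16)]:
    bw = pcie_link_gbps(gen, lanes)
    verdict = "enough" if bw >= NIC_GBPS else "not enough"
    print(f"Gen{gen} x{lanes}: {bw:.1f} Gbps -> {verdict} for {NIC_GBPS} Gbps")
```

Under these assumptions, Gen4 x16 and Gen5 x8 both come out at roughly 252Gbps, above the 200Gbps of ports, while Gen4 x8 or Gen3 x16 would only reach about 126Gbps. That lines up with a single x16 OCP NIC 3.0 connector being sufficient for this card.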

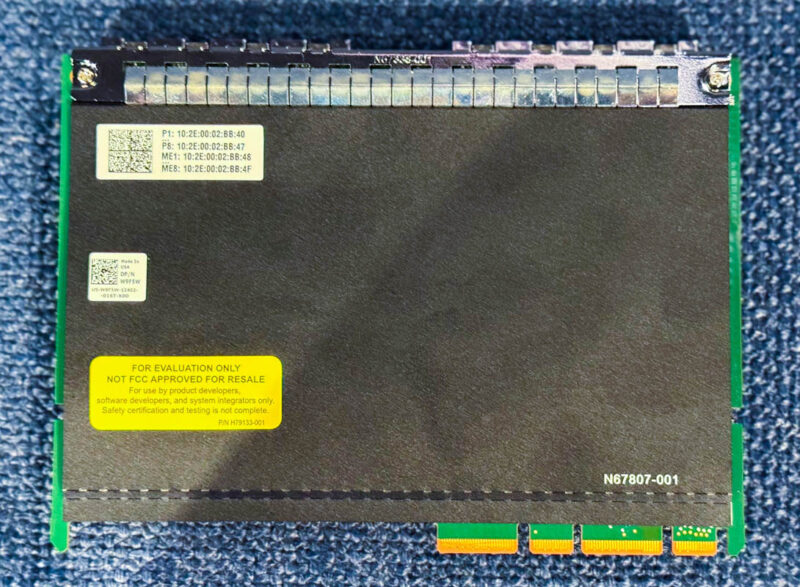

Here is a look at the bottom of the card to give you some other sense of the sheer size.

We were told that the idea here is that you can use two of these in a 1U edge server, say one based on the Intel Xeon 6 SoC, and add 16x 25GbE ports to an edge machine while keeping it compact.

Final Words

I have to say that the full name, Intel Ethernet Network Adapter E830-XXVDA8F for OCP 3.0, is a bit long. Still, this is a neat concept. Many edge CPE boxes and other devices have a long history of using custom form factors for NIC and port expansion. If the networking industry standardizes on this, it could be a welcome development. At some point, having dozens of vendors make their own expansion cards is probably the wrong answer, and the Dual Small Form Factor might at least be a better one. Still, this thing just looked neat, and I thought folks on STH might want to see it, since it is the first Intel E830 NIC I have held and the first DSFF NIC as well.

Why are you fapping over this?

Yes, the E830 is cool, but this is simply the 200GbE model with both 100GbE ports split 4 ways each.

You could do the same with a classic PCIe 2x 100GbE NIC when it comes out.

BTW, did you know that the 25GbE model is already on shelves in the EU?

https://geizhals.eu/intel-e830-xxvda2-25g-lan-adapter-e830xxvda2-a3486674.html

How is this review fapping over it? You just seem upset over nothing.

I’ve seen standard 2P adapters. I’ve seen OCP NICs. I haven’t seen DSFF. I’ve also never seen E830 in a configuration we’ve been quoted.

I don’t get comments like that, but I dig this kind of content.

Time to break out the Dremel!

Just checked geizhals and found they list E610-XT2 adapters sold in bundles of 5 starting at 247 EUR.

That’s under 50 EUR per NIC. And why has it not been reviewed yet? It seems like the most important NIC for any home user atm.

Well, scratch that, it seems like an error in the geizhals listing. Still unclear, but they seem to be 250 apiece.

Genuine question – what’s the use of 8 or 16 ports on a server? I would’ve assumed two 100 Gb ports would be more useful, as those could be plugged into one or two 100 Gb switches and from there broken out into as many ports as needed, potentially without the need for a double-wide card.

If the idea is that the server connects to 16 other devices and does routing, then it still seems a bit strange to me, as a failed server means finding a replacement with that many ports, which is not that common. It seems simpler to replace a server with another when they only have one or two Ethernet ports on the back, or to replace a 16/24/48-port switch if that’s what broke, rather than trying to combine the functionality of both devices into one and making maintenance and replacement more difficult.

Does anyone here make use of servers with 4 or more active Ethernet connections in a data centre environment (not counting link bonding)? If so I’d be interested to learn what your use cases are.

@Malvineous

In my home lab and at work, I routinely configure my servers with four active Ethernet connections, and could justify six or more if I needed to. In my VM host, two are dedicated to VM traffic (one port per switch) and the other two to SAN traffic. For SAN I don’t use link bonding; instead I use the pair for MPIO to my SAN chassis through redundant switches. If I were saturating my 10Gb SAN links, I’d move to a quad Ethernet card for my SAN, running two pairs of ports per switch, bringing my total to six.

In the datacenter, I’ve seen cases where management traffic to VMware hosts runs over a dedicated port for security. In some cases, tenants require a measure of isolation that a VLAN on a virtual switch can’t offer, so again, more ports.