After posting the initial memory bandwidth figures in our Supermicro Hyper-Speed tests, we decided to take a deeper look. This time we made a few optimizations, including turning off all unnecessary hardware devices on the Hyper-Speed platform. We also switched from our own compiled version of Stream, where we saved results, to the Phoronix Test Suite pts/stream benchmark. One optimization we made there was to NOT save results using the in-suite feature, as those results were consistently lower. The test goal was simple: see what impact memory speed has in a dual Intel Xeon E5-2600 system. We also swapped memory to our standard Kingston DIMMs to test compatibility and to see if they would withstand higher clock speeds.

Test Configuration

Supermicro sent the following test configuration for our testing (although we used the opportunity to validate the Kingston DIMMs). This represents one common configuration for a compute node. Another popular configuration uses dual Intel Xeon E5-2643 CPUs (4C/8T) for applications that need high clock speeds and lower core counts due to per-core license costs.

- Processors: Intel Xeon E5-2687W @ stock and 3.224GHz base clocks

- Chassis: Supermicro SYS-6027AX-TRF with Supermicro X9DAX-iF Motherboard

- Memory: 64GB using 8x 8GB Kingston 1600MHz Registered ECC DDR3 DIMMs

- SSD: Samsung 830 256GB

- Operating System: Ubuntu Server 12.10

One other configuration change we made was to remove the Mellanox InfiniBand cards. The goal was to keep the configuration lean for memory benchmarking.

Memory Clock Speed Versus Bandwidth

For this test we ran the 8x 8GB Kingston 1600MHz Registered ECC DDR3 DIMMs that Kingston provided through a range of speeds. We used 1066MHz, representing memory from the Intel Xeon 5500 days. We then used 1333MHz, which was typical of the Intel Xeon 5600 generation. With the Intel Xeon E5 series and the AMD Opteron 6200 series, 1600MHz memory became standard. Finally, we used the capability of the Supermicro Hyper-Speed server to clock the 1600MHz DDR3 at a higher speed. We first forced 1866MHz, which is the next-generation registered memory speed, and then raised the BCLK, taking the memory to 1978MHz and the dual Intel Xeon E5-2687W CPUs to a 6% clock speed increase. Let’s take a look at what this all means in terms of Stream benchmark performance.
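The overclock arithmetic above can be checked with a short sketch. It assumes the memory multiplier stays at the 1866MHz setting while the base clock rises by 6%; the function names are illustrative only and are not taken from any Supermicro tool.

```python
# Sketch of the overclock arithmetic, using the figures quoted in the article.
# Assumption: the memory multiplier is held at the 1866MHz setting and only
# the base clock (BCLK) is raised by 6%.

def overclocked_speed(memory_mhz: float, bclk_increase: float) -> float:
    """Scale a memory speed by a fractional base-clock increase."""
    return memory_mhz * (1.0 + bclk_increase)


def overclock_percent(new_mhz: float, rated_mhz: float) -> float:
    """Percentage by which new_mhz exceeds the rated speed."""
    return (new_mhz / rated_mhz - 1.0) * 100.0


hyper_speed_mhz = overclocked_speed(1866, 0.06)
print(round(hyper_speed_mhz))                               # 1978
print(round(overclock_percent(hyper_speed_mhz, 1600), 1))   # 23.6
```

The second figure is the overclock relative to the 1600MHz rating of the Kingston DIMMs.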

[toggle_box title=”About the Stream Benchmark” width=”Width of toggle box”]Stream is a benchmark that needs virtually no introduction. It is considered by many to be the de facto memory performance benchmark. Authored by John D. McCalpin, Ph.D., it can be found at http://www.cs.virginia.edu/stream/ and is very easy to use.

For this test we used the pts/stream version. One of the nice features is that it runs the benchmark 10 times and provides a standard deviation. One can find a comparison to other user runs here.[/toggle_box]
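For readers unfamiliar with how Stream arrives at its figures, here is a minimal sketch of the byte counting behind the four tests. It assumes 8-byte double-precision elements and the convention of 1 MB = 10^6 bytes; the function name and the example run are hypothetical, and this is not the pts/stream code itself.

```python
# Bytes counted per array element for each Stream kernel (8-byte doubles).
# Copy and Scale touch two arrays; Add and Triad touch three.
BYTES_PER_ELEMENT = {
    "Copy": 2 * 8,   # c[i] = a[i]
    "Scale": 2 * 8,  # b[i] = q * c[i]
    "Add": 3 * 8,    # c[i] = a[i] + b[i]
    "Triad": 3 * 8,  # a[i] = b[i] + q * c[i]
}


def stream_mb_per_s(kernel: str, n_elements: int, seconds: float) -> float:
    """Rate in MB/s (1 MB = 10**6 bytes) for one timed pass of a kernel."""
    return BYTES_PER_ELEMENT[kernel] * n_elements / seconds / 1e6


# Hypothetical run: 10 million elements, Triad pass finishing in 3 ms.
print(stream_mb_per_s("Triad", 10_000_000, 0.003))
```

Because the rate is simply counted bytes divided by elapsed time, anything that shortens the measured time (caching, timer resolution) inflates the reported bandwidth.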

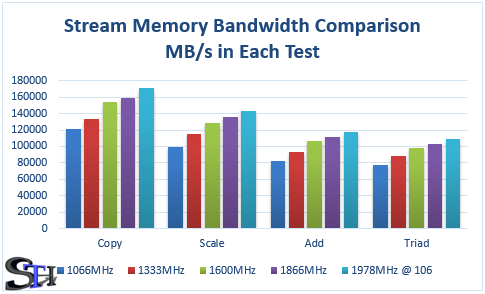

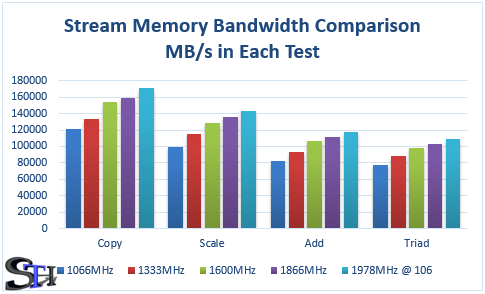

For this test, results are organized in two ways. First, we look at the four main Stream tests (Copy, Scale, Add and Triad). We then look at each memory speed configuration’s scores in the different tests.

From this one can see that bandwidth generally scales fairly well with memory speed. Currently, 1866MHz (purple) memory is not widely available, and the Intel Xeon E5 range does not yet support that speed. So when comparing what is available today (early 2013), one would compare the 1600MHz figures to the Supermicro Hyper-Speed (1978MHz @ 106) figures.

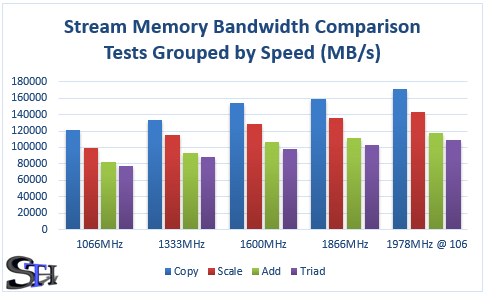

Since some people prefer this view, here are the four tests by memory speed. Again, one can see the Kingston memory added to the Supermicro Hyper-Speed server dominate the tests.

Conclusion

We can take away three insights from these figures. First, if one has a memory-intensive application, the Supermicro Hyper-Speed platform shows significant gains over today's 1600MHz DDR3 as well as the next-generation 1866MHz registered ECC DIMMs. Second, the Kingston KVR16R11D4/8HC modules held up to a 23.6% overclock without a stutter and even passed memtest at these speeds. The HC modules are part of Kingston’s premier line and have a locked BOM, so they are all tested and configured the same. These results show that they are a very strong set of memory modules. Third, recycling older and slower memory from older-generation servers can have a noticeable impact on memory bandwidth.

Charts pop out. Like the look. Good set of results. Why even test DDR3-1066?

As a “server guy” I generally ignore the topic of overclocking. This, however, is a kind of overclocking that I could really use. All of a sudden those new Supermicro server boards sound very exciting.

@dba

Yeah, use specialized hardware and waste more electricity to boost memory speed from 120G/s to 140G/s and gain 0.0000001 sec per process.

Very exciting indeed. I wonder why didn’t anyone think of that before.

@M I doubt it is more than 6-8% more power so 20% improvement compared to total consumption is good.

@Meat agreed. Like the colors. GJ on this new re-design.

@Ravi

By “20% improvement” you mean 0.0000001 sec improvement in real world applications.

Patrick,

I am a bit confused about the Stream numbers listed in the charts.

The values are above the physical memory speed the system is capable of. Or the numbers do not represent MB/s on the memory bus transferred.

Clarification would be helpful.

thanks and regards,

Andy

Andy – Stream benchmark numbers at each memory speed. Actual results not theoretical numbers.

Patrick,

I know they are at each memory speed, but the numbers (at each memory speed) are above the theoretical max – which is normally unlikely to achieve :-)

The theoretical limits (which actual numbers normally should not exceed) on a dual Sandy Bridge system:

DDR3-1066: 66.625 GB/sec

DDR3-1333: 83.3125 GB/sec

DDR3-1600: 100 GB/sec

DDR3-1866: 116.625 GB/sec

I run my dual E5-2687W system at 1600 MHz RAM speed and stream reports 80 GB/sec memory bandwidth, which is approx 80% of the theoretical bandwidth the memory system of the Sandy Bridge is capable of – seems reasonable.

Your numbers (at 1600 MHz) would represent approx 150% of the peak bandwidth the memory subsystem is capable of at this speed. The other numbers at respective mem speed are each one above their respective limits (1066,1333,1866,..).

I’d rather suggest you check the numbers with a different utility for verification. They are simply not possible if they should represent main memory bandwidth.

Unless they are skewed by some cache influence or timer dependencies, or the reported metric represents something other than the main memory bandwidth measured in MB/sec, etc. :-)

rgds,

Andy

NB:

Looking at your numbers, your measured numbers seem to be a factor of 2 too high – roughly speaking.
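For reference, the theoretical limits discussed in the comment above can be approximated from the channel count and transfer rate. The sketch below assumes four DDR3 channels per socket, two sockets, and 8 bytes per transfer; the function name is illustrative. It gives 102.4 GB/s at DDR3-1600, slightly above the comment's figures, which appear to be scaled from a rounded 100 GB/s.

```python
# Rough theoretical peak bandwidth for a dual-socket, quad-channel DDR3 system.
# Assumptions: 4 memory channels per socket, 2 sockets, 64-bit (8-byte) channels.

def peak_bandwidth_gb_s(mega_transfers_per_s: float,
                        channels_per_socket: int = 4,
                        sockets: int = 2,
                        bytes_per_transfer: int = 8) -> float:
    """Peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_s = (mega_transfers_per_s * 1e6 * bytes_per_transfer
                   * channels_per_socket * sockets)
    return bytes_per_s / 1e9


for speed in (1066, 1333, 1600, 1866):
    print(f"DDR3-{speed}: {peak_bandwidth_gb_s(speed):.1f} GB/s")

# Efficiency of the 80 GB/s Stream result reported in the comment at DDR3-1600.
efficiency = 80 / peak_bandwidth_gb_s(1600)
print(f"{efficiency:.0%}")
```

A measured Stream figure well above these peaks would point to caching or timing effects rather than main memory bandwidth.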